How AI gateways enable scalable agent orchestration

See how AI gateways enable scalable agent orchestration by providing centralized routing, governance, and reliability.

AI agents are moving from research demos into production systems, where they don’t just answer questions but collaborate, delegate tasks, and use external tools. This shift introduces a new challenge: orchestration. As soon as you move from a single agent to a network of agents, coordination becomes complex. Requests need to be routed across models, usage must be monitored, and safeguards have to stay consistent across the system.

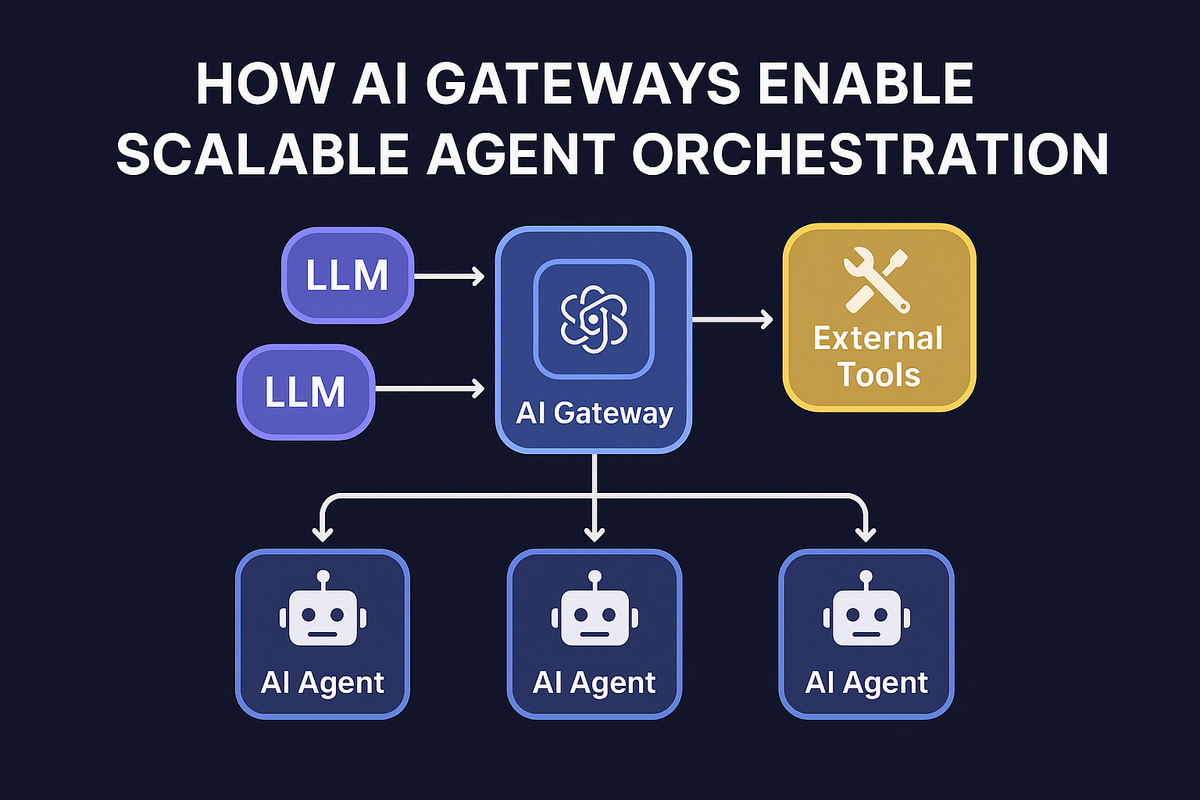

This is where the AI gateway comes in. Acting as the control layer between agents and the models or tools they rely on, an AI gateway provides the scalability, governance, and reliability that multi-agent systems need to work in practice.

AI agents and their orchestration needs

AI agents are designed to operate beyond single responses. They can plan, break tasks into smaller steps, call tools, and even collaborate with other agents. This makes them powerful building blocks for research assistants, customer support copilots, or enterprise workflows that span multiple functions.

But scaling agents introduces orchestration challenges:

- Communication: agents often need to exchange context and outputs in real time, which creates routing and dependency complexity.

- Tool invocation: agents rely on external APIs, databases, and models. Managing credentials and usage across these tools becomes harder as the number of agents grows.

- Data consistency: when multiple agents are working together, ensuring that they operate on accurate, authorized data is critical.

- Governance: enterprises need a way to enforce guardrails, budgets, and access control across agent-driven interactions.

These challenges compound quickly. What works for a single agent prototype breaks down when organizations need dozens of agents working together.

Where AI gateways fit in

An AI gateway acts as the control plane between agents and the models, APIs, and tools they rely on. Instead of each agent independently managing requests, credentials, and guardrails, the gateway centralizes these responsibilities.

With an AI gateway in place:

- Routing is unified – requests from agents can be directed across multiple providers or models without changing the agent’s logic.

- Governance is enforced consistently – rate limits, RBAC, budgets, and data guardrails apply across every agent interaction.

- Credentials are secured – sensitive keys for models or tools never sit inside individual agents; they’re centrally managed at the gateway.

- Observability becomes holistic – every agent request, response, and tool call is logged and traceable, making it easier to debug and audit complex workflows.

This centralization turns what would otherwise be a fragile, distributed setup into a manageable system, giving enterprises confidence that agents can scale without losing control.
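To make these responsibilities concrete, here is a minimal Python sketch of a gateway's request path. Everything in it is hypothetical: the `Gateway` class, the `"provider/model"` naming convention, and the `echo_provider` stand-in are illustrative assumptions, not Portkey's or any other product's API. It shows routing, credential isolation, and logging: agents name a model rather than a provider endpoint, API keys stay inside the gateway, and every call is recorded.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical adapter shape: (api_key, model, prompt) -> completion text.
# A real adapter would make an HTTP call; this sketch only assumes the signature.
ProviderFn = Callable[[str, str, str], str]

@dataclass
class LogEntry:
    agent_id: str
    provider: str
    model: str
    prompt: str
    response: str

@dataclass
class Gateway:
    credentials: Dict[str, str]       # provider name -> API key, held only by the gateway
    providers: Dict[str, ProviderFn]  # provider name -> adapter
    log: List[LogEntry] = field(default_factory=list)

    def complete(self, agent_id: str, model: str, prompt: str) -> str:
        # Agents address models as "provider/model" (an assumption of this sketch);
        # the gateway resolves the route, so agent code never changes.
        provider, sep, model_name = model.partition("/")
        if not sep or provider not in self.providers:
            raise ValueError(f"no route for model {model!r}")
        key = self.credentials[provider]  # the agent never sees this value
        response = self.providers[provider](key, model_name, prompt)
        # Every call is recorded so multi-agent workflows can be traced and audited.
        self.log.append(LogEntry(agent_id, provider, model_name, prompt, response))
        return response

# Usage with a stand-in provider (no network access):
def echo_provider(api_key: str, model: str, prompt: str) -> str:
    return f"{model}: {prompt}"

gateway = Gateway(credentials={"acme": "secret-key"}, providers={"acme": echo_provider})
print(gateway.complete("summarizer", "acme/small-model", "Summarize the findings"))
# small-model: Summarize the findings
```

Because the route lives in the gateway, pointing an agent at a different provider is a configuration change rather than a code change in the agent.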

Key benefits of AI gateways for agent orchestration

The biggest advantage an AI gateway brings to agent systems is scalability. Instead of agents sending unmanaged, parallel requests that can overload models or tools, the gateway manages concurrency through batching, queuing, and intelligent routing. This keeps large multi-agent workloads from overwhelming any single model, tool, or rate limit.
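Queuing can be illustrated with a concurrency cap. The sketch below, a hypothetical `ConcurrencyLimiter` built on Python's `asyncio.Semaphore`, lets ten simulated agent requests arrive at once but allows at most three to reach the upstream model concurrently; the rest wait their turn. It does not show batching or routing, and `fake_model_call` stands in for a real provider call.

```python
import asyncio
from typing import Awaitable, Callable, List, Tuple, TypeVar

T = TypeVar("T")

class ConcurrencyLimiter:
    """Caps in-flight requests to one upstream; extra callers wait in line."""

    def __init__(self, max_in_flight: int) -> None:
        self._sem = asyncio.Semaphore(max_in_flight)
        self.in_flight = 0
        self.peak = 0  # highest concurrency observed, useful for monitoring

    async def run(self, call: Callable[[str], Awaitable[T]], prompt: str) -> T:
        async with self._sem:  # suspends here once the cap is reached
            self.in_flight += 1
            self.peak = max(self.peak, self.in_flight)
            try:
                return await call(prompt)
            finally:
                self.in_flight -= 1

async def fake_model_call(prompt: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for network latency
    return prompt.upper()

async def main() -> Tuple[List[str], int]:
    limiter = ConcurrencyLimiter(max_in_flight=3)
    prompts = [f"task {i}" for i in range(10)]  # e.g. ten agents firing at once
    results = await asyncio.gather(*(limiter.run(fake_model_call, p) for p in prompts))
    return list(results), limiter.peak

results, peak = asyncio.run(main())
print(peak)  # 3
```

A gateway would typically keep one such limit per provider or model, sized to that upstream's published rate limits.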

Governance is another critical layer. With a gateway in place, organizations can enforce role-based access, set budgets, and apply compliance guardrails consistently across all agent activity. Sensitive credentials no longer live inside individual agents, reducing the risk of leaks or misuse.
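A minimal sketch of how role-based access and budgets might be checked before a request is forwarded. The `Policy` class, the role names, the model names, and the dollar amounts are all hypothetical; a production gateway would persist spend and reconcile estimated cost against actual cost.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

class PolicyError(Exception):
    """Raised when a request violates access or budget policy."""

@dataclass
class Policy:
    allowed_models: Dict[str, Set[str]]  # role -> models that role may call
    budgets_usd: Dict[str, float]        # agent -> spending cap in USD
    spent_usd: Dict[str, float] = field(default_factory=dict)

    def authorize(self, agent: str, role: str, model: str, est_cost_usd: float) -> None:
        # RBAC: deny by default if the role has no grant for this model
        if model not in self.allowed_models.get(role, set()):
            raise PolicyError(f"role {role!r} may not call {model!r}")
        # Budget: reject a request whose estimated cost would exceed the cap
        spent = self.spent_usd.get(agent, 0.0)
        if spent + est_cost_usd > self.budgets_usd.get(agent, 0.0):
            raise PolicyError(f"agent {agent!r} would exceed its budget")

    def record(self, agent: str, actual_cost_usd: float) -> None:
        # Called after the upstream responds, with the real cost
        self.spent_usd[agent] = self.spent_usd.get(agent, 0.0) + actual_cost_usd

policy = Policy(
    allowed_models={"researcher": {"small-model"}, "reviewer": {"small-model", "large-model"}},
    budgets_usd={"search-agent": 1.00},
)
policy.authorize("search-agent", "researcher", "small-model", est_cost_usd=0.40)
policy.record("search-agent", 0.40)
```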

Interoperability is equally important as agents begin to span multiple providers. A gateway provides a single, unified interface to providers such as OpenAI, Anthropic, Amazon Bedrock, and Google Vertex AI. This lets agents call the most suitable model for each task without bespoke integrations for every provider.
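Much of the work behind a unified interface is translating one request shape into each provider's format and normalizing the response. The adapters below use simplified payloads loosely modeled on OpenAI's Chat Completions API and Anthropic's Messages API; they omit most optional fields, the model names are placeholders, and `max_tokens=1024` is an arbitrary choice. Treat them as a sketch, not a complete client.

```python
from typing import Any, Callable, Dict, Tuple

Payload = Dict[str, Any]

def to_openai_chat(model: str, system: str, user: str) -> Payload:
    # Chat Completions style: the system prompt is a message with role "system"
    return {"model": model, "messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]}

def to_anthropic_messages(model: str, system: str, user: str) -> Payload:
    # Messages API style: "system" is a top-level field and "max_tokens" is required
    return {"model": model, "max_tokens": 1024, "system": system,
            "messages": [{"role": "user", "content": user}]}

def text_from_openai(resp: Payload) -> str:
    return resp["choices"][0]["message"]["content"]

def text_from_anthropic(resp: Payload) -> str:
    # Join text blocks; non-text blocks (e.g. tool calls) are ignored in this sketch
    return "".join(b["text"] for b in resp["content"] if b.get("type") == "text")

# provider -> (request builder, response parser)
ADAPTERS: Dict[str, Tuple[Callable[[str, str, str], Payload], Callable[[Payload], str]]] = {
    "openai": (to_openai_chat, text_from_openai),
    "anthropic": (to_anthropic_messages, text_from_anthropic),
}

def build_request(provider: str, model: str, system: str, user: str) -> Payload:
    return ADAPTERS[provider][0](model, system, user)

def extract_text(provider: str, resp: Payload) -> str:
    return ADAPTERS[provider][1](resp)

req = build_request("anthropic", "some-model", "Be concise.", "Summarize the paper.")
print(req["system"])  # Be concise.
print(extract_text("openai", {"choices": [{"message": {"content": "Done."}}]}))  # Done.
```

Agents only ever call `build_request` and `extract_text` with a provider name, so adding a provider means adding one adapter pair at the gateway.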

Reliability is built in at the gateway level. Retries, failovers, caching, and rate-limit handling help agent workflows keep running even when an individual model or API fails or slows down. The result is a more resilient orchestration layer with performance that agents can depend on.
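Retries and failover can be sketched as exponential backoff within one provider, followed by falling through to the next provider in a priority list. The function, the `UpstreamError` type, and the delay values are assumptions for illustration; caching and rate-limit handling are not shown. The `sleep` parameter is injectable so the example runs instantly and records the delays it would have waited.

```python
import time
from typing import Callable, Optional, Sequence, Tuple

class UpstreamError(Exception):
    """Transient provider failure, e.g. a timeout, HTTP 429, or 5xx response."""

def call_with_failover(
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    prompt: str,
    retries_per_provider: int = 2,
    base_delay_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, str]:
    """Try providers in priority order, retrying transient errors with exponential backoff."""
    last_error: Optional[Exception] = None
    for name, call in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return name, call(prompt)
            except UpstreamError as err:
                last_error = err
                if attempt < retries_per_provider:
                    sleep(base_delay_s * 2 ** attempt)  # 0.1 s, then 0.2 s, ...
        # Retries exhausted for this provider: fail over to the next one
    raise RuntimeError("all providers failed") from last_error

def flaky(prompt: str) -> str:
    raise UpstreamError("503 Service Unavailable")

def healthy(prompt: str) -> str:
    return f"ok: {prompt}"

delays: list = []
print(call_with_failover([("primary", flaky), ("backup", healthy)], "hi", sleep=delays.append))
# ('backup', 'ok: hi')
```

In a real gateway, only transient errors would typically be retried; errors such as malformed requests would be returned to the agent immediately.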

Finally, AI gateways make cost management practical at scale. Because every request is logged with fine-grained metadata, enterprises can attribute usage and spending to specific agents, teams, or workflows. This visibility helps forecast budgets, avoid overruns, and choose providers based on cost-performance tradeoffs.
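Cost attribution follows directly from logging: if each request record carries the calling agent, team, model, and token counts, spend can be aggregated along any of those dimensions. The `UsageRecord` fields and the per-token prices below are placeholders for illustration, not real provider pricing.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

@dataclass
class UsageRecord:
    agent: str
    team: str
    model: str
    input_tokens: int
    output_tokens: int

# Placeholder prices in USD per 1M tokens as (input, output); not real provider pricing.
PRICES = {"small-model": (0.50, 1.50), "large-model": (5.00, 15.00)}

def cost_usd(r: UsageRecord) -> float:
    in_price, out_price = PRICES[r.model]
    return (r.input_tokens * in_price + r.output_tokens * out_price) / 1_000_000

def spend_by(records: Iterable[UsageRecord], key: str) -> Dict[str, float]:
    """Sum cost per value of `key` ("agent", "team", or "model")."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        totals[getattr(r, key)] += cost_usd(r)
    return dict(totals)

records = [
    UsageRecord("search-agent", "research", "small-model", 200_000, 50_000),
    UsageRecord("report-agent", "research", "large-model", 100_000, 20_000),
]
print(spend_by(records, "agent"))  # {'search-agent': 0.175, 'report-agent': 0.8}
```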

Together, these benefits turn what would otherwise be fragile prototypes into robust, production-ready agent systems.

Real-world orchestration scenarios

Consider a research assistant built on top of multiple agents. One agent might be responsible for searching academic papers, another for summarizing findings, and a third for generating a structured report. Without a gateway, each agent would need to manage its own API keys, route requests across different models, and handle errors independently. With an AI gateway in place, all of this coordination happens through a single control layer, making it easier to scale the system and ensure consistency across every agent’s output.

In an enterprise setting, the orchestration challenge becomes even more complex. Imagine a compliance workflow where one agent reviews contracts, another checks them against financial policies, and a third ensures regulatory language is included. These agents may rely on different models and tools, and their activity needs to be monitored for both cost and security. By routing their interactions through an AI gateway, the enterprise gains visibility into every request, applies guardrails uniformly, and ensures sensitive data is handled according to policy, all while allowing the agents to collaborate seamlessly.
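One guardrail a gateway could apply in a workflow like this is redacting sensitive fields before a contract ever reaches a model. The sketch below uses two illustrative regular expressions (email addresses and the US Social Security number format) and returns counts that could be written to an audit log. The rule set is an assumption for illustration; real PII detection needs much broader coverage and is not a compliance control on its own.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; real PII detection needs far broader coverage.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
]

def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches with placeholders and count what was removed, for the audit log."""
    counts: Dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts

print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
# ('Contact [EMAIL], SSN [SSN].', {'EMAIL': 1, 'SSN': 1})
```

Running this at the gateway means every agent in the workflow gets the same redaction policy without each one implementing it.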

These scenarios highlight why enterprises adopting multi-agent systems increasingly view the AI gateway as essential infrastructure. It reduces technical complexity and enforces the governance, security, and reliability required to make agent orchestration practical at scale.

Future of multi-agent systems

As agent ecosystems evolve, orchestration will only become more complex. New standards such as the Model Context Protocol (MCP), along with tool registries and agent hubs, are expanding what agents can do, but they also introduce more moving parts that need to be governed.

The AI gateway sits at the center of this landscape as the shared control layer, ensuring that no matter how many agents, tools, or models are in play, enterprises can scale responsibly with full visibility and control.

Portkey’s AI Gateway already powers this kind of orchestration. If you’d like to see it in action for your own workflows, you can book a demo today.