AI Gateway vs API Gateway: What's the Difference?

Learn the critical differences between AI gateways and API gateways. Discover how each serves unique purposes in managing traditional and AI-driven workloads, and when to use one—or both—for your infrastructure.

API gateways have been around for years - they're the workhorses that manage web traffic, route API calls, and handle basic security. They work great for typical web applications and microservices.

But AI systems bring different challenges. You need to manage heavy inference loads, route traffic to GPU clusters, and deal with AI-specific compliance rules. That's where AI gateways come in - they're built specifically for machine learning workloads.

The rise of AI applications has pushed us beyond what traditional API gateways can handle effectively. While both types help manage traffic flow, AI gateways are designed for LLM apps with features you won't find in standard API gateways.

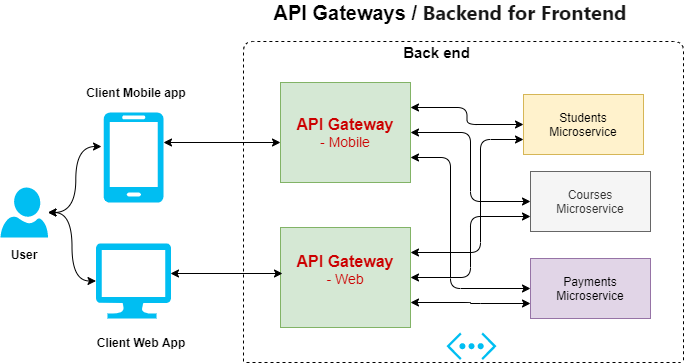

What's an API Gateway?

API gateways are a foundational piece of modern application infrastructure. They handle the critical tasks of routing traffic between clients and services, managing authentication, and controlling request volumes through rate limiting.

For teams building microservices, API gateways provide a central control point for managing service-to-service communication and enforcing API policies. They're optimized for traditional web traffic patterns - REST APIs, HTTP requests, and the standard flow of data between applications.
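Here is a minimal Python sketch of what an API gateway does with each request: check the API key, apply a per-client rate limit, and pick a backend by path prefix. Everything in it is hypothetical. The `ApiGateway` and `TokenBucket` classes, the route table, and the key are illustrative, and a real gateway would proxy the HTTP request rather than just return the upstream URL. The token bucket is one common rate-limiting technique. Fixed or sliding windows would work too.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, holds at most `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start full so clients get an initial burst
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ApiGateway:
    """Hypothetical minimal gateway: API-key auth, per-key rate limiting, prefix routing."""

    def __init__(self, routes: dict[str, str], api_keys: set[str],
                 rate: float, burst: float) -> None:
        self.routes = routes      # path prefix -> backend base URL (illustrative values)
        self.api_keys = api_keys
        self.rate, self.burst = rate, burst
        self.buckets: dict[str, TokenBucket] = {}

    def handle(self, path: str, api_key: str) -> tuple[int, str]:
        """Return (status, upstream URL the request would be forwarded to, or an error)."""
        # 1. Authentication: reject unknown keys before doing any other work.
        if api_key not in self.api_keys:
            return 401, "unauthorized"
        # 2. Rate limiting: one bucket per client key, created on first use.
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.rate, self.burst)
        if not self.buckets[api_key].allow():
            return 429, "too many requests"
        # 3. Routing: the longest matching path prefix wins.
        matches = [prefix for prefix in self.routes if path.startswith(prefix)]
        if not matches:
            return 404, "no route"
        return 200, self.routes[max(matches, key=len)] + path


gateway = ApiGateway({"/users": "http://users-svc", "/orders": "http://orders-svc"},
                     api_keys={"key-123"}, rate=1.0, burst=2)
print(gateway.handle("/users/42", "key-123"))  # (200, 'http://users-svc/users/42')
print(gateway.handle("/users/42", "bad-key"))  # (401, 'unauthorized')
```

Nothing in this sketch inspects what the request actually says. It only looks at the path and the key.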

But as we'll see, AI workloads bring new patterns that push beyond these traditional boundaries.

What's an AI Gateway?

An AI gateway is a specialized layer that sits between your application and LLM providers like OpenAI, Anthropic, or Cohere. Every LLM API call passes through it, and it adds critical functionality beyond basic routing.

A typical AI gateway monitors model responses and performance metrics, enforces safety rules on outputs, manages your prompts centrally, and caches responses to cut down on API costs.

Think of it as traffic control built specifically for LLM applications - making sure your calls to language models are fast, reliable, and don't break the bank.

The key is that it understands LLM traffic: knowing when to cache similar prompts, how to handle rate limits across providers, and what to watch for in model outputs. This makes it different from traditional API gateways that mainly focus on HTTP routing and auth.
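Here's a rough Python sketch of two of those LLM-aware behaviors: caching repeated prompts and falling back to another provider when one is rate limited. The `AiGateway` class, `RateLimitError`, and the provider functions are hypothetical stand-ins, not any real SDK. One simplifying assumption: "similar" here only means identical after lowercasing and collapsing whitespace. Some AI gateways go further and match prompts semantically using embeddings.

```python
import hashlib
from typing import Callable


class RateLimitError(Exception):
    """Raised by a (hypothetical) provider client when it is rate limited."""


Provider = Callable[[str], str]  # takes a prompt, returns completion text


class AiGateway:
    """Hypothetical sketch: normalized-prompt cache plus ordered provider fallback."""

    def __init__(self, providers: list[tuple[str, Provider]]) -> None:
        self.providers = providers  # tried in order until one succeeds
        self.cache: dict[str, str] = {}
        self.stats = {"hits": 0, "misses": 0}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode()).hexdigest()

    def complete(self, prompt: str) -> tuple[str, str]:
        """Return (source, text), where source is 'cache' or the provider name."""
        key = self._key(prompt)
        if key in self.cache:
            self.stats["hits"] += 1
            return "cache", self.cache[key]
        self.stats["misses"] += 1
        for name, call in self.providers:
            try:
                text = call(prompt)
            except RateLimitError:
                continue  # fall through to the next provider
            self.cache[key] = text
            return name, text
        raise RuntimeError("all providers are rate limited")


def provider_a(prompt: str) -> str:
    raise RateLimitError("429 from provider A")  # simulate a throttled provider


def provider_b(prompt: str) -> str:
    return f"answer to: {prompt.strip()}"


gw = AiGateway([("provider-a", provider_a), ("provider-b", provider_b)])
print(gw.complete("How do I reset my password?"))     # ('provider-b', 'answer to: How do I reset my password?')
print(gw.complete("  how do I RESET my password? "))  # ('cache', 'answer to: How do I reset my password?')
```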

AI Gateway vs API Gateway

Let's break down how AI and API gateways differ:

API gateways act as reverse proxies: they sit in front of your backend services and manage the traffic coming in from clients. They do the standard traffic management work of routing requests, checking auth, and controlling load. They're built for typical web apps where you're passing data between services.

AI gateways, in contrast, function more as forward proxies, sitting between your application and the AI services it calls, such as large language models (LLMs) or custom AI models. They monitor responses to catch issues, cache similar prompts to save costs, and keep outputs within safe bounds. They also understand rate limits across different LLM providers and how to handle prompt variations.

Security looks different, too. While API gateways focus on keeping endpoints secure, AI gateways also watch for sensitive data in prompts and ensure that model outputs follow your rules.
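As a rough illustration, an AI gateway might scrub obvious identifiers from prompts before they leave your network and screen responses against a policy list before returning them. This Python sketch assumes regex-based detection and a hypothetical blocked-terms list. The patterns are deliberately simplistic, and production PII detection usually needs much broader coverage (names, addresses, account numbers) than regexes can give.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical output policy: phrases the model must never send to customers.
BLOCKED_OUTPUT_TERMS = ["guaranteed refund", "legal advice"]


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace detected identifiers with placeholders; report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


def output_allowed(text: str) -> bool:
    """Case-insensitive check of a model response against the blocked-terms list."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_OUTPUT_TERMS)


clean, found = redact("Customer jane.doe@example.com, SSN 123-45-6789, wants a refund.")
print(clean)  # Customer [EMAIL], SSN [SSN], wants a refund.
print(found)  # ['EMAIL', 'SSN']
print(output_allowed("We can offer a guaranteed refund."))  # False
```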

Scaling is another key difference. API gateways help you handle more web requests. AI gateways help you handle more LLM calls efficiently - knowing when to cache, when to route to different providers, and how to keep costs under control as volume grows.

When do you use which? API gateways are your go-to for standard web applications. AI gateways make sense when you're building apps powered by language models - think chatbots, content generation, or anywhere you're making heavy use of LLM APIs.

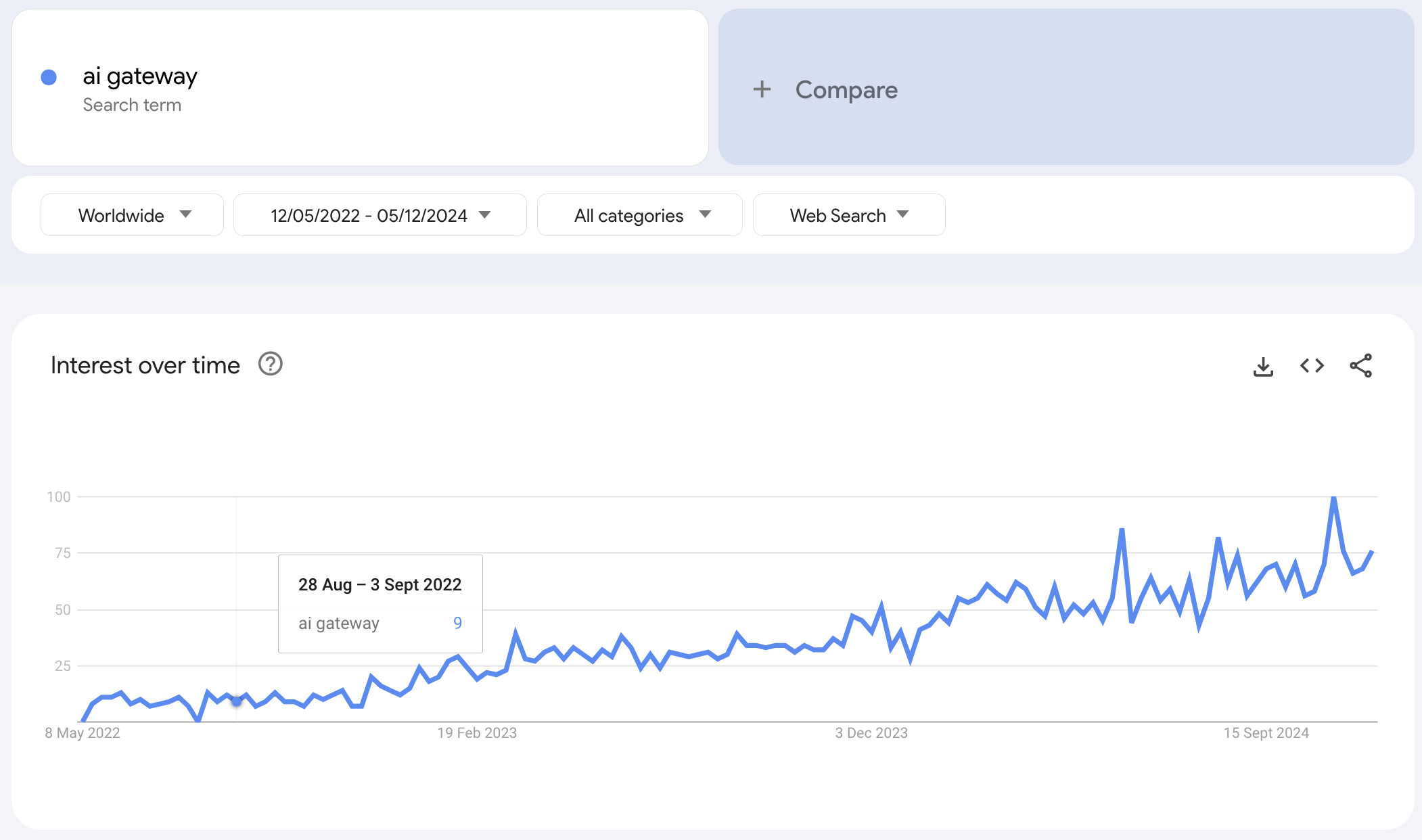

Why AI Gateways are taking off

Interest in AI gateways has grown quickly, and the reason is pretty simple: as more companies put LLMs into production, they keep hitting the same problems.

First, running LLMs in production is different from calling regular APIs. You need to watch what the models output, track their performance, and catch issues fast. When your customer support chatbot starts giving weird answers, you want to know right away.

Then there's compliance. If you're using LLMs with customer data, you need to prove you're handling it correctly. AI gateways log your prompts and responses, so you can show auditors exactly what's happening.
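One way a gateway could keep that audit trail is an append-only log with one JSON record per LLM call. In this hypothetical Python sketch, each record also stores the hash of the previous one, so editing or reordering any past entry makes verification fail. The `AuditLog` class, `verify` function, and field names are illustrative assumptions. A real deployment would also need to decide where logs live, how long they're kept, and whether prompts should be redacted before they're stored.

```python
import hashlib
import io
import json
import time
import uuid
from typing import TextIO

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


class AuditLog:
    """Hypothetical append-only audit log: one hash-chained JSON line per LLM call."""

    def __init__(self, sink: TextIO) -> None:
        self.sink = sink
        self.prev_hash = GENESIS

    def record(self, user: str, model: str, prompt: str, response: str) -> dict:
        entry = {
            "id": str(uuid.uuid4()),
            "ts": time.time(),
            "user": user,
            "model": model,
            "prompt": prompt,
            "response": response,
            "prev_hash": self.prev_hash,
        }
        # Hash the canonical JSON so any later edit to this entry breaks the chain.
        entry["hash"] = hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()
        self.prev_hash = entry["hash"]
        self.sink.write(json.dumps(entry) + "\n")
        return entry


def verify(lines: list[str]) -> bool:
    """Recompute every hash and check that each entry points at its predecessor."""
    prev = GENESIS
    for line in lines:
        entry = json.loads(line)
        claimed = entry.pop("hash")
        if entry["prev_hash"] != prev:
            return False
        if hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest() != claimed:
            return False
        prev = claimed
    return True


buffer = io.StringIO()  # stands in for a file or log store
log = AuditLog(buffer)
log.record("agent-7", "model-x", "Summarize ticket 123", "Customer wants a refund.")
log.record("agent-7", "model-x", "Draft a reply", "Thanks for reaching out.")
lines = buffer.getvalue().splitlines()
print(verify(lines))  # True
print(verify([lines[0].replace("refund", "discount"), lines[1]]))  # False
```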

Cost is a big one. LLM API calls aren't cheap, especially at scale. AI gateways help by caching common responses, so repeat questions don't trigger new calls, and by routing traffic across different providers.
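A back-of-the-envelope model shows why caching matters. The numbers below are hypothetical: 10,000 calls a day, 1,000 tokens per call, and a flat $0.01 per 1,000 tokens. Real pricing varies by provider and model and usually charges input and output tokens at different rates. The sketch also assumes a cache hit costs nothing.

```python
def monthly_cost(calls_per_day: int, tokens_per_call: int, price_per_1k_tokens: float,
                 cache_hit_rate: float = 0.0, days: int = 30) -> float:
    """Estimate monthly LLM spend in dollars; cache hits are assumed to be free."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    billable_calls = calls_per_day * days * (1 - cache_hit_rate)
    return billable_calls * tokens_per_call / 1000 * price_per_1k_tokens


# Hypothetical inputs, not real provider pricing.
baseline = monthly_cost(10_000, 1_000, 0.01)
cached = monthly_cost(10_000, 1_000, 0.01, cache_hit_rate=0.3)
print(f"${baseline:,.2f} -> ${cached:,.2f}")  # $3,000.00 -> $2,100.00
```

At these assumed numbers, every 10 points of cache hit rate saves about $300 a month, and the savings grow linearly with volume.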

The integration piece matters too. Most companies using LLMs also use vector databases, analytics tools, and other AI services. AI gateways connect these pieces so you don't have to build all the plumbing yourself.

Bottom line: as LLMs move from experiments to core business tools, teams need infrastructure built for language models. That's the gap AI gateways fill.

When should you use an AI Gateway?

Let's look at some real examples where AI gateways make sense:

Say you're building a customer support chatbot. You're making thousands of calls to GPT-4 daily, and you need to:

- Keep customer data secure

- Track when responses go off-script

- Cache common support answers

- Handle failover between different LLM providers

Or take a content generation tool. You've got multiple teams using different prompts, and you need to:

- See which prompts work best

- Control costs across departments

- Make sure outputs follow brand guidelines

- Keep an audit trail of what's generated
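For the "control costs across departments" requirement, a gateway can keep a running spend per team and refuse calls that would push a team over its limit. This Python sketch is hypothetical: the `DepartmentBudgets` class, team names, limits, and flat per-token price are made up, and it charges an estimated token count up front rather than reconciling against the provider's actual bill.

```python
from collections import defaultdict


class BudgetExceeded(Exception):
    """Raised when a call would push a team past its spending limit."""


class DepartmentBudgets:
    """Hypothetical per-team spend tracker a gateway could check before each call."""

    def __init__(self, monthly_limits_usd: dict[str, float]) -> None:
        self.limits = monthly_limits_usd
        self.spent: dict[str, float] = defaultdict(float)

    def charge(self, team: str, tokens: int, price_per_1k: float) -> float:
        """Record the cost of a call, or raise if it would exceed the team's limit."""
        cost = tokens / 1000 * price_per_1k
        limit = self.limits.get(team, 0.0)  # unknown teams get no budget
        if self.spent[team] + cost > limit:
            raise BudgetExceeded(f"{team} would exceed its ${limit:.2f} monthly budget")
        self.spent[team] += cost
        return cost


budgets = DepartmentBudgets({"marketing": 50.0, "support": 200.0})
budgets.charge("marketing", 2_000_000, price_per_1k=0.02)  # $40.00, within budget
try:
    budgets.charge("marketing", 1_000_000, price_per_1k=0.02)  # would bring total to $60.00
except BudgetExceeded as err:
    print(err)  # marketing would exceed its $50.00 monthly budget
```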

Here's a trickier one: legal document analysis. You're processing confidential contracts with LLMs, so you need to:

- Catch any sensitive data before it hits the LLM

- Route different document types to different models

- Keep detailed logs for compliance

- Cache similar queries to cut costs
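Routing by document type can be as simple as a lookup table with a fallback. In this Python sketch, the document types, model names, and context limits are all hypothetical placeholders. The only real logic is escalating to a long-context model when a document doesn't fit the model its type maps to, and refusing when it fits nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str          # hypothetical model identifier
    context_limit: int  # max input tokens this model accepts (illustrative numbers)


ROUTES = {
    "nda": Route("small-fast-model", 4_000),
    "msa": Route("large-reasoning-model", 32_000),
    "litigation": Route("large-reasoning-model", 32_000),
}
DEFAULT_ROUTE = Route("general-model", 8_000)
LONG_CONTEXT_ROUTE = Route("long-context-model", 128_000)


def route_document(doc_type: str, token_count: int) -> Route:
    """Pick a model by document type, escalating when the document won't fit."""
    route = ROUTES.get(doc_type.lower(), DEFAULT_ROUTE)
    if token_count <= route.context_limit:
        return route
    if token_count <= LONG_CONTEXT_ROUTE.context_limit:
        return LONG_CONTEXT_ROUTE
    raise ValueError(f"{token_count} tokens exceeds every configured model; split the document")


print(route_document("NDA", 1_500).model)    # small-fast-model
print(route_document("nda", 5_000).model)    # long-context-model
print(route_document("lease", 3_000).model)  # general-model
```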

The pattern here? When you need to handle the scale, security, and costs of LLM apps - that's when an AI gateway starts making sense.

Making the Choice

Here's a practical framework for deciding if you need an AI gateway. Ask yourself these questions:

Scale and Cost

Are you making over 10,000 LLM calls daily? Are API costs becoming significant? If yes, an AI gateway's caching and routing features can quickly pay for themselves.

Security and Compliance

Handling sensitive data or need audit trails? AI gateways give you the logging and monitoring that compliance teams and security reviews will ask for.

Complexity of Usage

Running multiple models? Using different providers? Need prompt management across teams? AI gateways shine when your LLM usage gets complex.

Development Stage

- Early prototype? Stick with direct API calls

- Production-ready app? Consider an API gateway

- Scaling LLM product? Time for an AI gateway

The simple version: if you're just experimenting or building something small, you probably don't need an AI gateway yet. But if you're running LLMs at scale in production, dealing with compliance, or managing costs across teams, an AI gateway will make your life easier.

Most teams start with direct API calls, move to an API gateway as they stabilize, and add an AI gateway when their LLM usage demands it. Let your actual problems drive the decision.

Final Thoughts

API and AI gateways solve different problems. API gateways handle web traffic. AI gateways handle LLM traffic. The choice depends on what you're building.

Here's what it comes down to: if LLMs are core to your product, and you're running them at scale, you'll probably need an AI gateway. The costs, compliance needs, and operational headaches of managing LLMs in production make one worth it.

But don't rush to add one just because you're using LLMs. Start simple. Let your needs grow naturally. When you find yourself worrying about LLM costs, building monitoring tools, or writing prompt management systems - that's your sign. Time to look at an AI gateway.

If you're ready to try one out, check out Portkey. While most AI gateways extend existing API infrastructure, Portkey was built from scratch to avoid the pitfalls of legacy designs.

It's one of the leading open-source AI gateways - so you can start using it right away and even peek under the hood to see how it handles LLM traffic. It's a solid way to get started with an AI gateway without a big commitment.

Remember: good infrastructure choices solve real problems, not theoretical ones. Choose based on what your team actually needs today, not what you might need tomorrow.