AI Governance Checklist for 2025: control and safety via an AI gateway

A 2025-ready AI governance checklist for enterprises. Learn how to enforce safety, compliance, and control through your AI gateway layer.

2025 is shaping up to be the year of AI accountability. The EU AI Act has kicked in. The U.S. is actively pushing AI executive orders and safety standards. Global regulators are no longer asking “what models are you using?” — they’re asking how you’re governing them.

We’ve created a practical, battle-tested AI governance checklist for 2025, based on what we’ve seen work across enterprises. From input/output guardrails to audit logging, from model usage controls to observability — this checklist gives you a structured way to bring oversight to every part of the LLM lifecycle.

And yes, if you’re using Portkey, you’ll find notes on how to implement these governance controls directly using the Portkey AI Gateway.

Let’s get into it.

1. Define clear roles and responsibilities

AI governance starts with ownership. Without clear accountability, it’s easy for AI projects to become fragmented, with no one team responsible for safety, compliance, or performance.

At the top, product and business teams should take responsibility for defining use cases and understanding their associated risks. They are best positioned to evaluate whether a use case is high-stakes (e.g., generating insurance summaries) or low-risk (e.g, writing social media captions), and whether the output meets user expectations. These teams should also define what success looks like — speed, accuracy, tone, or compliance.

Platform and infrastructure teams should own the how. They ensure models are called reliably, usage is consistent across environments, and critical systems have failover and fallback logic. They are also responsible for integrating observability, rate limiting, and guardrails to reduce operational risk.

Meanwhile, compliance and security teams bring oversight, defining what’s acceptable in terms of prompt structure, data usage, output sensitivity, and model choice - often guided by the organization’s terms of service. Tools that enforce these policies help ensure that data usage aligns with legal requirements such as GDPR and CCPA, reducing the risk of privacy violations or regulatory breaches

Their job is to reduce exposure to privacy violations, bias, or regulatory breaches. Tools that enforce these policies help ensure that data usage aligns with legal requirements such as GDPR and CCPA, reducing the risk of privacy violations or regulatory breaches.

Without these layers working in tandem, governance becomes reactive, trying to fix hallucinations or leaks after they’ve reached production.

With Portkey, you can structure these responsibilities directly into your infrastructure:

- Assign meta tags (like team: claims, use_case: copilot) to every call or request using metadata.

- Set org-wide policies for logging, allowed models, and token limits.

- Use role-based access controls (RBAC) to ensure only the right teams can access logs, analytics, and modify models or plugins.

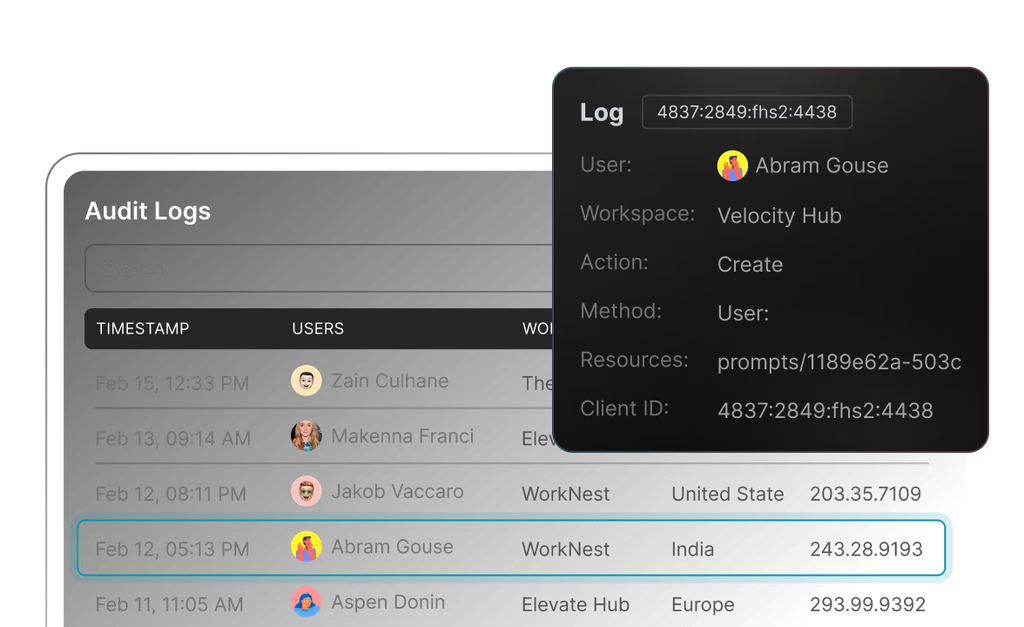

- Track who accessed what, when, using real-time audit logs, making it easy to trace issues to owners.

By establishing responsibility upfront, your teams can move faster, stay compliant, and avoid last-minute escalations when something breaks.

2. Inventory of all AI systems in use

You can’t govern what you can’t see.

As generative AI adoption accelerates across teams, one of the biggest governance challenges is shadow AI, i.e, models being used without visibility or review. Different teams might be calling OpenAI, Claude, or open-source models directly, often bypassing central controls or duplicating effort.

That’s why a core part of your 2025 AI governance checklist should be building and maintaining a centralized inventory of every AI system in use across environments, providers, and teams.

This inventory should go beyond just listing models. It should include:

- The model name and version (e.g., gpt-4o, mistral-7b-instruct-v0.3)

- The provider (OpenAI, Azure OpenAI, Google, Cohere, etc.)

- The use case and where it is deployed

- Associated risk level (e.g., based on business impact, compliance exposure)

- Any custom prompts, system instructions, or plugins used

Most importantly, this inventory needs to be live and should automatically update as teams ship new features, swap models, or experiment with new providers.

How Portkey helps:

Portkey acts as a central gateway for all your LLM traffic, giving you a complete model catalog of every model being used across your organization:

- See all active models, including base models, fine-tunes, and hosted open-source variants.

- Set which teams can have access to each LLM, and control spending and usage.

Instead of manually maintaining a spreadsheet or relying on team declarations, Portkey gives you the control and observability, ensuring nothing slips through the cracks.

This model inventory becomes the foundation for enforcing policies, monitoring behavior, and proving compliance when auditors or regulators come knocking.

3. Evaluate model risk and categorize use cases

As your organization scales AI adoption, one key governance principle is model-use case alignment. Simply put: not every model is right for every job.

Some models are fast and cheap, great for internal tooling. Others offer higher reasoning capabilities, better suited for high-risk decision-making. The goal of governance here is to ensure you’re assigning the right model to the right use case and applying appropriate controls based on risk.

Start by classifying use cases by risk:

- Low-risk: Internal tooling, content drafting, summarization of non-sensitive data

- Medium-risk: Customer-facing answers, operational workflows (e.g., claims routing), analytics summaries

- High-risk: Health, financial, or legal information generation; automated decision-making; regulated outputs

Then match the right model class:

- Use open-source or smaller models for low-risk, latency-sensitive tasks

- Use provider-backed or high-accuracy models (like Claude, GPT-4, Gemini) for user-facing or business-critical flows

- For high-risk use cases, enforce human-in-the-loop, audit logging, and fallback logic regardless of model type

How Portkey helps:

Portkey gives you the infrastructure to govern model assignments and enforce guardrails.

- Use specific models based on teams or use cases.

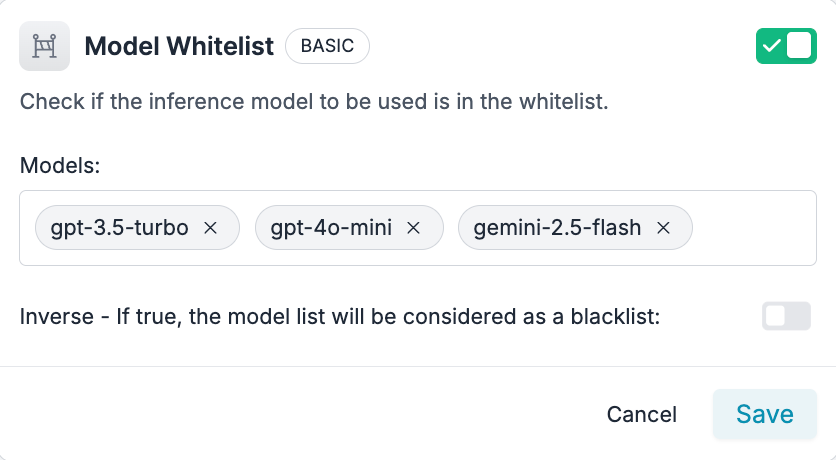

- Add a model whitelist guardrail, ensuring only approved models are used for some specific flows.

- Apply org-wide guardrails while configuring stricter thresholds for high-risk categories.

- Swap models without code changes when requirements evolve, using Portkey’s AI Gateway.

This decoupling of model and use case, with control over risk and safety baked into the routing, is essential to maintaining speed and compliance at scale.

4. Implement guardrails on inputs and outputs

Guardrails are the frontline defense in AI governance; they help ensure models don’t go off-script, generate unsafe responses, or leak sensitive information. In 2025, with stricter regulatory focus and broader AI adoption, guardrails are not optional.

But not all guardrails serve the same purpose. Effective governance means choosing the right guardrails for the right risks. Let’s break it down:

Security-focused guardrails

- Prompt validation: Use regex or token validators to block malformed or malicious inputs (e.g., prompt injection).

- JWT token verification: Enforce authentication before a prompt is processed.

- Model whitelisting: Restrict which models can be used per route — especially critical in high-risk, regulated workflows.

Use these on: Internal copilots, finance/legal assistants, or anything connected to private infrastructure.

Compliance and safety guardrails

- PII and PHI detection: Ensure models don’t ingest or generate sensitive user data without checks.

- Output moderation: Use LLM-based classifiers to catch toxic, biased, or unsafe outputs before they reach the user.

- “Ends with” or structured output validators: Ensure model responses follow a specific format (especially important in retrieval or decision support tools).

Use these on: Healthcare, insurance, and legal use cases, or anything where regulatory exposure exists.

Quality and consistency guardrails

- Character, sentence, or word count checks: Prevent overly verbose or vague outputs.

- Lowercase or malformed input detection: Catch accidental or improperly formatted prompts.

- Webhook-based dynamic validation: Integrate business logic checks (e.g., “is this product ID valid?” or “is this intent supported?”)

Use these on: Customer support bots, summarization systems, or any system that requires clarity and reliability.

Portkey lets you attach AI guardrails directly to each call, no code changes required. You can:

- Use regex matchers, webhook validators, and JWT authenticators to secure inputs.

- Add output moderation using your own LLMs or Portkey’s built-in unsafe content filter.

- Configure guardrails globally or custom, depending on risk level, team, or provider.

- View guardrail hits in real-time and analyze trends to refine policies over time.

By enforcing these guardrails at the gateway level, you ensure that every model call, regardless of provider or interface, is governed consistently and safely.

5. Monitor for drift, performance, and misuse

Models can drift not just in accuracy, but in tone, safety, and speed. Prompts can evolve in unintended ways. And usage can spike unpredictably, leading to cost overruns or abuse. Governance in 2025 requires continuous observability for compliance, optimization, and early incident detection.

Model behavior and drift

- Track how model outputs change over time, especially for use cases like summarization, recommendations, or decision support.

- Set up feedback loops to flag degrading quality.

Performance and latency

- Monitor response time across models, requests, and providers.

- Flag slowdowns that could affect user experience, or SLA violations for external users.

- Watch for failed responses or fallback activations (e.g., when a provider times out or a safety filter blocks an output).

Misuse and abuse

- Detect anomalous usage: sudden surges in tokens, repeated prompts, or suspicious IPs.

- Identify usage patterns that may indicate scraping, automated misuse, or bypass attempts.

- Track prompt injections or manipulation attempts over time.

Portkey gives you built-in LLM observability for every model call:

- Logs and traces for every request and response — with token counts, latency, costs, and model used.

- Dashboards and alerts for latency spikes, error rates, cost anomalies, and guardrail hits.

- Feedback collection plugins to integrate thumbs up/down, free-text comments, or human review signals.

- Real-time anomaly detection for unexpected behavior or cost surges — especially critical in high-volume deployments.

All of this is visible in one place, without needing to cobble together logs from different providers or tools.

Ensure data governance and provenance

Behind every AI output is data, and that data must be governed just as tightly as the models themselves. In 2025, data misuse is the fastest path to regulatory violations, reputational damage, or broken user trust.

Your AI governance program needs to ensure that input data is clean and compliant, and that output data is traceable and attributable. That’s where provenance comes in — knowing what went into a response, and how it was generated.

Key areas of data governance

a. Input validation and data classification

- Ensure prompts don’t contain sensitive data that violates internal or regulatory policies (e.g., PII, PHI, credit card info).

- Classify and tag input data sources structured vs. unstructured, internal vs. external, synthetic vs. real.

- Block unsafe prompt patterns using regex or webhook guardrails.

b. Provenance tracking

- Log every prompt-response pair with metadata: who triggered it, what model was used, and what fallback logic (if any) applied.

- For multi-model or agentic setups, capture the full chain of reasoning and intermediate steps.

- Maintain records that make every output explainable — critical in regulated industries.

c. Data retention and auditability

- Define how long prompts and responses are stored, and who can access them.

- Support deletion workflows for user data (for GDPR/CCPA compliance).

- Enable traceability from output all the way back to source inputs and prompt instructions.

Portkey gives you the infrastructure to implement real data governance without slowing your teams down:

- Automatically log every input and output along with metadata like user ID, route, model, risk level, and fallback path.

- Add PII detection or redaction guardrails before the model call (bring your own or use Portkey's guardrails).

- Export logs for audit trails, internal reviews, or compliance reporting — with full visibility into what data was used, when, and how.

Data governance and provenance are your safety net when things go wrong, and your proof of integrity when questioned.

Review and update policies continuously

AI systems don’t stand still — models evolve, regulations tighten, and new risks emerge. Governance policies that worked last quarter might be outdated today.

That’s why AI governance needs to be an ongoing process, not a one-time setup. Teams should establish a policy lifecycle, with regular reviews tied to product releases, model upgrades, or compliance updates.

At a minimum:

- Review your model inventory and usage every quarter

- Reassess guardrails when new use cases or providers are added

- Update fallback logic and moderation thresholds as models change

- Track global regulatory changes and adapt policies accordingly

Because Portkey's AI Gateway centralizes your routing, guardrails, and logs, updating policies doesn’t require code changes or coordination across teams. You can:

- Roll out guardrail updates instantly across all requests

- Change model assignments without touching application code

- Version and document every change for auditability

In short, Portkey makes governance flexible, so your policies can evolve as fast as your AI stack does.

Implement access and usage controls

Unchecked access is one of the biggest risks in any AI system, whether it's prompt abuse, overuse of expensive models, or exposure to sensitive data. Strong governance requires defining who can use which models, when, and how much.

Access control

- Limit which users, teams, or services can access specific requests or models.

- Use Role-Based Access Control (RBAC) to prevent unauthorized changes to prompt templates, guardrails, or model configs.

Usage limits

- Set rate limits per user, API key, or team to prevent abuse or unexpected cost spikes.

- Implement token or budget caps across teams or environments.

Approval workflows

- For high-risk use cases, require approvals before new requests or models go live.

- Gate access to powerful or expensive models behind permission layers.

Portkey gives you all of this natively:

- RBAC controls who can view, edit, or deploy models and limits

- Set per-route quotas, rate limits, and budget ceilings

- Enforce team-specific usage policies using metadata tags

- Create staging vs. production environments to control rollout risk

These controls keep your AI stack secure, cost-efficient, and compliant, without slowing your builders down.

9. Maintain compliance documentation

Whether you're preparing for an external audit, internal review, or regulatory inquiry, you need a paper trail that shows how your AI systems are governed.

At a minimum, your documentation should cover:

- A complete model and route inventory, with associated risk levels and owners

- Guardrail configurations and change history per route

- Prompt templates, model settings, and fallback behavior for each endpoint

- Access controls, rate limits, and approval workflows

- Logs of incidents, overrides, or edge cases, with resolution steps

If you’re operating in a regulated industry or under policies like the EU AI Act, this is a requirement.

Portkey automatically captures much of this for you:

- All requests, responses, and guardrail hits are logged with timestamps and user attribution

- Audit logs let you demonstrate compliance, without stitching data together from multiple systems

Documentation is your insurance policy when something breaks, and your proof of control when regulators ask how your AI stack works.

10. Train teams on responsible AI practices

Governance tools are only as strong as the people using them. To make AI safe, compliant, and effective, every team involved, from engineers to product managers to legal, needs to understand how to build responsibly.

This isn’t just about ethics workshops or yearly compliance slides. It’s about hands-on, context-specific training that aligns with each team’s role.

How to make it actionable:

- Integrate governance training into onboarding for teams working with AI.

- Host regular internal reviews of high-risk use cases — share what went wrong and how it was mitigated.

- Offer playbooks and checklists tied to your own infrastructure, so teams don’t have to guess.

Portkey’s dashboards, logs, and policy infrastructure make AI governance transparent and teachable:

- Teams can explore how requests are routed, how guardrails are applied, and where fallback logic kicks in.

- Prompt templates and configs are versioned, so newer team members can learn from prior decisions.

- Use metadata tagging to build org-wide reports and make governance progress visible.

Governance is how you scale AI safely

AI governance in 2025 is the backbone of building responsibly. As model usage spreads across functions and regulators raise the bar on accountability, the most effective teams are shipping with guardrails, observability, and ownership baked in.

This checklist is your foundation. Whether you’re securing sensitive data, enforcing input/output standards, or reviewing model behavior, these ten principles help you stay ahead of risks — and avoid reactive firefighting later.

Portkey gives you everything you need to implement these governance controls — from centralized routing and live model inventory to input/output guardrails, audit logs, access policies, and more.

Start governing your LLM stack the right way. Try Portkey or book a demo today.