August at Portkey: 2 BILLION Requests, Guardrails, Tracing, and More

Last month at Portkey, we crossed 2 BILLION total requests processed through our platform. To think that Portkey started just a year ago, when this number was at 0!

We're truly humbled to be production partners for some of the world's leading AI companies, and that drives us to keep innovating and making Portkey better. In July and August, we shipped 3 major releases (and about 40 other releases).

Here's everything we shipped:

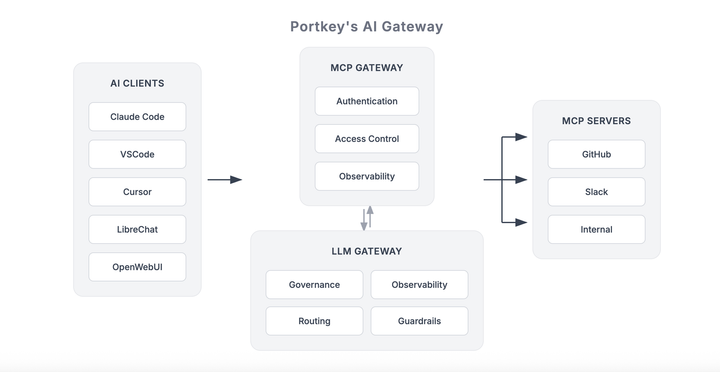

Release #1: Guardrails

When it comes to taking AI apps to production, we believe unpredictable LLM behaviour is THE BIGGEST obstacle standing in the way.

We're solving this by bringing 50+ state-of-the-art AI Guardrails (like PII detection, retrieval accuracy check, JSON schema match, and more) on the Portkey Gateway.

You can now synchronously run Guardrails on your requests and route them with precision.

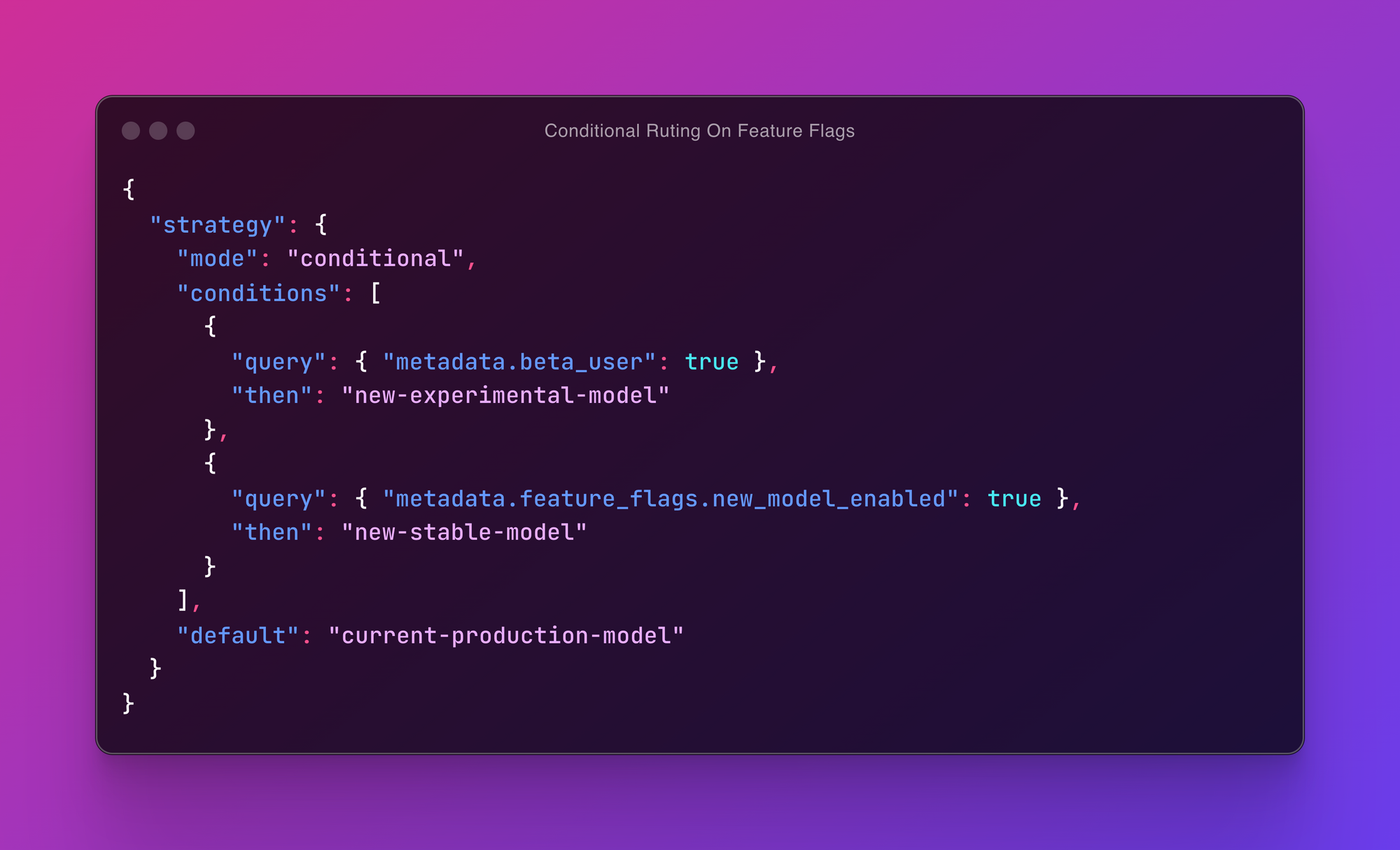
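To make the idea concrete, here is a minimal sketch of running checks synchronously on a response and routing on the verdict. It is not Portkey's implementation or API: the check names (`no_pii`, `has_answer`), the email-only PII regex, and the `deliver`/`fallback` outcomes are all assumptions chosen for illustration.

```python
import json
import re
from typing import Callable

# Hypothetical PII check: only looks for email-like strings.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def no_pii(text: str) -> bool:
    """Pass only if the text contains no email-like string."""
    return EMAIL_RE.search(text) is None


def is_json_object_with(keys: set) -> Callable[[str], bool]:
    """Build a check that passes if text parses as a JSON object containing `keys`."""
    def check(text: str) -> bool:
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            return False
        return isinstance(data, dict) and keys.issubset(data)
    return check


def run_guardrails(text: str, checks: dict) -> dict:
    """Run every check synchronously and report which ones failed."""
    failed = [name for name, check in checks.items() if not check(text)]
    return {"passed": not failed, "failed": failed}


def route(verdict: dict) -> str:
    """Toy routing decision: deliver on success, otherwise fall back."""
    return "deliver" if verdict["passed"] else "fallback"


# Hypothetical check names, used only for this example.
checks = {"no_pii": no_pii, "has_answer": is_json_object_with({"answer"})}
good = run_guardrails('{"answer": "42"}', checks)
bad = run_guardrails('{"answer": "mail me at a@b.com"}', checks)
```

In this sketch `route(good)` yields `deliver` and `route(bad)` yields `fallback`; a real setup would likely map a failed verdict to a retry, a different model, or a denied request.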

Release #2: Conditional Routing

As your app scales, you'll likely face stringent data residency requirements, bespoke model demands, and other such concerns.

With Conditional Routing on the Gateway, you can route your requests to different model configurations based on custom conditions.

For example, you can route an EU user's request to an EU-hosted Claude model, send a beta tester's request to a preview model, and much more.

Here's a sample conditional routing config:

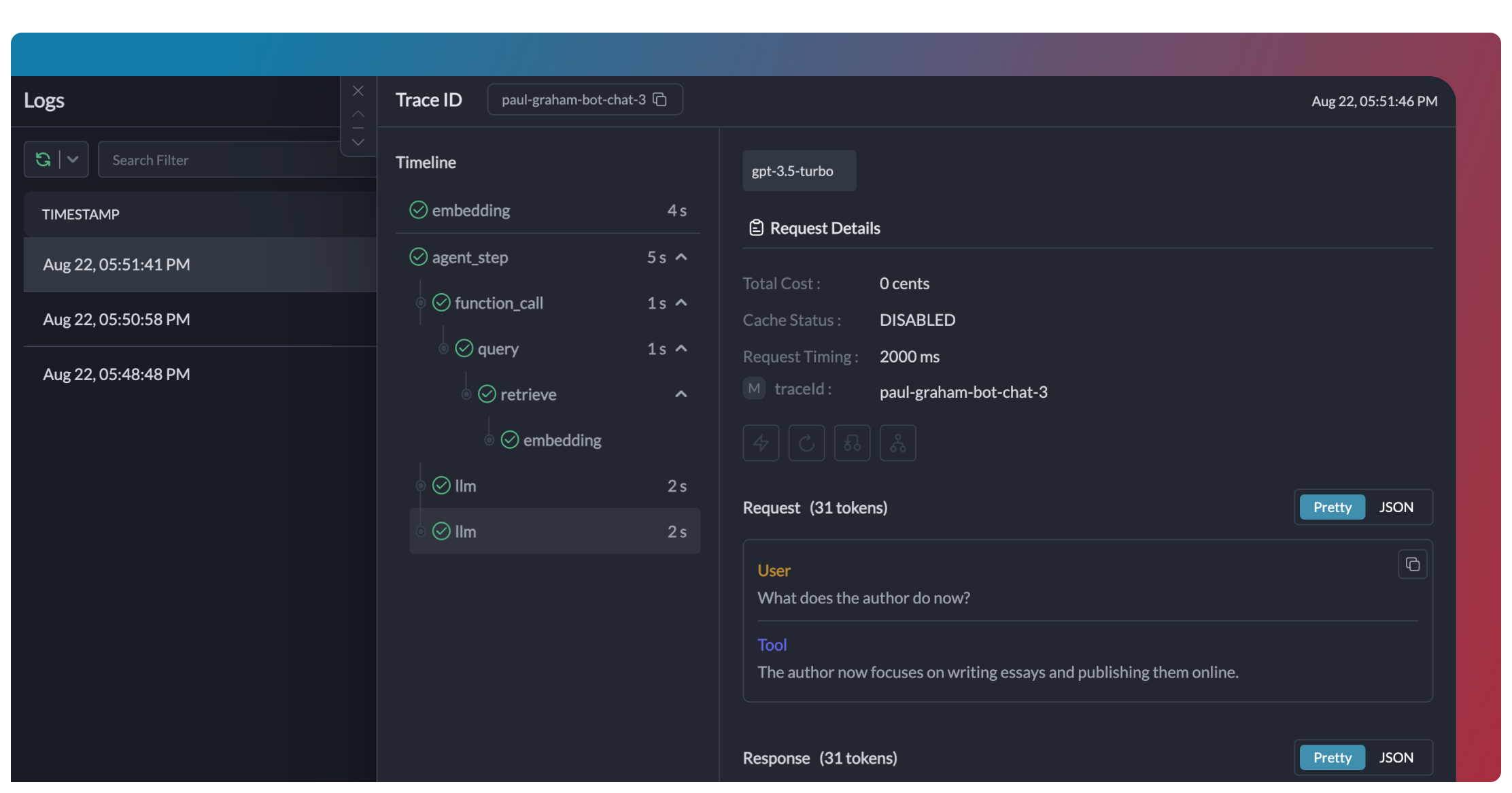
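For readers who prefer code to screenshots, the routing logic can be sketched in a few lines of Python. This is only an illustration of the pattern: the config field names (`when`, `then`, `default`), the metadata keys, and the target names are assumptions and do not reproduce Portkey's actual config schema.

```python
from typing import Any

# Illustrative config; field names and targets are hypothetical.
CONFIG = {
    "conditions": [
        {"when": {"metadata.region": "eu"}, "then": "claude-eu"},
        {"when": {"metadata.beta_tester": True}, "then": "preview-model"},
    ],
    "default": "standard-model",
}


def lookup(request: dict, dotted_key: str) -> Any:
    """Resolve a dotted path like 'metadata.region'; return None if missing."""
    node: Any = request
    for part in dotted_key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def pick_target(request: dict, config: dict = CONFIG) -> str:
    """Return the first target whose conditions all match, else the default."""
    for rule in config["conditions"]:
        if all(lookup(request, key) == value for key, value in rule["when"].items()):
            return rule["then"]
    return config["default"]
```

Rules are evaluated in order, so in this sketch an EU user who is also a beta tester lands on `claude-eu`; putting the stricter requirement (data residency) first is a deliberate choice.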

Release #3: Tracing

We now let you trace your whole agent/chat/RAG pipeline in a neat chronological view, with support for spans and parent spans.

Better illustrated with this image:

You can instrument your tracing however you want on Portkey, with complete support for the OpenTelemetry standard.
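If spans and parent spans are new to you, the sketch below shows how a flat list of spans turns into an indented, chronological view. It uses only the Python standard library and is not Portkey's or OpenTelemetry's data model: the `Span` fields and the span names (`rag_pipeline`, `retrieve_docs`, `llm_call`) are made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    name: str
    start_ms: int
    end_ms: int
    parent_id: Optional[str] = None  # None marks a root span


def render(spans: list) -> list:
    """Return one indented line per span, parents before children, ordered by start time."""
    children: dict = {}
    for span in spans:
        children.setdefault(span.parent_id, []).append(span)

    lines: list = []

    def walk(parent_id: Optional[str], depth: int) -> None:
        for span in sorted(children.get(parent_id, []), key=lambda s: s.start_ms):
            duration = span.end_ms - span.start_ms
            lines.append(f"{'  ' * depth}{span.name} ({duration} ms)")
            walk(span.span_id, depth + 1)

    walk(None, 0)
    return lines


# Spans can arrive out of order; the parent_id links restore the hierarchy.
spans = [
    Span("3", "llm_call", 40, 190, parent_id="1"),
    Span("1", "rag_pipeline", 0, 200),
    Span("2", "retrieve_docs", 5, 35, parent_id="1"),
]
view = render(spans)
```

Printing `"\n".join(view)` shows the root span first, with its two child spans indented beneath it in start-time order.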

More New Features & Fixes

- Portkey now has Single Sign-On (SSO)

- Portkey can automatically handle auth for your Vertex account with Service Account-based Auth (more)

- You can load balance, fallback, and do more routing on OpenAI & Azure OpenAI's Audio routes (more)

- Prompt playground now supports parallel tool calling

- You can now add rate limits to virtual keys

- OpenAI's Structured Outputs feature is now supported on Portkey (more)

- Anthropic's prompt caching is now available on Portkey

New Models & New Integrations

- Codestral Mamba, GPT-4o-mini, Llama 3.1 (8B, 70B), Mistral Large 2, the new Gemini Experimental, and the latest version of GPT-4o are some of the new models that work with Portkey, with more being added every week.

- Vercel, DSPy, and Tembo DB now have native Portkey integrations.

- We also added support for calling the Cerebras inference, Azure AI inference, GitHub Models, Hugging Face, DeepSeek, Voyage, Deepbricks, and SiliconFlow providers.

New APIs

Community Updates

- We hosted Bengaluru's brightest AI engineers & leaders at our meetup with Postman. Read the report here.

- We ran a webinar for engineers on how to put Aporia guardrails in production with Portkey (watch here)

- We also chatted with the OSS4AI community about the "Guardrails on the Gateway" pattern (watch here)

And now, some praise

Says something when the Hoppscotch team likes Portkey!

That's it! This month, we are cooking → folders for prompt templates, advanced tracing, advanced conditional routing, some very very cool tools, and more!

PS: Leave a ⭐️ on the Gateway repo please?