Build vs Buy - LLM Gateways

The use of Large Language Models (LLMs) has changed how enterprises approach AI and automation. LLM Gateways serve as essential infrastructure for managing, securing, and optimizing AI models, helping companies organize their AI workflows.

But when faced with the choice of building an LLM Gateway from scratch or buying an off-the-shelf solution, what’s the best route to take?

This post explores the benefits and challenges of both options to help you make an informed decision.

Why the Debate Matters: Build vs Buy

The decision between building and buying an LLM Gateway is crucial because it directly impacts an organization’s ability to use AI effectively. As AI models become increasingly complex and diverse, businesses must weigh the long-term value of customizing a solution against the efficiency and speed that come with purchasing a ready-made platform.

What factors should organizations weigh?

- Time-to-market

- Costs

- Resources

- Scalability and maintenance

Building an LLM Gateway In-House

Building your own gateway puts you in the driver's seat - you control everything from customization to security. However, this approach demands time, resources, and expertise.

What are the advantages of building an LLM Gateway in-house?

First is customization. You can tailor the LLM Gateway to your organization’s unique needs and prioritize specific functionality such as data security, particular integrations, or unique model tuning requirements. This level of control allows for more flexibility and adaptability as your business evolves.

For organizations with strict security or regulatory requirements, building an internal solution helps ensure that sensitive data stays within your environment. This can be important for industries such as healthcare, finance, or government, where data privacy and compliance are non-negotiable.

Also, developing your own solution gives you the ability to scale according to your needs. If your business expands or requires a sudden increase in processing power, your infrastructure can be upgraded without waiting for a third-party vendor to make adjustments.

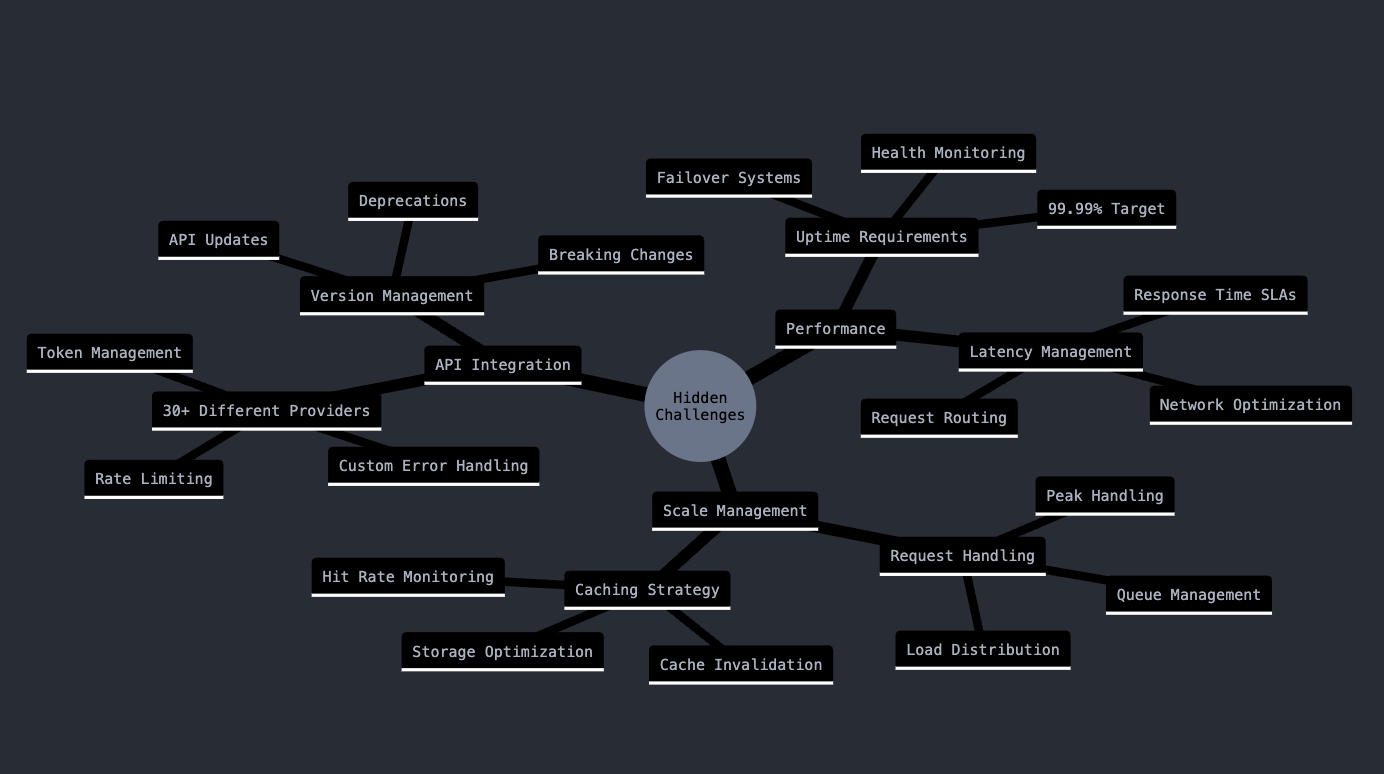

What are the challenges of building an LLM Gateway in-house?

Building an LLM Gateway from scratch requires a team of specialized developers, AI experts, and IT resources. This can be expensive, especially if the business lacks an in-house AI team.

Additionally, the costs of building an LLM Gateway can add up over time. From initial development to ongoing maintenance, your team will need to allocate substantial resources to ensure the gateway operates smoothly. This can divert attention from other critical business priorities.

AI models and technologies evolve rapidly. Ensuring that your LLM Gateway remains effective requires frequent updates, model tuning, and possibly even re-architecting parts of the solution. This continuous upkeep can be a drain on resources and may require specialized expertise that’s difficult to maintain in-house.

Building an LLM Gateway gives you control but introduces complexities that require careful management. It’s not the right solution for everyone, especially for businesses that need a fast and efficient AI implementation.

Buying an LLM Gateway: The Safer Route

For many organizations, purchasing an off-the-shelf LLM Gateway offers a practical and efficient way to manage their AI applications.

What are the advantages of buying an LLM Gateway solution?

Buying an LLM gateway can get you up and running with AI capabilities in days rather than months. You'll skip the lengthy development cycle and dive straight into implementation, which keeps you competitive in a fast-moving market.

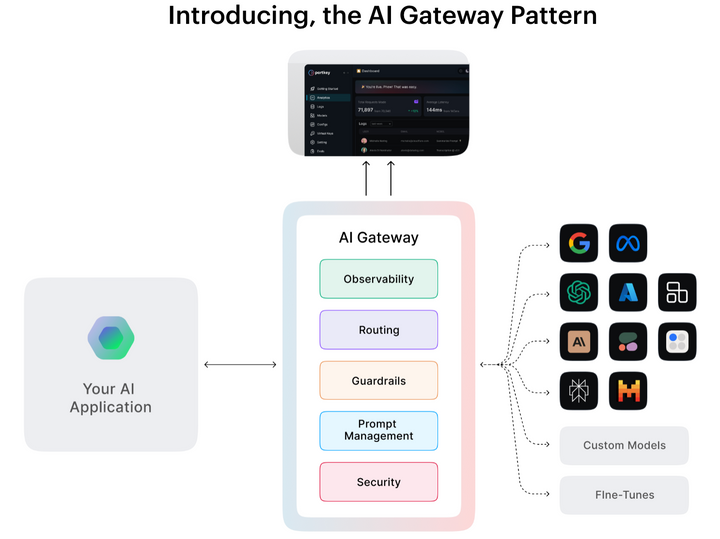

These platforms pack all the essential features you'll need - from observability tools to prompt management, model integrations, and security guardrails. When new AI capabilities emerge, they're rolled into regular updates, saving you from building these features from scratch.

One major advantage of established platforms is that many already support compliance with regulations and frameworks like GDPR, HIPAA, and SOC 2. Your sensitive data is protected without your team spending months building security protocols, which saves significant development and audit time.

On the financial side, subscription fees often work out cheaper than building in-house once you factor in the full picture: the costs of hiring an AI team, setting up infrastructure, and keeping everything running smoothly. For many organizations, buying proves to be the smarter financial choice.
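To make the "full picture" comparison concrete, here is a minimal sketch of the arithmetic. It assumes each option can be reduced to an upfront cost plus a flat monthly cost, which is a simplification. The function names are hypothetical, and the figures mentioned afterwards are placeholders rather than real pricing.

```python
from typing import Optional


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after `months`, assuming a flat monthly cost."""
    return upfront + monthly * months


def break_even_month(
    build_upfront: float,
    build_monthly: float,
    buy_upfront: float,
    buy_monthly: float,
    horizon_months: int = 120,
) -> Optional[int]:
    """First month at which building costs no more than buying.

    Returns None if building never catches up within the horizon.
    """
    for month in range(1, horizon_months + 1):
        build_total = cumulative_cost(build_upfront, build_monthly, month)
        buy_total = cumulative_cost(buy_upfront, buy_monthly, month)
        if build_total <= buy_total:
            return month
    return None
```

With placeholder inputs, you can call `break_even_month` using your own estimates for team, infrastructure, and subscription costs. If the result is `None`, or falls well beyond your planning horizon, buying is the cheaper option under these assumptions.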

The support ecosystem that comes with established vendors is invaluable. You get comprehensive documentation, access to technical teams who've handled similar implementations, and training resources to skill up your team quickly. This becomes especially crucial if you're new to AI implementation or working with limited internal resources.

What are the challenges of buying an LLM Gateway solution?

- Potential Vendor Lock-In: Relying on a single vendor can sometimes limit flexibility, particularly if the gateway doesn’t support integrations with all tools or platforms your business uses. However, many vendors now prioritize interoperability to reduce this risk.

- Less Customization: While most gateways allow some level of configurability, they may not fully align with specific organizational needs. However, leading vendors often provide APIs and SDKs to address this limitation.

Organizations that need to scale quickly, launch AI-powered products, or focus on operational efficiency often benefit most from buying. These platforms cater to such needs, offering flexibility, scalability, and advanced monitoring tools out of the box.

Buying an LLM Gateway is often the preferred route for companies seeking rapid adoption, robust features, and minimal ongoing technical demands.

What should you choose?

Buying an LLM Gateway is often the better option for organizations that prioritize agility, scalability, and cost efficiency. It allows businesses to focus on what truly matters: building and optimizing AI-powered applications. Instead of dedicating resources to operational work like maintaining infrastructure, updating models, or adding new integrations, organizations can rely on a vendor to handle LLMOps.

Pre-built solutions simplify budget management and cost control with built-in features like usage monitoring, caching, and optimized routing. These capabilities prevent resource waste and help teams stay within predefined limits without requiring constant oversight.
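As a rough illustration of how usage monitoring, caching, and a spending limit fit together, here is a minimal sketch. It is not how any particular gateway implements these features. The class name `GatewaySketch`, the `call_model` callback, and the word-count token estimate are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


class BudgetExceeded(Exception):
    """Raised when a request would push spend past the configured limit."""


@dataclass
class GatewaySketch:
    """Hypothetical gateway core: response cache plus a hard budget cap."""

    budget_usd: float
    cost_per_1k_tokens: float
    spent_usd: float = 0.0
    cache_hits: int = 0
    cache: Dict[str, str] = field(default_factory=dict)

    def complete(self, prompt: str, call_model: Callable[[str], str]) -> str:
        # Caching: an identical prompt is served without a model call or new spend.
        if prompt in self.cache:
            self.cache_hits += 1
            return self.cache[prompt]

        # Usage monitoring: crude estimate that treats each word as one token.
        estimated_tokens = len(prompt.split())
        cost = estimated_tokens / 1000 * self.cost_per_1k_tokens

        # Budget control: refuse the request before any money is spent.
        if self.spent_usd + cost > self.budget_usd:
            raise BudgetExceeded(
                f"request would bring spend to {self.spent_usd + cost:.4f} USD, "
                f"over the {self.budget_usd:.4f} USD limit"
            )

        response = call_model(prompt)
        self.spent_usd += cost
        self.cache[prompt] = response
        return response
```

A caller would pass its own model client as `call_model`. Repeated prompts then cost nothing extra, and a request that would exceed the limit fails fast instead of quietly overspending.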

Additionally, purchased gateways provide the flexibility to adopt new features and integrations as they become available, keeping your AI ecosystem modern and adaptable. By outsourcing operational challenges, businesses can direct their efforts toward improving user experiences and enhancing the performance of their AI applications, for a greater return on investment.

While buying offers convenience, there are scenarios where building your own LLM Gateway is the more strategic choice. This route is most suitable for organizations with specialized requirements and extensive internal resources.

Ultimately, the right decision is the one that aligns with your business goals, resource availability, and desired outcomes. Assessing these factors holistically ensures your LLM Gateway strategy drives the most value for your organization.

For companies looking for a robust, scalable solution purpose-built for AI applications, Portkey stands out as a compelling choice. It is an enterprise-grade LLM Gateway that combines the flexibility of open-source software with a comprehensive suite of features tailored to AI apps. With Portkey, businesses can leverage observability, guardrails, budget control, and seamless integrations, all without the complexity of building from scratch.

Portkey’s open-source foundation ensures transparency and flexibility, making it adaptable to diverse organizational requirements. Whether your goal is to rapidly scale AI capabilities or maintain tight control over your infrastructure, Portkey offers the perfect blend of reliability and innovation.