Building AI agent workflows with the help of an MCP gateway

Discover how an MCP gateway simplifies agentic AI workflows by unifying frameworks, models, and tools, with built-in security, observability, and enterprise-ready infrastructure.

Agent workflows are quickly moving from experimental prototypes to mission-critical infrastructure in AI applications. Whether they are orchestrating research assistants, automating support tasks, or powering internal copilots, agents are becoming the execution layer for real-world AI.

But building and scaling these workflows is far from straightforward.

Today’s ecosystem is crowded with frameworks, each offering its own way to manage memory, tools, and multi-step reasoning. While the innovation is exciting, the fragmentation comes at a cost.

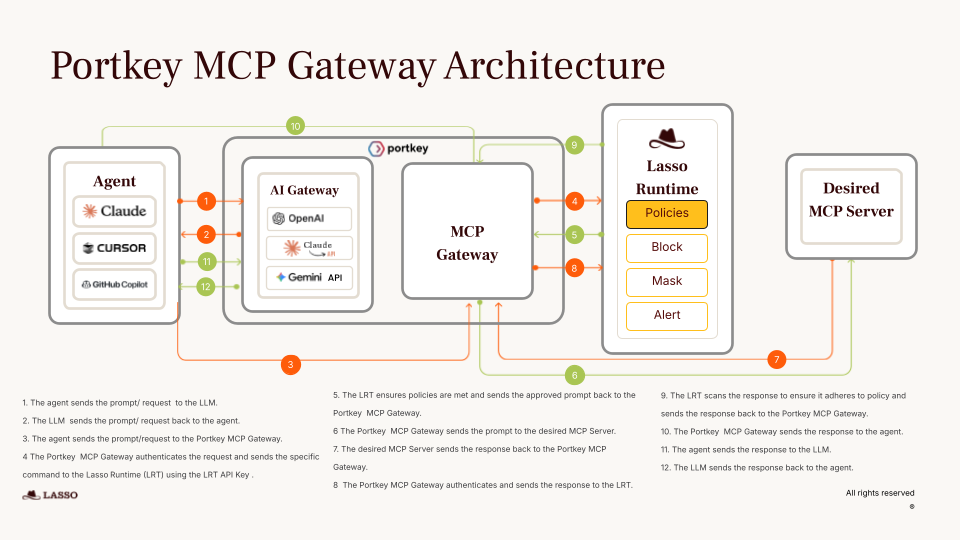

That’s why we built the MCP Gateway: a centralized control layer to run MCP-powered agents in production.


Challenges in today’s agent setup

What looks simple in a demo often turns into a web of tightly coupled systems, brittle integrations, and escalating infrastructure costs. The root problem starts with fragmentation.

There are too many competing AI agent frameworks, each with its own assumptions, APIs, and abstractions. There’s no standard way to compose multi-step reasoning, memory, or tool usage, which makes switching frameworks or integrating multiple ones operationally challenging.

These frameworks often come with a hidden cost: LLM provider lock-in. Many are built around specific model APIs, which means that if you start with one model provider and later want to switch for cost, performance, or compliance reasons, you may need to rewrite large parts of your system.

Tool integration is another pain point. Connecting agents to tools like search APIs, RAG pipelines, or internal knowledge bases requires custom adapters for each framework. As a result, teams end up duplicating work and maintaining fragile glue code across projects.

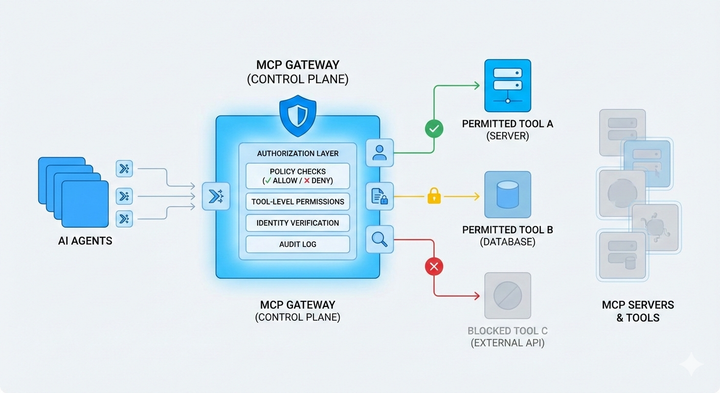

There is also no consistent way to manage which tools an agent can access, what data it can see, or how permissions are enforced. Most setups rely on implicit trust between loosely connected components, which is not ideal for production systems handling sensitive data.

Observability is equally siloed. Teams get only a limited view into agent behavior, and tracing a single user request across LLM calls, memory updates, and tool interactions is difficult.

And finally, the infrastructure just isn’t built for scale. Most agent workflows lack basic production features like intelligent routing, caching, batching, and failover. Without shared context or optimization layers, agents often rerun the same expensive operations, wasting compute and driving up cost.

Enter the MCP gateway

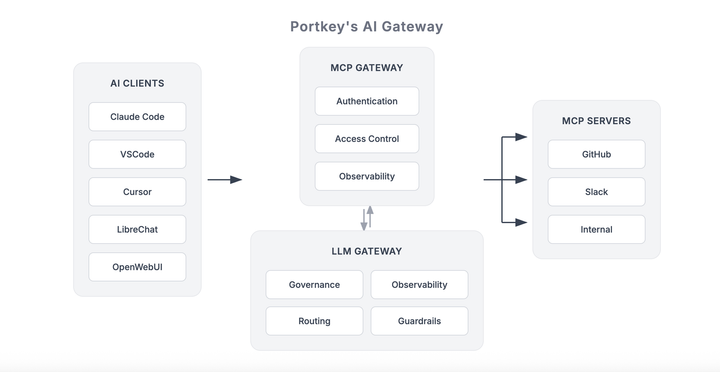

The MCP gateway changes the game for agent workflows. It acts as a standardization layer that connects any agent framework to any model or tool, while adding the observability, security, and infrastructure needed to move from prototype to production.

Instead of being locked into a single agent framework, teams can now build workflows that are multi-framework compatible. Whether you're using LangGraph for control flow, AutoGen for multi-agent reasoning, or CrewAI for team-based collaboration, the MCP gateway provides a consistent interface to plug into, reducing integration complexity and future-proofing your stack.

It’s also model-agnostic by design. The MCP gateway can route agent requests to different LLMs without needing to rewrite your agent logic. This makes it easy to optimize for performance, cost, or jurisdictional compliance.
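To make the idea concrete, here is a minimal sketch of policy-based model routing. It is not the MCP gateway's actual API: `ModelRouter`, the policy names, and the stand-in backends are all hypothetical, chosen only to show how agent code can stay unchanged while the routing table decides which model serves a request.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Hypothetical backend type: takes a prompt, returns model text.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Illustrative router: maps a policy name to a model backend."""
    routes: Dict[str, Backend]
    default: str

    def complete(self, prompt: str, policy: Optional[str] = None) -> str:
        # Unknown or missing policies fall back to the default route.
        name = policy if policy in self.routes else self.default
        return self.routes[name](prompt)


# Stand-in backends; a real deployment would call provider APIs here.
router = ModelRouter(
    routes={
        "cost": lambda p: f"[small-model] {p}",
        "quality": lambda p: f"[large-model] {p}",
        "eu-only": lambda p: f"[eu-hosted-model] {p}",
    },
    default="cost",
)


def agent_step(question: str, policy: Optional[str] = None) -> str:
    # The agent only knows about the router, never a specific provider.
    return router.complete(question, policy)
```

Switching an agent from the cheaper model to the EU-hosted one is then a change to the policy argument or the routing table, not to `agent_step` itself.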

But it’s not just a router. The MCP gateway brings enterprise-grade infrastructure to the table. Intelligent request routing, shared caching, batching, and load balancing are built in, enabling teams to run high-volume AI agent workloads with efficiency and reliability.
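As a rough illustration of two of these features, the sketch below combines a shared response cache with failover across backends. The class name `CachingFailoverClient` and the two stand-in backends are assumptions made for this example; a production gateway would also handle cache expiry, batching, and load balancing, which are left out here.

```python
import hashlib
from typing import Callable, Dict, List, Optional


class CachingFailoverClient:
    """Illustrative only: cache by prompt hash, try backends in order."""

    def __init__(self, backends: List[Callable[[str], str]]) -> None:
        self.backends = backends
        self.cache: Dict[str, str] = {}
        self.backend_calls = 0  # counts every attempt, including failures

    def complete(self, prompt: str) -> str:
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self.cache:
            return self.cache[key]  # repeated request: no backend call

        last_error: Optional[Exception] = None
        for backend in self.backends:
            self.backend_calls += 1
            try:
                result = backend(prompt)
            except Exception as exc:  # fail over to the next backend
                last_error = exc
                continue
            self.cache[key] = result
            return result
        raise RuntimeError("all backends failed") from last_error


# Hypothetical backends: the primary is down, the secondary works.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary unavailable")


def stable_secondary(prompt: str) -> str:
    return f"answer to: {prompt}"


client = CachingFailoverClient([flaky_primary, stable_secondary])
first = client.complete("summarize Q3 report")
second = client.complete("summarize Q3 report")  # served from cache
```

The second call never reaches a backend, which is the kind of repeated expensive operation a shared cache is meant to avoid.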

Security, too, is centralized. Instead of scattered permission logic across different frameworks and tools, the MCP gateway enforces unified access controls, audit logging, and governance across the entire stack, from AI agent to LLM to tool.
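One way to picture centralized enforcement is a single checkpoint that every tool call passes through. The sketch below is a simplified assumption, not the gateway's real permission model: `ToolGate`, the agent IDs, and the tool names are hypothetical. Each call is checked against one permission table and recorded in one audit log, whether it is allowed or denied.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class ToolGate:
    """Illustrative checkpoint: one permission table, one audit log."""
    tools: Dict[str, Callable[[str], str]]
    permissions: Dict[str, Set[str]]  # agent id -> allowed tool names
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def call(self, agent_id: str, tool: str, arg: str) -> str:
        allowed = tool in self.permissions.get(agent_id, set())
        # Record every attempt, including denied ones.
        self.audit_log.append((agent_id, tool, "allow" if allowed else "deny"))
        if not allowed:
            raise PermissionError(f"{agent_id} may not call {tool}")
        return self.tools[tool](arg)


# Hypothetical tools and agents for the example.
gate = ToolGate(
    tools={
        "search": lambda q: f"results for {q}",
        "crm_lookup": lambda q: f"record {q}",
    },
    permissions={
        "support-agent": {"search", "crm_lookup"},
        "research-agent": {"search"},
    },
)

search_result = gate.call("research-agent", "search", "mcp")
try:
    gate.call("research-agent", "crm_lookup", "42")
    denied = False
except PermissionError:
    denied = True
```

Because the check lives in one place, the same rule applies regardless of which framework the calling agent was built with.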

And when things go wrong, the MCP gateway gives you end-to-end observability. Every request is traceable across agents, LLM calls, tool executions, and final responses, giving teams the visibility they need to debug, optimize, and govern AI workflows.
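The core mechanism behind this kind of tracing is a shared trace ID that follows a request through every step. The following sketch assumes a toy in-memory `Tracer` and a hypothetical `handle_request` function; real systems would typically export spans to a tracing backend instead of a Python list.

```python
import time
import uuid
from contextlib import contextmanager
from typing import Dict, Iterator, List


class Tracer:
    """Illustrative in-memory tracer; spans are recorded when they end."""

    def __init__(self) -> None:
        self.spans: List[Dict[str, object]] = []

    @contextmanager
    def span(self, trace_id: str, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.spans.append({
                "trace_id": trace_id,
                "name": name,
                "duration_s": time.perf_counter() - start,
            })


tracer = Tracer()


def handle_request(question: str) -> str:
    # One trace ID per user request, shared by every step below.
    trace_id = uuid.uuid4().hex
    with tracer.span(trace_id, "agent.run"):
        with tracer.span(trace_id, "llm.call"):
            plan = f"look up: {question}"  # stand-in for an LLM call
        with tracer.span(trace_id, "tool.search"):
            evidence = f"results for {plan}"  # stand-in for a tool call
    return evidence


answer = handle_request("what is MCP?")
```

Filtering `tracer.spans` by `trace_id` then reconstructs the full path of a single request across the agent, the model call, and the tool call.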

What you end up with is a powerful abstraction: a single, consistent layer that connects your AI agents, models, and tools with production-grade infrastructure already built in.

| Capability | Without MCP Gateway | With MCP Gateway |
|---|---|---|
| Framework flexibility | Locked into a specific agent framework (LangGraph, CrewAI, etc.) | Plug in any framework via a standardized interface |
| Model routing | Hardcoded to a specific LLM provider | Route to 250+ LLMs with no code changes |
| Tool integration | Custom-built wrappers for each tool and framework | Unified tool connectors reused across agents |
| Security controls | Scattered, framework-dependent permission logic | Centralized permissions, audit logs, and governance |
| Observability | Limited or siloed; hard to trace agent behavior | End-to-end visibility across agents, tools, and LLM calls |
| Production infrastructure | No caching, batching, or routing; high latency and cost | Built-in infra: caching, load balancing, retries, batching |
| Cost optimization | Manual cost tracking per LLM or agent | Unified cost management and optimization across models and tools |
| Dev effort | High: fragile glue code and duplicate logic everywhere | Low: shared abstractions and standard APIs simplify integration |

Final thoughts

AI agent workflows have the potential to transform how teams build with AI, but today’s reality is fragmented, fragile, and hard to scale. Most setups are stuck reinventing infrastructure, struggling with integration, and flying blind without observability or cost control.

The MCP gateway flips the script. It turns agent development into a standardized, production-ready process. You get the freedom to use any framework, model, and tool without the lock-in or overhead. And you gain the infrastructure, visibility, and governance required to run AI agents reliably at scale.

If you're serious about moving agents from prototype to production, the MCP gateway is how you build that future.