Chain-of-Thought (CoT) Capabilities in O1-mini and O1-preview

Explore O1 Mini & O1 Preview models with Chain-of-Thought (CoT) reasoning, balancing cost-efficiency and deep problem-solving for complex tasks.

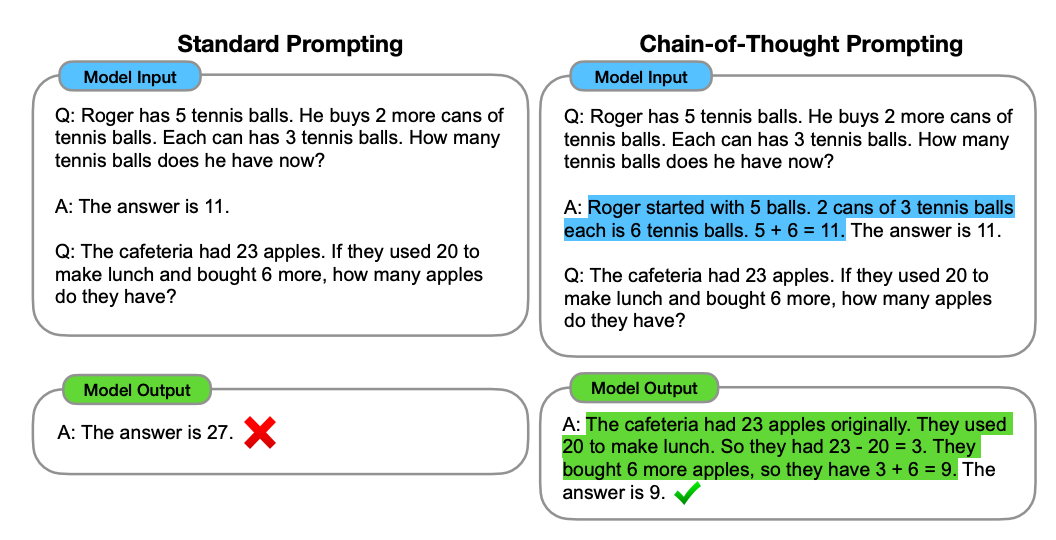

Chain-of-thought (CoT) reasoning allows models to break tasks down into logical steps rather than simply providing a direct answer, which opens the door for more nuanced problem-solving.

Two models, O1 Mini and O1 Preview, are pushing the boundaries of Chain-of-Thought (CoT) reasoning, each introduced by OpenAI with specific use cases in mind. According to OpenAI, these models were designed to enhance the reasoning abilities of AI systems, allowing them to better handle tasks that require multi-step, logical problem-solving.

O1 Mini is tailored for environments where computational resources are limited but users still need the benefits of structured reasoning. OpenAI suggests O1 Mini for simpler use cases like basic programming tasks, educational tools, and applications where quick, step-by-step outputs are needed without heavy computational overhead. It is meant to strike a balance between cost-efficiency and reasoning performance, making it accessible for developers working with constrained resources but still looking to leverage CoT capabilities.

Preview, on the other hand, is designed for more demanding, high-complexity tasks. OpenAI markets Preview as the go-to model for use cases involving deep reasoning, multi-hop questions, and scenarios that require synthesizing information across multiple steps. This could include applications in legal analysis, research, and sophisticated decision-making systems, where it's critical to not only arrive at a final answer but also clearly present the reasoning process behind it. However, due to its complexity and power, Preview is also more computationally intensive, and OpenAI highlights this as a factor for users to consider.

While CoT shows promise, it also comes with some practical trade-offs, especially around cost, time, and resource usage.

We’ve taken a closer look at how these models perform, their challenges, and where they fit best.

What is Chain-of-Thought Reasoning?

Imagine asking an AI to solve a math problem and getting a detailed step-by-step explanation, just like a human tutor would provide. This is the power of Chain of Thought prompting.

Large Language Models (LLMs) like GPT-3, GPT-4, and others are capable of performing a wide range of tasks, from writing articles to answering questions. However, they often struggle when it comes to tasks that require multi-step reasoning. Enter Chain of Thought (CoT) prompting, a technique that helps AI break down complex problems by reasoning through each step logically.

Instead of spitting out a single answer, models prompted this way “show their work,” providing a series of logical steps to explain how they reached their conclusion. This approach makes them useful for tasks that require deep reasoning, such as solving maths problems, logical puzzles, or answering complex multi-step questions.

This ability to "think out loud" helps in fields where transparency is essential, but it's not without its drawbacks, particularly when it comes to efficiency and resource consumption.
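To make the difference concrete, here is a minimal sketch of a direct prompt next to a CoT prompt for a general-purpose chat model. The function names, the prompt wording, and the `Answer:` convention are illustrative assumptions, not a prescribed format, and no model is actually called.

```python
from typing import Dict, Optional


def build_prompts(question: str) -> Dict[str, str]:
    """Return a direct prompt and a chain-of-thought prompt for the same question."""
    direct = f"{question}\nAnswer with the final result only."
    cot = (
        f"{question}\n"
        "Think through the problem step by step, numbering each step, "
        "then give the final result on a last line starting with 'Answer:'."
    )
    return {"direct": direct, "cot": cot}


def extract_answer(response: str) -> Optional[str]:
    """Pull the final answer out of a step-by-step response, if one is present."""
    # Scan from the end, since the final answer is expected on the last such line.
    for line in reversed(response.splitlines()):
        if line.strip().startswith("Answer:"):
            return line.split("Answer:", 1)[1].strip()
    return None


if __name__ == "__main__":
    prompts = build_prompts("A train travels 120 km in 1.5 hours. What is its average speed?")
    print(prompts["cot"])
    # A hand-written stand-in for a model response, used only to show the parsing.
    sample = "1. Speed = distance / time\n2. 120 / 1.5 = 80\nAnswer: 80 km/h"
    print(extract_answer(sample))
```

Asking for the answer on a marked final line keeps the visible reasoning while still letting downstream code pick out the result.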

CoT in Action: o1-mini and o1-preview

As the field of artificial intelligence continues to evolve, particularly with Chain-of-Thought (CoT) reasoning, two models—O1 Mini and O1 Preview—stand out. Both represent significant advancements in AI’s ability to handle complex tasks, each offering unique strengths. Understanding these differences is crucial for determining which model is best suited for specific applications.

o1-mini: Compact but Efficient

If you’re operating in an environment with limited resources, O1 Mini is an excellent choice. This model is specifically designed to handle straightforward CoT reasoning tasks effectively. For example, when you use O1 Mini to tackle basic math problems, it methodically walks through each calculation step, providing clarity on how it arrives at the final answer. This structured approach not only yields accurate results but also fosters a better understanding of the reasoning process.

That said, it’s important to be aware of O1 Mini’s limitations. While it excels at simpler, linear tasks, it can struggle with more complex or domain-specific queries that require a deeper contextual understanding. This means that if your projects involve intricate problem-solving, O1 Mini might not be the most reliable option. It’s a great choice for balancing cost and computational efficiency, but keep in mind that it may not always meet the demands of more sophisticated tasks.

o1-preview: Built for complexity

In contrast, Preview is tailored for complexity. This model shines when it comes to handling multifaceted tasks that require deeper reasoning. If you’re faced with questions that involve synthesising information from various sources or multi-step thinking, Preview is the model to rely on. It excels in scenarios such as legal analysis or advanced research, where clarity and depth are paramount.

However, the increased capability of Preview does come with trade-offs. It consumes significantly more tokens and computational resources than O1 Mini, resulting in higher operational costs and potentially slower performance. If your project has the budget and timeline to accommodate this, Preview is an excellent option. Yet, for everyday tasks where efficiency is key, the resource demands of Preview might not always be justified.

O1 in action

Users have been finding a wide range of applications for O1 models in their work. Developers have frequently turned to O1 Mini for tasks like debugging and resolving logic problems, where its step-by-step reasoning has proven useful in breaking down complex code issues. For instance, many have shared how it helps write small blocks of code efficiently, such as in this coding example and in broader discussions on Reddit.

For more advanced tasks, O1 Preview has been used for multi-step problem-solving and synthesizing information from multiple sources. Users have shared how it outperforms other models in handling complex reasoning, with one developer noting its superior performance in certain coding tasks as compared to GPT-4, which they discuss in detail here. Others have reported being impressed by its ability to exceed expectations in challenging tasks, as reflected in another Reddit post.

Beyond technical fields, content creators are using O1 models for tasks like generating high-quality marketing copy and blog posts. This versatility is highlighted in various user experiences, such as in content creation discussions. These diverse examples showcase how users are leveraging O1 models across different domains, pushing the boundaries of what AI can achieve.

Cost, Time, and Token Usage

While CoT reasoning sounds great in theory, its real-world application comes with some challenges, especially when it comes to cost, time, and token consumption.

- Token Usage and Limits: CoT models inherently use more tokens because they break down tasks into multiple steps. This means that, depending on the complexity of the task, you might run into token limits before the model even provides a final answer. For longer or more complex queries, the model could use up its entire token allowance on just “thinking through” the problem, leaving no room to generate a final response. This can be a frustrating limitation, especially in scenarios where budgeted tokens are tight.

- Cost: As a direct consequence of increased token usage, CoT reasoning can drive up the cost of running these models, especially for larger models like Preview. In a production environment where you’re paying by the token, this can quickly add up. O1 Mini offers a cheaper option but doesn’t perform as well on complex tasks. So, there’s a trade-off between cost and reasoning depth.

- Processing Time: Breaking down a problem into multiple reasoning steps also takes time. If you’re working in a real-time application, this extra processing time can become a bottleneck. While Preview’s detailed step-by-step reasoning is great for offline tasks or research, it may not be suitable for live customer support or real-time analytics, where quick responses are crucial.

- Inconsistencies and Errors: Even with CoT reasoning, models can sometimes provide incorrect or inconsistent answers. While the process of breaking tasks down can enhance clarity, it doesn’t always guarantee accuracy. Models might follow flawed logic or misinterpret parts of the problem, leading to an incorrect conclusion despite the increased token usage and time spent. Additionally, some models might generate different results for the same task based on how the prompt is structured, which can be a major drawback for tasks requiring consistency.
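The token and cost points above can be sketched as simple arithmetic. This is a rough illustration that assumes reasoning steps count against the same output allowance as the final answer and are billed at the output rate. The class, the function names, and the prices in the usage example are hypothetical placeholders, not published rates.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    prompt_tokens: int     # tokens in the request
    reasoning_tokens: int  # tokens spent "thinking through" the problem
    answer_tokens: int     # tokens in the final response


def tokens_left_for_answer(output_limit: int, reasoning_tokens: int) -> int:
    """Tokens remaining for the final answer once reasoning has used its share."""
    return max(0, output_limit - reasoning_tokens)


def estimate_cost(usage: Usage, input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate request cost, assuming reasoning tokens are billed as output tokens."""
    billed_output = usage.reasoning_tokens + usage.answer_tokens
    total = (usage.prompt_tokens * input_price_per_million
             + billed_output * output_price_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    usage = Usage(prompt_tokens=1_000, reasoning_tokens=3_000, answer_tokens=500)
    # Placeholder prices per million tokens; substitute your provider's real rates.
    print(estimate_cost(usage, input_price_per_million=2.0, output_price_per_million=8.0))
    # With a 4,000-token output limit, 3,000 reasoning tokens leave 1,000 for the answer.
    print(tokens_left_for_answer(output_limit=4_000, reasoning_tokens=3_000))
    # If reasoning alone exceeds the limit, nothing is left for the final response.
    print(tokens_left_for_answer(output_limit=4_000, reasoning_tokens=5_000))
```

Running the same numbers with and without the reasoning tokens shows how much of the bill comes from the intermediate steps rather than the answer itself.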

So, When Does CoT Make Sense?

Despite the potential drawbacks of Chain-of-Thought (CoT) reasoning, such as increased token usage, higher costs, processing time, and occasional inconsistencies, there are still several scenarios where the benefits outweigh these challenges. Here are a few areas where CoT makes sense to use:

- Complex Problem-Solving: In situations where tasks require multi-step reasoning, such as advanced maths problems or scientific computations, CoT can break down complex steps and offer a more transparent view of the model’s thought process. Even though it may consume more tokens, the ability to dissect a problem into clear, manageable pieces can be invaluable.

- Research and Analysis: CoT models are particularly useful in research fields like legal analysis, financial modeling, or medical diagnostics, where decisions need to be explained step-by-step. The transparency in reasoning helps researchers understand how a conclusion was reached, providing clarity even if the model’s output is imperfect or needs refinement.

- High-Stakes Decision-Making: In fields like governance, healthcare, and finance, where the reasoning behind a decision must be justified, CoT models shine. While the token cost might be high, the ability to provide logical steps in the decision-making process can increase trust in AI-driven recommendations. Even when the final answer is incorrect, the model’s reasoning provides insights that can guide human decision-makers.

- Education and Training: CoT models are ideal for educational purposes, especially in fields that require the explanation of processes, such as teaching maths, physics, or programming. The extra time and tokens are justified in these scenarios because the step-by-step reasoning helps students understand how to approach problems, fostering deeper learning. Inconsistencies in responses can even become teachable moments.

- Exploratory Tasks with Iterative Feedback: In exploratory or creative tasks where trial and error are part of the process (like brainstorming, creative writing, or coding), CoT can offer valuable insights by breaking down different approaches. The higher cost and occasional inconsistencies are less critical here because the iterative nature of the work allows for refinement over time.

A Balanced Take: The Potential and the Drawbacks

CoT reasoning is an exciting advancement, but it’s not a magic bullet. O1 Mini and Preview both highlight the potential of this technology, but they also reveal its limitations. While O1 Mini offers a resource-efficient solution for simpler tasks, Preview delivers deeper reasoning capabilities at a higher cost and computational load. Both models require careful prompt crafting to ensure they provide useful outputs without burning through tokens prematurely.

Ultimately, CoT models excel in tasks that require transparency, multi-step reasoning, and problem-solving, but they need to be deployed thoughtfully. If you can afford the extra tokens and time, they offer tremendous value in the right use cases. However, for everyday applications where speed and cost-efficiency are paramount, a non-CoT model might still be the better choice.

By understanding the trade-offs and strengths of CoT reasoning, we can better decide when—and where—it makes sense to integrate these models into real-world applications.
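The trade-offs discussed in this section can be captured in a small routing heuristic. This is only a sketch of the reasoning in the text, not a recommended production policy: the `Task` fields and the `non-cot-model` label are hypothetical, and real routing would likely weigh budget and latency numerically.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_multistep_reasoning: bool  # does the task benefit from step-by-step reasoning?
    needs_fast_response: bool        # e.g. live customer support or real-time analytics
    is_complex: bool                 # e.g. multi-hop questions, legal analysis, research


def choose_model(task: Task) -> str:
    """Pick a model label following the trade-offs described in the article."""
    # Speed-critical or single-step work: the extra tokens and time are hard to justify.
    if task.needs_fast_response or not task.needs_multistep_reasoning:
        return "non-cot-model"  # placeholder label for any non-CoT model
    # Deep, multi-step work where cost and latency are acceptable.
    if task.is_complex:
        return "o1-preview"
    # Simpler step-by-step tasks where cost-efficiency matters.
    return "o1-mini"


if __name__ == "__main__":
    print(choose_model(Task(needs_multistep_reasoning=True, needs_fast_response=False, is_complex=True)))
    print(choose_model(Task(needs_multistep_reasoning=True, needs_fast_response=False, is_complex=False)))
    print(choose_model(Task(needs_multistep_reasoning=True, needs_fast_response=True, is_complex=True)))
```

Checking the speed requirement first reflects the point that real-time applications are a poor fit for long reasoning chains, regardless of how complex the task is.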

Try CoT for yourself!

Make CoT requests across some of the latest models with our CoT Cookbook.

You can now try out o1-mini and o1-preview models on the Portkey prompt playground and SDK!