How Fontys ICT built an institutional AI platform with a gateway architecture

Fontys ICT shares how it built a governed, multi-provider AI platform using a gateway architecture to ensure equitable access, EU compliance, and cost control.

Fontys ICT is a university of applied sciences in the Netherlands, where students, researchers, and teaching staff work on real-world ICT challenges.

As generative AI became a foundational technology across education and research, Fontys ICT set out to answer a specific question: can a university of applied sciences offer advanced AI capabilities on its own terms, with fair access for all students and staff, transparent risks, controlled costs, and full alignment with European law?

To explore this, Fontys ICT designed and operated an institutional AI platform as a six-month pilot involving around 300 users. The goal was not to replace commercial innovation, but to create a governed, multi-provider AI environment that the institution itself could understand, control, and stand behind.

Fontys ICT has published a detailed whitepaper documenting this work. The report walks through the platform architecture, operational decisions, and governance learnings from running an institutional AI platform in practice.

The challenges of AI adoption in higher education

As generative AI adoption accelerated, Fontys ICT observed a pattern common across higher education: AI usage expanded faster than institutional frameworks could adapt to it.

Students, faculty, and researchers were already incorporating AI into coursework, experimentation, and research, but this usage sat largely outside formal infrastructure.

One of the earliest signals was unequal access. Advanced AI capabilities were increasingly gated behind personal subscriptions, creating disparities between students who could afford premium tools and those who could not. In an educational context, this directly conflicted with the principle of equal opportunity.

At the same time, institutional visibility eroded. AI tools were selected individually, APIs were integrated informally into assignments and projects, and data processing locations varied by provider. This made it difficult to understand where data was being processed, under which terms, and with what long-term implications for privacy and compliance.

Cost control emerged as another structural challenge. With subscriptions and API usage distributed across personal accounts, reimbursements, and free tiers, the institution had no consolidated view of AI spend. Planning, forecasting, and assessing the educational value of that spend became nearly impossible.

Finally, there was a pedagogical gap. Consumer-facing AI tools abstract away system behavior, model differences, and operational trade-offs. For an ICT-focused institution, this meant students were using powerful systems without visibility into how those systems are governed, evaluated, or integrated in professional environments.

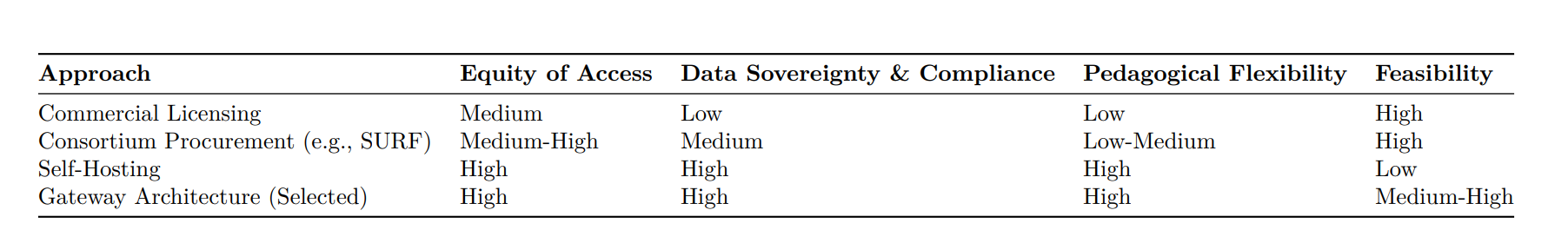

Evaluating the options for institutional AI adoption

Once it was clear that informal AI usage was not sustainable, Fontys ICT evaluated several approaches already common in higher education. Each offered partial relief, but none fully addressed the combination of equity, control, and adaptability the institution required.

Institution-wide commercial subscriptions promised simplicity and familiarity. However, at institutional scale they were costly, locked the university into single-vendor roadmaps, and offered limited transparency into data handling and model behavior.

Organizations such as SURF negotiate bulk deals with AI providers on behalf of multiple institutions, securing better prices through collective purchasing power. However, centrally negotiated agreements reduced the institution's flexibility and its capacity to innovate with new models and providers.

Self-hosting open-source models offered maximum sovereignty and control. In practice, however, the operational burden of maintaining GPU infrastructure, managing model lifecycles, and keeping pace with frontier capabilities would have diverted resources away from education and research. It also risked isolating students from the models they would encounter in professional environments.

After assessing these paths, Fontys ICT concluded that no single approach could meet its requirements on its own. What the institution needed was a way to combine model diversity with institutional control, without locking into a single vendor, deployment model, or governance posture.

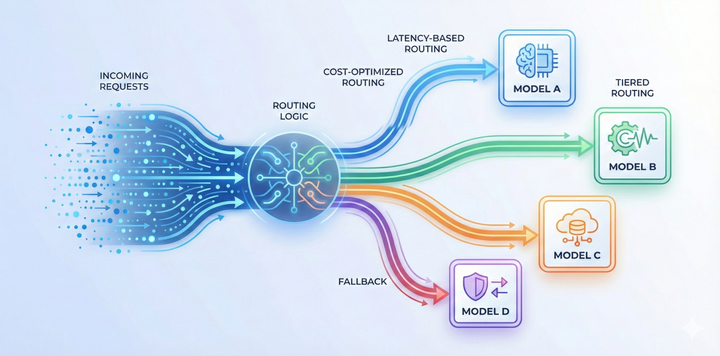

This evaluation led to the selection of a gateway architecture: an approach that could integrate multiple providers and hosting environments, while allowing the institution to centrally define and enforce access policies, routing preferences, budgets, and documentation.

The solution: a gateway-based AI platform

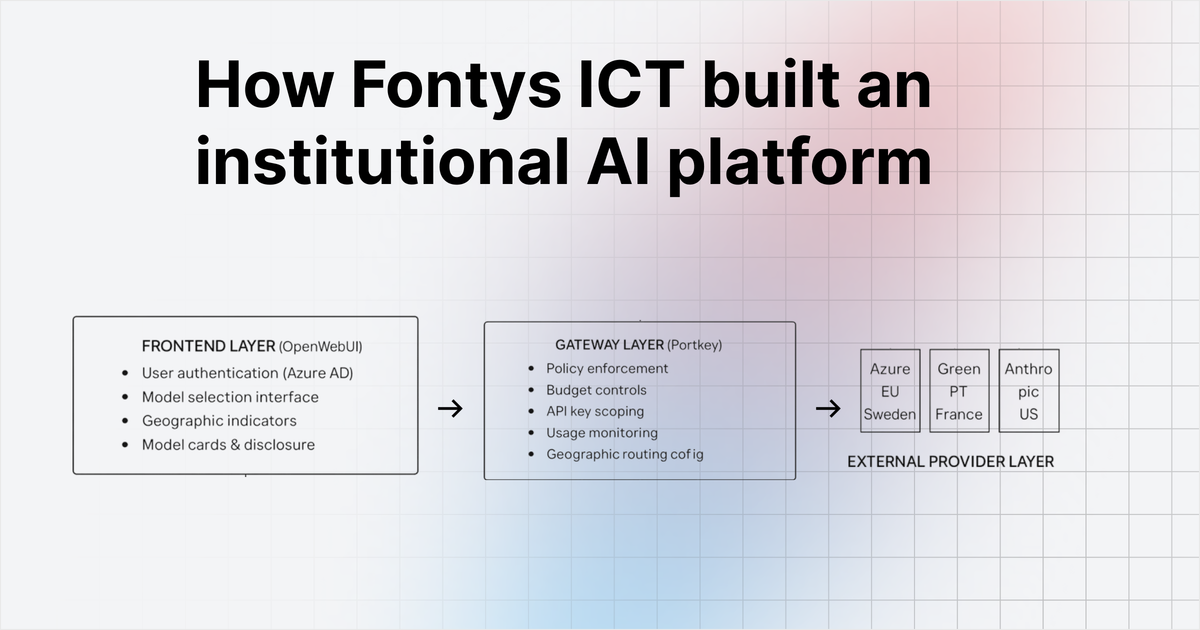

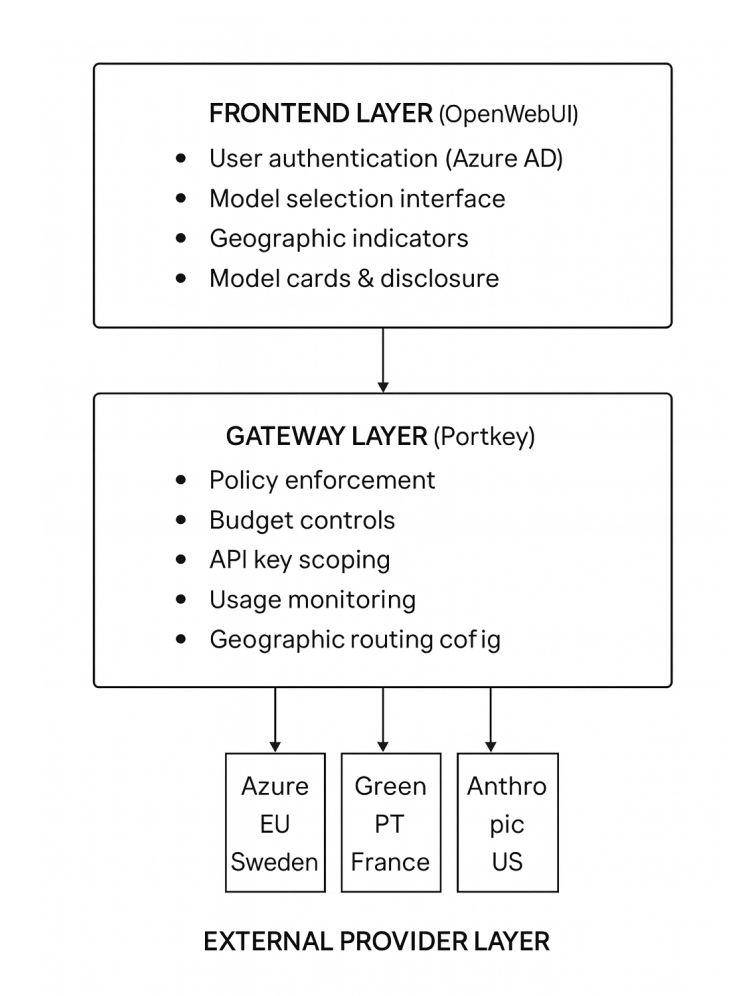

Fontys ICT designed its institutional AI platform around a simple principle: separate user experience, governance, and model providers, so each can evolve independently without compromising institutional control.

The result was a gateway-based architecture with three distinct layers.

This structure allowed Fontys ICT to expose a wide range of AI capabilities while ensuring that access, cost, data flows, and compliance were centrally defined and technically enforced.

The three layers are:

- A frontend layer that provides a familiar, ChatGPT-style interface tied to institutional identity.

- A gateway layer that translates institutional policy into enforceable rules around access, routing, and budgets.

- A provider layer that connects to a curated set of commercial, open-source, and self-hosted models.

Rather than embedding governance logic into user interfaces or provider integrations, Fontys ICT treated governance as infrastructure.

Decisions about which models are available, where data is processed, and who can use what are encoded once at the gateway and applied consistently across the platform.

This architecture does not eliminate choice. Students and researchers can still work with multiple model families and providers. What changes is that every interaction happens within a framework the institution understands, controls, and can stand behind.

For the frontend, Fontys ICT deliberately avoided building a custom interface from scratch. Adoption mattered, and students and staff already had clear expectations shaped by mainstream AI tools. The goal was not novelty, but familiarity with institutional guardrails.

Fontys ICT selected OpenWebUI as the frontend layer. It provided a ChatGPT-style experience while remaining flexible enough to integrate tightly with the underlying gateway.

The interface is connected directly to institutional identity systems through single sign-on. Students, faculty, researchers, and staff access the platform using their existing credentials, and permissions are derived from organizational and course group memberships.
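Deriving permissions from group memberships can be sketched as a lookup from groups to model allow-lists. This is a minimal illustration under assumptions: the group names, model IDs, and the `GROUP_POLICIES` table are hypothetical, and this is not Fontys ICT's configuration or OpenWebUI's API.

```python
# Hypothetical mapping from SSO group names to the model IDs each group may use.
GROUP_POLICIES: dict[str, set[str]] = {
    "all-students": {"eu-general-chat"},
    "course-ai-engineering": {"eu-general-chat", "eu-open-source-lab"},
    "research-staff": {"eu-general-chat", "eu-open-source-lab", "frontier-non-eu"},
}


def allowed_models(groups: list[str]) -> set[str]:
    """Return the union of model IDs granted by the user's group memberships."""
    permitted: set[str] = set()
    for group in groups:
        # Groups without a policy grant nothing, so access is deny-by-default.
        permitted |= GROUP_POLICIES.get(group, set())
    return permitted


# Groups would come from the identity provider's claims after single sign-on.
print(sorted(allowed_models(["all-students", "course-ai-engineering"])))
# ['eu-general-chat', 'eu-open-source-lab']
```

Taking the union of group grants means a student enrolled in an AI-focused course gains extra models without any per-user configuration.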

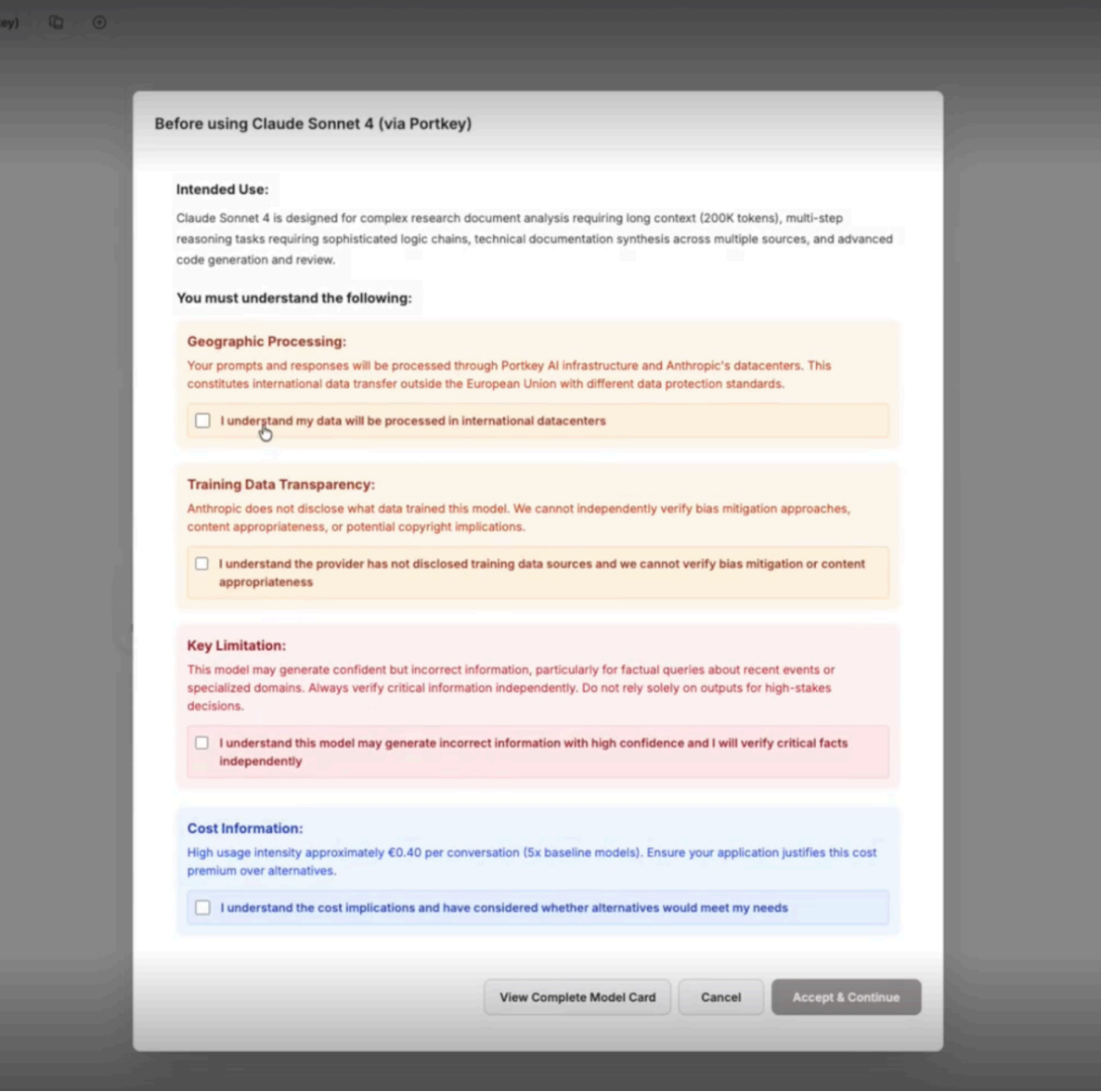

Model choice is intentionally explicit. Instead of routing requests invisibly in the background, users select a model at the start of each session from the set they are authorized to use.

Each option surfaces key context, such as hosting location, relative cost, and intended use, so that model selection becomes a conscious decision rather than an implementation detail.
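A model catalog that carries this context alongside each entry might look like the sketch below. The `ModelOption` fields, model IDs, and cost tiers are hypothetical examples chosen to mirror the description above, not the platform's actual data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    model_id: str
    hosting_location: str  # shown to the user, e.g. "EU (Sweden)" or "US"
    relative_cost: str     # coarse tier rather than exact pricing: "low", "medium", "high"
    intended_use: str


# Hypothetical catalog entries; real names, locations, and tiers would differ.
CATALOG = [
    ModelOption("eu-general-chat", "EU (Sweden)", "low", "everyday writing and coding help"),
    ModelOption("eu-open-source-lab", "EU (Netherlands)", "low", "experimentation with open models"),
    ModelOption("frontier-non-eu", "US", "high", "advanced research tasks"),
]


def session_choices(authorized: set[str]) -> list[str]:
    """Build the labels a user sees when picking a model at the start of a session."""
    return [
        f"{m.model_id} | hosted: {m.hosting_location} | cost: {m.relative_cost} | use: {m.intended_use}"
        for m in CATALOG
        if m.model_id in authorized
    ]


for label in session_choices({"eu-general-chat", "frontier-non-eu"}):
    print(label)
```

Only models the user is authorized for are listed, and each label states where the request would be processed before the session starts.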

This design lowers the barrier to adoption by matching familiar interaction patterns, while reinforcing educational outcomes. Students are exposed to the reality that AI systems differ in capability, cost, and risk, and that choosing between them is part of professional practice.

If the frontend is where users interact, the AI gateway is where Fontys ICT asserts institutional control. This layer translates governance decisions into technical enforcement, ensuring that access rules, budgets, and routing preferences are applied consistently, without relying on individual judgment.

Fontys ICT selected Portkey as the gateway layer after evaluating custom-built and alternative commercial options. The gateway abstracts provider-specific APIs and authentication, while allowing Fontys ICT to define institutional rules once and enforce them everywhere. These rules include:

- Who can access which models, based on role, group, or project

- How much can be spent, with budgets enforced at user, model, and institutional levels

- Where requests are routed, prioritizing EU-hosted infrastructure by default

- What is logged, enabling auditability and cost attribution without capturing prompt content
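The budget and logging rules above can be illustrated with a small ledger that checks user, model, and institutional limits together and records only request metadata. This is a hypothetical sketch, not Portkey's implementation: it assumes a request's cost in euros is known (or estimated) before it is charged, and the class and field names are invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Budget:
    limit_eur: float
    spent_eur: float = 0.0

    def can_afford(self, cost_eur: float) -> bool:
        return self.spent_eur + cost_eur <= self.limit_eur


@dataclass
class BudgetLedger:
    institution: Budget
    per_user: dict[str, Budget] = field(default_factory=dict)
    per_model: dict[str, Budget] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def charge(self, user_id: str, model_id: str, cost_eur: float) -> bool:
        """Charge a request only if the user, model, and institutional budgets all allow it."""
        levels = [self.per_user.get(user_id), self.per_model.get(model_id), self.institution]
        # A missing user or model budget counts as "not permitted" (deny-by-default).
        allowed = all(b is not None and b.can_afford(cost_eur) for b in levels)
        if allowed:
            for b in levels:
                b.spent_eur += cost_eur
        # Record metadata for attribution and audit; prompt content is never stored.
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "model_id": model_id,
            "cost_eur": cost_eur,
            "allowed": allowed,
        })
        return allowed


ledger = BudgetLedger(
    institution=Budget(limit_eur=1000.0),
    per_user={"student-42": Budget(limit_eur=5.0)},
    per_model={"frontier-non-eu": Budget(limit_eur=200.0)},
)
print(ledger.charge("student-42", "frontier-non-eu", 3.0))  # True
print(ledger.charge("student-42", "frontier-non-eu", 3.0))  # False: exceeds the 5.0 user budget
```

Because every level must pass, the tightest applicable limit wins, and rejected requests are still logged so attribution stays complete.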

Rather than distributing raw provider credentials, the platform issues scoped gateway keys. Each key carries explicit permissions and spending limits, preventing uncontrolled usage and making every request attributable to the correct user or group.
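Scoped keys can be sketched as random tokens bound to an owner, a model allow-list, and a spend cap, while the real provider credentials stay on the gateway side. The `ScopedKey` structure, the `gw-` prefix, and the in-memory store are hypothetical and do not reflect Portkey's actual key format or API.

```python
import secrets
from dataclasses import dataclass


@dataclass
class ScopedKey:
    key: str
    owner: str                     # user or group every request is attributed to
    allowed_models: frozenset[str]
    spend_limit_eur: float
    spent_eur: float = 0.0


# In-memory store standing in for the gateway's key database.
_KEYS: dict[str, ScopedKey] = {}


def issue_key(owner: str, models: set[str], spend_limit_eur: float) -> ScopedKey:
    """Issue a random key bound to one owner, a model allow-list, and a spend cap."""
    scoped = ScopedKey(
        key="gw-" + secrets.token_urlsafe(24),
        owner=owner,
        allowed_models=frozenset(models),
        spend_limit_eur=spend_limit_eur,
    )
    _KEYS[scoped.key] = scoped
    return scoped


def authorize(key: str, model_id: str, cost_eur: float) -> str:
    """Check scope and spend for one request and return the owner it is attributed to."""
    scoped = _KEYS.get(key)
    if scoped is None:
        raise PermissionError("unknown gateway key")
    if model_id not in scoped.allowed_models:
        raise PermissionError(f"key is not scoped for model {model_id!r}")
    if scoped.spent_eur + cost_eur > scoped.spend_limit_eur:
        raise PermissionError("spend limit for this key reached")
    scoped.spent_eur += cost_eur
    return scoped.owner


k = issue_key("group:course-ai-engineering", {"eu-general-chat"}, spend_limit_eur=50.0)
print(authorize(k.key, "eu-general-chat", 0.02))  # group:course-ai-engineering
```

A leaked gateway key exposes at most its own models and remaining budget, which is the practical advantage over handing out raw provider credentials.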

The gateway also gave Fontys ICT operational flexibility. New models and providers could be added through configuration rather than code changes. Routing could be adjusted when performance or risk profiles shifted. Budget ceilings could be refined based on real usage data, not estimates.
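Adding models "through configuration rather than code changes" can be pictured as building the model registry from a configuration document. The JSON schema, provider labels, and budget values below are illustrative assumptions, not the platform's actual configuration format.

```python
import json

# Hypothetical configuration; the schema and values are illustrative only.
CONFIG = """
{
  "models": [
    {"id": "eu-general-chat", "provider": "azure-eu", "region": "sweden", "monthly_budget_eur": 500},
    {"id": "eu-open-source-lab", "provider": "onprem-nl", "region": "netherlands", "monthly_budget_eur": 100}
  ]
}
"""


def load_registry(config_text: str) -> dict[str, dict]:
    """Build the model registry from configuration rather than from code."""
    entries = json.loads(config_text)["models"]
    return {entry["id"]: entry for entry in entries}


registry = load_registry(CONFIG)
print(sorted(registry))  # ['eu-general-chat', 'eu-open-source-lab']
```

In this shape, adding a provider or refining a budget ceiling means editing one entry and reloading, while the gateway code itself stays unchanged.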

Most importantly, governance moved from guidance to enforcement. EU-first routing, access restrictions for high-risk models, and spend limits are not recommendations surfaced in documentation; they are defaults enforced by the infrastructure itself.

Rather than committing to a single vendor or deployment model, the institution curated a portfolio of AI models spanning commercial providers, EU-hosted infrastructure, and self-operated systems.

EU-hosted models form the default baseline. Fontys ICT integrated models served through Azure AI Foundry, ensuring that commonly used models run in European datacenters, primarily in Sweden and Germany. This allowed most student and staff usage to stay within European jurisdiction without sacrificing capability.
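EU-first routing can be expressed as an ordered preference: pick the first healthy EU deployment, and consider non-EU deployments only when that is explicitly allowed. This is a hypothetical sketch; the deployment names and the health flag are assumptions, and it does not describe how Azure AI Foundry or the gateway actually select endpoints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    name: str
    region: str        # e.g. "sweden", "germany", "us"
    in_eu: bool
    healthy: bool = True


def route(deployments: list[Deployment], allow_non_eu: bool = False) -> Deployment:
    """Pick the first healthy EU deployment; fall back to non-EU only if explicitly allowed."""
    for d in deployments:
        if d.in_eu and d.healthy:
            return d
    if allow_non_eu:
        for d in deployments:
            if d.healthy:
                return d
    raise RuntimeError("no permitted deployment available")


# List order expresses preference: Sweden first, then Germany.
deployments = [
    Deployment("chat-sweden", "sweden", in_eu=True, healthy=False),
    Deployment("chat-germany", "germany", in_eu=True),
    Deployment("chat-us", "us", in_eu=False),
]
print(route(deployments).name)  # chat-germany
```

With `allow_non_eu` defaulting to `False`, cross-border processing can only happen when a caller opts in, which matches the idea of EU-first as an enforced default.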

To support sovereignty-first use cases and hands-on experimentation, Fontys ICT also exposed open-source models hosted on European infrastructure via GreenPT and institution-operated hardware in the Netherlands.

Frontier commercial models were included where pedagogical or research value justified it. Providers such as Anthropic and OpenAI remained important for curriculum relevance and advanced research, even when EU hosting was not available. In those cases, the platform flags non-EU processing clearly and requires explicit user acknowledgment before access.
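The acknowledgment requirement for non-EU models can be sketched as a gate that lets EU-hosted models through and blocks non-EU models until the user has confirmed. The class is hypothetical, and it assumes one acknowledgment per user and model is remembered; a real platform might instead ask per session. Access permissions would still be checked separately.

```python
from dataclasses import dataclass, field


@dataclass
class AcknowledgmentGate:
    non_eu_models: set[str]
    # (user_id, model_id) pairs for which the user has acknowledged non-EU processing.
    acknowledged: set[tuple[str, str]] = field(default_factory=set)

    def acknowledge(self, user_id: str, model_id: str) -> None:
        self.acknowledged.add((user_id, model_id))

    def may_use(self, user_id: str, model_id: str) -> bool:
        """EU-hosted models pass; non-EU models require a recorded acknowledgment."""
        if model_id not in self.non_eu_models:
            return True
        return (user_id, model_id) in self.acknowledged


gate = AcknowledgmentGate(non_eu_models={"frontier-non-eu"})
print(gate.may_use("researcher-7", "frontier-non-eu"))  # False
gate.acknowledge("researcher-7", "frontier-non-eu")
print(gate.may_use("researcher-7", "frontier-non-eu"))  # True
```

Storing the acknowledgment as data also leaves a record that the cross-border processing was intentional.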

What six months of operating the platform revealed

Operating the institutional AI platform for six months with around 300 users surfaced a set of practical, operational learnings that went beyond the initial technical design.

- AI governance is continuous, not a one-time setup. New models, provider updates, and changing performance characteristics required ongoing evaluation, which turned governance into an operational responsibility.

- EU-first routing works when it is enforced by default. Most usage stayed within EU-hosted infrastructure when routing was handled centrally. When non-EU models were required, explicit disclosure and access controls ensured that cross-border processing was intentional and documented.

- Budget controls shape responsible usage. Spend limits did more than prevent overruns. They encouraged users to think critically about model choice, cost-performance trade-offs, and whether premium models were necessary for a given task.

- Access decisions benefit from clear ownership. During the pilot, access approvals and exceptions were handled informally by a small technical team. This worked at pilot scale, but it showed that responsibility needs to be clearly assigned as usage grows.

- Visibility changes institutional posture. Centralized logging and attribution made AI usage understandable at an institutional level, enabling informed decisions about costs, access policies, and future expansion.

- Governance cannot remain implicit at scale. Once AI is offered as shared infrastructure, decisions about access, routing, and budgets become institutional commitments rather than individual choices.
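The visibility that centralized logging provides can be illustrated by aggregating metadata-only log entries by group or model. The entries below are invented values for illustration, not figures from the pilot, and costs are kept in integer euro cents to avoid floating-point rounding in the totals.

```python
from collections import defaultdict

# Invented example entries for illustration only, not figures from the pilot.
# Each entry holds metadata only; no prompt or response text is logged.
LOG = [
    {"group": "course-ai-engineering", "model_id": "eu-general-chat", "cost_cents": 40},
    {"group": "course-ai-engineering", "model_id": "frontier-non-eu", "cost_cents": 110},
    {"group": "research-staff", "model_id": "frontier-non-eu", "cost_cents": 250},
]


def spend_by(log: list[dict], key: str) -> dict[str, int]:
    """Total spend (in euro cents) grouped by any metadata field, e.g. group or model."""
    totals: defaultdict[str, int] = defaultdict(int)
    for entry in log:
        totals[entry[key]] += entry["cost_cents"]
    return dict(totals)


print(spend_by(LOG, "group"))     # {'course-ai-engineering': 150, 'research-staff': 250}
print(spend_by(LOG, "model_id"))  # {'eu-general-chat': 40, 'frontier-non-eu': 360}
```

The same attribution fields that enforce budgets also answer institutional questions, such as which courses rely most on premium models.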

Institutional AI without losing control

Fontys ICT’s pilot showed that universities do not need to choose between innovation and governance. By treating AI as shared infrastructure, the institution was able to offer advanced capabilities while retaining control over access, data flows, and costs.

The gateway-based approach made governance enforceable instead of aspirational. Institutional values around equity, transparency, and compliance were encoded directly into the platform’s operation, not layered on as documentation or policy after the fact.

Portkey helps universities, research institutions, and public-sector organizations build governed, multi-provider AI platforms. With centralized access control, EU-first routing, budget enforcement, and full usage visibility, Portkey enables institutions to adopt AI without losing oversight or accountability.

To explore how Portkey can support your institutional AI strategy, book a demo.