How to add enterprise controls to OpenWebUI: cost tracking, access control, and more

Learn how to add enterprise features like cost tracking, access control, and observability to your OpenWebUI deployment using Portkey.

OpenWebUI is a powerful, open-source interface for interacting with large language models (LLMs). It’s lightweight, flexible, and works well with a range of model providers, making it a popular choice for organizations building internal tooling or deploying centralized AI interfaces.

For IT administrators and platform teams rolling out centralized instances of OpenWebUI, however, keeping track of costs, usage, and audit trails while enforcing security is challenging.

In this blog, we’ll walk through:

- Why enterprise governance matters for AI interfaces like OpenWebUI

- The specific capabilities needed around observability, cost tracking, and security

- How to add these controls with minimal overhead

Why governance matters for OpenWebUI deployments

As OpenWebUI usage expands across teams, enterprise needs quickly go beyond just model access. Admins and platform teams start asking:

- Who’s using which models?

- How much are we spending on LLM calls?

- Are we staying within budget across teams?

- Can we restrict access to specific providers?

- Do we have audit trails for compliance?

Common enterprise requirements

When LLM usage scales inside an organization, the platform team typically needs to enforce certain standards across all users and tools, including those using OpenWebUI. Here are the most common enterprise requirements we see:

1. Cost management

Track spending across teams and use cases, set monthly budgets, and avoid surprise overruns. Attribution by project or API key is essential for accountability.

2. Access control

Restrict which teams can use specific models or providers (e.g., allow only R&D to access GPT-4, or only internal users to call Claude via Bedrock). Enforce routing rules based on API keys or metadata.

3. Usage analytics

Observe how different teams use LLMs (volume, latency, provider breakdowns, and prompt patterns) to inform optimizations and policy decisions.

4. Security and compliance

Maintain audit trails, enforce input/output guardrails, and ensure only authorized traffic hits external APIs, all while logging usage in a compliant format.

5. Reliability and failover

Prevent downtime or latency spikes by adding retries, fallbacks, and load balancing logic across model endpoints.

These are the building blocks of an enterprise-grade AI infrastructure, and they can be layered onto OpenWebUI through an AI gateway like Portkey.

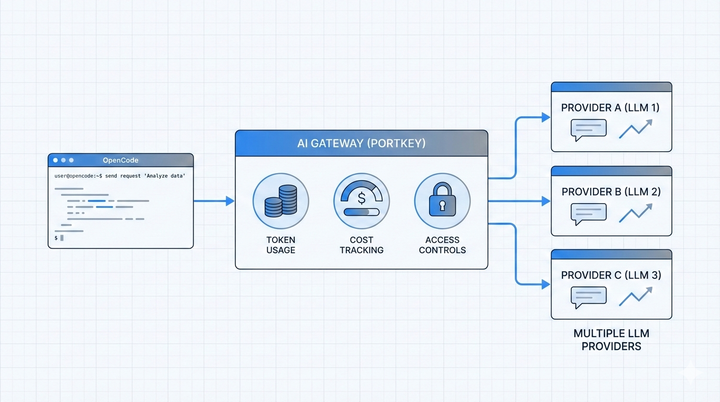

How Portkey solves this

Portkey sits between your OpenWebUI deployment and your model providers as a centralized AI gateway, adding enterprise-grade features like observability, cost tracking, and governance.

1. Cost tracking and budget controls

With Portkey, every LLM call is automatically logged with cost metadata. You can:

- Track spending by team, project, or API key

- Set monthly budget limits to prevent overruns

- View cost breakdowns by provider or model

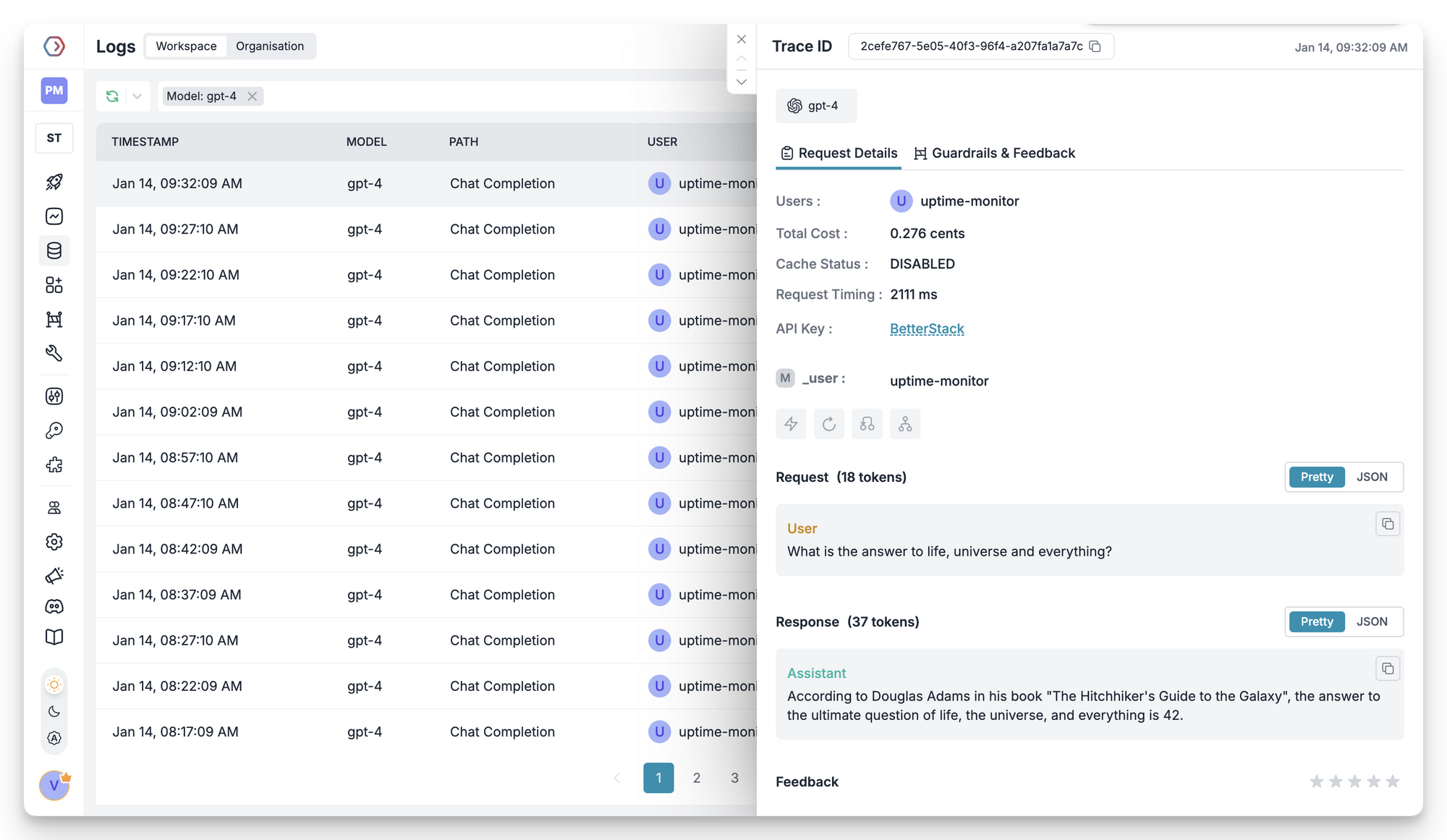
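To make the budget idea concrete, here is a minimal Python sketch of per-team cost attribution with a monthly limit. It is not Portkey's implementation: the `BudgetTracker` class, the team name, and the per-1K-token prices are all hypothetical, chosen only to show the arithmetic a gateway has to do on every call.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class BudgetTracker:
    """Accumulates per-team spend and flags teams that reach a monthly limit."""

    monthly_limits_usd: dict[str, float]
    spend_usd: dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, team: str, prompt_tokens: int, completion_tokens: int,
               usd_per_1k_prompt: float, usd_per_1k_completion: float) -> float:
        """Add the cost of one call to the team's running total and return it."""
        cost = (prompt_tokens / 1000) * usd_per_1k_prompt \
            + (completion_tokens / 1000) * usd_per_1k_completion
        self.spend_usd[team] += cost
        return cost

    def is_over_budget(self, team: str) -> bool:
        """True once recorded spend reaches the limit; teams with no limit never trip."""
        limit = self.monthly_limits_usd.get(team)
        return limit is not None and self.spend_usd[team] >= limit
```

A gateway would call something like `record()` after every response and consult `is_over_budget()` before forwarding the next request, which is why attribution has to happen per call rather than in a month-end report.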

2. Advanced observability and analytics

Portkey provides detailed logs and dashboards to track:

- Prompt/response pairs

- Latency and error rates

- Token usage

- Provider-level metrics

All requests can be tagged with metadata like user ID, team, route name, or use case — making it easy to debug and analyze trends across your org.
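As an illustration of why metadata tags matter, the sketch below groups request logs by a tag and computes a few of the metrics listed above. The log shape (`metadata`, `latency_ms`, `status`, `total_tokens`) and the `summarize_by` helper are assumptions made for this example, not Portkey's log schema.

```python
from collections import defaultdict
from statistics import mean


def summarize_by(logs: list[dict], key: str) -> dict[str, dict]:
    """Group request logs by a metadata key and compute simple per-group stats."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for entry in logs:
        # Requests missing the tag are grouped under "untagged" rather than dropped.
        groups[entry.get("metadata", {}).get(key, "untagged")].append(entry)

    return {
        name: {
            "requests": len(entries),
            "avg_latency_ms": mean(e["latency_ms"] for e in entries),
            "error_rate": sum(e["status"] >= 400 for e in entries) / len(entries),
            "total_tokens": sum(e["total_tokens"] for e in entries),
        }
        for name, entries in groups.items()
    }
```

Calling `summarize_by(logs, "team")` and then `summarize_by(logs, "use_case")` on the same logs gives two different views without re-instrumenting anything, which is the practical benefit of tagging at request time.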

3. Access control and routing

With Portkey, you can define access rules at the route or API key level:

- Define which teams or workspaces can access integrations. Set granular budgets and enforce rate limits at the workspace level to ensure responsible usage and prevent overruns.

- Choose which models from each integration are available to teams.
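Conceptually, these rules boil down to a deny-by-default allowlist. Here is a small sketch of that check; the `POLICY` table, the workspace names, and the model identifiers are hypothetical and only stand in for whatever your gateway stores.

```python
# Hypothetical policy table: workspace -> integration -> allowed models.
POLICY: dict[str, dict[str, set[str]]] = {
    "research": {"openai": {"gpt-4"}, "bedrock": {"claude"}},
    "support": {"openai": {"gpt-3.5-turbo"}},
}


def is_allowed(workspace: str, integration: str, model: str) -> bool:
    """Deny by default: a request passes only if the model is explicitly listed."""
    return model in POLICY.get(workspace, {}).get(integration, set())
```

Deny-by-default matters here: an unknown workspace or an unlisted integration yields an empty set, so a new team gets no access until someone grants it.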

4. Reliability and performance

Route requests based on metadata (e.g., send legal queries to Claude, product queries to GPT-4). Portkey also adds production-grade reliability features:

- Retries: Automatically retry failed requests

- Fallbacks: Route to a backup model if the primary fails

- Load balancing: Distribute traffic across multiple endpoints

- Circuit breaker: Configure per-strategy circuit protection and failure handling
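To show how retries and fallbacks combine, here is a minimal sketch of the control flow. It is a generic illustration rather than Portkey's code: `call_with_fallback` and its parameters are assumed names, and each target is simply a Python callable standing in for a model endpoint.

```python
import time
from typing import Callable, Optional, Sequence


def call_with_fallback(
    targets: Sequence[Callable[[str], str]],
    prompt: str,
    attempts_per_target: int = 2,
    backoff_seconds: float = 0.0,
) -> str:
    """Try each target in order, retrying a failing target before moving on."""
    last_error: Optional[Exception] = None
    for target in targets:
        for attempt in range(attempts_per_target):
            try:
                return target(prompt)
            except Exception as exc:
                # Simplification: a real gateway would retry only on specific errors.
                last_error = exc
                # Exponential backoff between attempts (no wait when backoff is 0).
                time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all targets failed") from last_error
```

With `targets=[primary, backup]`, the primary gets `attempts_per_target` tries before the backup is used at all, so transient errors are absorbed by retries and only persistent failures trigger the fallback.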

5. Security and compliance

Portkey helps enforce enterprise-grade policies:

- Guardrails on inputs and outputs (e.g., profanity filters, data validation)

- JWT token validation for authentication

- Full audit logs for every request

- SOC 2, GDPR, HIPAA, and ISO 27001 compliance

6. Caching for efficiency

You can configure Portkey to cache LLM responses for repeated inputs, reducing cost and latency, especially for expensive models or repeat queries.
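For intuition, exact-match response caching can be sketched in a few lines. This is an assumption-laden illustration, not how Portkey implements its cache: the `ResponseCache` class is hypothetical, it keeps entries in memory with no expiry, and `call` stands in for the real request to a model provider.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """Exact-match cache keyed on the model name and the full message list."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def _key(model: str, messages: list[dict]) -> str:
        # sort_keys makes the key independent of dict key ordering.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, messages: list[dict],
                    call: Callable[[str, list[dict]], str]) -> str:
        """Return the cached response if present; otherwise call and store it."""
        key = self._key(model, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self._store[key] = call(model, messages)
        return self._store[key]
```

Because the key includes the model name, the same prompt sent to two different models is cached separately, and only byte-for-byte identical inputs count as repeats.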

OpenWebUI is now enterprise-ready

As OpenWebUI sees broader adoption across teams, enterprises need more visibility, control, and governance around how LLMs are being used. Cost tracking, access control, usage analytics, and compliance are critical for safe and scalable deployment.

Portkey helps platform and IT teams layer these capabilities onto any OpenWebUI setup without changing the user experience. With a single gateway, you can enforce policies, track usage, and optimize performance across every LLM call.

If you're deploying OpenWebUI in a centralized or shared environment, Portkey adds the enterprise foundation you need to support it at scale.

You can set up Portkey in minutes and start tracking usage, managing access, and controlling LLM costs without changing your existing setup. If you'd prefer, we can also give you a detailed walkthrough.