Using an MCP (Model Context Protocol) gateway to unify context across multi-step LLM workflows

Learn how an MCP gateway can solve security, observability, and integration challenges in multi-step LLM workflows, and why it’s essential for scaling MCP in production.

Model Context Protocol (MCP) is rapidly becoming the backbone for managing context in complex LLM workflows. As adoption grows across agent frameworks and enterprise-grade AI systems, MCP is helping standardize how context is passed, updated, and reused across steps.

While MCP brings structure, most current implementations remain fragmented: split across individual components, managed manually, and inconsistent across tools and models.

The problem: What’s missing in current MCP implementations

Most MCP setups are incomplete. They often focus only on the schema or protocol layer, leaving key operational needs like security, observability, and standardization to be solved ad hoc. This results in brittle, unscalable systems.

Lack of security and governance

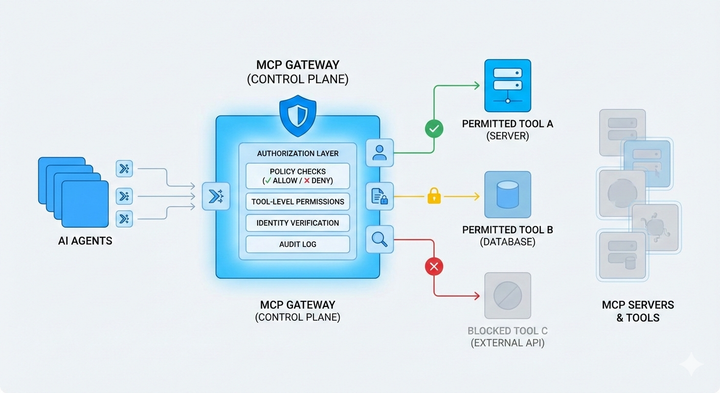

Most teams implementing MCP don’t have a centralized way to manage who can access what, and how much they can use it, across models, tools, and data sources.

- There's no built-in access control to restrict which agents, users, or services can access specific context slices, tools, or APIs.

- Even when access is granted, there's no mechanism to limit usage, such as rate limits, tool quotas, or role-based constraints.

- Audit logging is inconsistent or missing, making it hard to answer basic questions like: Which tool was invoked? What context did it see? Who triggered the call?

- Without clear enforcement points, compliance becomes a patchwork, with no guarantees around data residency, privacy boundaries, or usage policies.

Fragmented APIs and tool integration

Even with MCP in place, workflows often require developers to manually wire up multiple systems.

Tool interfaces vary widely. Each tool exposes its own input/output formats, protocols, or invocation methods, and this lack of standardization adds significant integration overhead and makes orchestration brittle.

Enterprises also have legacy or internally developed tools that were never designed to work within modern LLM pipelines. Plugging these into a context-aware workflow typically requires custom setup, which slows development and increases maintenance costs.

The result is a growing tangle of glue code and one-off solutions that undermine the scalability MCP is meant to provide.

No observability or cost visibility

Once you start chaining multiple tools, models, and context updates, visibility drops off a cliff. There's often no end-to-end trace showing how context evolved from the initial request through every tool and model step. Teams also rarely have an easy way to pinpoint performance bottlenecks, such as which tool or model is slowing down a workflow.

Cost tracking is similarly disjointed. LLM usage might be monitored, but tool costs, retries, and failovers are rarely accounted for in one place.

These gaps pose real risks for enterprises trying to operationalize LLMs or adopt MCP. To move from experimentation to production, teams need more than a protocol. They need a platform.

That’s where the MCP gateway comes in.

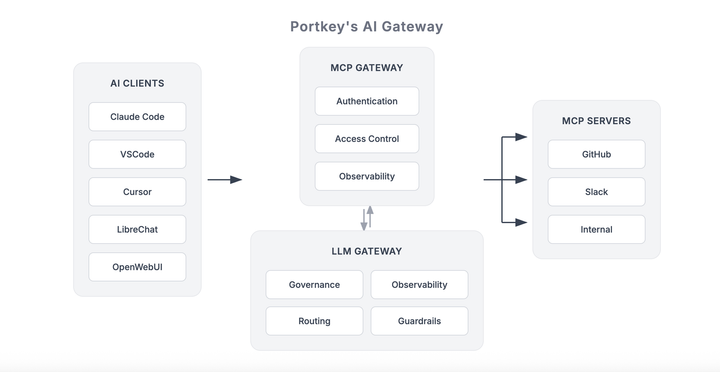

The role of MCP gateway in your LLM stack

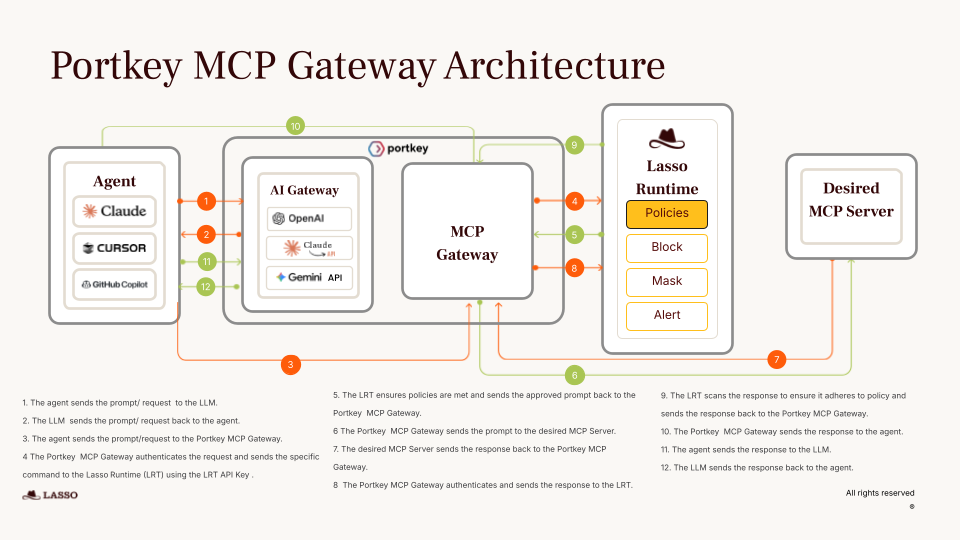

An MCP gateway addresses the operational gaps in today’s MCP implementations by acting as a centralized control plane for context-aware workflows. Instead of stitching together models, tools, memory, and governance in separate layers, an MCP gateway provides a unified way to manage context, security, observability, and tooling while remaining protocol-compliant.
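Concretely, "protocol-compliant" means the gateway speaks the same messages as any MCP server. MCP uses JSON-RPC 2.0. Clients discover tools with `tools/list` and invoke one with `tools/call`, passing the tool `name` and its `arguments`. Results come back as a list of `content` items plus an `isError` flag. The sketch below is a minimal in-process illustration, not a production gateway. `ToolRegistry` is a hypothetical name, and plain Python callables stand in for the upstream MCP servers that a real gateway would reach over stdio or HTTP.

```python
import json
from typing import Any, Callable, Dict


class ToolRegistry:
    """Hypothetical gateway-side registry mapping tool names to handlers.

    Each handler stands in for an upstream MCP server (or a wrapped legacy
    tool); here it is just a Python callable that receives the call's arguments.
    """

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def register(self, name: str, description: str,
                 input_schema: Dict[str, Any],
                 handler: Callable[..., Any]) -> None:
        # Store the MCP-style tool definition alongside its handler.
        self._tools[name] = {
            "definition": {"name": name, "description": description,
                           "inputSchema": input_schema},
            "handler": handler,
        }

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Answer a JSON-RPC 2.0 request for tools/list or tools/call."""
        req_id = request.get("id")
        method = request.get("method")
        if method == "tools/list":
            tools = [t["definition"] for t in self._tools.values()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            params = request.get("params", {})
            entry = self._tools.get(params.get("name"))
            if entry is None:
                # Unknown tools are reported as a JSON-RPC protocol error.
                return {"jsonrpc": "2.0", "id": req_id,
                        "error": {"code": -32602,
                                  "message": f"Unknown tool: {params.get('name')}"}}
            try:
                output = entry["handler"](**params.get("arguments", {}))
                result = {"content": [{"type": "text", "text": str(output)}],
                          "isError": False}
            except Exception as exc:
                # Tool failures are reported in-band with isError set.
                result = {"content": [{"type": "text", "text": str(exc)}],
                          "isError": True}
            return {"jsonrpc": "2.0", "id": req_id, "result": result}
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": f"Method not found: {method}"}}


# Usage: register a trivial tool, then invoke it with a tools/call request.
registry = ToolRegistry()
registry.register(
    "add", "Add two integers",
    {"type": "object",
     "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
     "required": ["a", "b"]},
    lambda a, b: a + b,
)
response = registry.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                            "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(json.dumps(response["result"]))
# → {"content": [{"type": "text", "text": "5"}], "isError": false}
```

Every tool, whether an external API or a wrapped internal script, is exposed through the same `tools/list` and `tools/call` shapes. That gives the gateway a single interception point for the policy, logging, and metering features listed next.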

- Access control: Define policies on who can access what, at the level of tools, models, or even context fields. Limit not just access, but usage frequency and scope.

- Audit logging: Capture a complete log of tool and model invocations, including what was accessed, who triggered it, and how context was used or modified.

- Compliance enforcement: Enforce data boundaries, residency rules, and org-specific policies around sensitive operations, which is crucial for regulated environments.

- Consistent formats: Inputs and outputs for tools follow a common schema, making chaining and orchestration more predictable and maintainable.

- Plug-and-play support: Whether it's internal tools or external APIs, the gateway makes it easy to register and integrate tools without custom connectors.

- End-to-end tracing: Trace each step of a request (from user input, to model calls, to tool usage, and back) with full visibility into how context changed at each point.

- Tool performance metrics: Monitor latency, errors, retries, and throughput per tool, so bottlenecks and failures don’t stay hidden.

- Cost tracking: Attribute compute and API costs across both LLM usage and external tool invocations, which is essential for managing budgets and optimizing workflows.
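Several of these features can be built as a single wrapper around each tool call. The following is a minimal sketch under stated assumptions, not Portkey's implementation. `GatewayPolicy`, `GovernedGateway`, and the flat per-call price table are hypothetical. The wrapper checks a role-to-tool allow-list and enforces a per-caller sliding-window rate limit. It also measures latency, attributes cost to the caller, and writes one audit record per attempt: who called which tool, with what arguments, and with what outcome.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Any, Callable, Deque, Dict, List, Set


@dataclass
class GatewayPolicy:
    """Hypothetical policy object; field names are illustrative only."""
    allowed_tools: Dict[str, Set[str]]  # role -> tools that role may call
    max_calls: int                      # calls allowed per caller per window
    window_seconds: float               # sliding-window length
    cost_per_call: Dict[str, float] = field(default_factory=dict)  # assumed flat USD price per tool call


class GovernedGateway:
    """Wraps tool callables with access control, rate limiting, audit logging, and cost tracking."""

    def __init__(self, policy: GatewayPolicy, tools: Dict[str, Callable[..., Any]]) -> None:
        self.policy = policy
        self.tools = tools
        self.audit_log: List[Dict[str, Any]] = []
        self.cost_by_caller: Dict[str, float] = defaultdict(float)
        self._recent_calls: Dict[str, Deque[float]] = defaultdict(deque)

    def _within_rate_limit(self, caller: str) -> bool:
        now = time.monotonic()
        window = self._recent_calls[caller]
        # Discard call timestamps that have slid out of the window.
        while window and now - window[0] >= self.policy.window_seconds:
            window.popleft()
        if len(window) >= self.policy.max_calls:
            return False
        window.append(now)
        return True

    def call(self, caller: str, role: str, tool: str, arguments: Dict[str, Any]) -> Any:
        # Every attempt, allowed or not, produces exactly one audit record.
        record: Dict[str, Any] = {"caller": caller, "role": role, "tool": tool,
                                  "arguments": arguments, "timestamp": time.time()}
        if tool not in self.policy.allowed_tools.get(role, set()):
            record["outcome"] = "denied"
            self.audit_log.append(record)
            raise PermissionError(f"role {role!r} may not call {tool!r}")
        if not self._within_rate_limit(caller):
            record["outcome"] = "rate_limited"
            self.audit_log.append(record)
            raise RuntimeError(f"rate limit exceeded for {caller!r}")
        start = time.perf_counter()
        try:
            result = self.tools[tool](**arguments)
            record["outcome"] = "ok"
            return result
        except Exception as exc:
            record["outcome"] = f"error: {exc}"
            raise
        finally:
            # Latency and cost are recorded for every call that passed the policy checks.
            record["latency_ms"] = (time.perf_counter() - start) * 1000
            record["cost_usd"] = self.policy.cost_per_call.get(tool, 0.0)
            self.cost_by_caller[caller] += record["cost_usd"]
            self.audit_log.append(record)


# Usage: an "analyst" may call "search" at most twice per minute.
policy = GatewayPolicy(allowed_tools={"analyst": {"search"}}, max_calls=2,
                       window_seconds=60.0, cost_per_call={"search": 0.002})
gateway = GovernedGateway(policy, {"search": lambda query: f"results for {query}"})
print(gateway.call("alice", "analyst", "search", {"query": "mcp"}))  # results for mcp
gateway.call("alice", "analyst", "search", {"query": "gateway"})
try:
    gateway.call("alice", "analyst", "search", {"query": "third"})  # third call in the window
except RuntimeError as err:
    print(err)  # rate limit exceeded for 'alice'
print([r["outcome"] for r in gateway.audit_log])  # ['ok', 'ok', 'rate_limited']
print(round(gateway.cost_by_caller["alice"], 3))  # 0.004
```

Denied and rate-limited attempts are logged but never reach the tool, so they incur no cost. A production gateway would also persist these records and attach them to a per-request trace rather than keeping them in memory.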

MCP gave us a common language for context. But to run context-aware workflows in production and enforce policies around them, you need infrastructure that brings governance, observability, and tooling together. That’s what an MCP gateway offers.

At Portkey, we’re building a fully managed MCP gateway designed for enterprise LLM stacks. If you’re building multi-step workflows or planning to productionize MCP across teams, reach out for early access.

We’d love to hear what you’re working on!