MCP tool discovery for autonomous LLM agents

A concise summary of the MCP-Zero paper, explaining how active tool discovery enables scalable, autonomous LLM agents while reducing context overhead.

Large language model agents are increasingly expected to work with real systems such as files, APIs, databases, and execution environments through tools. As the number of available tools grows, however, most agent architectures struggle to scale in a clean, autonomous way.

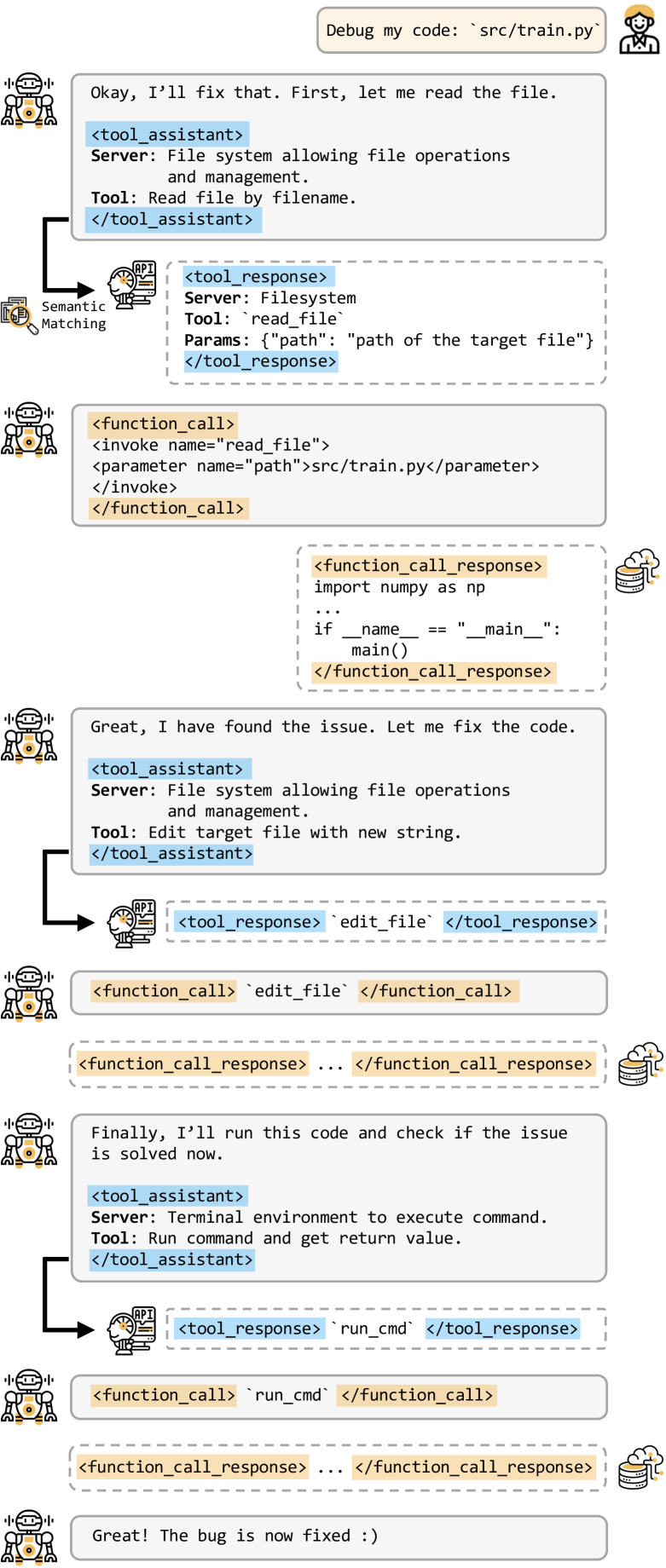

The paper “MCP-Zero: Active Tool Discovery for Autonomous LLM Agents” introduces a different approach to tool use: instead of giving models a fixed set of tools up front, it lets agents actively request the tools they need, when they need them.

At a high level, MCP-Zero reframes tool usage as a capability discovery problem rather than a retrieval or system-prompt injection problem.

That’s why we built the MCP Gateway: a centralized control layer to run MCP-powered agents in production.


Why current tool selection approaches break down

Today, most LLM agents use one of these two patterns for tool access:

- System-prompt injection, where all available tool schemas are included in the prompt

- Retrieval-based selection, where tools are selected once based on the user’s initial query

But these have limitations at scale.

Injecting full tool schemas creates extreme context overhead. Even a single MCP server can require thousands of tokens just to describe its tools, and large MCP ecosystems can exceed 200k tokens in total. This forces models into a passive role, scanning oversized prompts instead of reasoning about the task.
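To make the overhead concrete, here is a rough back-of-the-envelope sketch. Everything in it is an assumption for illustration: the catalog is synthetic, the schema shape is hypothetical, and the "4 characters per token" rule is a crude heuristic rather than a real tokenizer.

```python
import json


def estimate_tokens(text: str) -> int:
    """Crude heuristic (assumption): roughly 4 characters per token."""
    return max(1, len(text) // 4)


def make_schema(server: str, tool: str) -> dict:
    """Build a hypothetical tool schema, loosely shaped like a JSON tool description."""
    return {
        "name": f"{server}.{tool}",
        "description": f"Performs the {tool} operation on the {server} server.",
        "parameters": {
            "type": "object",
            "properties": {
                "target": {"type": "string", "description": "Resource to act on"}
            },
            "required": ["target"],
        },
    }


# Synthetic catalog: 50 servers with 5 tools each (made-up numbers).
servers = [f"server_{i}" for i in range(50)]
tools = ["read", "write", "list", "delete", "search"]
catalog = [make_schema(s, t) for s in servers for t in tools]

# Compare injecting every schema up front with fetching only a few on demand.
full_injection = estimate_tokens(json.dumps(catalog))
on_demand = estimate_tokens(json.dumps(catalog[:3]))

print(f"{len(catalog)} tools injected up front: ~{full_injection} tokens")
print(f"3 tools fetched on demand: ~{on_demand} tokens")
```

Real schemas are usually much longer than these toy ones, so the gap in practice is likely wider than this sketch suggests.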

Retrieval-based approaches reduce prompt size, but they still assume that tool needs are static. Many agent tasks are multi-step, so new requirements emerge as the agent reads files, inspects outputs, or encounters errors. Selecting tools once, based only on the initial user query, often leaves the agent with missing or incorrect tools later in the task.

The key issue is autonomy: in both cases, the agent does not control how it acquires new capabilities.

How's MCP-Zero different?

MCP-Zero starts from a simple shift in responsibility: the agent itself decides when it needs a tool.

Instead of being handed a predefined list of tools, the model is prompted to explicitly declare missing capabilities as they arise during task execution. When the agent realizes it cannot proceed with its own knowledge, it emits a structured tool request that describes:

- the server domain (platform or permission scope)

- the tool operation it needs to perform

This request is generated by the model, not inferred from the user query. That distinction matters. Model-generated requests tend to align much more closely with tool documentation than raw user input, which improves matching accuracy downstream.
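A request with these two fields could be extracted from model output roughly as follows. This is a minimal sketch: the `<tool_request>` tag, the `server:` / `tool:` layout, and the `ToolRequest` class are hypothetical names chosen for illustration, not the format defined in the paper.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolRequest:
    server: str  # platform or permission scope the agent is asking for
    tool: str    # operation the agent needs to perform


# Hypothetical wire format (an assumption, not taken from the paper).
REQUEST_PATTERN = re.compile(
    r"<tool_request>\s*server:\s*(?P<server>.+?)\s*tool:\s*(?P<tool>.+?)\s*</tool_request>",
    re.DOTALL,
)


def parse_tool_request(model_output: str) -> Optional[ToolRequest]:
    """Return the first structured request found in the output, or None."""
    match = REQUEST_PATTERN.search(model_output)
    if match is None:
        return None
    return ToolRequest(
        server=match.group("server").strip(),
        tool=match.group("tool").strip(),
    )


output = """I need to inspect the repository before editing.
<tool_request>
server: local filesystem access
tool: read the contents of a file at a given path
</tool_request>"""

request = parse_tool_request(output)
print(request)
```

Keeping the request in a fixed, parseable shape lets the surrounding system detect it mid-generation and hand the two fields to the matching step separately.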

Importantly, the agent can make multiple tool requests over the course of a single task, rather than being limited to a single selection step at the beginning.

Once the agent issues a tool request, MCP-Zero uses a hierarchical semantic routing process to locate relevant tools efficiently.

This happens in two stages:

- Server-level filtering: The system first matches the requested server domain against MCP server descriptions (and enriched summaries) to narrow the search space.

- Tool-level ranking: Within the selected servers, individual tools are ranked based on semantic similarity between the requested operation and the tool descriptions.

By separating server selection from tool selection, MCP-Zero avoids scanning thousands of tools at once while preserving precision.
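The two stages can be sketched in a few lines. This is an illustration under stated assumptions: the `SERVERS` catalog is invented, and a toy bag-of-words cosine similarity stands in for whatever embedding model and scoring a real system would use.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical catalog: server name -> description and tool descriptions.
SERVERS = {
    "filesystem": {
        "description": "local filesystem access for reading and writing files",
        "tools": {
            "read_file": "read the contents of a file at a path",
            "write_file": "write text to a file at a path",
        },
    },
    "github": {
        "description": "github repository hosting with issues and pull requests",
        "tools": {
            "create_issue": "create a new issue in a repository",
            "list_pull_requests": "list open pull requests in a repository",
        },
    },
}


def route(server_query, tool_query, top_servers=1, top_tools=1):
    # Stage 1: keep only the servers whose description best matches the domain.
    q_server = embed(server_query)
    kept = sorted(
        SERVERS,
        key=lambda name: cosine(q_server, embed(SERVERS[name]["description"])),
        reverse=True,
    )[:top_servers]

    # Stage 2: rank tools, but only within the servers kept in stage 1.
    q_tool = embed(tool_query)
    scored = [
        (cosine(q_tool, embed(desc)), server, tool)
        for server in kept
        for tool, desc in SERVERS[server]["tools"].items()
    ]
    scored.sort(reverse=True)
    return [(server, tool) for _, server, tool in scored[:top_tools]]


print(route("local filesystem access", "read the contents of a file"))
```

The point of the structure is that stage 2 only ever compares against tools from the few servers that survived stage 1, so the number of comparisons stays small even as the catalog grows.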

A key difference between MCP-Zero and prior work is that tool discovery is iterative.

After receiving a tool, the agent evaluates whether it is sufficient for the current subtask. If it is not, the agent can refine its request and trigger another retrieval cycle. This creates a natural feedback loop:

- analyze the task

- request a tool

- use the tool

- reassess what’s missing

This is especially important for multi-step workflows such as debugging code, where tools from different domains (filesystem, code editing, execution) are needed in sequence.
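The feedback loop above can be sketched with a scripted stand-in for the agent. All names here are hypothetical: `REGISTRY` is a made-up tool list, `retrieve` uses simple word overlap instead of semantic search, and the sufficiency check is a plain equality test where a real agent would rely on the model's own judgment.

```python
from typing import Optional

# Hypothetical registry: tool name -> description.
REGISTRY = {
    "read_file": "read a file from the filesystem",
    "edit_code": "apply an edit to source code",
    "run_tests": "execute the test suite",
}


def retrieve(request: str) -> Optional[str]:
    """Stand-in retrieval: pick the tool sharing the most words with the request."""
    words = set(request.lower().split())
    best, best_overlap = None, 0
    for name, desc in REGISTRY.items():
        overlap = len(words & set(desc.split()))
        if overlap > best_overlap:
            best, best_overlap = name, overlap
    return best


def run_task(subtasks, max_refinements=2):
    """Each subtask is (needed_tool, [request phrasings, first attempt first])."""
    trace = []
    for needed, phrasings in subtasks:
        for request in phrasings[: max_refinements + 1]:
            tool = retrieve(request)          # request a tool
            trace.append((request, tool))
            if tool == needed:                # reassess: is it sufficient?
                break                         # yes: use it, move to next subtask
            # no: fall through and try the refined phrasing
    return trace


# Scripted debugging workflow spanning filesystem, editing, and execution.
debug_task = [
    ("read_file", ["read a file"]),
    ("edit_code", ["change the code", "apply an edit to code"]),
    ("run_tests", ["execute the test suite"]),
]

trace = run_task(debug_task)
for request, tool in trace:
    print(f"{request!r} -> {tool}")
```

In this run the vague request "change the code" retrieves the wrong tool, and the refined phrasing recovers the right one, which is the kind of correction a single up-front selection step cannot make.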

What this means for MCP-based agent systems

As the MCP ecosystem grows to hundreds of servers and thousands of tools, the cost of naïvely exposing everything to an agent becomes unsustainable. System-prompt injection does not scale, and retrieval-only approaches still treat agents as passive consumers of tools.

MCP-Zero shows a different path: treat tool discovery as an agent capability, not an external preprocessing step.

MCP-Zero reframes tool usage as an active, agent-driven process rather than a static configuration problem. By allowing agents to request tools on demand, route them efficiently, and refine their capabilities over time, it addresses both the scalability and autonomy limits of existing approaches.

For MCP-based agent systems, this points toward a more sustainable design pattern, where agents stay lightweight, adaptive, and in control of how they extend their own capabilities.

Source: arXiv:2506.01056 [cs.AI]