Model Context Protocol (MCP): Everything You Need to Know to Get Started

Learn how the Model Context Protocol (MCP) standardizes communication between LLMs and external systems and reduces the need for custom integration code. A complete guide with examples.

The AI landscape is facing a critical inflection point. While models like GPT-4.5 and Claude 3.7 Sonnet demonstrate remarkable cognitive abilities, they remain isolated islands of intelligence—powerful but disconnected.

A critical challenge persists: How do we create truly interconnected AI systems with standardized access to external information? Today, countless engineering hours are spent crafting custom integration code that quickly becomes brittle, outdated, and nearly impossible to maintain at scale.

At Portkey's AI Practitioners Meetup, our Co-Founder & CTO Ayush shared insights into how MCP is changing the way we build AI systems to solve complex workflows.

In this comprehensive guide, we'll decode MCP's architecture, compare it with current solutions, and look at practical implementations of MCP. Whether you're a solution architect planning enterprise AI infrastructure or a developer looking to eliminate integration headaches, understanding MCP isn't just advantageous—it's becoming essential.

Table of Contents:

- What is Model Context Protocol?

- MCP Core Architecture

- The Three Pillars of MCP Servers

- MCP vs. Traditional APIs

- How Portkey Implements MCP

- When and How to Implement MCP

- The Future of MCP

What is Model Context Protocol?

MCP is to AI systems what HTTP is to web browsers - it's the fundamental protocol that lets AI systems communicate smoothly. - Ayush Garg

The Model Context Protocol (MCP) is an open protocol that creates standardized communication channels between AI models and external systems. Just as USB-C lets you plug various devices into your computer, MCP provides a standard way for LLMs to:

- Talk to other AI models

- Access data from different sources

- Use tools and external services

- Work with pre-written prompts

Originally developed by Anthropic, MCP has evolved into an open standard, with growing adoption across the AI industry. It's quickly becoming the de facto method for AI-tool interactions, creating a unified ecosystem rather than isolated AI applications.

MCP Core Architecture

At its core, MCP consists of three essential components working in harmony:

MCP Hosts, like Claude Desktop or integrated development environments (IDEs), serve as the launching point. Think of the host as the application the user works in, and the central place from which data is accessed through MCP.

MCP Clients live inside the host, and each one maintains a dedicated one-to-one connection with a single server. Clients handle protocol negotiation, message formatting, and state management, so that communication between the AI application and each server stays reliable and secure.

MCP Servers are like tools in a toolbox. Each one specializes in a specific task, making it easy to swap or upgrade without breaking the system.
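To make the host, client, and server roles concrete, here is a minimal in-memory sketch. The class names `ToyHost`, `ToyClient`, and `ToyServer` are hypothetical and exist only for illustration. The method names `tools/list` and `tools/call` are taken from the MCP specification, but the transport, the handshake, and the real result format are left out.

```python
from dataclasses import dataclass, field


@dataclass
class ToyServer:
    """Stand-in for an MCP server: one focused set of tools."""
    name: str
    tools: dict  # tool name -> Python callable

    def handle(self, method, params):
        if method == "tools/list":
            return sorted(self.tools)
        if method == "tools/call":
            return self.tools[params["name"]](**params["arguments"])
        raise ValueError(f"unsupported method: {method}")


@dataclass
class ToyClient:
    """One client owns exactly one server connection."""
    server: ToyServer

    def request(self, method, params=None):
        return self.server.handle(method, params or {})


@dataclass
class ToyHost:
    """The host application creates one client per connected server."""
    clients: dict = field(default_factory=dict)

    def connect(self, server):
        # Connecting under an existing name swaps that server out
        # without touching any other client.
        self.clients[server.name] = ToyClient(server)

    def call(self, server_name, tool, **arguments):
        client = self.clients[server_name]
        return client.request("tools/call", {"name": tool, "arguments": arguments})


host = ToyHost()
host.connect(ToyServer("math", {"add": lambda a, b: a + b}))
host.connect(ToyServer("text", {"shout": lambda s: s.upper()}))

print(host.call("math", "add", a=2, b=3))
print(host.call("text", "shout", s="hi"))

# Upgrade the "text" server; the "math" client is unaffected.
host.connect(ToyServer("text", {"shout": lambda s: s.upper() + "!"}))
print(host.call("text", "shout", s="hi"))
```

Keeping one client per server is what makes the toolbox analogy work in this sketch: replacing a server only replaces its own client entry.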

Now that we understand the core architecture of MCP, let's explore how these components work together to enable seamless AI integration.

The Three Pillars of MCP Servers

An MCP server provides three mechanisms for AI-system interaction:

Resources enable secure, controlled access to data. Whether it's files on your computer, database records, or API responses, resources provide a standardized way to read and work with information. AI models don't need to know the underlying data structure – they just request what they need through the MCP interface.

Tools are executable functions that AI models can call. The protocol is designed around a human in the loop: the host is expected to ask for explicit user approval before a tool runs, which keeps powerful automation safe.

Prompts serve as pre-written templates for common tasks. They help standardize how AI models approach specific problems, making interactions more predictable and maintainable.
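The three mechanisms can be sketched with a toy in-memory server. Everything here is an illustrative assumption: `ToyMcpServer`, `call_tool_with_approval`, the example URI, and the prompt text are hypothetical, and a real server would speak the MCP wire protocol rather than expose Python methods. The approval check is placed on the host side, since that is where the user is.

```python
from string import Template


class ToyMcpServer:
    """Toy server exposing resources, tools, and prompts (illustrative only)."""

    def __init__(self):
        # Resources: read-only data addressed by URI.
        self.resources = {"file:///notes/todo.txt": "1. Ship the MCP demo"}
        # Tools: executable functions the model may ask to run.
        self.tools = {"add": lambda a, b: a + b}
        # Prompts: reusable templates with named arguments.
        self.prompts = {"summarize": Template("Summarize the following text:\n$text")}

    def read_resource(self, uri):
        return self.resources[uri]

    def call_tool(self, name, arguments):
        return self.tools[name](**arguments)

    def get_prompt(self, name, **arguments):
        return self.prompts[name].substitute(**arguments)


def call_tool_with_approval(server, name, arguments, approve):
    """Host-side gate: run the tool only if the user approves the call."""
    if not approve(name, arguments):
        raise PermissionError(f"user declined tool call: {name}")
    return server.call_tool(name, arguments)


server = ToyMcpServer()
print(server.read_resource("file:///notes/todo.txt"))
print(server.get_prompt("summarize", text="MCP standardizes integrations."))
print(call_tool_with_approval(server, "add", {"a": 2, "b": 3},
                              approve=lambda name, args: True))
```

In a real host, `approve` would be a confirmation dialog rather than a lambda, but the shape is the same: the model proposes a call and the user decides whether it runs.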

When you want to use an MCP-enabled feature:

- The host application detects your intent

- It connects to the appropriate MCP server

- The server exposes its capabilities through resources, prompts, and tools
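On the wire, the steps above are JSON-RPC 2.0 messages. The sketch below builds the messages a client might send to connect and discover capabilities. The method names come from the MCP specification; the client name, version, and the protocol version string are example values, and responses, transport, and error handling are omitted.

```python
import itertools
import json


def discovery_sequence(client_name):
    """Build the messages a client could send to connect and discover capabilities."""
    ids = itertools.count(1)

    def request(method, params=None):
        # Requests carry an id so the response can be matched to them.
        message = {"jsonrpc": "2.0", "id": next(ids), "method": method}
        if params is not None:
            message["params"] = params
        return message

    def notification(method):
        # Notifications have no id and expect no response.
        return {"jsonrpc": "2.0", "method": method}

    return [
        request("initialize", {
            "protocolVersion": "2025-03-26",  # example value; use the revision you target
            "capabilities": {},
            "clientInfo": {"name": client_name, "version": "0.1.0"},
        }),
        notification("notifications/initialized"),
        request("resources/list"),
        request("prompts/list"),
        request("tools/list"),
    ]


for message in discovery_sequence("demo-host"):
    print(json.dumps(message))
```

In practice the client waits for the `initialize` response before sending anything else, and only lists the capability types the server declared in that response.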

MCP vs. Traditional APIs

MCP is not just "another API lookalike." This is a paradigm shift in how we enable AI systems to interact with the world.

Why Traditional APIs Are Like Having Separate Keys for Every Door

Traditionally, connecting an AI system to external tools involves integrating multiple APIs. Each API integration means separate code, documentation, authentication methods, error handling, and maintenance.

Think of APIs as individual doors—each with its own key and rules:

| Feature | MCP | Traditional API |
|---|---|---|
| Integration Effort | Single, standardized integration | Separate integration per API |
| Real-Time Communication | ✅ Yes | ❌ No |
| Dynamic Discovery | ✅ Yes | ❌ No |
| Scalability | Easy (plug-and-play) | Requires additional integrations |
| Security & Control | Consistent across tools | Varies by API |
| Documentation | Self-documenting | External and often incomplete |
| Evolution | Adapts without breaking clients | Versioning headaches |

Key Differences That Make MCP Superior

- Self-describing tools: Unlike fixed API endpoints, MCP servers expose "tools" with semantic descriptions that include what the tool does, parameters, outputs, constraints and limitations

- Dynamic adaptation: When an MCP server changes its tools or parameters, clients can rediscover them at runtime, which avoids many breaking changes and versioning headaches

- Built-in documentation: The interface itself is the documentation—no need to hunt down separate API docs

- Contextual tool availability: Tools can be made available based on context (e.g., only exposing messaging tools to authenticated users)

- Two-way communication: MCP supports persistent, real-time two-way communication where AI models can both retrieve information and trigger actions
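The first three points can be illustrated with a tool description and a client that reads it at runtime. The fields `name`, `description`, and `inputSchema` follow the MCP tool format; the `send_message` tool, its parameters, and the `check_arguments` helper are hypothetical, and the helper covers only a small subset of JSON Schema (required keys, plus string and boolean types).

```python
TOOLS_V1 = [{
    "name": "send_message",
    "description": "Send a chat message to a channel.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "channel": {"type": "string"},
            "text": {"type": "string"},
        },
        "required": ["channel", "text"],
    },
}]

# A later server release adds an optional parameter (hypothetical change).
TOOLS_V2 = [{
    **TOOLS_V1[0],
    "inputSchema": {
        "type": "object",
        "properties": {
            **TOOLS_V1[0]["inputSchema"]["properties"],
            "urgent": {"type": "boolean"},
        },
        "required": ["channel", "text"],
    },
}]

# Only these two JSON Schema types are checked in this sketch.
JSON_TYPES = {"string": str, "boolean": bool}


def check_arguments(tool, arguments):
    """Return a list of problems found by reading the tool's own schema."""
    schema = tool["inputSchema"]
    properties = schema.get("properties", {})
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = properties.get(key)
        if spec is None:
            problems.append(f"unknown argument: {key}")
            continue
        expected = JSON_TYPES.get(spec.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"{key} should be {spec['type']}")
    return problems


call = {"channel": "#ops", "text": "Deploy finished", "urgent": True}
print(check_arguments(TOOLS_V1[0], call))
print(check_arguments(TOOLS_V2[0], call))
```

The client code is identical for both versions; only the description fetched from the server differs. This works for additive changes like the one shown. Removing or renaming a required parameter would still break existing callers, so discovery reduces versioning pain rather than eliminating it.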

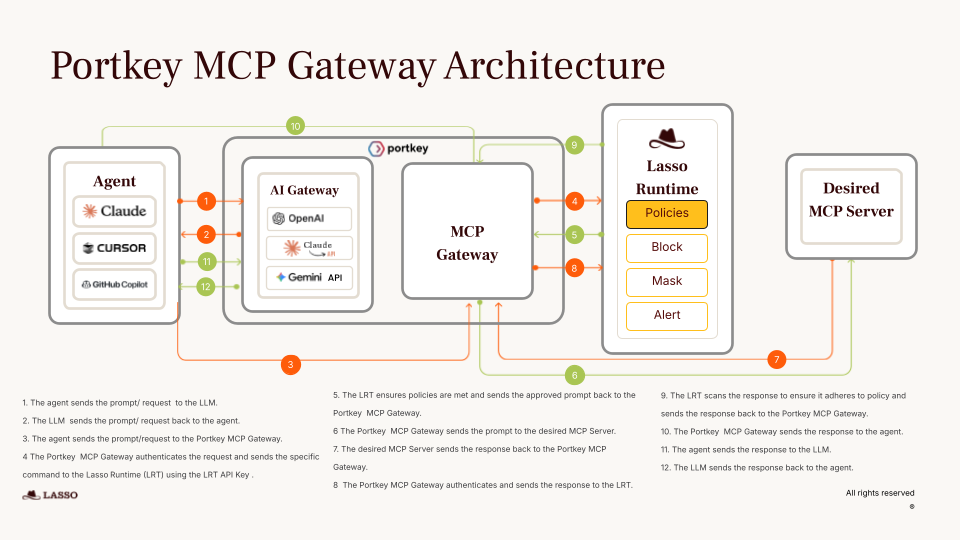

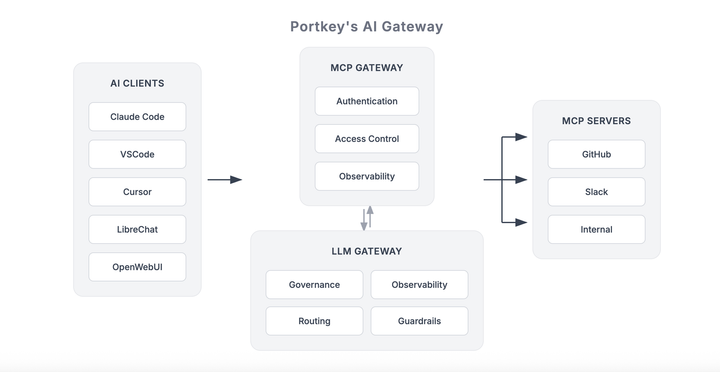

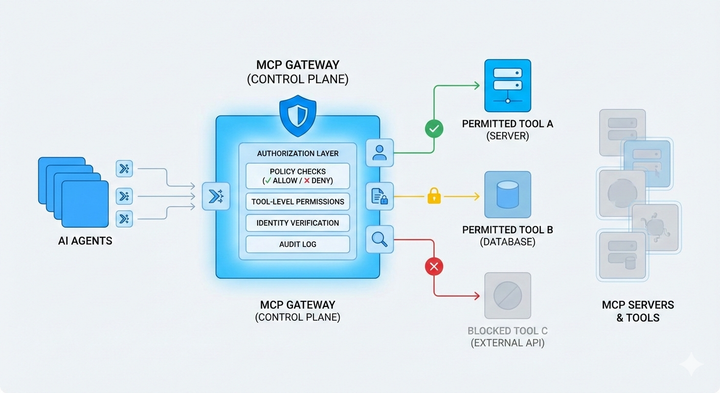

How Portkey Implements MCP

Portkey's implementation of MCP is elegantly simple yet powerful. Instead of overcomplicating things, Portkey's AI Gateway fully supports MCP while simultaneously acting as an MCP client. Here's how it works in practice:

End-to-End AI System: Portkey provides a complete AI solution built on top of MCP. At its core, MCP serves as a bridge to help solve business logic, while Portkey's gateway handles all the orchestration you need.

Simplified MCP Client: Portkey acts as an MCP client where you can easily configure your prompts, tools, and other context needed to make MCP work effectively.

Simple API Call: Once your prompt and tools are set up, you can connect everything to your app's UI using Portkey's Prompt API. A single API processes the entire workflow.

Comprehensive Monitoring: After implementation, you can view complete logs and metrics for your application directly within Portkey's dashboard.

When and How to Implement MCP

Ayush provides practical advice for MCP implementation, comparing it to microservices: just because you can break everything into tiny pieces doesn't mean you should.

Consider using MCP when:

- Multiple parts of your system need the same functionality

- You need to scale specific components independently

- You have clear boundaries between different parts of your system

The Future of MCP

MCP's roadmap for 2025 focuses on expanding capabilities while maintaining its core principles:

Remote Capabilities

- Standardized authentication mechanisms

- Service discovery protocols

- Support for serverless environments

- Enhanced state management

Enhanced Agent Support

- Hierarchical agent systems

- Improved permission handling

- Real-time streaming capabilities

- Support for complex workflows

The protocol aims to evolve through open governance, where AI providers collaboratively shape its future through equal participation. This commitment to openness ensures MCP remains responsive to real-world needs while maintaining its foundational principles.

Ready to Transform Your AI Integrations with MCP?

Check out the complete directory of MCP servers to discover pre-built servers for popular tools and services.

MCP represents a fundamental shift in how we integrate AI systems with external tools and data sources. By providing a standardized protocol for these interactions, MCP addresses the integration challenges that have historically slowed AI adoption and limited capabilities.

The key benefits of implementing MCP include:

- Reduced development time through standardized integration patterns

- Improved maintainability with self-documenting interfaces

- Enhanced flexibility to swap or upgrade components

- Future-proofing as the AI ecosystem continues to evolve

As you consider implementing MCP in your own projects, remember to start small, focus on clear boundaries between systems, and build incrementally. The goal isn't to rewrite everything at once, but to gradually move toward a more integrated, capable AI system.

Portkey provides everything you need to leverage the power of MCP in your applications. Whether you're a solo developer or part of an enterprise team, our platform makes advanced AI architecture accessible and manageable.