How MCP(Model Context Protocol) handles context management in high-throughput scenarios

Discover how Model Context Protocol (MCP) solves context management challenges in high-throughput AI applications.

Managing context effectively in high-throughput AI applications is a critical challenge. Large language models (LLMs) require persistent context to generate accurate and coherent responses. However, when handling thousands of concurrent requests, ensuring consistency, minimizing latency, and maintaining security becomes difficult.

This is where the Model Context Protocol (MCP) comes in. MCP offers a practical solution through its scalable, real-time context management system specifically built for high-throughput environments. Let's look at how it works and why it matters for teams running demanding AI applications.

To join, register here -> https://luma.com/ncqw6jgm

Key challenges in high-throughput context management

Let's break down what makes context management so tricky when you're running LLM applications at scale.

When your system needs to handle thousands of requests at once, four main challenges emerge:

Context consistency becomes difficult. Each request must maintain its own conversation thread while the system juggles many inputs simultaneously. Contexts can bleed into each other without proper management or get lost entirely.

Latency builds up quickly. The traditional approach of processing one context operation after another creates a bottleneck that users experience as slow response times.

Scaling hits walls fast. As request volume grows, you need a way to dynamically allocate resources that can handle thousands of context-dependent operations without degrading performance.

Security risks multiply. With context data flowing across distributed systems, you need robust methods to restrict unauthorized access and maintain compliance, especially when handling sensitive information.

MCP tackles these challenges through its specialized architecture and optimization techniques, which we'll examine next.

How MCP optimizes context management

Context State Management

MCP’s state management system is specifically designed for the demands of high-throughput AI applications. This system keeps track of everything your LLMs need to know across thousands of concurrent conversations.

MCP maintains reliable context through three key mechanisms:

Real-time synchronization keeps context updated across your entire distributed system. When a piece of context changes in one place, that change propagates immediately to anywhere else it's needed, preventing inconsistencies and confused responses.

Priority-based queuing makes sure the most important context updates don't get stuck waiting behind less critical ones, even when the system is processing thousands of requests.

Session persistence protects against data loss by maintaining context even when brief service interruptions occur. If a server has hiccups or a connection drops momentarily, conversations can continue without losing their thread.

These capabilities prevent the context fragmentation that typically plagues high-volume LLM applications, keeping conversations coherent even under heavy loads.

Parallelized query execution for high throughput

Instead of processing one query after another, it works on multiple context operations at the same time.

This parallelized architecture brings several practical benefits:

Parallel query routing dramatically cuts down on wait times. By processing multiple context requests simultaneously, MCP reduces latency by 40-60% compared to sequential processing methods. This means your users get responses much faster, even during peak traffic.

Batch normalization solves a common headache when working with multiple data sources. MCP standardizes responses from diverse sources into a unified format, which means your application doesn't need custom code to handle different data structures from each source.

Distributed caching prevents the system from repeating work unnecessarily. By storing frequently accessed context in strategic locations throughout the infrastructure, MCP avoids redundant lookups that would otherwise slow down response times.

The combined effect of these optimizations is impressive: MCP can handle more than 5,000 context operations every second while keeping response times under 100 milliseconds. This level of performance means your applications stay responsive even under intense usage.

Scalability mechanisms

MCP's architecture was built from the ground up to scale with your needs, whether you're handling hundreds of requests or tens of thousands.

Stateless request processing is a key design choice that separates context operations from core model inference. This decoupling means your system can scale each component independently based on actual demand, rather than forcing everything to scale together.

Elastic resource allocation i.e. when your application experiences a sudden spike in usage, MCP provisions additional context servers without manual intervention. Once traffic returns to normal levels, these resources scale back down to optimize costs.

Context-aware load balancing routes requests based on both server capacity and the specific type of context being processed. This intelligent routing ensures that specialized context operations go to the servers best equipped to handle them.

These scalability features combine to deliver impressive performance metrics. MCP can maintain 99.95% uptime while processing over 50,000 requests per second, with automated failover and context replication across availability zones providing robust disaster recovery.

This level of reliability means your AI applications stay available even during unpredictable traffic surges.

Ensuring security, performance, and consistency

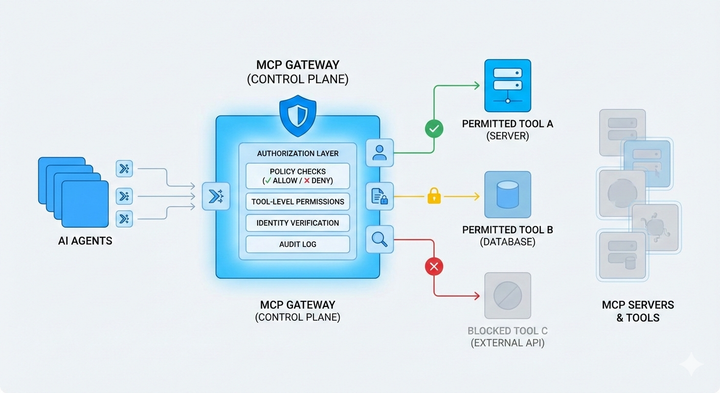

Security enhancements

Granular permission checks happen during the initial request validation stage rather than being performed separately for each API call. This front-loaded security model restricts unauthorized data modifications while eliminating redundant checks that would otherwise slow down processing. The system enforces attribute-level permissions that can limit access to specific parts of the context.

Encrypted context propagation protects data as it moves between components of your distributed system. This encryption ensures that sensitive context information remains secure during transmission, preventing potential interception or tampering. MCP also maintains immutable audit trails that track all context changes, providing a forensic record if security analysis becomes necessary.

Performance Optimizations

Compressed context serialization shrinks payload sizes by up to 70% compared to standard JSON, reducing network traffic and improving transmission times without requiring code changes.

Optimized request validation performs thorough checks while adding less than 10ms of overhead per request, maintaining data quality and security without slowing down your application.

Context Consistency Assurance

Version-controlled context blocks track changes over time, enabling your application to roll back to previous states if needed and providing a clear history of modifications.

Cross-node consensus protocols ensure that context updates are verified by multiple system components before being committed, preventing inconsistencies in distributed environments.

Why Model Context Protocol is ideal for enterprise-scale LLM applications

Large organizations deploy AI differently. Their needs center around three critical factors that MCP addresses head-on:

- Raw power meets rapid response with MCP processing 50,000+ requests per second at sub-100ms speeds. Your systems stay snappy even during traffic spikes.

- Downtime isn't an option for enterprise applications. MCP's 99.95% uptime comes from intelligent failover mechanisms that kick in automatically when problems arise.

- The middleware design lets MCP slot into existing architecture without disruption. It works as a universal translator between your models and data sources, whether they live in the cloud or your data center.

- The smart separation between context handling and model inference means you can optimize each independently. Scale up context management during complex conversations while keeping inference resources steady - or vice versa. This flexibility helps control costs while maintaining performance exactly where you need it.

For organizations where AI powers critical functions, MCP provides the foundation that makes large-scale deployment practical.

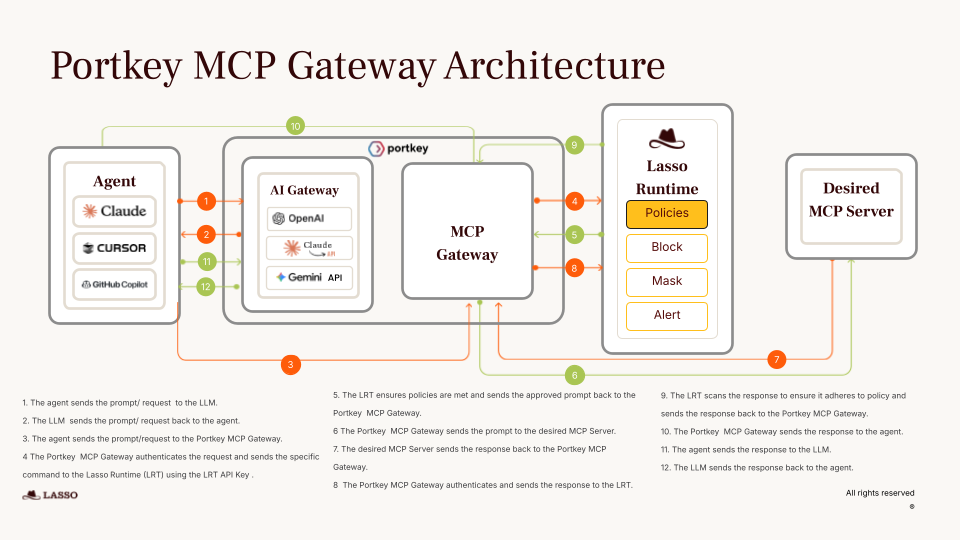

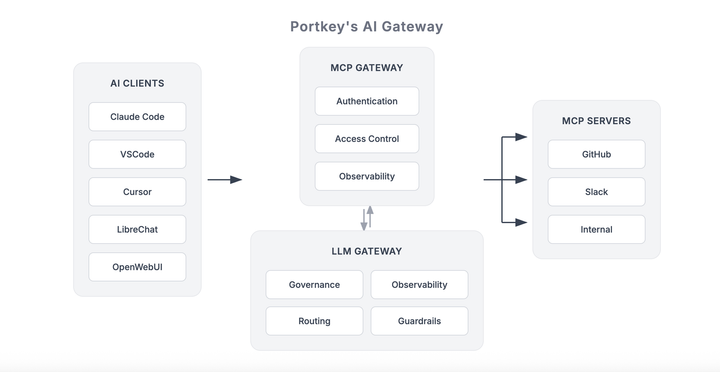

Portkey’s MCP Client

Portkey's MCP client offers a robust implementation of the open standard protocol, facilitating connections between large language models (LLMs) and a vast array of tools and data sources.

With access to over 800 verified tools, Portkey's MCP client enables AI agents to perform a wide range of tasks through natural language commands.

Reduce go-to-market time and operational costs with Portkey's platform that transforms complex agent development into a streamlined experience, allowing developers to deploy applications with minimal setup and integration challenges.

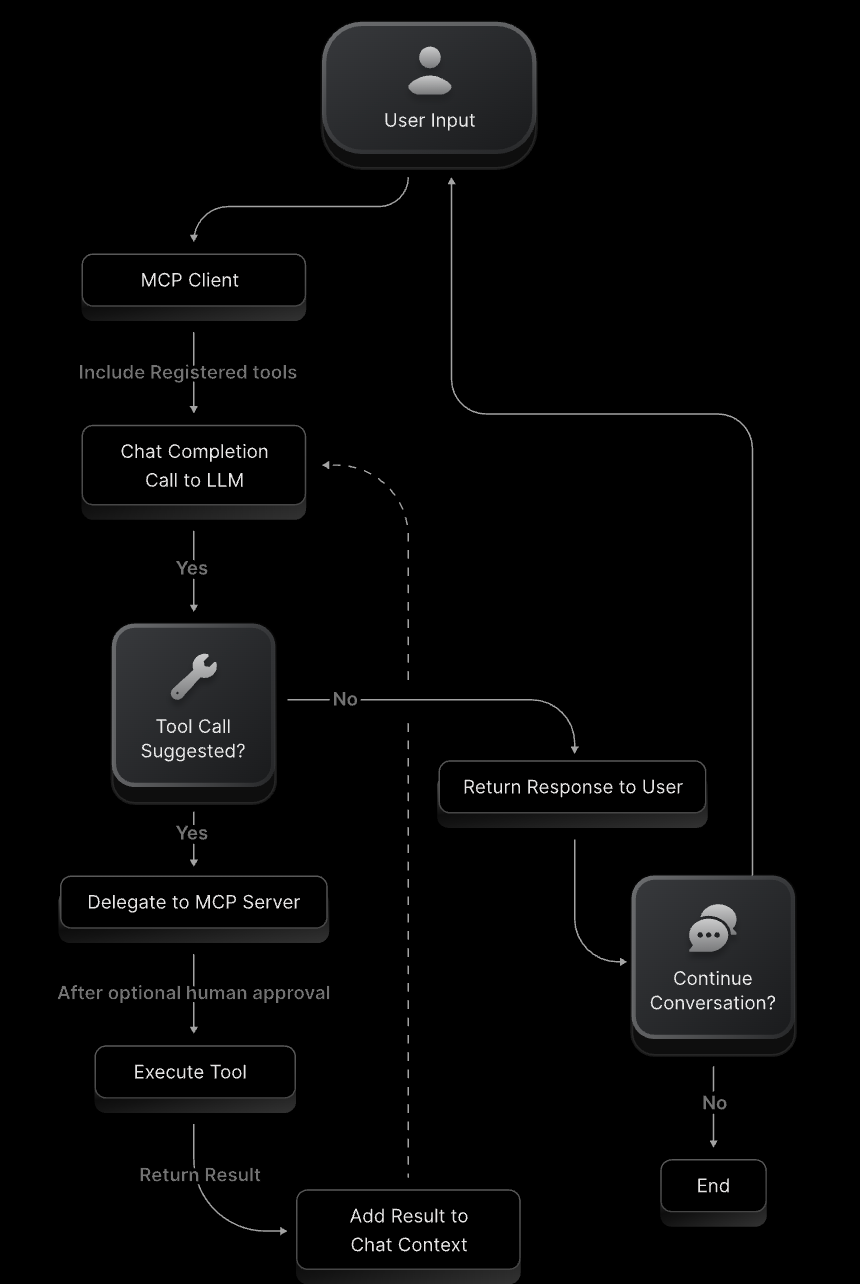

Changing how we build LLM apps

MCP changes how we think about handling context in busy AI systems. When thousands of conversations happen at once, keeping track of who said what becomes a serious technical challenge.

For teams running AI at an enterprise scale, the benefits are clear. Your applications can handle more conversations with better accuracy and faster responses. Context stays intact even when traffic spikes or minor disruptions occur.

If you're looking to build AI systems that can truly scale with your business needs, MCP provides the foundation that makes it possible. The technical complexity happens behind the scenes, leaving your team free to focus on creating better AI experiences rather than troubleshooting context management issues.