OpenAI's New Agent Tools: Navigating Strategic Implications for Enterprise AI

OpenAI just redefined how enterprises build AI agents, launching the new Responses API, built-in tool integrations, and an open-source Agents SDK as building blocks for agents.

For enterprises invested in AI, these launches bring both exciting capabilities and strategic dilemmas: What do they mean for existing AI investments, and how can enterprises adapt without becoming overly dependent on OpenAI?

As we digest these developments at Portkey, we want to share our analysis, offer practical recommendations for enterprises navigating this rapidly evolving landscape, and outline a forward-looking strategy.

What OpenAI Announced

- The Responses API — A new API primitive that combines the simplicity of Chat Completions with the tool-use capabilities of the Assistants API.

- Built-in tools — Direct way to use web search, file search, and computational use.

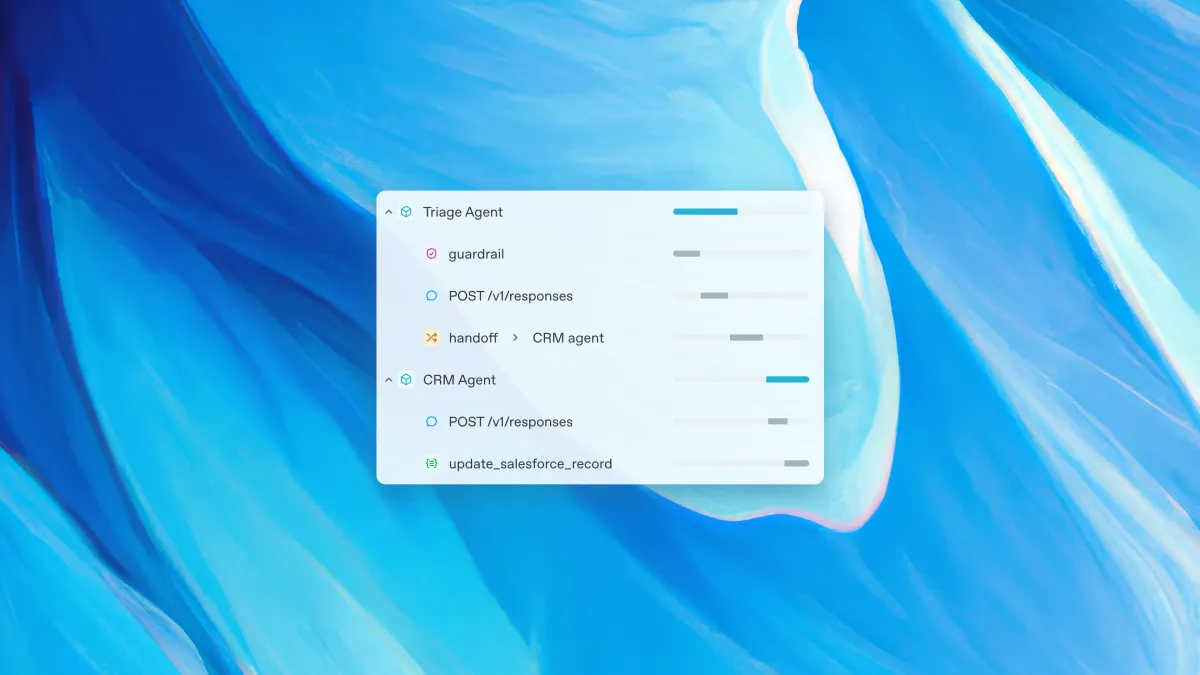

- The Agents SDK — An open-source toolkit for orchestrating single-agent and multi-agent workflows

- Observability Tools — Built-in tracing and debugging to simplify agent management.

These announcements represent OpenAI's strategic move toward providing more comprehensive infrastructure for building autonomous agents – systems that can independently accomplish tasks on behalf of users.

Our Analysis: What This Means for Enterprises

The Portkey team sat down yesterday to discuss the implications of this launch (and what it means for us and our customers). Here are our key observations:

1) Evolution, Not Revolution

"These launches are not exactly new. It's not the first time someone has done a web search API – Perplexity has been doing it. They introduced file search, so they packaged it neatly!" - Visarg Desai

While OpenAI's announcements are significant, they represent a formalization and integration of capabilities that have been emerging across the ecosystem. What's notable is how they're being packaged together and positioned as part of OpenAI's long-term direction.

This integration creates the risk of "functional lock-in" – where your applications become dependent on vendor-specific features. For instance, if you design your system around OpenAI's web search tool, you'll have a harder time swapping in a different model (from Anthropic or an open-source LLM) that doesn't offer the same integrated capability.

Actionable Advice:

- Evaluate dependencies to prevent your AI infrastructure from becoming overly reliant on a single provider.

- Implement abstractions or middleware (like an AI Gateway!) to maintain flexibility.
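To make the abstraction advice concrete, here is a minimal sketch of a provider-agnostic interface in Python. The `ChatProvider` protocol, the two adapter classes, and their canned replies are hypothetical stand-ins, not Portkey's or any vendor SDK's API; in a real system each adapter would wrap the corresponding vendor SDK.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatProvider(Protocol):
    """Hypothetical provider-neutral interface that application code depends on."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class FakeOpenAIProvider:
    # Stand-in adapter; a real one would call the OpenAI SDK here.
    name: str = "openai"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class FakeAnthropicProvider:
    # Stand-in adapter; a real one would call the Anthropic SDK here.
    name: str = "anthropic"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def summarize(provider: ChatProvider, text: str) -> str:
    # Application logic sees only the interface, so swapping vendors is a
    # change at the call site rather than a rewrite of the feature.
    return provider.complete(f"Summarize: {text}")
```

Calling `summarize(FakeOpenAIProvider(), "Q3 report")` and `summarize(FakeAnthropicProvider(), "Q3 report")` exercises the same feature code against two vendors; the provider-specific details stay inside the adapters.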

2) A Gradual Shift in API Standards

The Responses API signals an important shift in how OpenAI views its API ecosystem:

"This could be a big enough thing that would make us think about what becomes industry standard... would the RAG style expressed in Response API (i.e. append the output of the reranker to inputs) become the standard for RAG?" - Sai Krishna

This shift might eventually cascade across the industry, but OpenAI has been careful to position Chat Completions as continuing to be supported. There's no urgent need to migrate existing implementations, but new projects should at least consider building on the Responses API.

3) Stateful APIs and Simplified Context Management

A notable innovation in the Responses API is how it handles conversation history:

"Each response that you send to the responses API now also has an id. So let's say you want to build a chat app... you need to send the whole chat history in every turn - now, whatever new session that you pop up, you just need to refer to a previous response ID which is already stored in OpenAI logs." - Vrushank Vyas

This significantly simplifies chat application development by shifting the burden of context management to the API provider. However, this convenience comes with important data privacy tradeoffs. By default, the Responses API stores responses on OpenAI's servers, including your prompts and model outputs, unless you explicitly set its `store` parameter to false; the ID-based chaining described above relies on that stored state. This raises significant concerns for enterprises with strict data governance requirements that may not want sensitive conversations persisted on third-party infrastructure.
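The difference between the two styles shows up in the request body. The sketch below only builds request dictionaries and makes no network calls. The field names `model`, `messages`, `input`, `previous_response_id`, and `store` follow OpenAI's public Chat Completions and Responses API request formats as we understand them; the model name and response ID are placeholders, so check the current API reference before relying on them.

```python
from typing import Any, Optional


def chat_completions_payload(history: list[dict[str, str]], user_msg: str,
                             model: str = "example-model") -> dict[str, Any]:
    # Stateless style: the client resends the entire conversation every turn.
    messages = history + [{"role": "user", "content": user_msg}]
    return {"model": model, "messages": messages}


def responses_payload(user_msg: str, previous_response_id: Optional[str] = None,
                      store: bool = True,
                      model: str = "example-model") -> dict[str, Any]:
    # Stateful style: send only the new input and point at the prior response.
    # Chaining assumes the referenced response was stored server-side.
    payload: dict[str, Any] = {"model": model, "input": user_msg, "store": store}
    if previous_response_id is not None:
        payload["previous_response_id"] = previous_response_id
    return payload
```

With a two-message history, the Chat Completions body carries three messages, while the Responses body carries only the new turn plus an ID. Setting `store=False` keeps a request out of server-side storage, at the cost of not being able to chain later turns from that response.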

Actionable Advice:

- Use gateways or intermediaries for sensitive use-cases to ensure data remains under your governance.

4) Pricing Considerations

The pricing model for these new capabilities may be a deciding factor for many enterprises:

"10 cents per GB per day is just the storage cost. Companies would end up paying hundreds of thousands of dollars just to keep their files on OpenAI." - Ayush Garg

For large-scale implementations, costs could be significant, potentially driving enterprises to seek alternatives.
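To see how the quoted rate compounds, here is a back-of-the-envelope calculation at $0.10 per GB per day, the figure cited above. The corpus sizes are illustrative assumptions, and the sketch ignores any free tier or volume discounts.

```python
def annual_storage_cost(gigabytes: float, rate_per_gb_day: float = 0.10,
                        days: int = 365) -> float:
    """Storage-only cost in USD for keeping `gigabytes` stored for `days` days."""
    return gigabytes * rate_per_gb_day * days


# Illustrative corpus sizes (assumptions, not customer data).
for size_gb in (100, 1_000, 10_000):
    print(f"{size_gb:>6} GB -> ${annual_storage_cost(size_gb):,.0f} per year")
```

At this rate, 1 TB costs about $36,500 a year and 10 TB about $365,000 a year in storage alone, before any model usage, which is the scale the quote has in mind.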

Strategic Recommendations for Technology Leaders

Based on our analysis, here are practical recommendations for enterprises considering how to approach these developments:

For Production Systems: Don't Rush to Migrate

"If something is already in production... I don't see that there will be any emergency or urgency to move all the use cases which are working." - Ayush Garg

Production systems with stable OpenAI-based implementations using Chat Completions should continue as they are. Migrating from the Assistants API to the Responses API is more pressing (OpenAI has announced a 2026 sunset for the Assistants API), but even that timeline gives teams ample time to plan the transition.

For New Projects: Consider the Longer-term Direction

For new development, particularly projects involving agent-like capabilities, it's worth exploring the Responses API and the Agents SDK, as they represent OpenAI's strategic direction.

For Multi-vendor Strategies: Beware of Lock-in

"Both Anthropic and Google are here... with just a couple of instructions, you can start creating agents. Tell us what you want to do."

OpenAI isn't the only player building agent infrastructure. AWS Bedrock, Anthropic, and others are rapidly developing similar capabilities. A robust multi-vendor strategy remains important for enterprises concerned about vendor lock-in.

Industry data shows this is becoming standard practice: the percentage of organizations using multiple LLM providers increased dramatically, from approximately 22% to 40%, in 2024 alone. This trend reflects growing recognition that no single provider can meet all needs and that diversification helps mitigate risks.

There are practical reasons for this approach. Real-world performance data shows significant reliability differences between providers: some LLMs respond with rate limit errors over 20% of the time, while others maintain error rates below 1%. By implementing a multi-vendor strategy, enterprises ensure they're not at the mercy of a single provider's limitations.
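One common way to act on these reliability differences is an ordered fallback: try the preferred provider and move to the next one when it returns a rate-limit error. The sketch below simulates providers with plain functions; `RateLimitError`, the provider names, and the fallback policy are hypothetical, and production gateways typically add backoff, timeouts, and health tracking on top.

```python
from typing import Callable


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's HTTP 429 response."""


Provider = tuple[str, Callable[[str], str]]


def complete_with_fallback(providers: list[Provider], prompt: str) -> tuple[str, str]:
    """Try providers in priority order; return (provider_name, reply)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except RateLimitError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers rate-limited: " + "; ".join(errors))


def throttled_provider(prompt: str) -> str:
    # Simulates a provider that is currently rejecting requests.
    raise RateLimitError("429 Too Many Requests")


def healthy_provider(prompt: str) -> str:
    # Simulates a provider that answers normally.
    return f"ok: {prompt}"
```

Here `complete_with_fallback([("primary", throttled_provider), ("secondary", healthy_provider)], "hello")` returns `("secondary", "ok: hello")`: the caller still gets an answer even though its first choice is throttled.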

Watch the Adoption Patterns, Not Just the Announcements

"Personally I didn't find anywhere in my circle where even people are even talking about this announcement." - Naren

Despite the technical merits, market adoption remains to be seen. Keep an eye on developer communities and adoption metrics before making major strategy shifts.

Implications for Portkey

These announcements highlight the evolving landscape of AI infrastructure. As an AI Gateway provider, we see several key implications that align around three strategic pillars: Orchestration, Observability, and Interoperability.

The Shift from Transformation to Orchestration

The AI infrastructure landscape is moving beyond simple API transformation toward full orchestration of complex workflows:

"From gateway point of view it's now more about giving them a unified orchestration layer for capabilities and not just transforms. So it's not about okay we support chat completions, it's about we support this particular capability across providers."

This requires rethinking what an AI gateway provides – moving from a pure translation layer to a capability orchestration layer. With proper orchestration, enterprises can compose sophisticated AI workflows that might include OpenAI's tools alongside capabilities from other providers or internal systems.
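A capability orchestration layer can be pictured as a registry keyed by capability rather than by endpoint. In this hypothetical sketch, a `web_search` request is served by a provider's native implementation where one is registered, or by a default backend (for example, an external search service) otherwise. All names here are illustrative, not Portkey's actual routing logic.

```python
from typing import Callable, Optional

Handler = Callable[[str], str]


class CapabilityRegistry:
    """Maps (capability, provider) pairs to handlers, with a per-capability default."""

    def __init__(self) -> None:
        self._handlers: dict[tuple[str, str], Handler] = {}
        self._defaults: dict[str, Handler] = {}

    def register(self, capability: str, handler: Handler,
                 provider: Optional[str] = None) -> None:
        # No provider means "use this handler for any provider lacking a native one".
        if provider is None:
            self._defaults[capability] = handler
        else:
            self._handlers[(capability, provider)] = handler

    def run(self, capability: str, provider: str, query: str) -> str:
        # Prefer the provider's native implementation; fall back to the default.
        handler = self._handlers.get((capability, provider)) or self._defaults.get(capability)
        if handler is None:
            raise LookupError(f"no handler for {capability!r} on {provider!r}")
        return handler(query)


registry = CapabilityRegistry()
# Hypothetical handlers: one provider's built-in tool, and an external search service.
registry.register("web_search", lambda q: f"builtin-search:{q}", provider="openai")
registry.register("web_search", lambda q: f"external-search:{q}")
```

The application asks for "web search" and the registry decides how to fulfil it, so a model without an integrated search tool can still be used for the same workflow.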

Interoperability Remains Critical

As models and APIs evolve, interoperability becomes even more important. Organizations need flexibility to adapt to changing API standards while maintaining consistent application behavior.

Observability and Governance Gain Importance

With more complex AI systems, observability and governance become increasingly critical:

"One way to think is gateways also will be where you do metering, where you do permissioning, where you do access rules." - Vrushank

These platform capabilities become essential as AI deployments grow in complexity and scale. A comprehensive observability strategy gives enterprises detailed visibility into AI performance, costs, and behavior across all providers. This unified monitoring approach is far more valuable than siloed tracking within each vendor's ecosystem, especially as organizations adopt multi-provider architectures.

The governance layer becomes equally important for enforcing consistent security policies, access controls, and compliance requirements across your entire AI footprint. This centralized control ensures that regardless of which model or provider handles a particular request, the same enterprise standards are applied.
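The metering, permissioning, and access rules mentioned in the quote above can share a single request path. Below is a minimal sketch of a gateway-side check, assuming a hypothetical policy table that maps teams to allowed providers and simple in-memory usage counters; a real gateway would persist these and enforce them per API key.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Gateway:
    # Hypothetical access rules: which providers each team may call.
    allowed: dict[str, set[str]]
    # Metering: request and token counts per (team, provider).
    requests: Counter = field(default_factory=Counter)
    tokens: Counter = field(default_factory=Counter)

    def handle(self, team: str, provider: str, prompt: str) -> str:
        # Permissioning: reject calls that the policy does not allow.
        if provider not in self.allowed.get(team, set()):
            raise PermissionError(f"{team} may not call {provider}")
        self.requests[(team, provider)] += 1
        # Whitespace word count is a crude placeholder for real tokenization.
        self.tokens[(team, provider)] += len(prompt.split())
        return f"forwarded to {provider}"
```

Because every provider call passes through `handle`, the same policy and the same counters apply regardless of vendor, which is the centralization argument made above.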

Looking Forward

The AI infrastructure landscape continues to evolve rapidly. While OpenAI's announcements represent important developments, they're part of a broader industry trend toward more capable and integrated AI development platforms.

For enterprises, the key remains balancing innovation with practical considerations like cost, reliability, and flexibility. Rather than rushing to adopt every new capability, focus on building sustainable AI architectures that can evolve alongside the rapid pace of innovation.

Recommendations for CTOs

Enterprises should consider these practical guidelines:

| Scenario | Recommended Action | Rationale |
|---|---|---|
| Existing stable Chat Completions projects | No immediate migration | Maintains stability and cost-efficiency |
| Using Assistants API (deprecated mid-2026) | Begin planning migration | Scheduled sunset creates urgency |
| New agent-focused projects | Adopt Responses API & Agents SDK | Aligned with OpenAI’s long-term strategic direction |
| Sensitive data or strict governance | Consider AI Gateway integration | Maintain control over data handling |

Why an AI Gateway Is Crucial

An AI Gateway (like Portkey) acts as the universal adapter in your AI ecosystem, allowing:

- Seamless orchestration of workflows across multiple AI providers

- Centralized observability and governance

- Simplified handling of vendor API evolutions

Portkey’s Strategic Response

Portkey is rapidly evolving to support OpenAI’s latest APIs and integrations, providing enterprise users:

- Immediate compatibility with the Responses API and its tool integrations

- Unified observability, regardless of vendor

- Flexibility to switch or combine providers without disruption

"Our mission remains enabling enterprises to orchestrate, observe, and control their AI infrastructure seamlessly. OpenAI’s latest launch reinforces why having an open gateway strategy is critical to enterprise success."

Final Thoughts & CTO Action Items

OpenAI’s new tools bring valuable capabilities, but enterprises must strategically adopt them while maintaining architectural flexibility. Here’s our recommended action list for CTOs:

- Assess dependencies within your current AI infrastructure.

- Evaluate potential lock-in risks and create mitigation strategies.

- Explore or expand AI Gateway strategies to retain flexibility and control.

- Continuously monitor the broader AI vendor landscape to inform strategic decisions.

- Implement unified monitoring and logging across all AI interactions to maintain visibility into costs, performance, and behavior.

- Design your systems with standard interfaces that abstract away provider-specific details, allowing you to swap implementations without extensive rework.

In a rapidly evolving landscape, the most successful enterprises will embrace innovation without compromising on flexibility, observability, and strategic autonomy.

Portkey is an open-source AI Gateway designed to provide fast, reliable, and secure routing to 1600+ language, vision, audio, and image models.

Interested in shaping your enterprise’s AI strategy to navigate vendor evolutions and maintain flexibility? Connect with the Portkey team.