OpenTelemetry semantic conventions for GenAI traces

How OpenTelemetry's semantic conventions enable rich analytics on Portkey

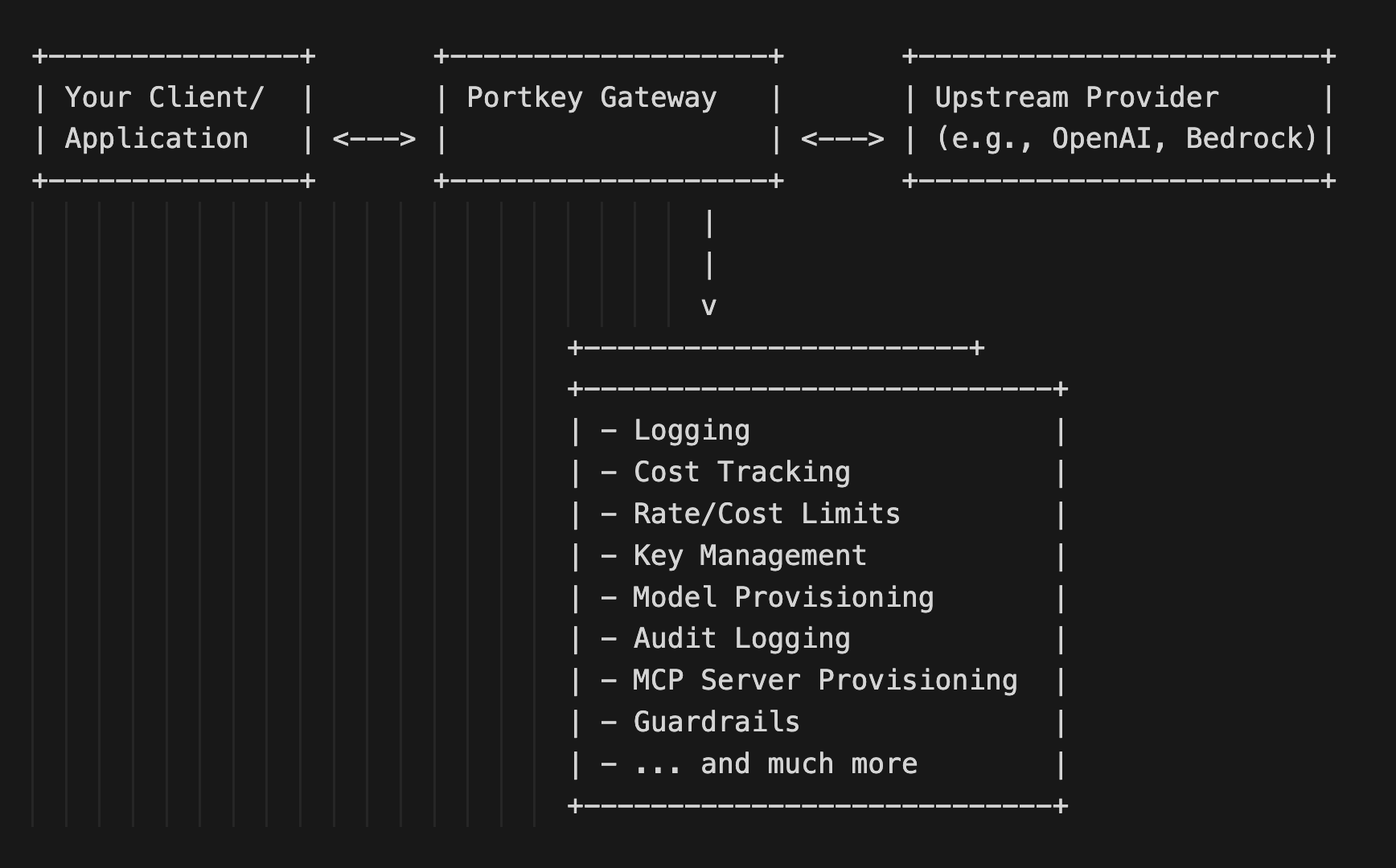

At Portkey, we care deeply about observability for your applications: no request should go unaccounted for in terms of cost and latency. With the Portkey gateway, all your requests are logged securely by default. A simplified flow looks like this:

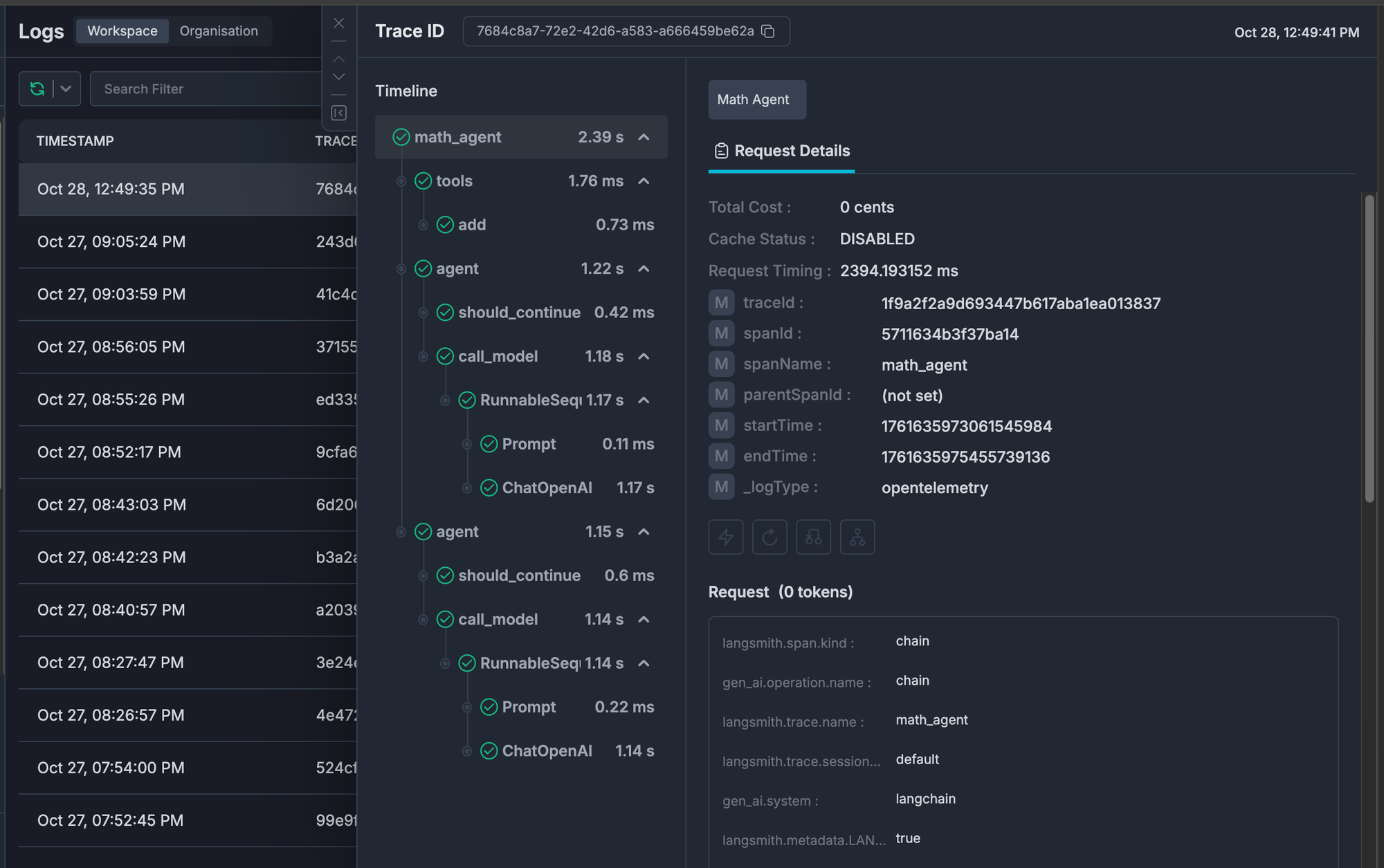

The AI gateway captures all your inference requests, but most LLM-based applications are non-deterministic by nature (think agents, multi-turn conversations, MCP tool calling). In production environments you'd often want deeper observability into how your applications actually behave.

OpenTelemetry has published a spec for tracing such applications. Although the spec is still in development, we believe that following semantic conventions for logging brings big benefits to observability: think about how you'd want to filter by a certain model or search for a specific pattern in a response.
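To make the filtering benefit concrete, here is a minimal sketch in plain Python. The attribute keys (`gen_ai.operation.name`, `gen_ai.request.model`, `gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`) follow the GenAI conventions as they stand at the time of writing and may change while the spec is in development. The span records and the `spans_for_model` helper are hypothetical and only illustrate the idea.

```python
from typing import Any

# Hypothetical span records; only the attribute keys follow the GenAI conventions.
spans: list[dict[str, Any]] = [
    {
        "name": "chat gpt-4o",
        "attributes": {
            "gen_ai.operation.name": "chat",
            "gen_ai.request.model": "gpt-4o",
            "gen_ai.usage.input_tokens": 120,
            "gen_ai.usage.output_tokens": 45,
        },
    },
    {
        "name": "chat gpt-4o-mini",
        "attributes": {
            "gen_ai.operation.name": "chat",
            "gen_ai.request.model": "gpt-4o-mini",
            "gen_ai.usage.input_tokens": 80,
            "gen_ai.usage.output_tokens": 20,
        },
    },
    {
        # Tool-execution spans carry no model attribute in this sketch.
        "name": "execute_tool add",
        "attributes": {"gen_ai.operation.name": "execute_tool"},
    },
]


def spans_for_model(records: list[dict[str, Any]], model: str) -> list[dict[str, Any]]:
    """Return the spans whose requested model matches `model`."""
    return [
        record
        for record in records
        if record["attributes"].get("gen_ai.request.model") == model
    ]


matches = spans_for_model(spans, "gpt-4o")
print([record["name"] for record in matches])
```

Because every library that follows the conventions writes the model under the same key, the same filter works no matter which tracing library produced the span.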

The snippet below runs a LangGraph agent with LangSmith's OTel export enabled and sends its traces to Portkey:

    import os

    # These environment variables need to be set before importing langgraph
    os.environ["LANGSMITH_OTEL_ENABLED"] = "true"
    os.environ["LANGSMITH_OTEL_ONLY"] = "true"
    os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://your-portkey-enterprise-url.com/v1/otel"
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = "x-portkey-api-key=your-portkey-api-key"

    # Imported only after the environment variables above are set
    from langgraph.prebuilt import create_react_agent


    def add(a: float, b: float) -> float:
        """Add two numbers."""
        return a + b


    # Build a ReAct-style agent with a single tool
    math_agent = create_react_agent("openai:gpt-4o", tools=[add], name="math_agent")

    result = math_agent.invoke(
        {"messages": [{"role": "user", "content": "what makes portkey the fastest ai gateway?"}]}
    )

    # Print the agent's final message
    print(result["messages"][-1].content)

You can use any tracing library that supports sending OTel traces, such as Arize Phoenix, Langfuse, OpenLLMetry, or LangSmith, with Portkey as the logging layer. This enables rich analytics and helps you debug issues within seconds.

Here are a few examples of how to configure the tracing libraries. We support the full spectrum of libraries that send OTel traces; these are just a few.
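Most OpenTelemetry SDKs, and the tracing libraries built on them, read the standard `OTEL_EXPORTER_OTLP_ENDPOINT` and `OTEL_EXPORTER_OTLP_HEADERS` environment variables, so pointing a library at Portkey often comes down to setting those two values before the library is imported. The following is a minimal sketch, assuming the placeholder endpoint and the `x-portkey-api-key` header from the LangGraph example above. The `portkey_otlp_env` helper is hypothetical, and individual libraries may need extra settings of their own.

```python
import os


def portkey_otlp_env(base_url: str, api_key: str) -> dict[str, str]:
    """Build OTLP exporter settings for a Portkey endpoint (hypothetical helper)."""
    return {
        # The /v1/otel path is taken from the LangGraph example; adjust to your deployment.
        "OTEL_EXPORTER_OTLP_ENDPOINT": f"{base_url.rstrip('/')}/v1/otel",
        # OTLP headers are written as comma-separated key=value pairs.
        "OTEL_EXPORTER_OTLP_HEADERS": f"x-portkey-api-key={api_key}",
    }


settings = portkey_otlp_env(
    "https://your-portkey-enterprise-url.com",
    "your-portkey-api-key",
)

# Apply the settings before importing the tracing library so its exporter picks them up.
os.environ.update(settings)
```

Keeping the values in one helper makes it easier to reuse the same configuration across services that use different tracing libraries.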

We also have robust support for exporting your traces through our analytics APIs. Exports follow the semantic conventions, so you can import them into your data analytics tools.
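Once traces follow the conventions, downstream analysis can rely on the same attribute keys regardless of which library produced the spans. The sketch below totals token usage per model. It assumes the spans have already been loaded as Python dicts with an `attributes` mapping, which is an illustrative shape and not necessarily the format the export API returns; the `tokens_by_model` helper is hypothetical.

```python
from collections import defaultdict
from typing import Any

# Illustrative spans; the record shape is an assumption, not the export format.
exported: list[dict[str, Any]] = [
    {"attributes": {"gen_ai.request.model": "gpt-4o",
                    "gen_ai.usage.input_tokens": 120,
                    "gen_ai.usage.output_tokens": 45}},
    {"attributes": {"gen_ai.request.model": "gpt-4o-mini",
                    "gen_ai.usage.input_tokens": 80,
                    "gen_ai.usage.output_tokens": 20}},
    {"attributes": {"gen_ai.request.model": "gpt-4o",
                    "gen_ai.usage.input_tokens": 30,
                    "gen_ai.usage.output_tokens": 10}},
    # A span without a model (for example a tool call) is skipped below.
    {"attributes": {"gen_ai.operation.name": "execute_tool"}},
]


def tokens_by_model(records: list[dict[str, Any]]) -> dict[str, int]:
    """Sum input and output tokens per requested model."""
    totals: defaultdict[str, int] = defaultdict(int)
    for record in records:
        attrs = record.get("attributes", {})
        model = attrs.get("gen_ai.request.model")
        if model is None:
            continue
        totals[model] += attrs.get("gen_ai.usage.input_tokens", 0)
        totals[model] += attrs.get("gen_ai.usage.output_tokens", 0)
    return dict(totals)


usage = tokens_by_model(exported)
print(usage)
```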

Check out the experimental OTel export docs here!

Though the semantic conventions are still evolving, we're pretty bullish on them as a shared standard that makes rich analytics possible!

To contribute to the conventions, check out the spec here: https://github.com/open-telemetry/semantic-conventions/blob/main/model/gen-ai/spans.yaml