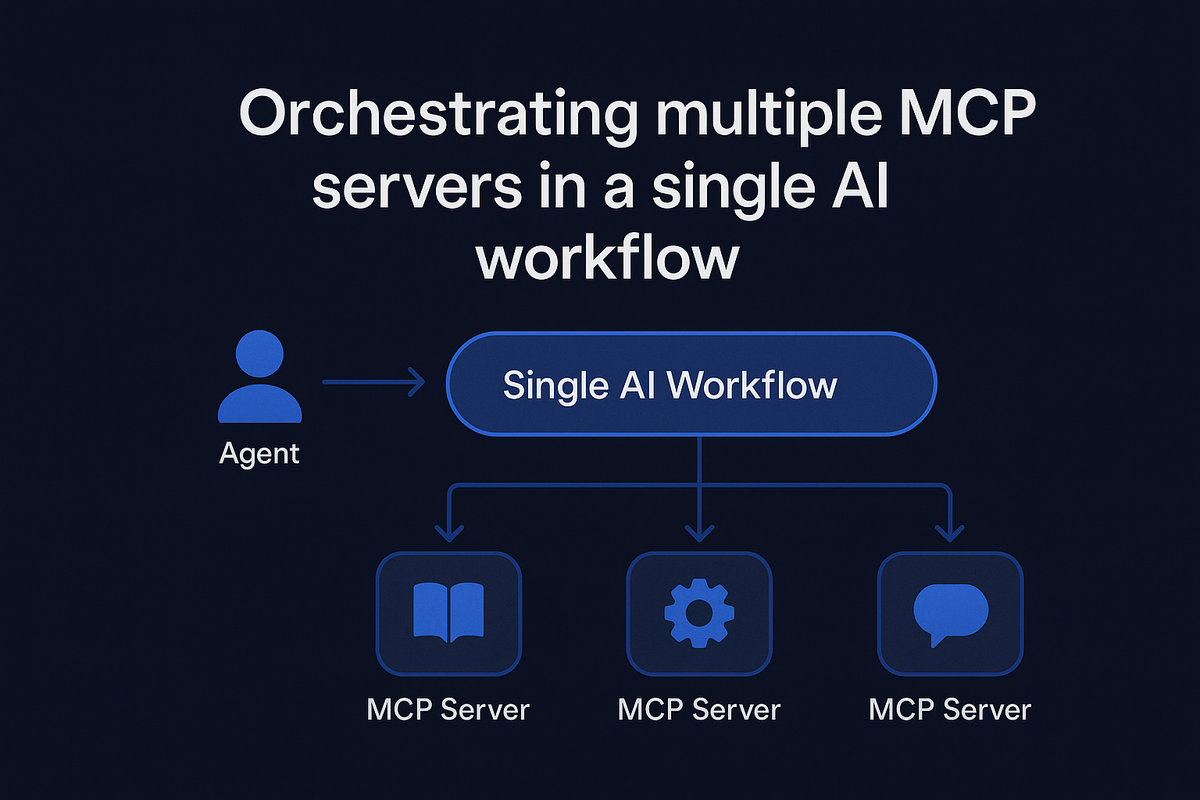

Orchestrating multiple MCP servers in a single AI workflow

Discover how centralized governance simplifies authentication and policy across MCP servers

MCP servers are the connective tissue between AI agents and the tools they rely on. They allow LLMs and agents to read from databases, trigger workflows, post updates, and interact with countless external systems, all through a consistent, standardized interface.

But as teams start to run workflows that span multiple MCP servers, each hosting different sets of tools, a new layer of complexity emerges. Authenticating to each server separately, managing scattered permissions, and keeping policies consistent across environments quickly turn into operational overhead.

The multi-server orchestration challenge

In a simple setup, an agent might connect to a single MCP server and run a handful of tools it exposes. But in real-world deployments, the toolset an agent needs is rarely confined to one server.

Consider an incident-response workflow:

- MCP Server A hosts a knowledge base search tool.

- MCP Server B connects to the team’s issue tracker.

- MCP Server C integrates with a messaging platform.

The agent needs to query recent incidents, create a ticket for the new issue, and post an update, all in one flow. Without orchestration, this means:

- Authenticating to each MCP server independently.

- Applying and updating access rules in multiple places.

- Keeping policies in sync as tools or agents change.

- Managing separate logs and audits for each server.

As the number of servers and tools grows, so does the likelihood of policy drift, where different servers enforce slightly different rules. The result is fragmented control, inconsistent permissions, and a higher risk of unauthorized or unintended actions.

Governance and authentication are the missing layer

In the context of MCP servers, governance means more than just “who can log in.” It’s about defining and consistently enforcing the rules for which agents can access which tools, under what conditions, and in which environments.

It includes setting limits, ensuring compliance, and maintaining full visibility over every interaction.

Authentication is the front door: verifying that the calling agent is who it claims to be before it can invoke any tool.

Without a unifying layer, governance and authentication become distributed problems. Policies may differ from server to server. Credentials might be managed inconsistently. And when something needs to change, such as revoking a tool permission or adjusting a rate limit, the change has to be made in multiple places, increasing both operational cost and the chance of mistakes.

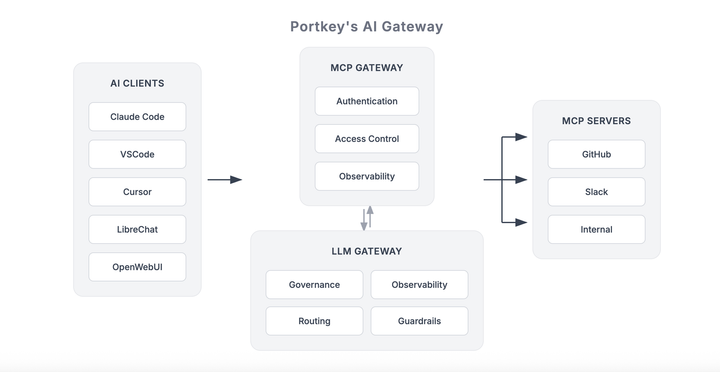

A centralized governance and authentication layer changes the equation. It gives you one place to define access policies, validate requests, and manage credentials, no matter how many MCP servers are in play.

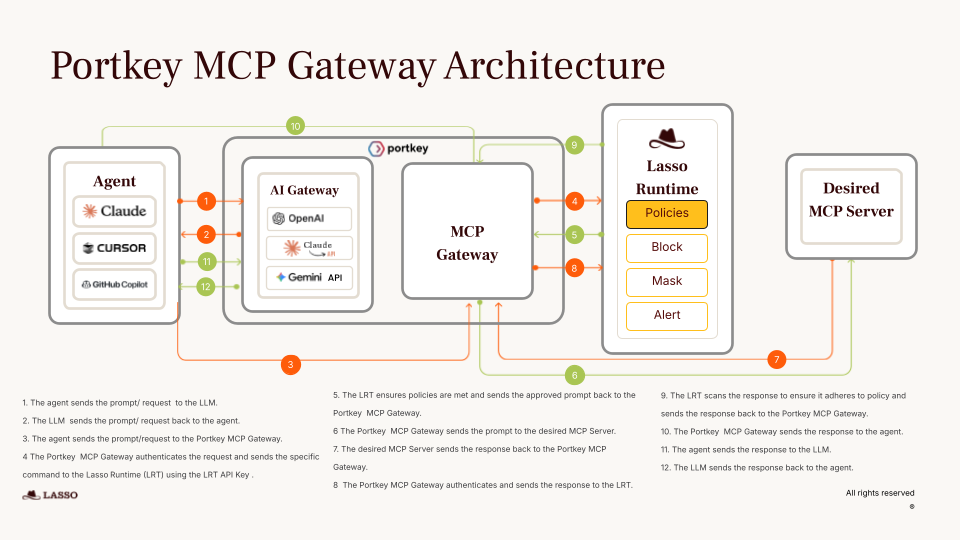

Centralized governance for MCP servers

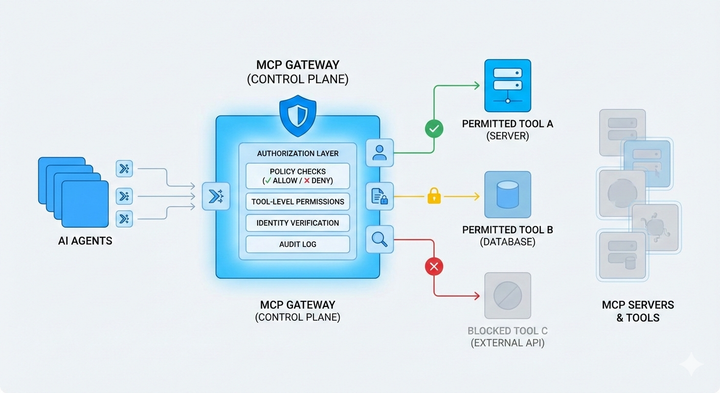

A centralized governance layer acts as the control plane for all MCP server activity. Instead of each server handling authentication and authorization in isolation, every agent request flows through this central point before it reaches any tool.

In this model:

- Authenticate once – An agent proves its identity to the control plane, which then issues short-lived, scoped credentials for any MCP server it’s authorized to use.

- Govern once – Access policies are defined in a single location and applied uniformly, regardless of where the tools are hosted.

- Audit once – All tool calls and policy decisions are logged in one system, creating a unified record for compliance, debugging, and analytics.
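The three steps above can be sketched as a single decision function. This is a minimal illustration rather than any particular product's implementation: the `ControlPlane` class, its in-memory stores, and the agent, server, and tool names are all hypothetical, and a shared secret stands in for whatever identity mechanism a real deployment would use.

```python
import hmac
from dataclasses import dataclass, field


@dataclass
class ControlPlane:
    # Hypothetical in-memory stores; a real deployment would back these with
    # an identity provider, a policy database, and durable log storage.
    agent_keys: dict[str, str]                 # agent_id -> shared secret
    policies: dict[str, set[tuple[str, str]]]  # agent_id -> {(server, tool)}
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, secret: str, server: str, tool: str) -> bool:
        # Authenticate once: constant-time comparison against the stored secret.
        expected = self.agent_keys.get(agent_id)
        authenticated = expected is not None and hmac.compare_digest(
            expected.encode(), secret.encode()
        )

        # Govern once: one policy store covers tools on every server.
        if not authenticated:
            allowed, reason = False, "unauthenticated"
        elif (server, tool) in self.policies.get(agent_id, set()):
            allowed, reason = True, "policy match"
        else:
            allowed, reason = False, "no matching policy"

        # Audit once: every decision, allow or deny, lands in the same log.
        self.audit_log.append({
            "agent": agent_id,
            "server": server,
            "tool": tool,
            "decision": "allow" if allowed else "deny",
            "reason": reason,
        })
        return allowed
```

Denied requests are logged alongside allowed ones, so the audit trail records attempted access as well as successful calls.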

The benefits compound quickly:

- Consistency – No more policy drift between servers.

- Speed – Onboarding a new tool or agent means updating one policy store, not five different servers.

- Security – Short-lived, server-specific credentials reduce the blast radius if a token is compromised.

- Visibility – A complete view of which agents used which tools, when, and with what parameters.
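To make the "short-lived, server-specific credentials" point concrete, here is one possible sketch using an HMAC-signed token from Python's standard library. The token layout, the claim names (`sub`, `aud`, `tools`, `exp`), and the hard-coded signing key are assumptions chosen for illustration; a production system would more likely rely on an established token standard and a managed key.

```python
import base64
import hashlib
import hmac
import json
import time

SIGNING_KEY = b"demo-only-signing-key"  # hypothetical; never hard-code real keys


def issue_credential(agent_id, server, tools, ttl_seconds=300, now=None):
    """Issue a token valid for one server, a fixed tool list, and a short time."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "aud": server, "tools": sorted(tools),
              "exp": now + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify_credential(token, server, tool, now=None):
    """Accept the token only if it is untampered, unexpired, and in scope."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False  # no separator, so not a token we issued
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return claims["aud"] == server and tool in claims["tools"] and now < claims["exp"]
```

Because the audience and tool list are part of the signed payload, a token leaked from one server cannot be replayed against another server or used for a different tool, and it stops working once the expiry passes.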

Governing MCP server tools

When orchestration spans multiple servers, tools become the unit of control. Governance works best when each tool is treated as a first-class resource with clear metadata, risk posture, and policy hooks.

Make tools addressable and auditable

- Stable identity: each tool has a canonical name, server identifier, and version.

- Capability tags: search, write, admin, destructive. Useful for broad policy rules.

- Provenance: who published the server, when it was last updated, and any attestations.

- Ownership: a team or owner accountable for lifecycle and incident response.
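One way to capture this metadata is a small immutable record per tool. The `ToolDescriptor` class, its field names, and the `server/name@version` identifier format below are illustrative assumptions, not part of the MCP specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolDescriptor:
    # Stable identity
    name: str
    server_id: str
    version: str
    # Capability tags such as "search", "write", "admin", "destructive"
    capability_tags: frozenset[str]
    # Provenance
    publisher: str
    last_updated: str  # ISO 8601 date string
    # Ownership
    owner: str

    @property
    def tool_id(self) -> str:
        """Canonical identifier combining server, tool name, and version."""
        return f"{self.server_id}/{self.name}@{self.version}"


def tools_with_tag(registry, tag):
    """Return the canonical IDs of every registered tool carrying a tag."""
    return [tool.tool_id for tool in registry if tag in tool.capability_tags]
```

With tags attached to each descriptor, a broad rule such as "require approval for anything tagged destructive" can be written once and applied to tools on any server.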

Classify risk and data domains

- Risk tiers: read, mutate, destructive. Tie tiers to stricter approval and monitoring.

- Data domains: customer data, code, finance, HR. Limit cross-domain flows by default.

- Environment scope: dev, stage, prod. Most tools should not span all three.
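These three classifications combine naturally into a single policy check. The sketch below assumes a simple model in which each agent has a maximum risk tier and a set of permitted data domains; the `RiskTier`, `Tool`, `Agent`, and `evaluate` names, and the rule that destructive calls in prod need approval, are hypothetical choices for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskTier(IntEnum):
    READ = 1
    MUTATE = 2
    DESTRUCTIVE = 3


@dataclass(frozen=True)
class Tool:
    tier: RiskTier
    data_domain: str
    environments: frozenset[str]


@dataclass(frozen=True)
class Agent:
    max_tier: RiskTier
    data_domains: frozenset[str]


def evaluate(agent: Agent, tool: Tool, environment: str) -> str:
    """Return "allow", "deny", or "needs_approval" for one proposed call."""
    if environment not in tool.environments:
        return "deny"            # tool is not scoped to this environment
    if tool.data_domain not in agent.data_domains:
        return "deny"            # cross-domain access is off by default
    if tool.tier > agent.max_tier:
        return "deny"            # agent is not cleared for this risk tier
    if tool.tier is RiskTier.DESTRUCTIVE and environment == "prod":
        return "needs_approval"  # stricter handling for the highest tier
    return "allow"
```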

Define contracts and guardrails

- Input and output schemas: structured contracts allow validation and redaction.

- Allowed arguments: enforce ranges, patterns, and allowlists for high-risk fields.

- Side-effect flags: mark tools that create, update, or delete to trigger extra checks.
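As a rough illustration of argument guardrails, the following validator checks a call against a per-tool contract before it is forwarded. The `RULES` table, the `create_ticket` tool, and its argument names are invented for the example; a real deployment might derive the contract from each tool's published input schema instead.

```python
import re

# Hypothetical contract for one tool: each argument has exactly one rule.
RULES = {
    "create_ticket": {
        "priority": {"allowlist": ("low", "medium", "high")},
        "title": {"pattern": r"[\w \-:.,]{1,120}"},
        "estimate_hours": {"range": (0, 40)},
    }
}


def validate_arguments(tool, args):
    """Return a list of contract violations; an empty list means the call passes."""
    rules = RULES.get(tool)
    if rules is None:
        return [f"no contract registered for tool {tool!r}"]

    errors = [f"unexpected argument {name!r}" for name in args if name not in rules]
    for name, rule in rules.items():
        if name not in args:
            errors.append(f"missing argument {name!r}")
            continue
        value = args[name]
        if "allowlist" in rule and value not in rule["allowlist"]:
            errors.append(f"{name!r} is not an allowed value")
        if "pattern" in rule and not (
            isinstance(value, str) and re.fullmatch(rule["pattern"], value)
        ):
            errors.append(f"{name!r} does not match the required pattern")
        if "range" in rule:
            low, high = rule["range"]
            if not (isinstance(value, (int, float)) and low <= value <= high):
                errors.append(f"{name!r} is outside the range {low} to {high}")
    return errors
```

Rejecting unknown tools and unexpected arguments by default keeps the contract closed: anything not explicitly described is treated as a violation.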

Apply quotas and rate limits

- Per-agent, per-tool, and per-environment controls prevent runaway usage.

- Burst vs sustained limits keep critical systems responsive during incidents.

- Approval gates for sensitive actions, with a human in the loop when needed.
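Burst and sustained limits are commonly implemented with a token bucket, sketched here with one bucket per (agent, tool, environment) key. The class names and parameters are assumptions for illustration, and the clock is passed in explicitly so the behavior is easy to reason about; approval gates are not covered by this sketch.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    capacity: float           # burst limit: the most calls allowed back to back
    refill_per_second: float  # sustained limit: the long-run call rate
    tokens: float
    updated_at: float

    def try_acquire(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the burst capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = max(self.updated_at, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """Keeps an independent bucket for each (agent, tool, environment) key."""

    def __init__(self, burst: float, sustained_per_second: float):
        self.burst = burst
        self.sustained = sustained_per_second
        self._buckets: dict[tuple[str, str, str], TokenBucket] = {}

    def allow(self, agent: str, tool: str, environment: str, now: float) -> bool:
        key = (agent, tool, environment)
        bucket = self._buckets.get(key)
        if bucket is None:
            # New keys start with a full bucket.
            bucket = TokenBucket(self.burst, self.sustained, self.burst, now)
            self._buckets[key] = bucket
        return bucket.try_acquire(now)
```

Keying the buckets this way means one agent exhausting its quota on a single tool does not affect other agents, other tools, or other environments.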

Versioning and rollout policy

- Pin versions for production workflows to ensure repeatability.

- Staged rollout: dev → stage → prod, with automatic rollback when an error budget is exceeded.

- Sunset rules: deprecate old tools and enforce migration deadlines.
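Version pinning and sunset rules can be expressed as a small resolver. This sketch assumes dotted numeric version strings and a hypothetical `pins` mapping keyed by environment and tool; it does not attempt to model staged rollout or automatic rollback.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn "1.10.0" into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def resolve_version(tool, environment, pins, available, sunset=frozenset()):
    """Pick the tool version a workflow should call in a given environment.

    pins:      {(environment, tool): version} for explicitly pinned workflows
    available: versions currently published for this tool
    sunset:    versions that have passed their migration deadline
    """
    pinned = pins.get((environment, tool))
    if pinned is not None:
        if pinned in sunset:
            raise ValueError(f"{tool}@{pinned} is past its sunset date")
        if pinned not in available:
            raise ValueError(f"{tool}@{pinned} is not published")
        return pinned
    if environment == "prod":
        # Production must be repeatable, so an unpinned tool is an error.
        raise ValueError(f"{tool} must be pinned in prod")
    candidates = [v for v in available if v not in sunset]
    if not candidates:
        raise ValueError(f"no usable version of {tool}")
    return max(candidates, key=parse_version)
```

Comparing parsed tuples rather than raw strings matters here: as strings, "1.9.3" sorts after "1.10.0", which would select the wrong release.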

Observability and audit

- Uniform telemetry: success, failure, latency, input size, and data domain tags.

- Traceability: every call includes who, what tool, which server, and why it was allowed.

- Outcome review: periodic audits on high-risk tools to validate policy efficacy.
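A uniform audit record might look like the following. The `AuditRecord` fields mirror the telemetry and traceability items above, but the exact names, the JSON-lines serialization, and the `failure_rate` helper are assumptions made for the sake of the example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditRecord:
    timestamp: float
    agent_id: str      # who made the call
    tool: str          # what tool was invoked
    server_id: str     # which server hosts it
    reason: str        # why the call was allowed, e.g. the matching policy
    outcome: str       # "success" or "failure"
    latency_ms: float
    input_bytes: int
    data_domain: str


def to_json_line(record: AuditRecord) -> str:
    """Serialize one record as a single line with a stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


def failure_rate(records, tool: str) -> float:
    """Share of calls to a tool that failed; 0.0 if the tool was never called."""
    calls = [r for r in records if r.tool == tool]
    if not calls:
        return 0.0
    return sum(r.outcome == "failure" for r in calls) / len(calls)
```

Because every server's calls share one record shape, a periodic outcome review can be a simple query over the log rather than a per-server exercise.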

The result is a shared language for control: tools are discoverable, classifiable, and governable across all servers, without rewriting server-side logic or duplicating policies.

Governance also needs to define which agents can use which tools, in which environments, and under what conditions. Without this, even the most carefully classified tools can be misused.

By centralizing governance, administrators don’t have to define permissions separately for each MCP server. Instead:

- One policy store defines which agents can use which tools.

- Scoped credentials are issued dynamically, allowing only the approved calls.

The promise of MCP is that a single agent can access a wide variety of tools. In practice, the most valuable workflows span multiple MCP servers.

With centralized governance, this becomes safe and seamless: agents authenticate once, policies are applied consistently, and every tool call, no matter which server it lives on, is governed, authorized, and logged through a single control plane.

Benefits of this model

- Consistency: each tool call, whether on Server A, B, or C, goes through the same policy checks.

- Safety: no tool is ever invoked without an explicit policy decision, preventing accidental overreach.

- Efficiency: agents can run multi-server workflows without redundant re-authentication.

- Traceability: one audit log covers the entire flow, making it clear which tools were invoked, why, and under what authority.

Centralized governance as the next step

As MCP adoption grows, orchestrating across multiple servers will become the norm. Centralized governance provides the foundation for that future: a single layer that handles authentication, policy, and audit across every tool.

The next step is standardizing these governance patterns so multi-server workflows are not just possible, but a built-in part of how MCP is used at scale.

At Portkey, we’re building an MCP Hub, a centralized governance module for MCP that makes this vision real. If you’d like a sneak peek, you can book a demo and see how centralized governance works in practice.