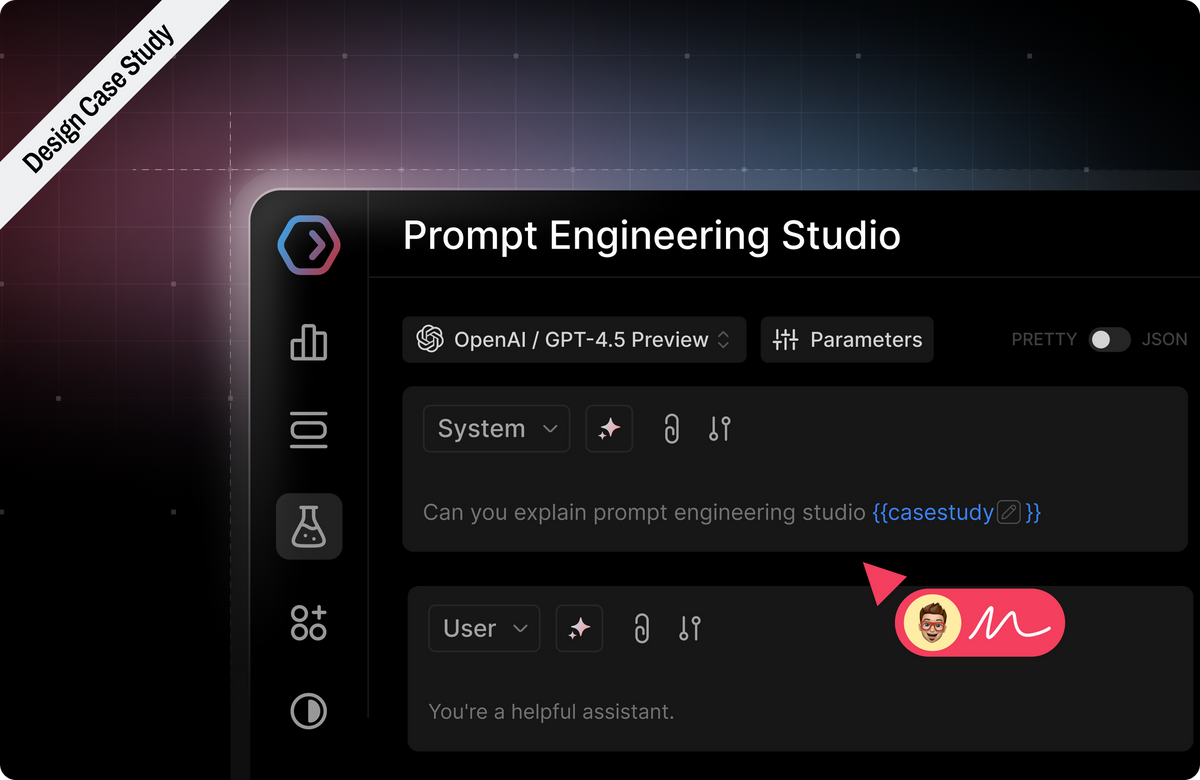

Portkey Prompt Engineering Studio: A User-Centered Design Case Study

Crafted with thoughtful design, our Prompt Engineering Studio empowers users to create, refine, and optimize prompts effortlessly, blending usability with innovation.

We transformed Portkey's Prompt Engineering Studio through user-centered design principles, focusing on enterprise user workflows. Our redesign created a more intuitive, scalable solution that makes prompt engineering faster and more collaborative for enterprise teams.

As part of this transformation, we introduced features such as side-by-side prompt testing, simplified AI provider selection, and streamlined tool integrations to improve usability and accelerate prompt creation.

Project Overview

- Feature: Prompt Engineering Studio

- My Role: Design Director

- Tools: Figma, Miro, Linear, Slack, and PostHog

- Project Duration: 3 Months

- Collaborators: Myself, 1 Developer, Stakeholders (CEO, CTO)

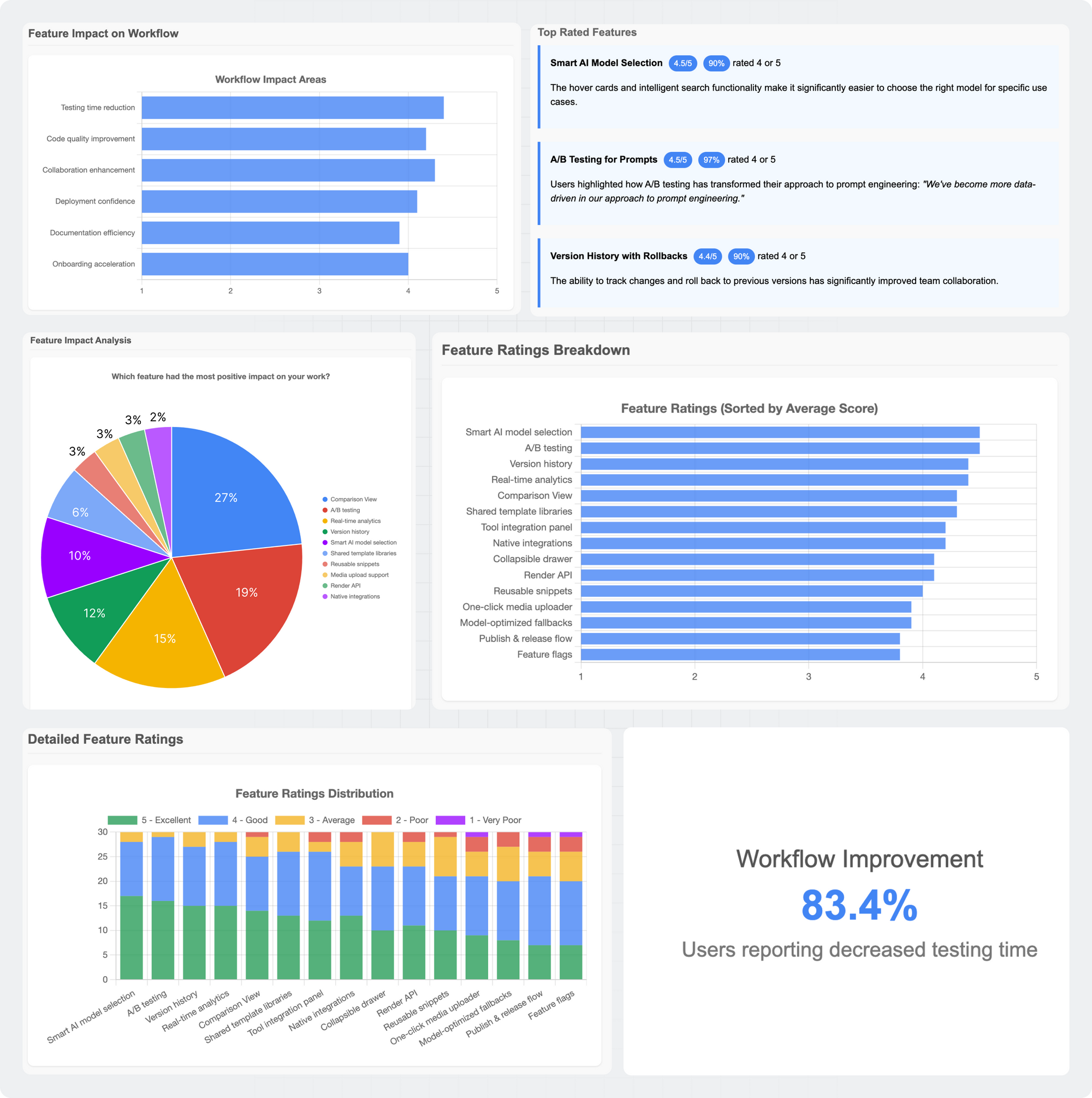

The Prompt Engineering Studio redesign targeted key user pain points to streamline workflows and enhance usability. Our approach combined user feedback with data analysis to prioritize improvements that would deliver the greatest impact.

Problems

- Inefficient Comparison Process: Users faced delays in prompt testing due to the inability to compare outputs across AI providers and saved prompts simultaneously, disrupting workflow.

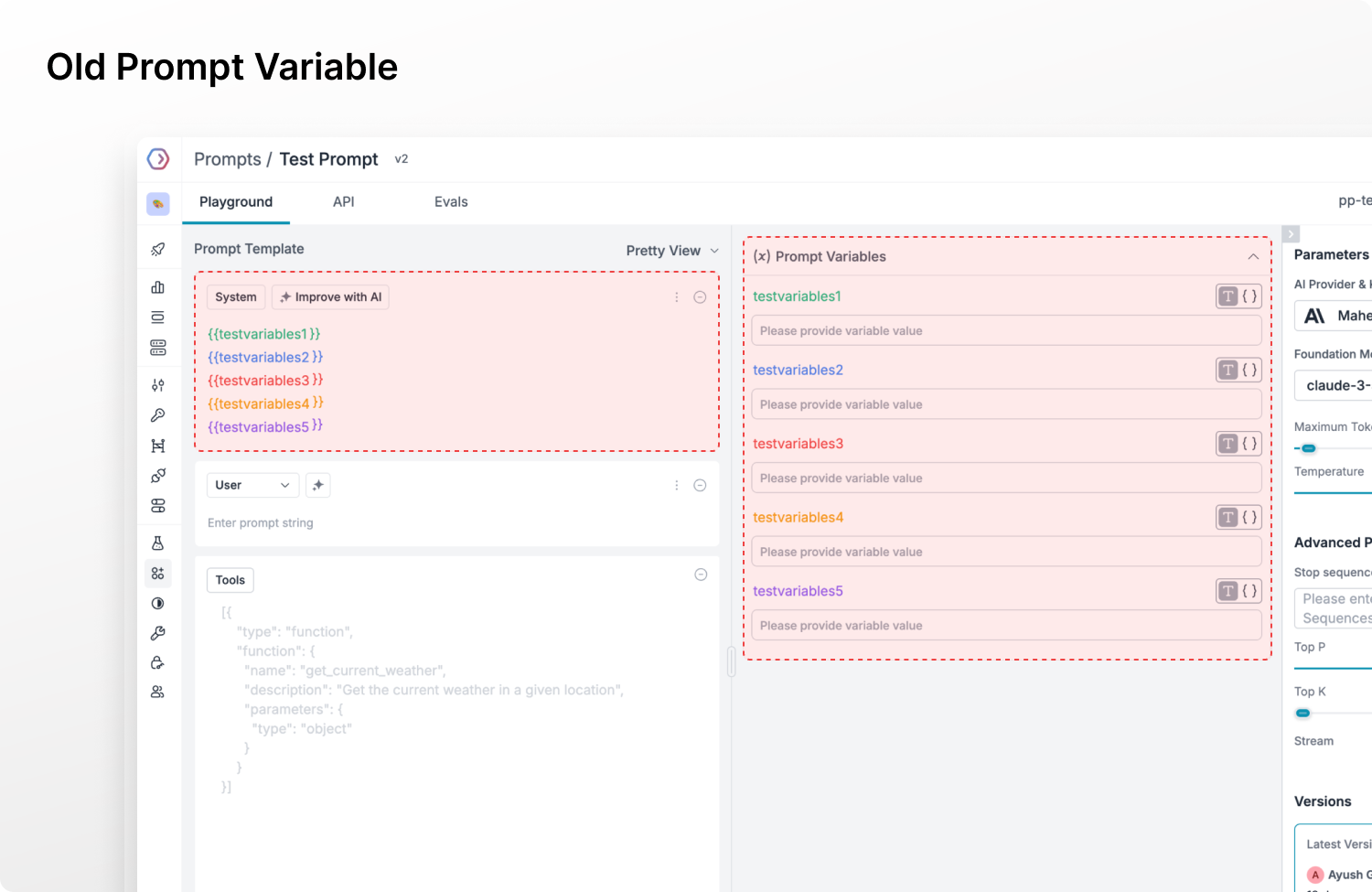

- Cluttered Interface: The overwhelming display of current prompt variables and hidden parameters caused cognitive overload, reducing productivity.

- Challenging Model Selection: Users struggled to find suitable AI models due to poor search functionality and limited model information.

- Limited Multimodal Support: The lack of media upload options restricted users from testing multimodal prompts, limiting advanced AI exploration.

- Workflow Friction: Advanced users experienced delays due to disjointed tool integrations and complex prompt configurations.

Solution

- Dynamic Comparison View: Enabled side-by-side testing across 1,600+ AI models, allowing instant output comparison and faster iteration.

- Focused Interface Design: Simplified prompt variables and parameters with collapsible drawers, reducing clutter and improving user focus.

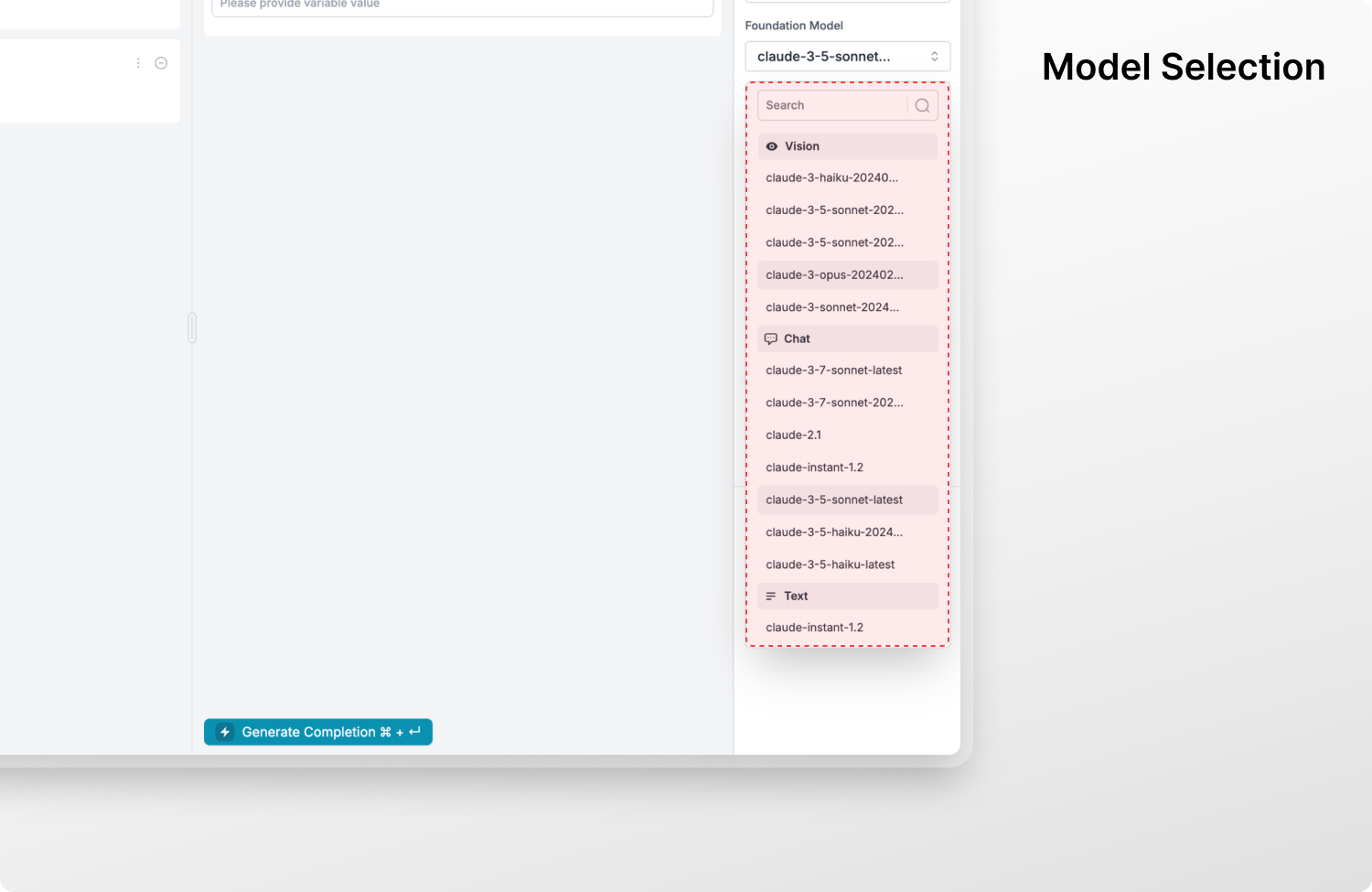

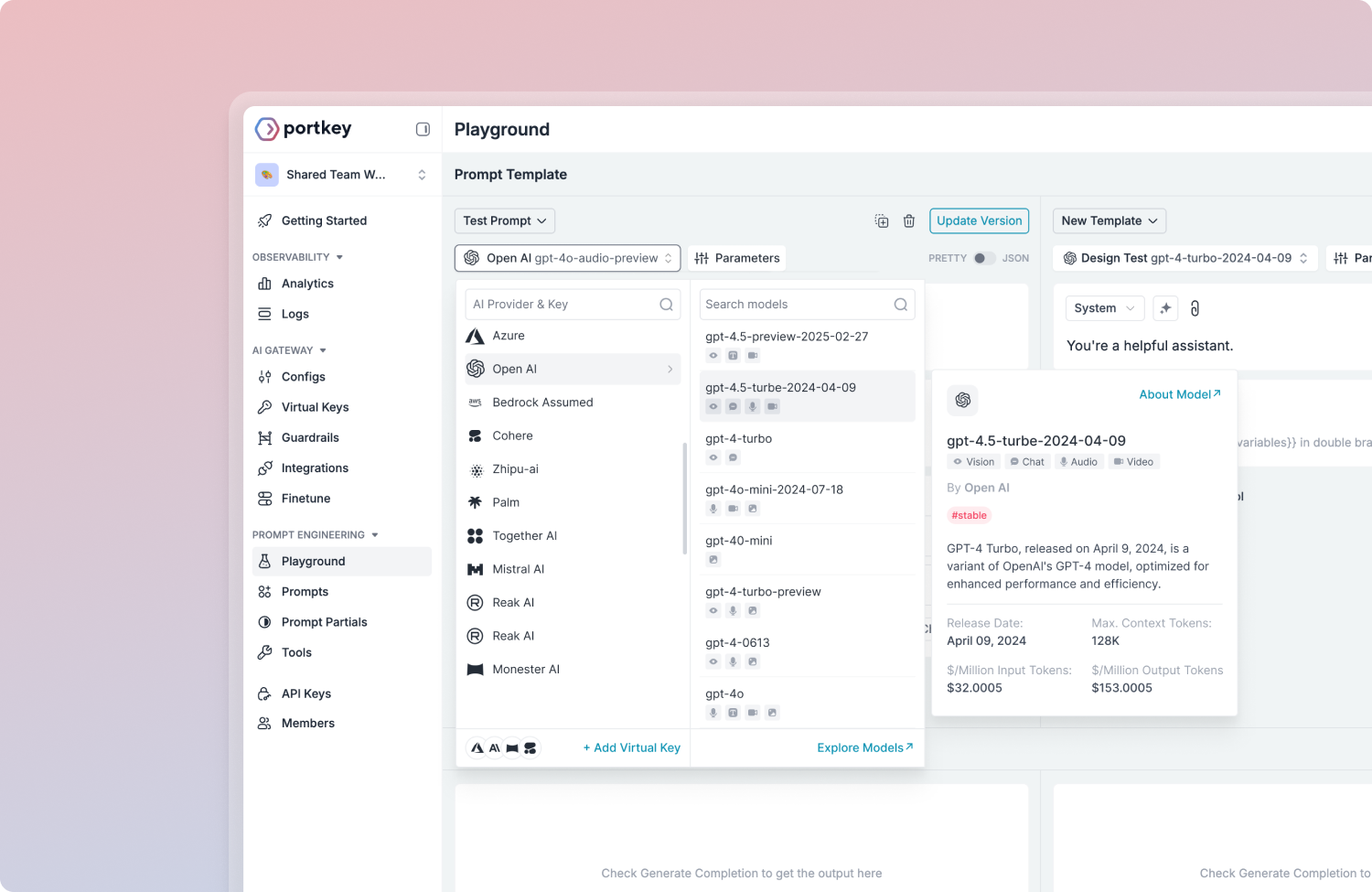

- Intelligent Model Selection: Introduced smart search with hover cards for quick access to relevant model specifications.

- Seamless Media Integration: Added a one-click uploader for images, audio, and video, enhancing multimodal prompt testing.

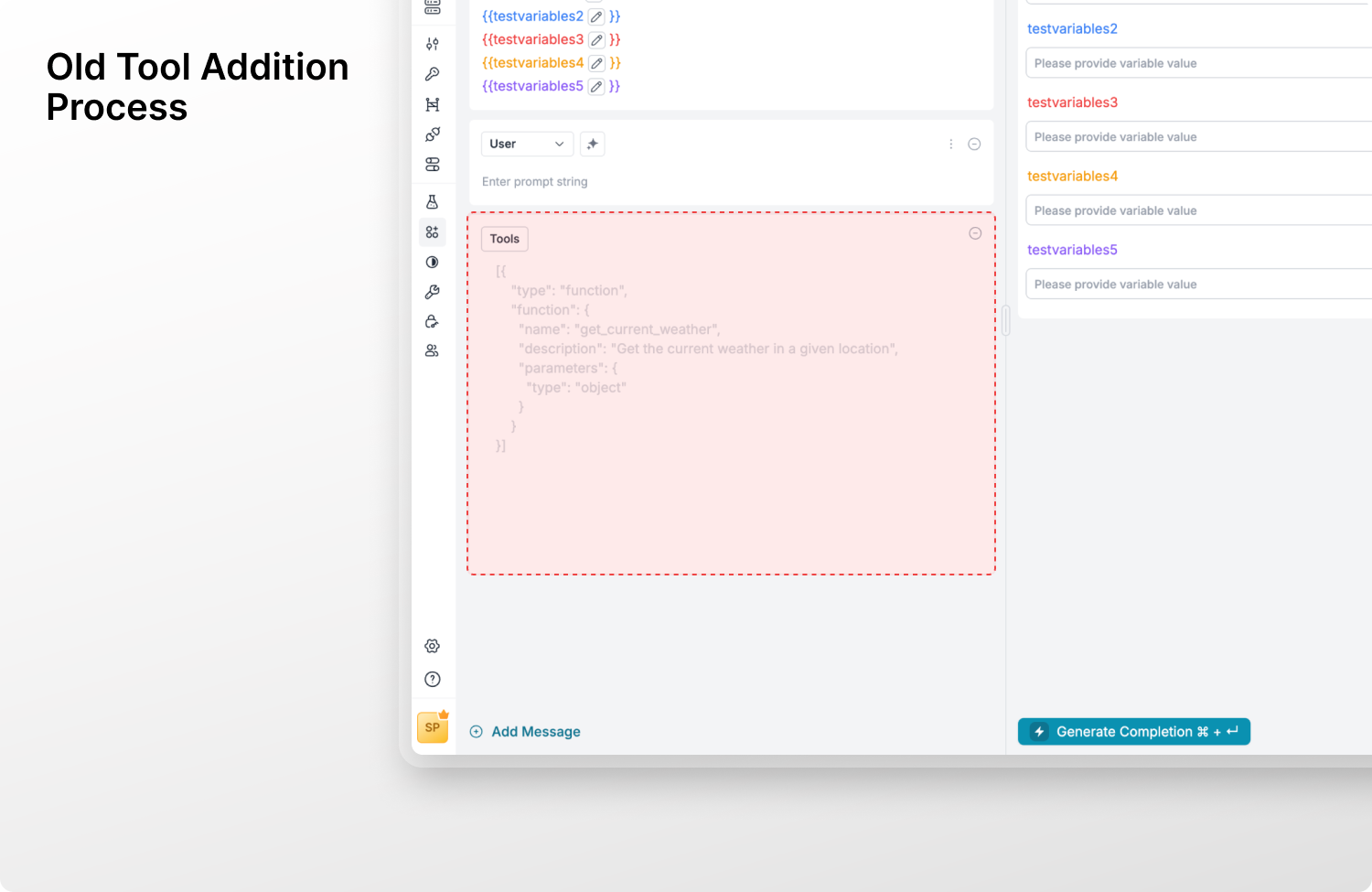

- Streamlined Workflow: Unified tool integration into a single panel, simplifying external connections and reducing customization steps.

Where Challenges Ignite Potential

70% Faster Testing

Accelerated testing, driving engagement and experimentation.

30% More Prompts Tested

Increased prompt testing per session, fostering rapid experimentation.

40% Quicker Model Selection

Reduced model selection time by 40%, speeding up decision-making.

22% More Media Support

Enhanced media testing, integrating images, audio, and video.

Design Process

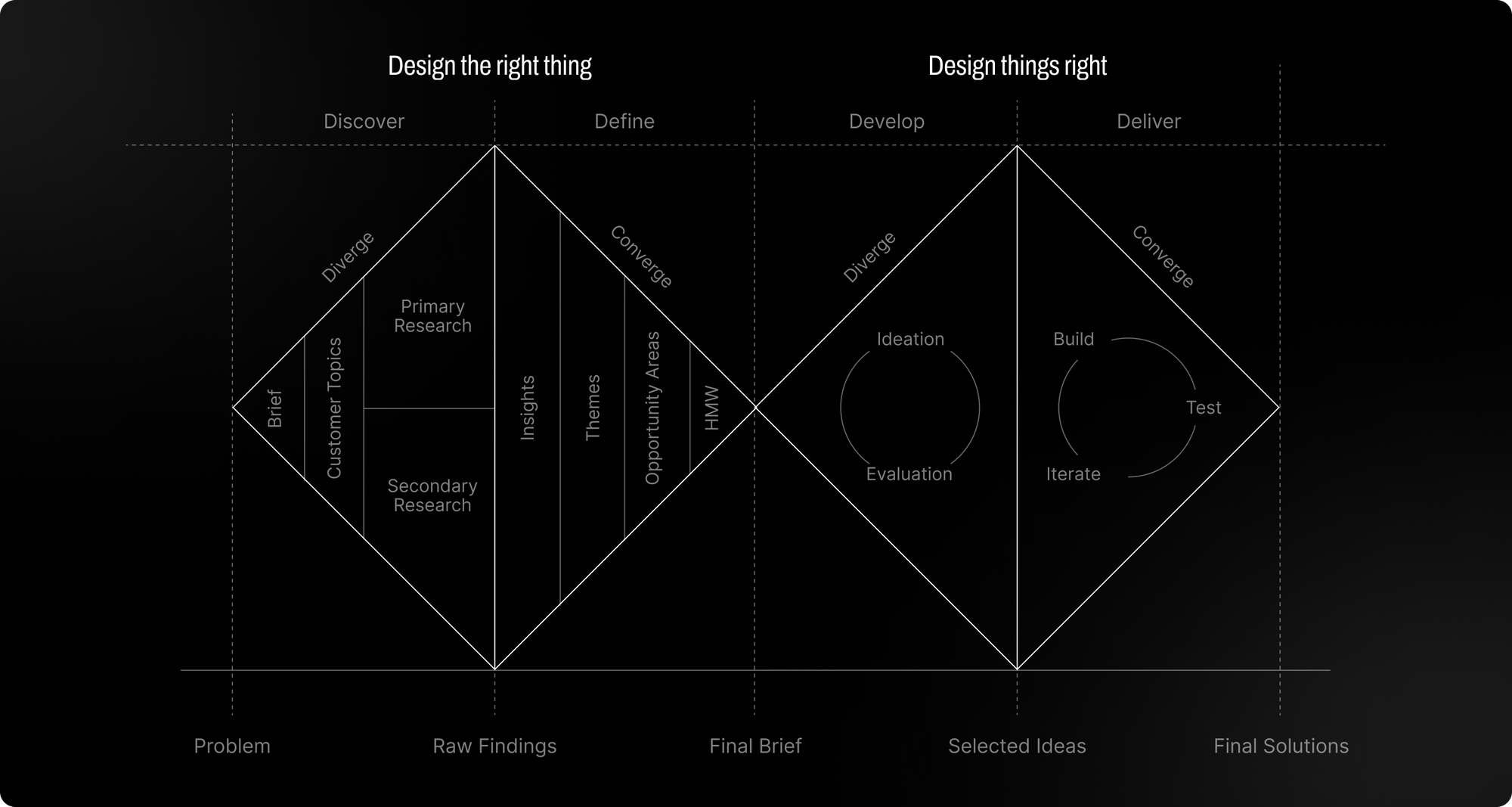

Our design process, guided by the Double Diamond framework, ensured a comprehensive exploration of user needs and the development of effective solutions.

Research revealed five key challenges: inefficient comparison of prompts, cluttered interfaces, difficulty in model selection, lack of multimodal support, and workflow friction due to disjointed tools. These insights highlight areas for improvement.

Research Methods

We conducted user interviews, analyzed usage analytics, and benchmarked against competitors to understand user pain points and identify improvement opportunities. Our research focused on addressing workflow inefficiencies and unmet needs in the prompt engineering process. We explored areas like usability, feature adoption, pain points, and feature validation.

Using both qualitative and quantitative methods, we conducted interviews with 5-7 engineers to uncover challenges, usability testing to spot friction points, and surveys to collect feedback on ease of use and feature importance. These insights guided our efforts to improve the feature.

Key Insights

- Users struggled with the lack of A/B testing (what we call side-by-side comparison) across AI providers and saved prompts.

- Prompt configuration felt cumbersome due to the overwhelming display of variables and hidden parameters.

- AI provider selection lacked context, leading to confusion when choosing the right model.

- The interface was not conducive to multimodal testing, limiting users' ability to test with media inputs.

- Tool integration required multiple steps, making the workflow unnecessarily complex.

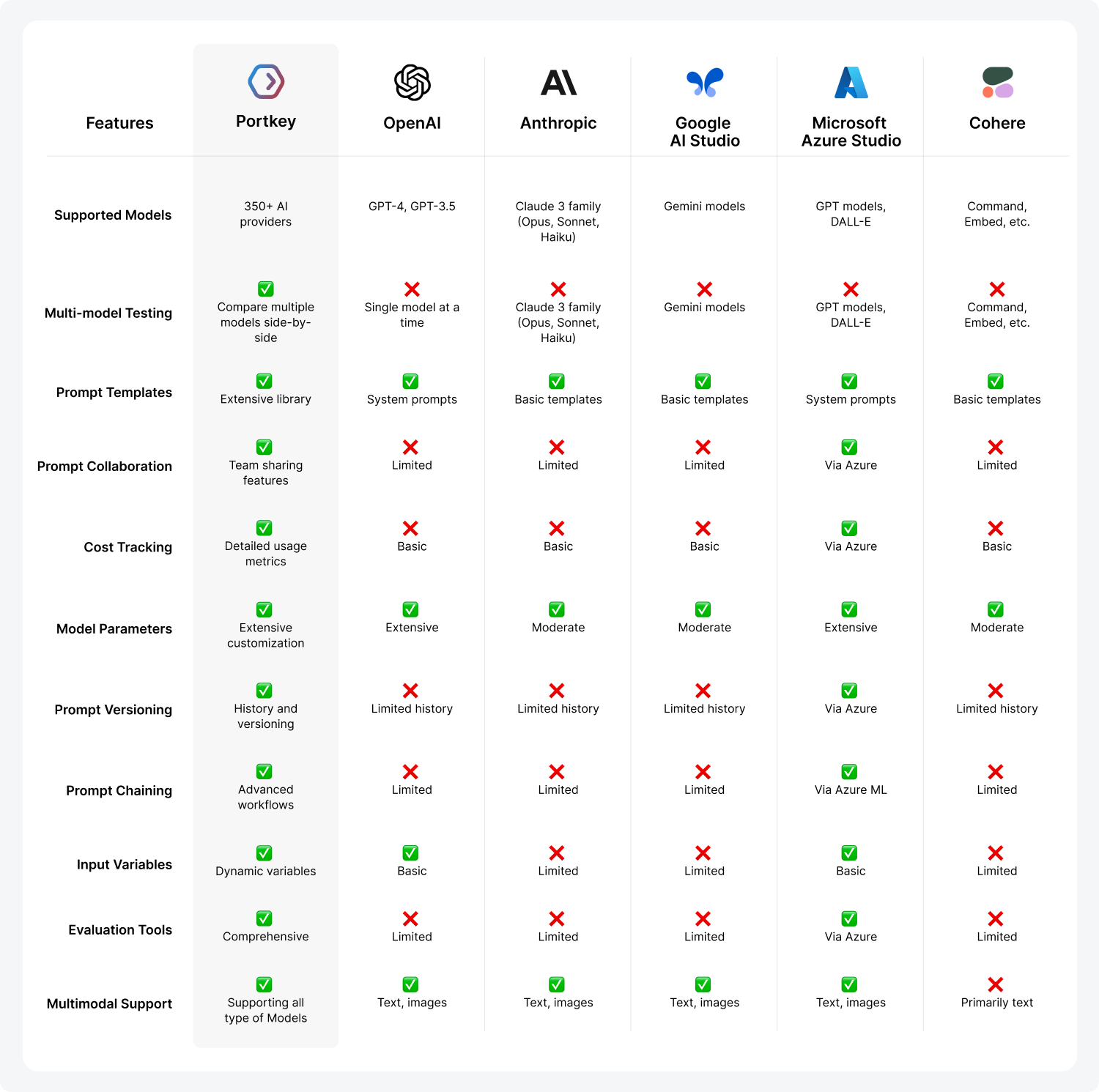

Market & Competitive Analysis

Our competitor analysis revealed opportunities for Portkey.ai to stand out with a more intuitive, feature-rich design, focusing on flexibility in areas like side-by-side comparison, multi-model testing, prompt collaboration, versioning, parameter management, and media input integration.

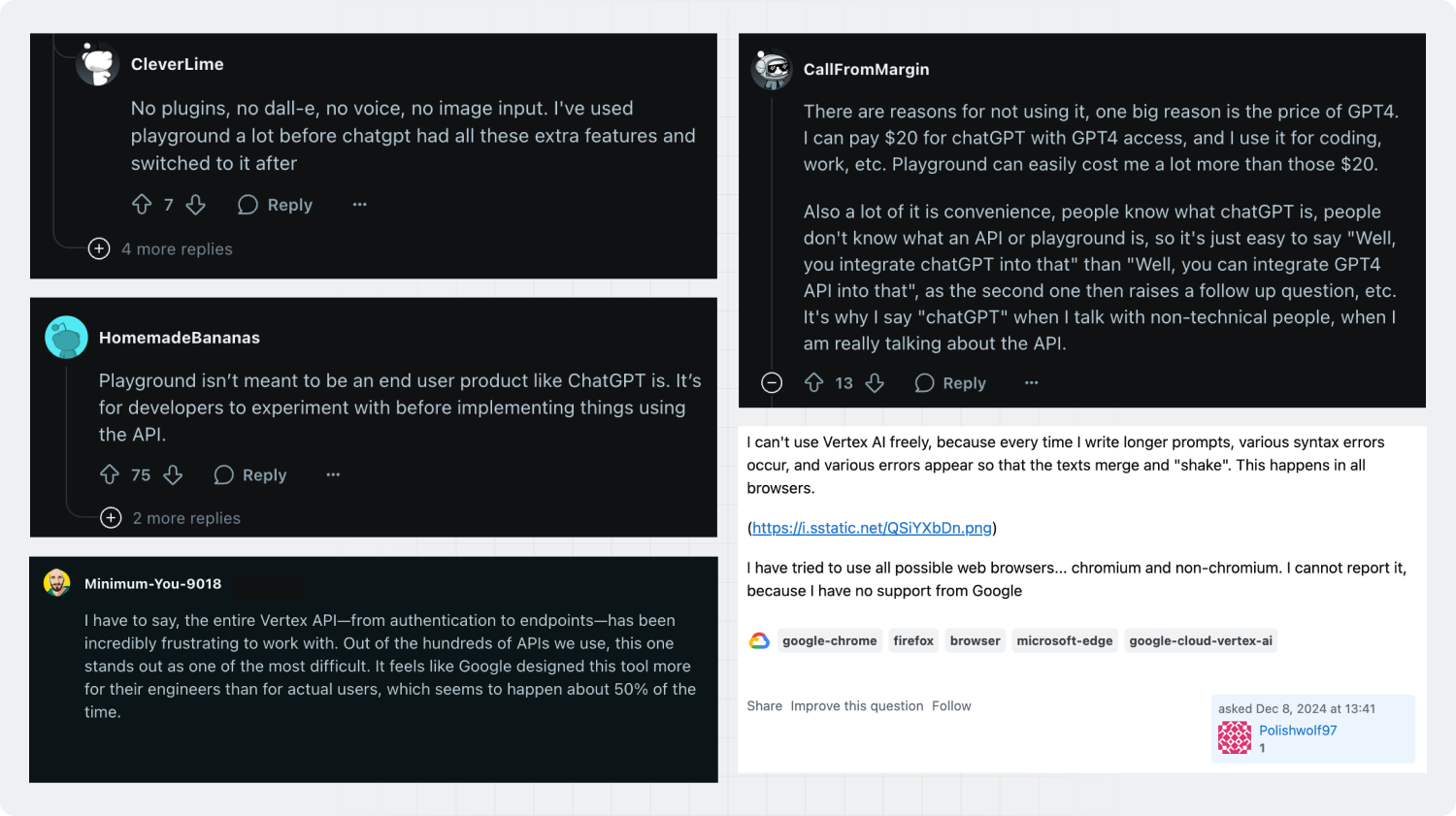

Some of the users' frustrations with the existing tools available in the market.

Challenges & Bottlenecks

To gain diverse perspectives, I met engineers of varying experience levels across Vancouver, which helped me understand their challenges and workflows in prompt engineering.

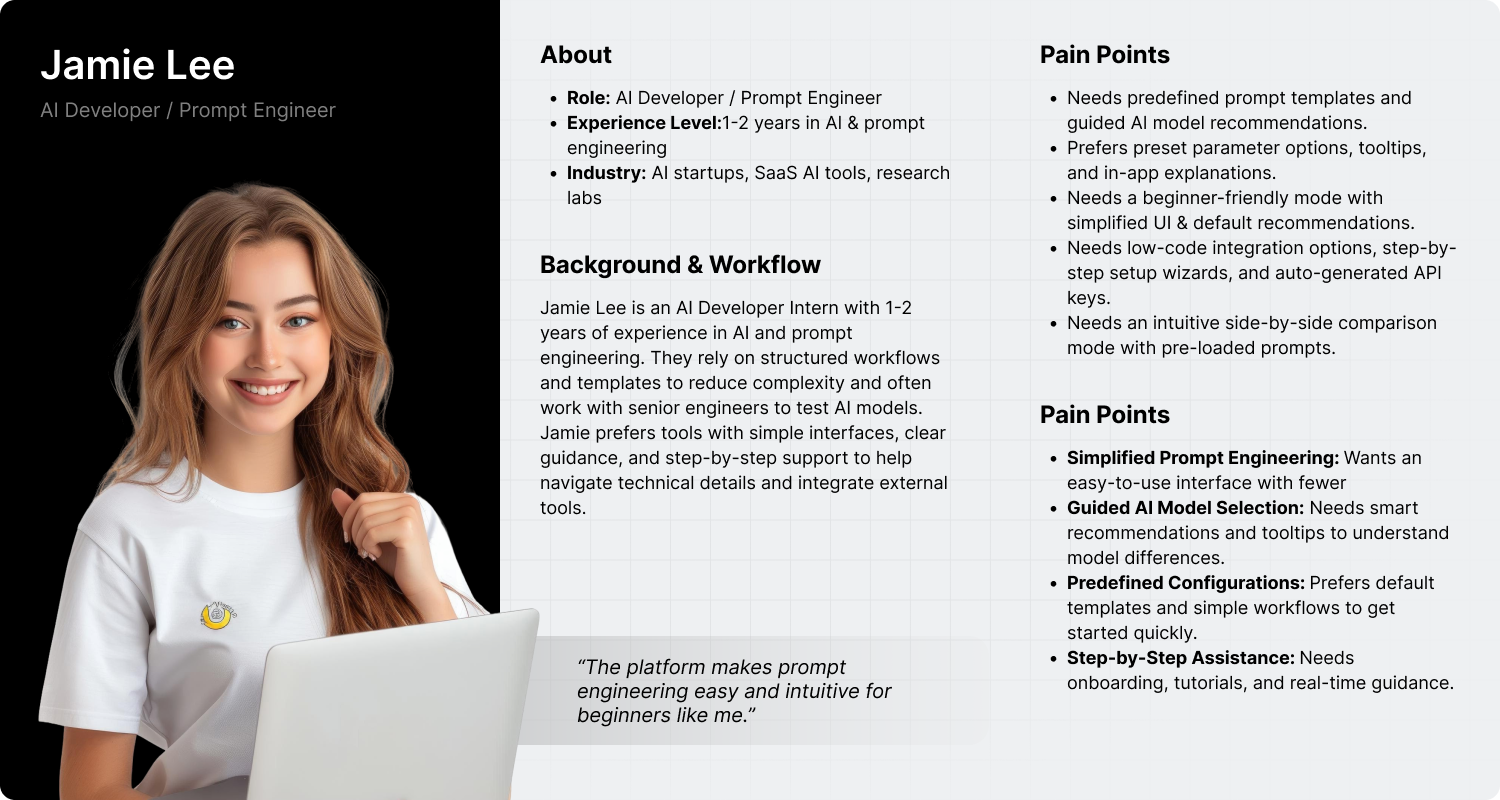

A major challenge was balancing conflicting feedback: advanced users wanted powerful, customizable features, while novices preferred a simpler, guided experience. Initially, we focused on advanced users, which may have caused us to overlook some usability issues for beginners. More targeted research with novice users could have provided better insights and improved accessibility.

In the Define phase, we focused on understanding the problem and creating a simpler, collaborative space for real-time prompt testing and adjustments. The goal was to build a tool that prioritizes speed, simplicity, and collaboration for an engaging prompt engineering experience.

Key Insights & Opportunities

Based on user feedback and insights, we’ve identified key areas where improvements can be made to enhance the overall experience. These opportunities focus on simplifying workflows, reducing complexity, and adding features that users need to work more efficiently and effectively.

- Facilitate Comparative Insights: Create an intuitive interface for comparing AI prompts across models.

- Mitigate Complexity: Simplify and reorganize cluttered interfaces to improve focus and productivity.

- Expand Multimodal Testing: Add multimedia support for testing prompts with images, audio, and video.

- Simplify Tool Onboarding: Streamline the custom tool setup process into a single, intuitive panel.

- Clarify AI Model Choices: Enhance search functionality and provide detailed model insights to help users select the right model.

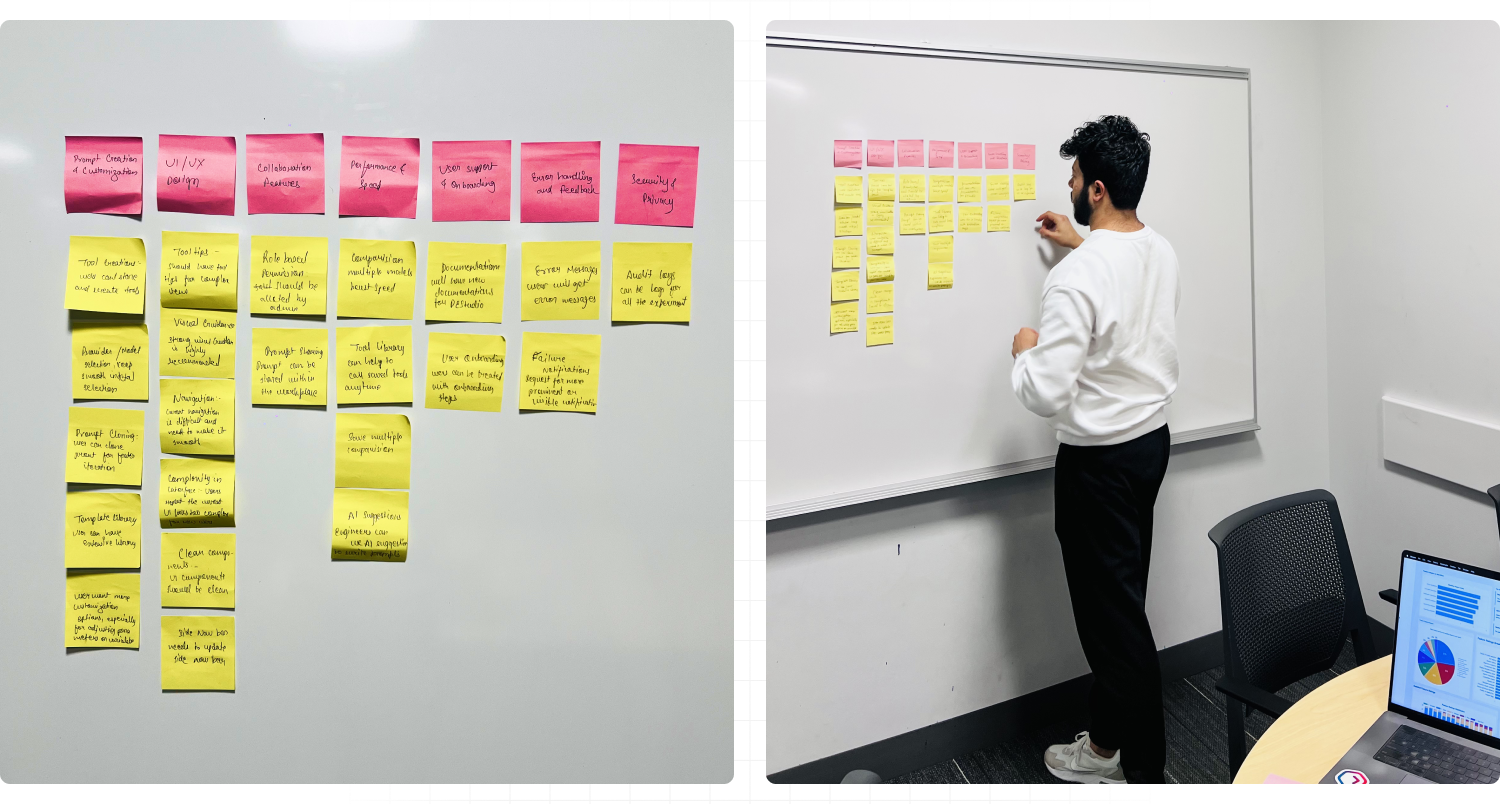

Affinity Mapping

Affinity Mapping helped us organize user feedback into key themes like customization, UI complexity, and tool integration, guiding design improvements to create a more intuitive and effective Portkey Prompt Engineering Studio design.

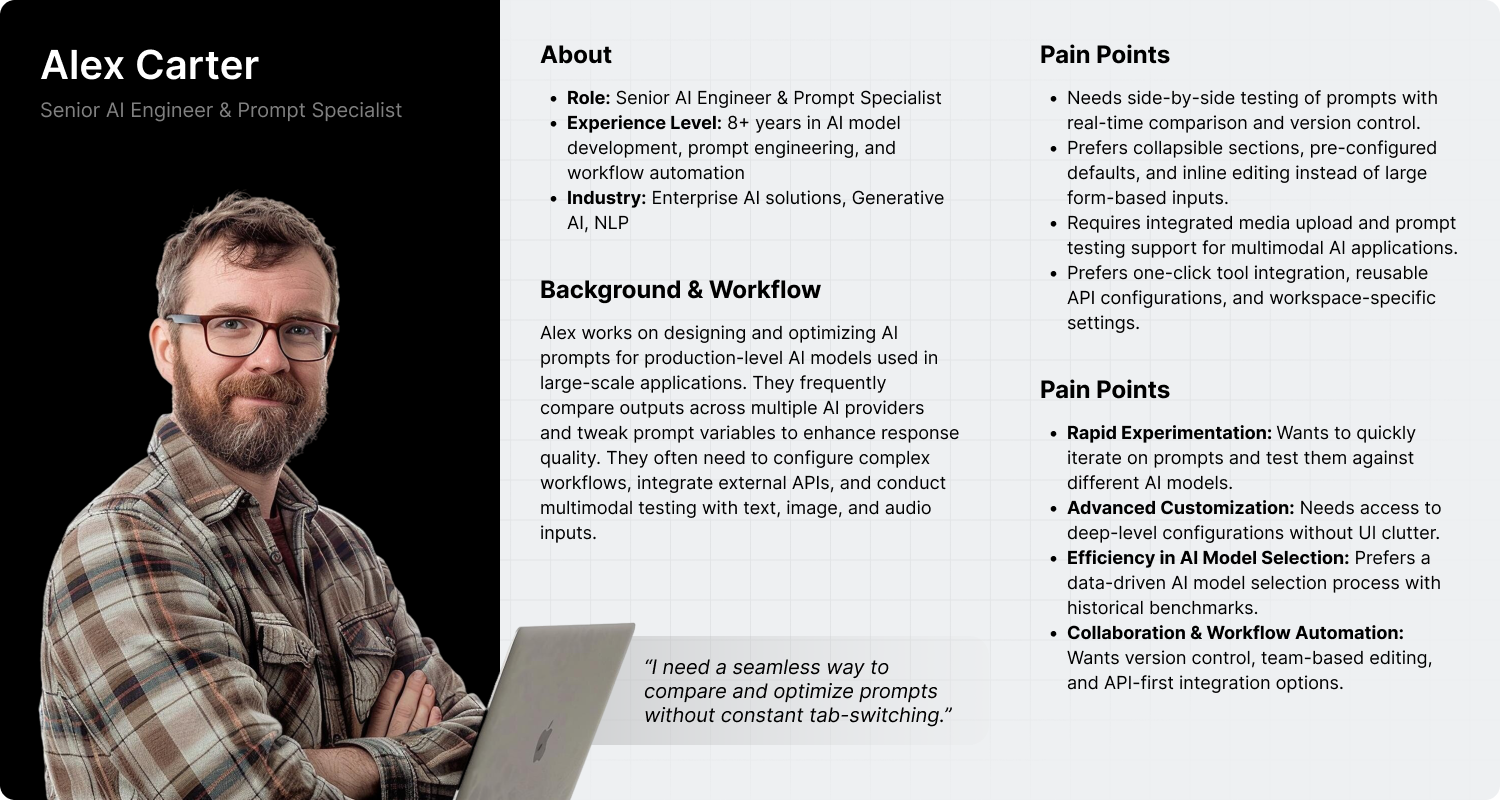

User Personas

Novice Users: Individuals new to prompt engineering who need a simple, guided experience with easy-to-use features and clear guidance.

Advanced Users: Experienced AI engineers and prompt developers who require more flexible, powerful tools and advanced customization options for prompt testing and iteration.

Design Goals

Our vision emerged from watching real users struggle with workflows that should have been effortless.

- Better usability and workflow: Inspired by a frustrated user clicking through multiple screens for a simple task, we aimed to create an interface that feels like a natural extension of thought.

- Side-by-side comparison of prompts: Observing a power user using multiple browser windows inspired us to implement side-by-side prompt comparison.

- Simplifying AI model selection: A user’s confusion with the model dropdown led us to simplify the selection process, making it as easy as choosing a photo filter.

- Supporting media input: A creative director’s struggle with testing image prompts motivated us to add seamless media support to maintain creative flow.

- Reducing complexity in tool integration: We simplified advanced users’ complex workflows, reducing fifteen steps to just three.

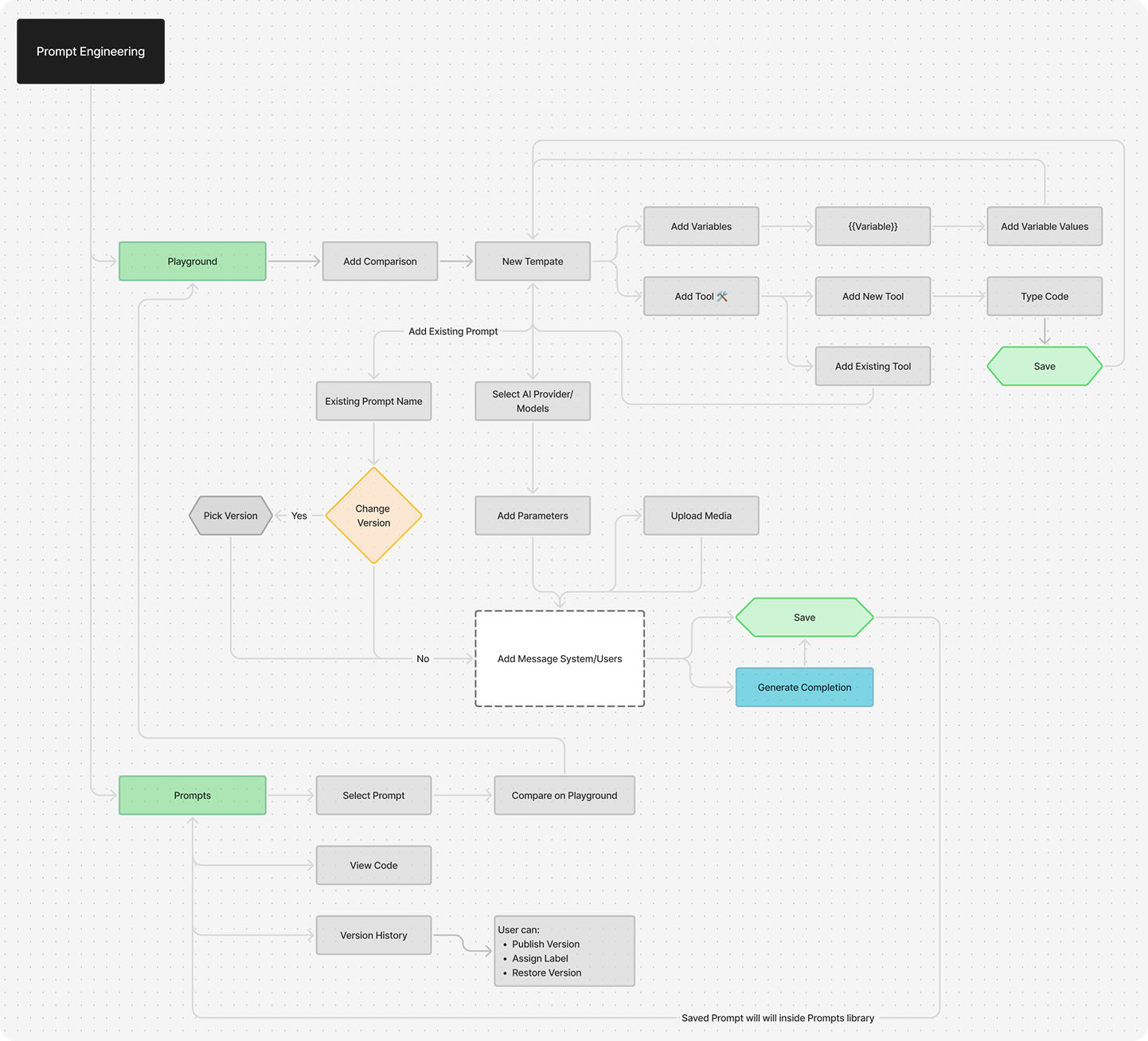

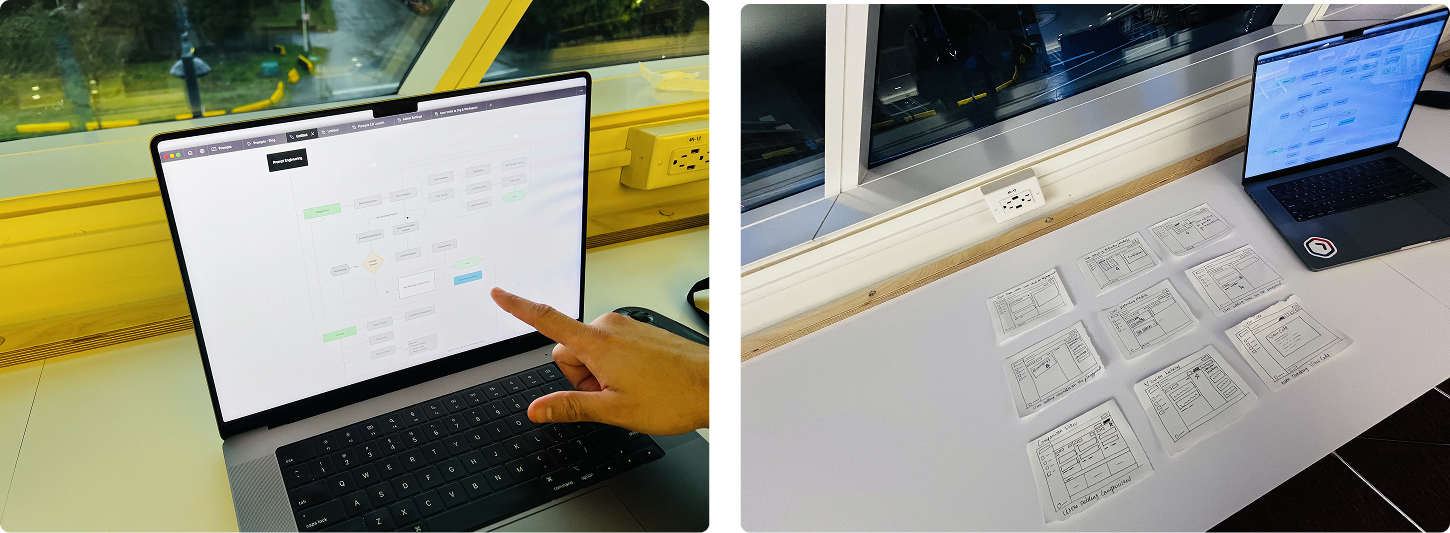

User Flow

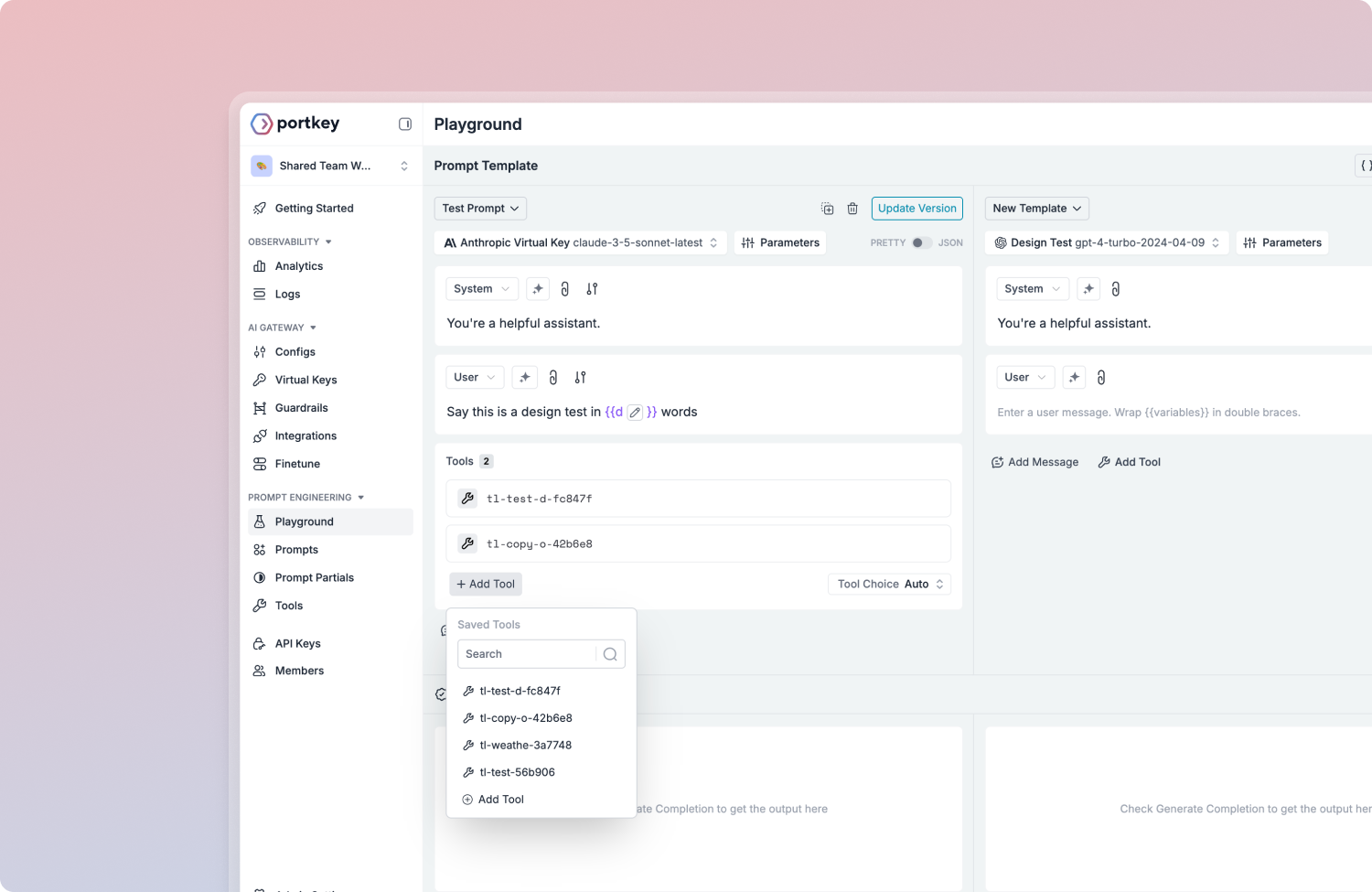

The user flow of the updated Prompt Engineering Studio is designed for simplicity and efficiency. Users can easily select AI models, add prompt variables, and upload media for testing. Tool management is streamlined with a user-friendly selection card, and the "View Code" feature ensures seamless integration. Version history is now easier to navigate, making the overall process smooth and intuitive.

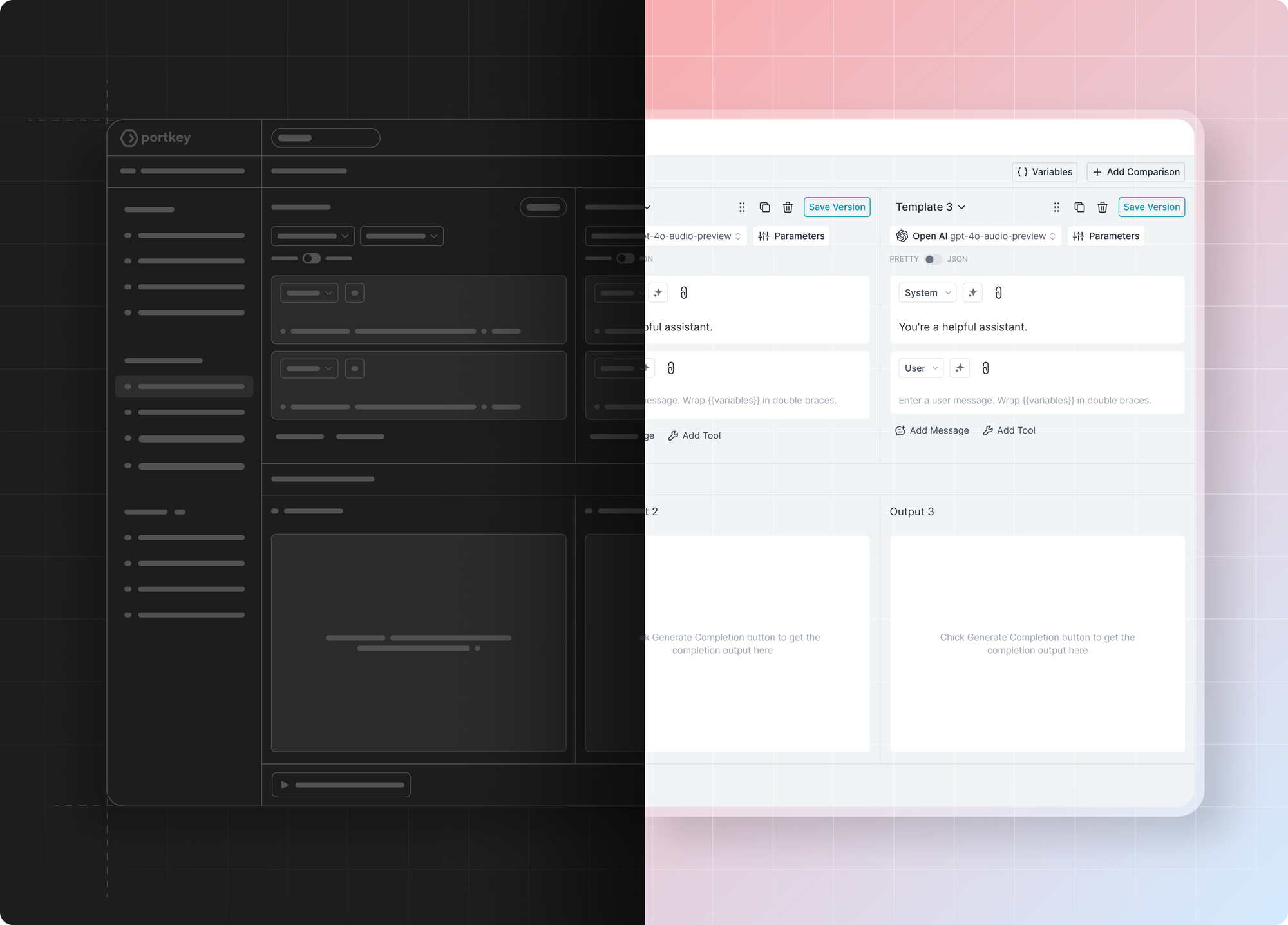

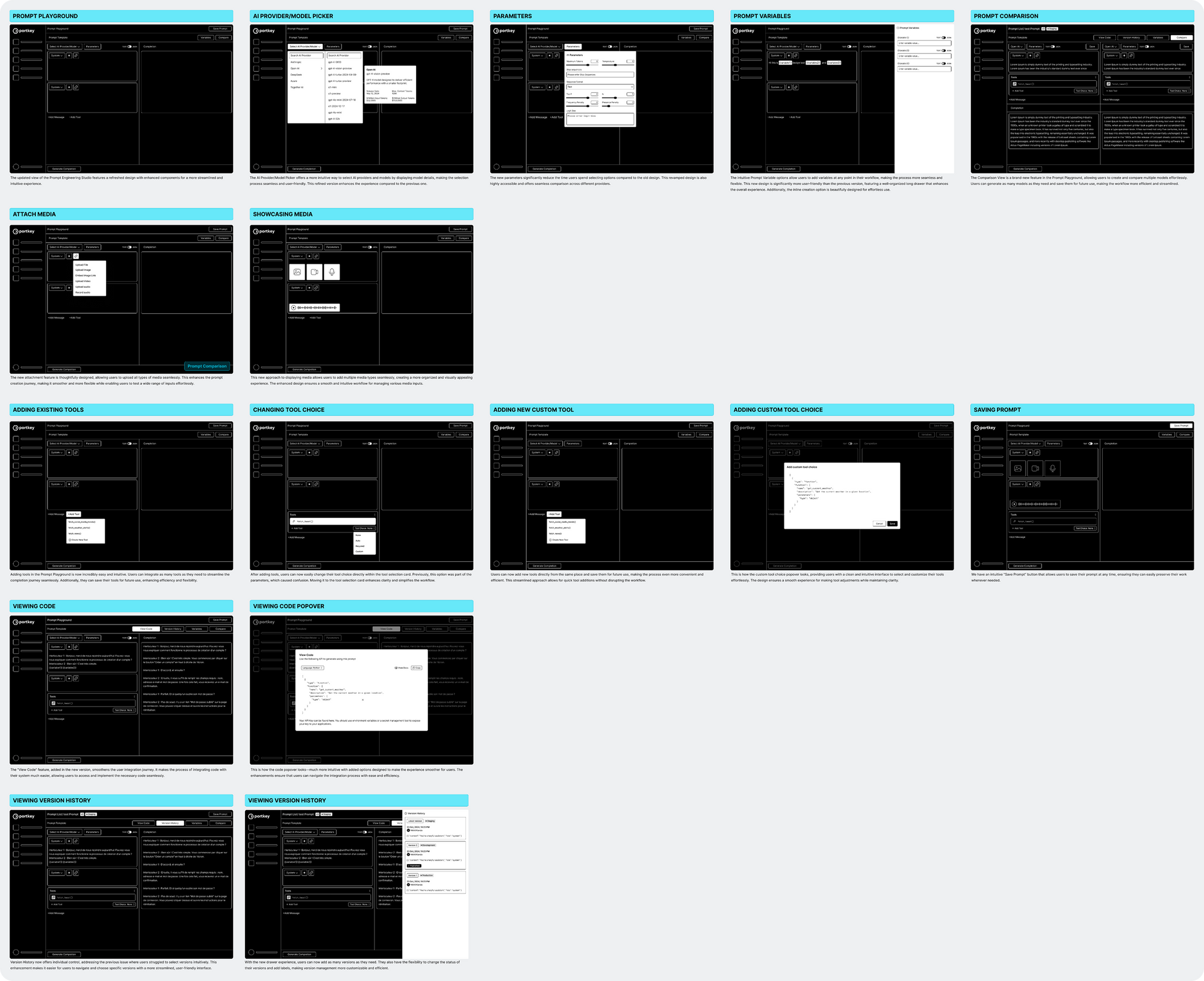

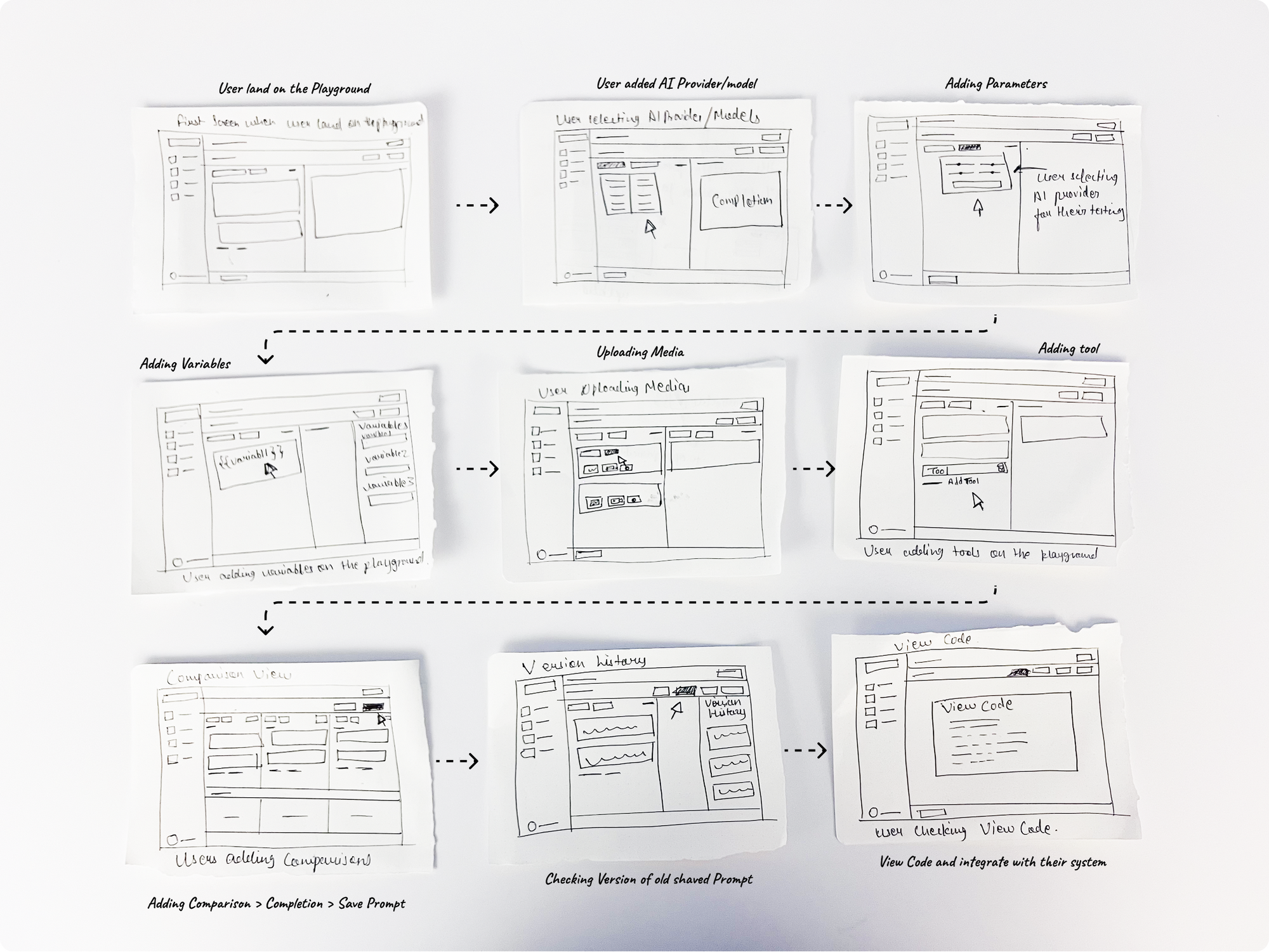

Wireframes

The new Prompt Engineering Studio offers a more intuitive design. The AI Model Picker now shows clear details for easier selection. Parameters are faster to choose, saving time, and Prompt Variables can be added flexibly with an organized drawer and inline creation.

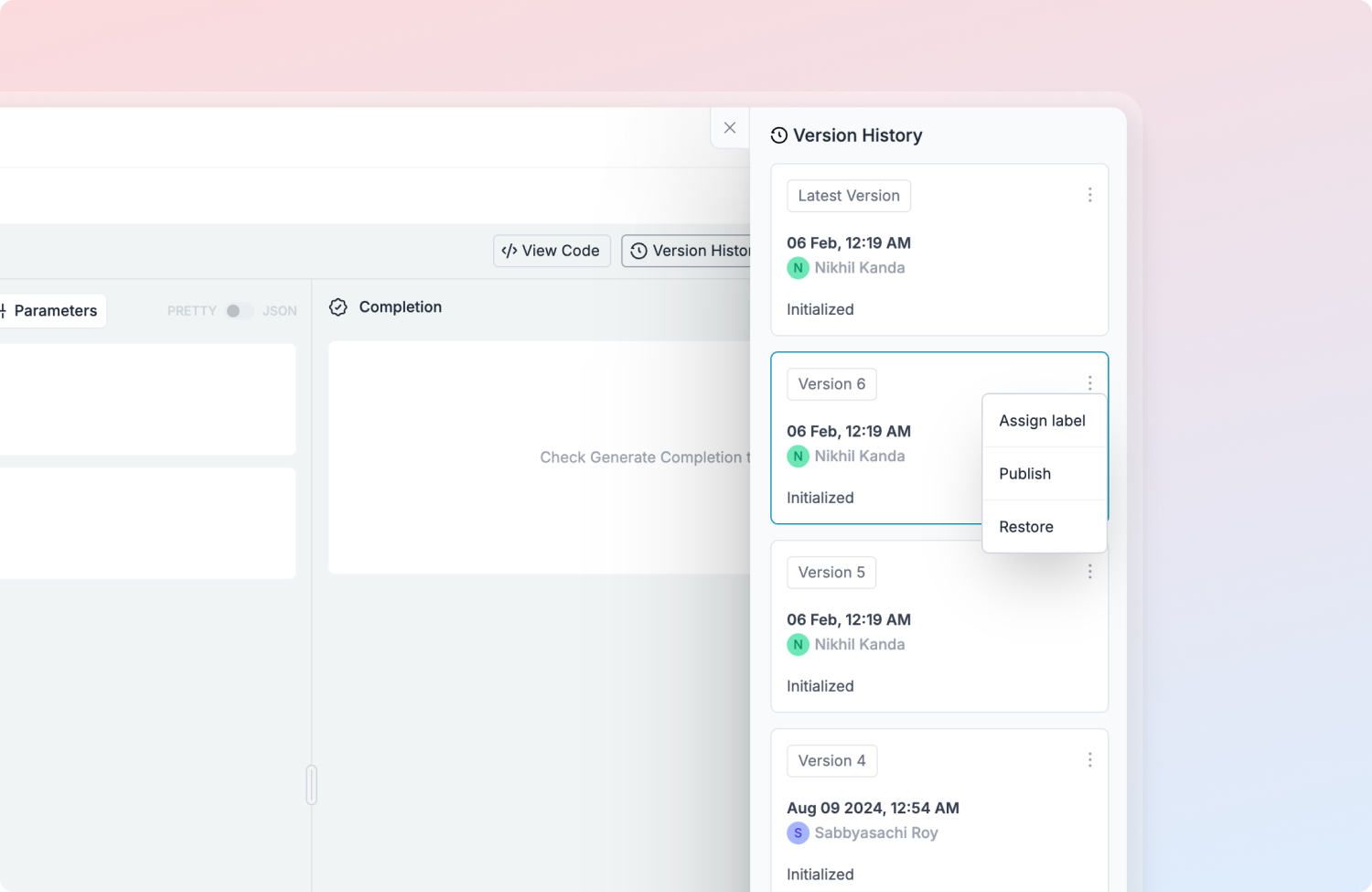

The new Attachment Feature enables seamless uploads of images, videos, and audio. Managing tools is simplified with an improved tool selection card for adding and saving tools. The "View Code" feature streamlines integration, and the updated Version History makes managing versions more intuitive and flexible. Overall, the design enhances efficiency and clarity.

Challenges & Bottlenecks

Our journey through complexity to clarity wasn't without its share of obstacles and revelations.

- Narrowing the scope: We struggled to choose between beginners and power users until a lunchtime napkin sketch led to the idea of a layered interface that grows with users.

- Defining the problem: A chaotic war room of sticky notes eventually revealed clear patterns after nights of prioritizing user feedback.

- Balancing interests: Product meetings became debates between stakeholders pushing for more features and users asking for simplicity, teaching us to compromise without losing our vision.

- Reflection: The biggest insight was realizing that each team member had been solving different "obvious" problems, reshaping our collaboration forever.

In the Develop phase, we streamlined variable management, halved model selection time, and refined the media upload feature, improving the overall user experience.

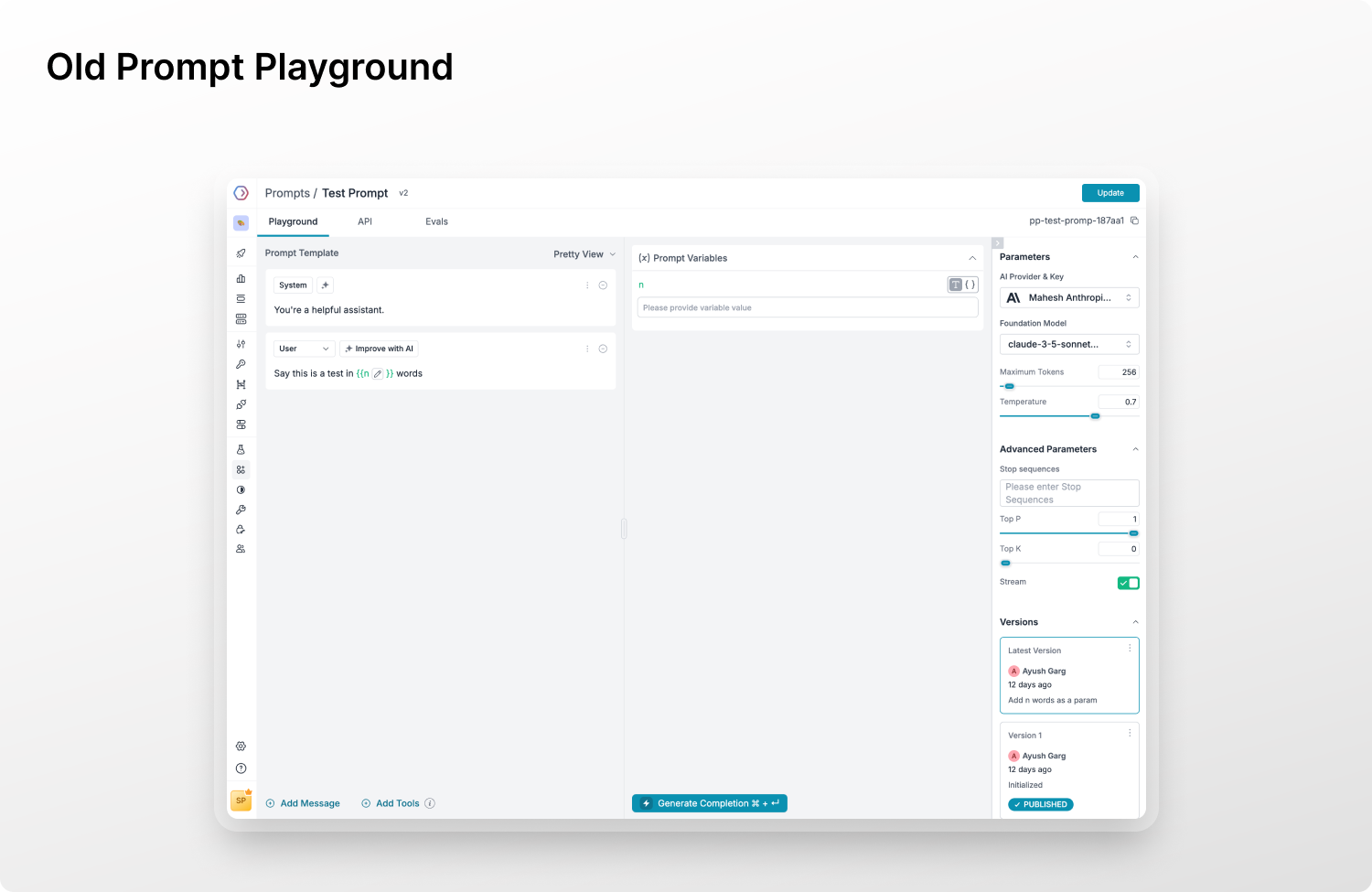

Constraints with Old Prompt Design

The initial prompt design faced constraints like a cluttered interface and difficulty switching between models. These limitations made it clear a redesign was needed to create a more seamless, user-friendly experience, allowing easy model switching without extra guidance.

Prompt Playground: The first version of the Portkey Prompt Playground was unintuitive, lacked multi-model comparison, and had a confusing model selection and version history process. The complex tool-adding flow further slowed users down.

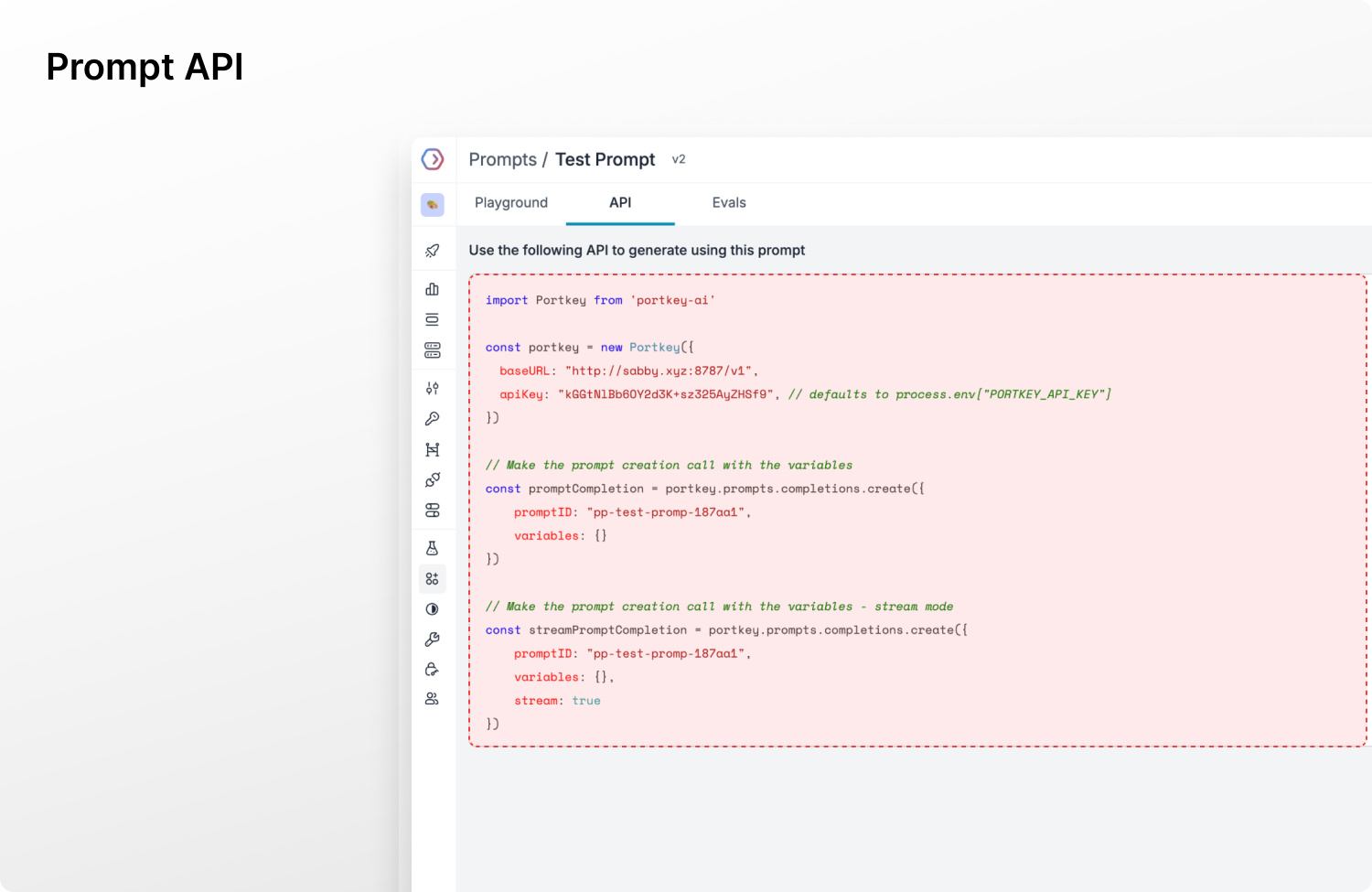

Prompt API: The previous Prompt API tab was hard to find and unclear, making integration confusing for engineers. Users also couldn't tell whether it showed content or just the API key.

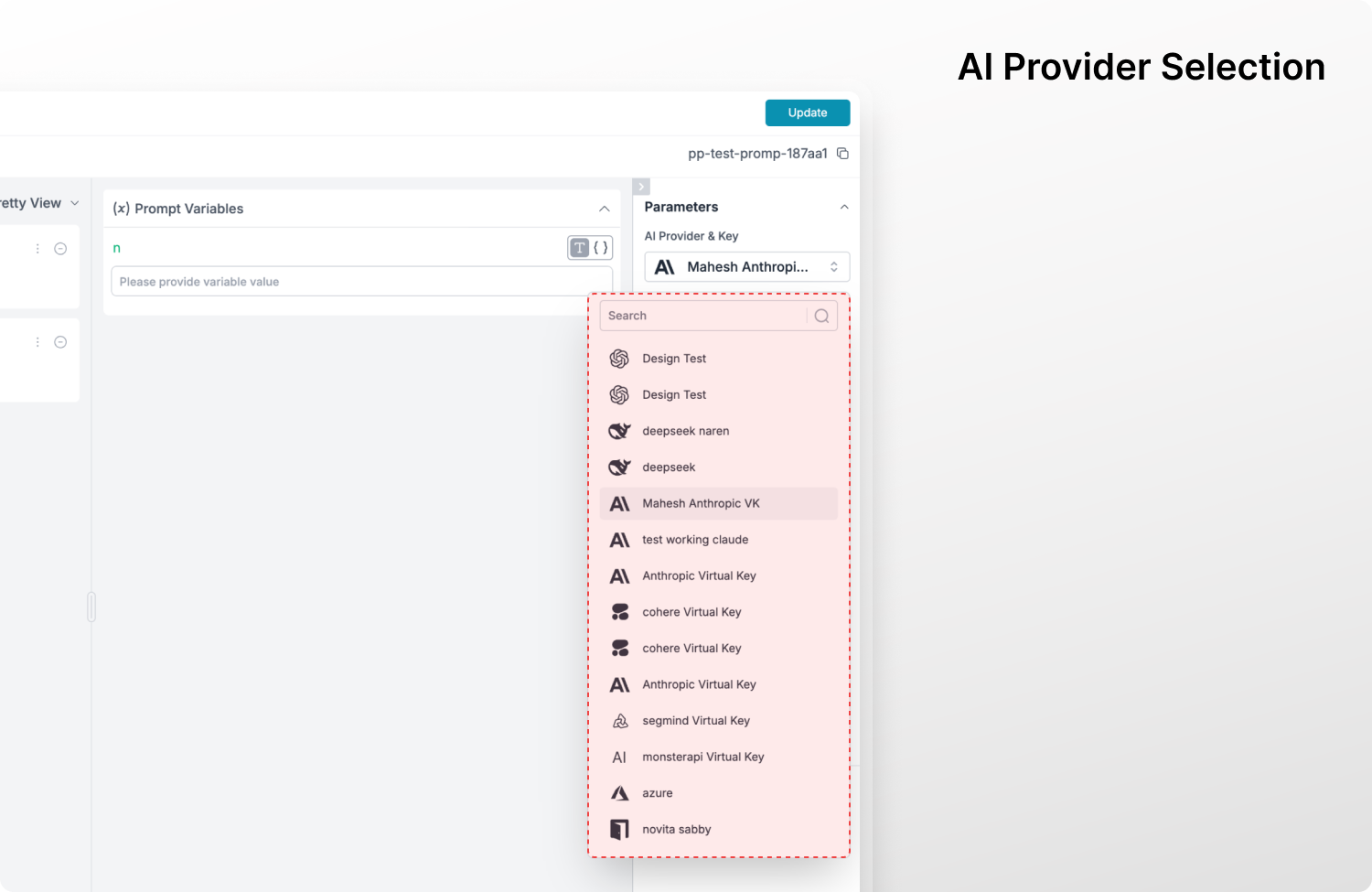

AI Provider Modal: The AI Provider modal in the previous Prompt Playground was not user-friendly, making it hard for users to choose the right provider. The old layout also made it difficult to compare multiple prompts and models simultaneously.

Model Selection: Model selection was not user-friendly, making it hard for engineers to identify the best-fit model and its multimodal capabilities. The layout also lacked clarity for model comparison in prompt engineering.

Variables: The old prompt variable design was cluttered, pushing the completion card below the scroll and making results hard to view. It also wasn't suited to the comparison feature, so it needed a redesign for better scalability and support.

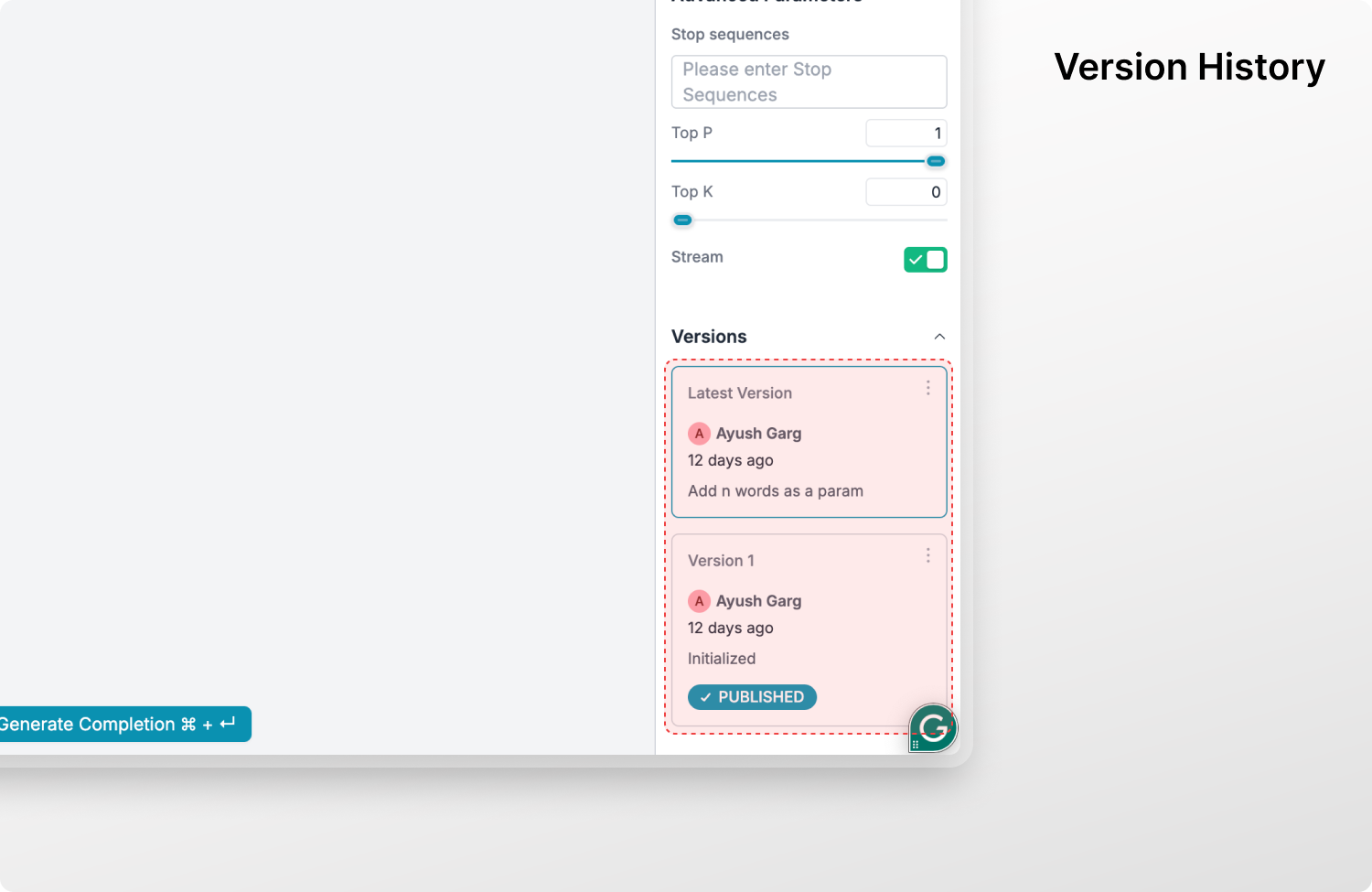

Version History: The old version history was unintuitive, making it difficult to manage versions. The right drawer required excessive scrolling, and the parameters added complexity to prompt engineering.

Tool Integration: Engineers had to switch to the parameter drawer to add tool information such as tool choice, which made the process difficult. Users couldn't add multiple tools or maintain a tool library for easy access during prompt engineering.

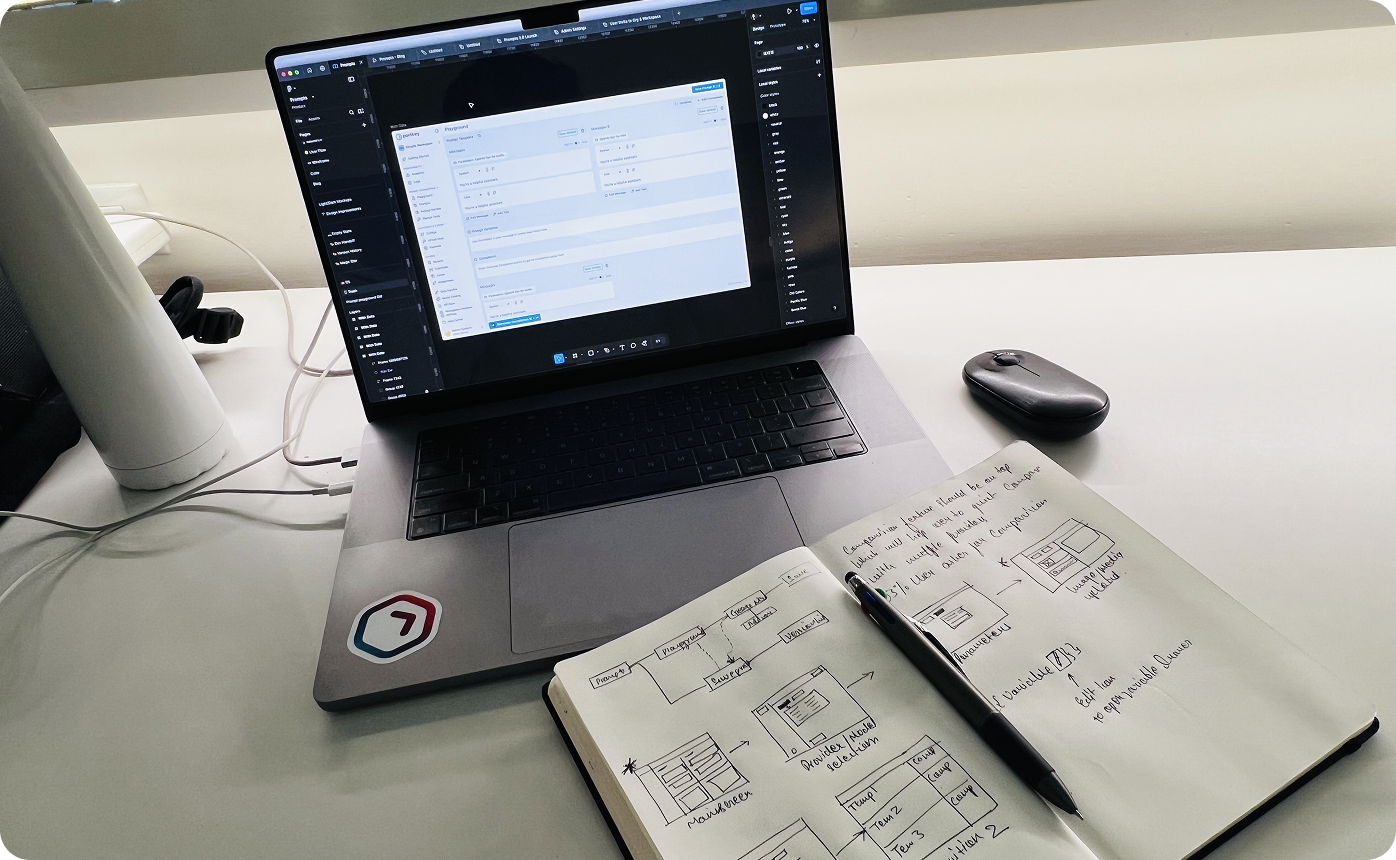

Ideation & Prototyping

We mapped user frustrations with prompt engineering through interviews and studies, identifying issues with comparing outputs, managing versions, and model selection. Pain points were marked on flow diagrams, guiding our design process.

From these challenges, we identified three key features:

- A Comparison View for side-by-side prompt testing.

- An AI Model Selector to simplify decision-making.

- A Version History system for easy tracking.

We refined the user flow for simplicity and ease. Initial wireframes showed that dense control panels overwhelmed users, while minimalist designs hid key controls. Progressive disclosure, showing core tools upfront and expanding advanced settings, streamlined the flow, making it intuitive and functional.

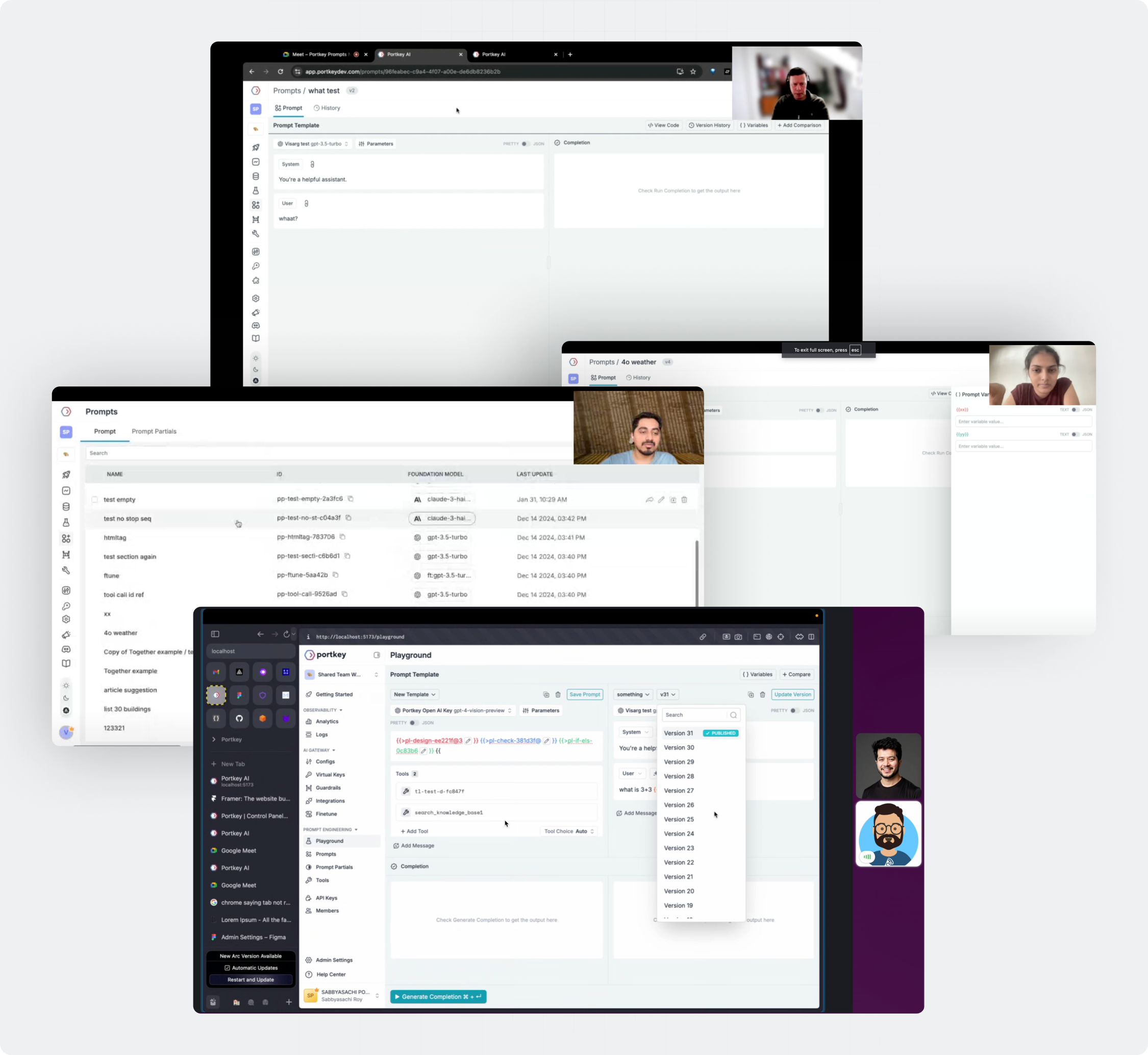

We built interactive prototypes in Figma and tested them with real users. While the version comparison feature was a hit, the AI model selection interface caused hesitation. Refining the hierarchy with tooltips and default recommendations helped. We also shifted from popover-based variable inputs to inline editing for better workflow.

Each iteration, guided by user feedback, led to a system that made prompt engineering more approachable and efficient for all users.

Usability Testing

Throughout user testing, we uncovered small friction points that, when addressed, made a big difference.

- Bringing Templates to the Playground: Users wanted easy access to reuse prompt templates, so we added a seamless copy-and-apply feature.

- Adding Virtual Keys on the Fly: Instead of navigating to a separate page, users can now add Virtual Keys directly from the AI provider/model dropdown.

- Swapping System & User Messages: Users desired flexibility in structuring conversations, so we allowed prompt positions to be swapped.

- Decluttering the UI: Redundant input headings under completions were removed for a cleaner, more focused interface.

- Calling Old Prompts with Versions: Users can now effortlessly retrieve past prompts along with their versions.

- Building a Tool Library: We introduced a tool library, enabling users to save and reuse tools across prompts.

- Fixing Image Display: Large images were optimized for better readability based on user feedback.

⭐ Final Design Call

Our journey in designing the prompt engineering experience involved multiple iterations, user feedback loops, and key design refinements. Each challenge helped shape a more intuitive and scalable interface.

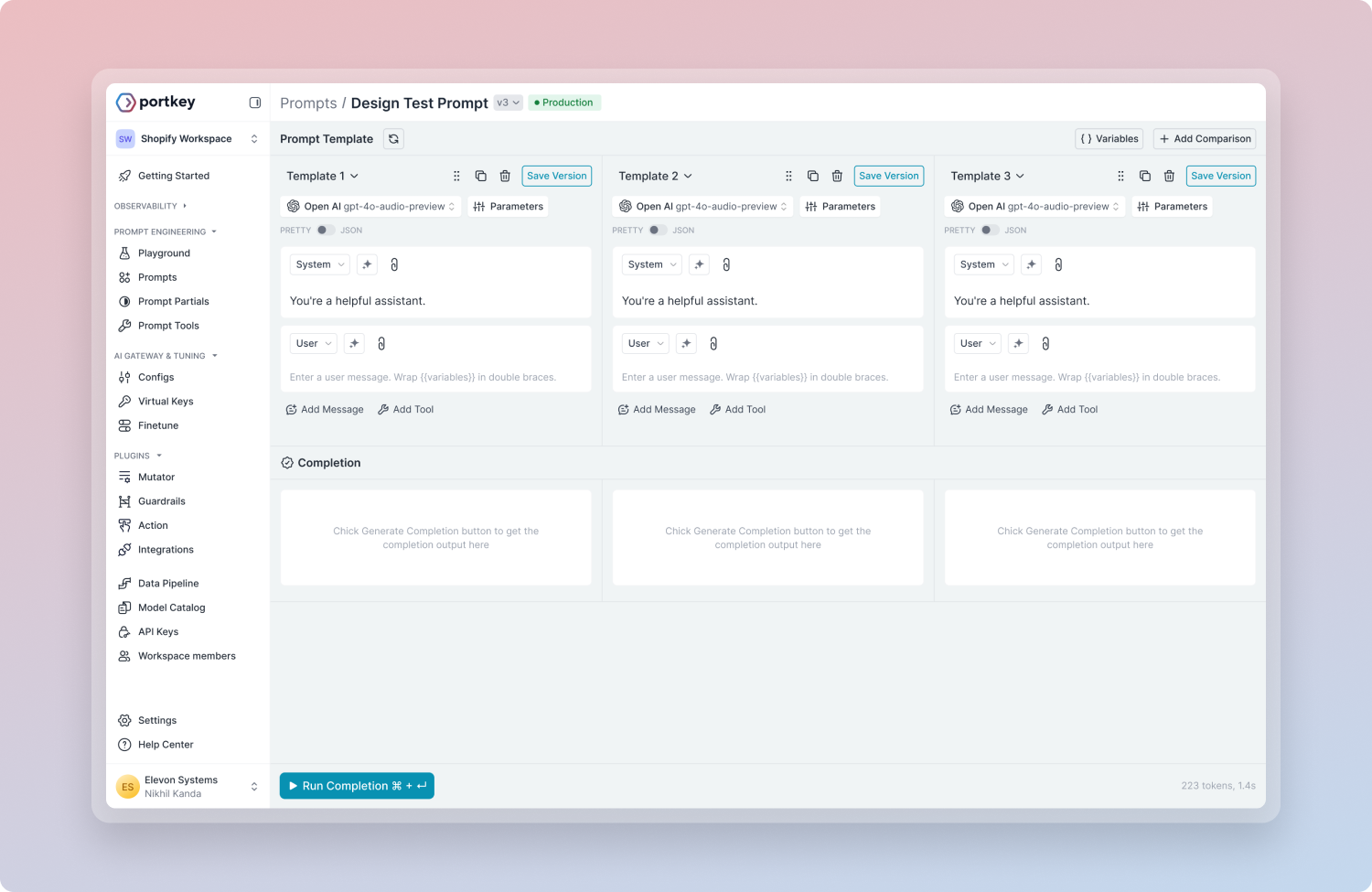

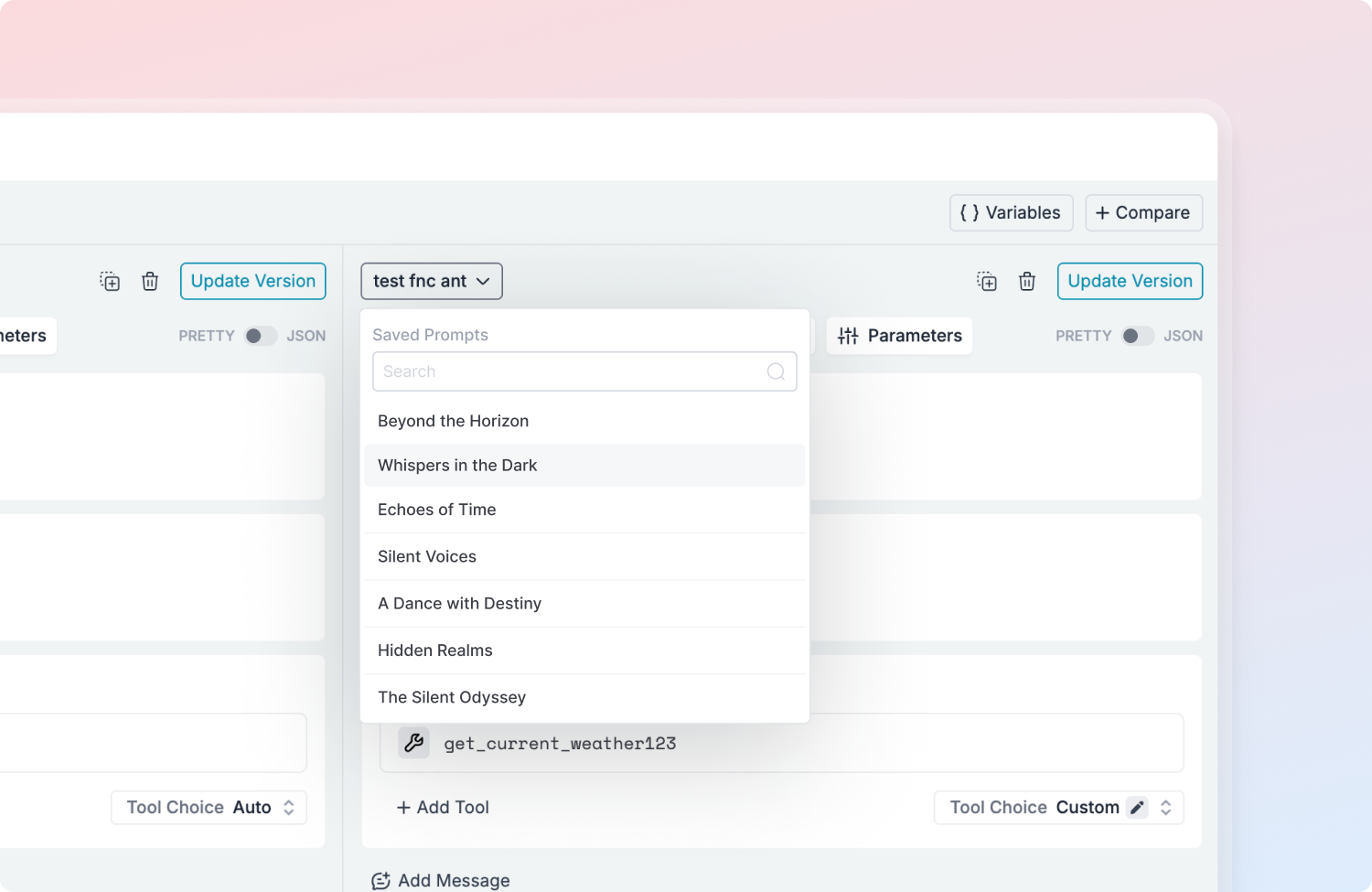

Advanced Comparison: We designed a vertical comparison layout that scales efficiently with multiple prompts, enabling users to easily compare any number of prompts.

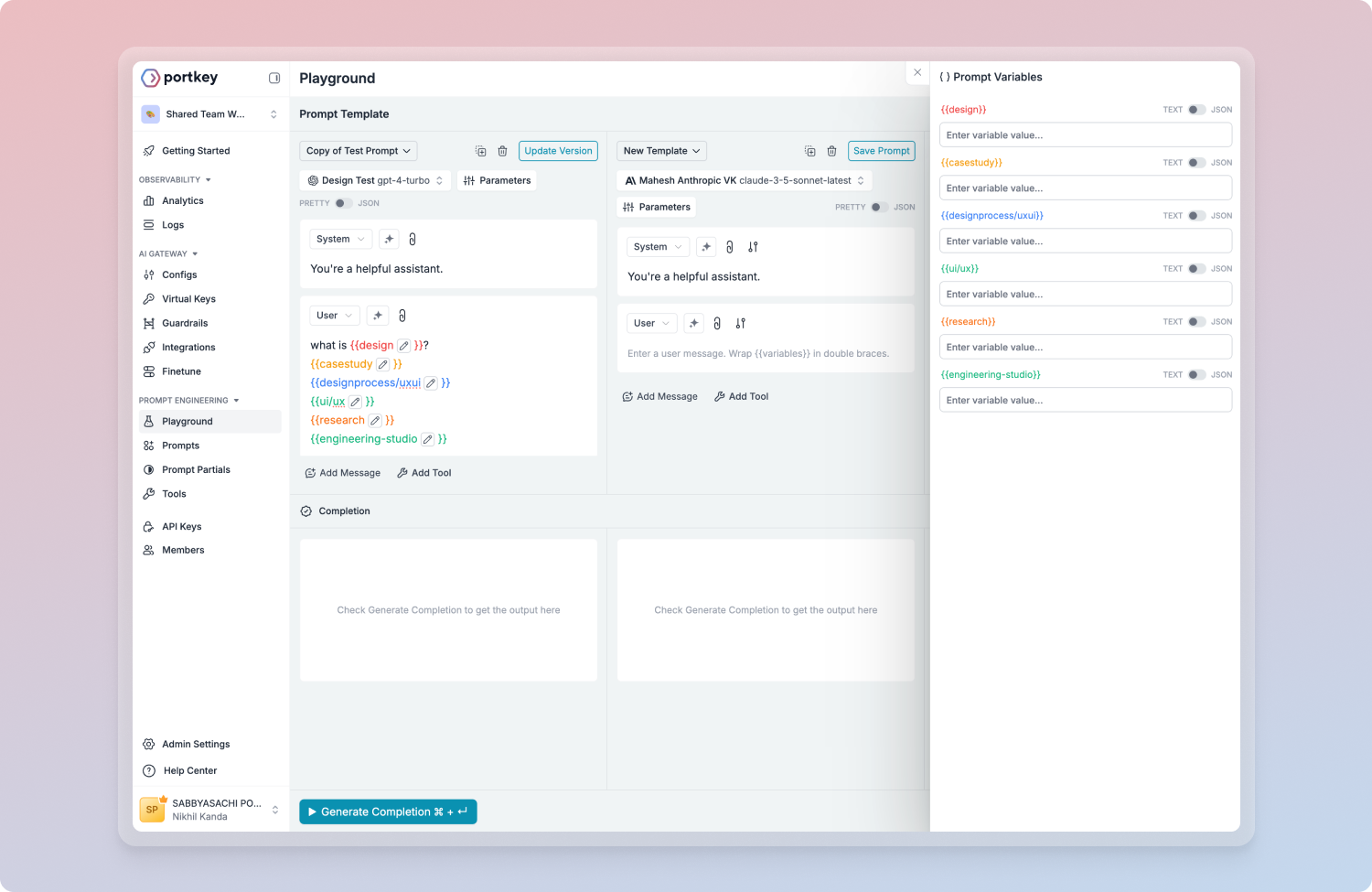

Variable Inputs: We transitioned from a popover to an inline solution with interactive variable inputs, enhancing usability and making dynamic entries simpler while comparing prompts.

Advanced AI Model/Provider Modal: We redesigned the AI model/provider picker for easier comparisons, with individual switches and parameters for each model to support scalability.

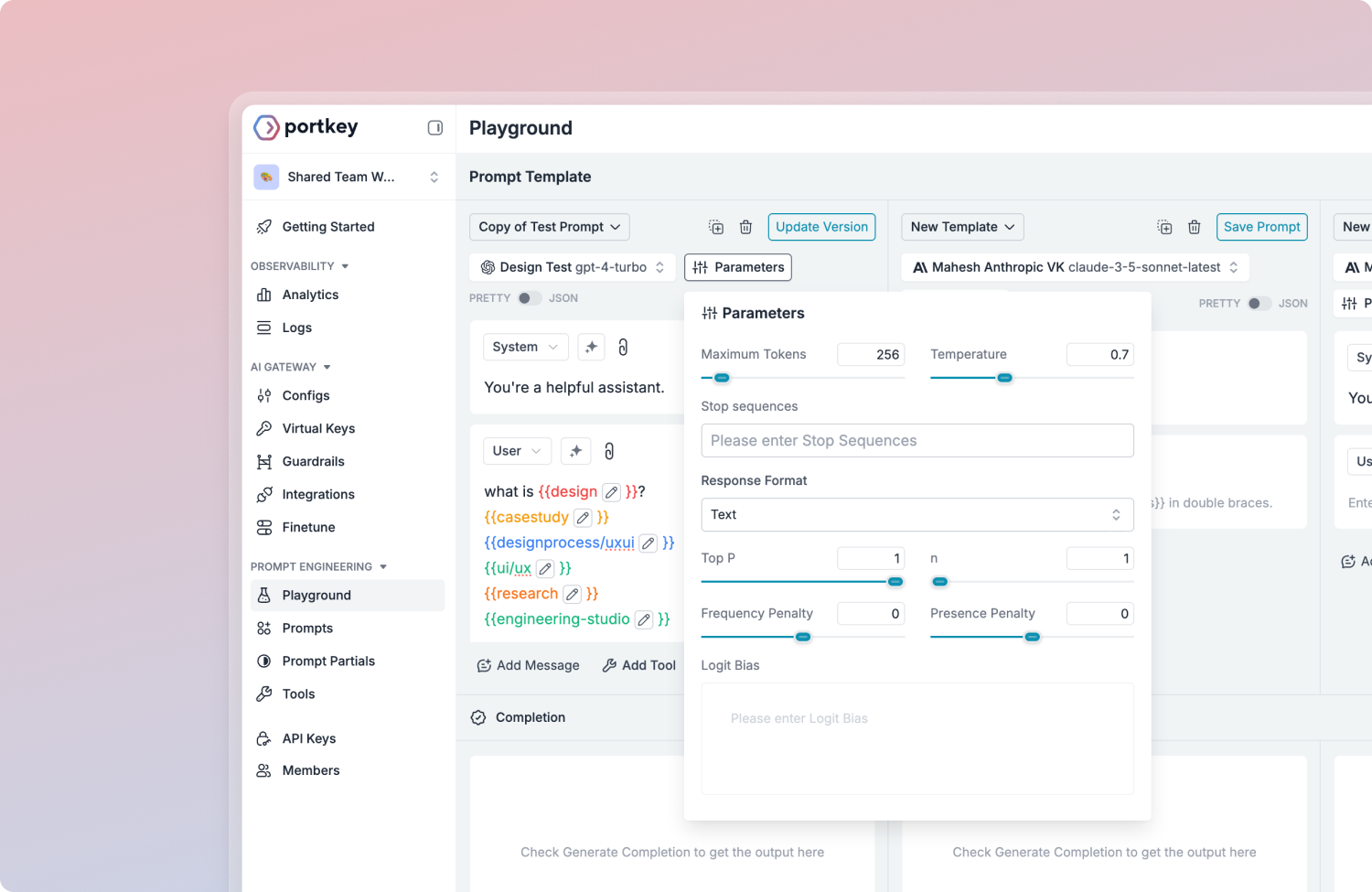

Fresh Parameters Controller: We repositioned Version History and Parameters for a more intuitive workflow, reducing the need for users to shift focus.

Enhancing Tool Selection Flow: The new streamlined flow simplifies tool selection, eliminating the need for multiple steps and improving clarity.

Revamped Version History: A dedicated drawer for Version History improves organization and access to previous versions.

Comparing Saved Prompts: Users can now directly compare old saved prompts in the playground, with an option to load prompts from the prompt library.

The final design was a functional transformation: faster model selection, smarter media support, and an integrated tool panel.

The impact: 70% faster prompt testing, 40% quicker model selection, and a 50% efficiency boost for advanced users.

Final Design

The redesigned Prompt Engineering Studio is now more intuitive, allowing users to test multiple prompts across different AI models simultaneously while easily comparing saved prompts. The interface is cleaner, with collapsible drawers, clearer navigation, and added media support, enabling users to run more diverse and effective tests.

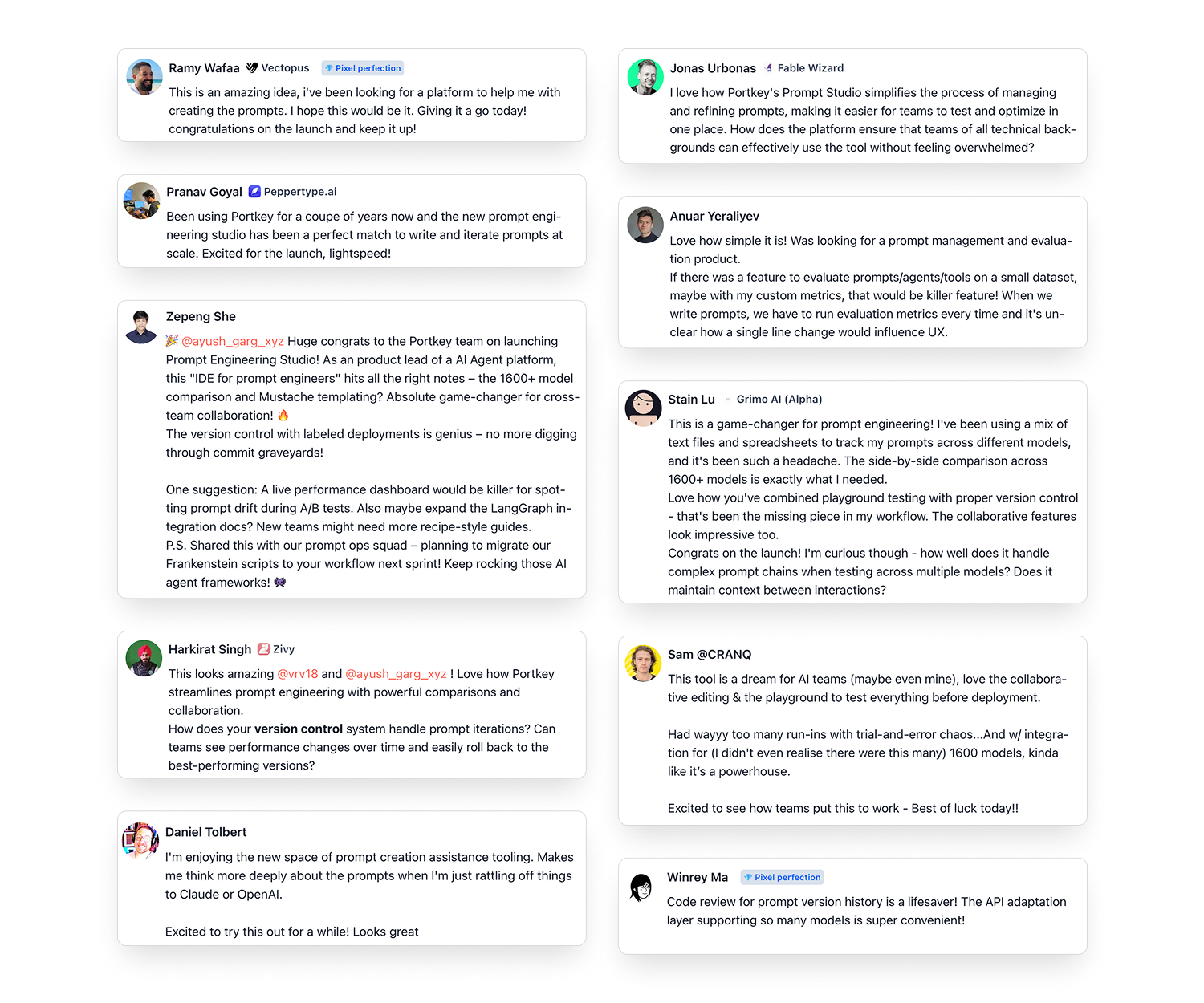

Big Success & Client Satisfaction ❤️

With strong adoption of the new Portkey Prompt Engineering Studio and positive feedback from users, especially our enterprise clients, the Portkey team was thrilled with the overall success of the revamped feature. Although specific quantitative metrics are not disclosed, we did receive general insights from internal teams and stakeholders after the redesign.

- Reduced customer support tickets related to prompt creation and model selection.

- High adoption rates across both new and existing users, with power users particularly embracing the streamlined workflow.

- Increased engagement with the new media upload feature, enhancing the testing process for a wider variety of use cases.

- Strengthened relationships with enterprise clients, who view the redesigned Prompt Engineering Studio as a key enabler of their AI strategy.

🎯 Additionally, the successful redesign and its impact led to continued collaboration between the design and development teams at Portkey, reinforcing the value of our work and establishing a lasting partnership. This was a significant personal win for me, as it reaffirmed the importance of user-centered design in creating meaningful and impactful product experiences.

Business Impact

65% Fewer Support Queries

Reduced support queries related to prompt creation and model selection through improved user engagement.

70% Faster Workflow Execution

Increased prompt creation speed, boosting overall productivity.

45% Higher Retention

Improved active user retention, especially among power users, due to new features and streamlined workflows.

My Design Philosophy

Key Takeaways

This project was one of my most rewarding experiences as a designer. Looking back, I gained valuable insights about myself and my approach to design, and I would do a few things differently if I had the chance to redo it.

- Growth in Communication & Collaboration: This project strengthened my ability to collaborate across roles, bridging design, technical constraints, and business goals while working with product managers, engineers, and stakeholders.

- More Time for User Research: Looking back, I would have allocated more time for deeper user insights. While we involved customer support, additional surveys and affinity mapping could have refined our design further.

- Joy in Cross-Functional Work: I loved collaborating with engineers, especially brainstorming creative solutions within constraints. This experience reinforced the importance of fostering an environment where everyone feels comfortable sharing ideas.

The design facelift was a true collaboration between the development and design teams, ensuring a seamless integration of functionality and user experience. Close communication between both teams allowed us to quickly address challenges and iterate on the design, resulting in a more efficient and intuitive product. This strong partnership not only led to the successful launch but also reinforced the value of cross-team collaboration in delivering impactful solutions.

A huge thank you to Rohit Agarwal and Ayush Garg for the vision and leadership, Sabbyasachi Roy for the technical expertise, and the entire Portkey team for their dedication and hard work in bringing this feature to life. Incredible teamwork all around! 🙌🚀

For next steps, I would run A/B tests on the features that received mixed reviews during user testing and iterate on those designs to make them more intuitive.