Helping insurance companies take GenAI initiatives from pilot to production

The fastest way to give every team safe, secure access to LLMs, without losing observability, governance, or control.

With new improvements in AI every day, automation has become easier, at least on an individual level. Teams are quickly prototyping use cases with LLMs: automating claims summaries, extracting information from documents, or assisting member support.

And as expected, these experiments multiply. What starts with one workflow turns into five teams trying to build their own GenAI solutions, each with their own prompts, providers, and keys.

Taking these pilots to production is where teams struggle:

- It’s hard to get a central view of what teams are building or which models they’re using.

- Every team takes its own approach, with different prompts, models, and deployment methods, so effort is duplicated across teams.

- There is no easy way to monitor performance, quality, or cost across use cases, which makes failures and inefficiencies nearly impossible to spot.

- Security and compliance requirements are hard to enforce when teams move fast.

- Hallucinations, data leakage, and prompt drift go unnoticed until it’s too late.

Introducing Portkey

Portkey equips AI teams with everything they need to go to production: Gateway, Observability, Guardrails, Governance, and Prompt Management, all in one platform.

With Portkey’s AI Gateway, teams connect, manage, and secure AI interactions across 1,600+ LLMs, with centralized control, real-time monitoring, and enterprise-grade governance.

Having worked with some of the top insurance companies, including Premera, Barkibu, Bold Penguin, and Clearcover, we’ve seen firsthand what platform teams need to succeed.

What you get with Portkey

Forward-compatible AI Gateway

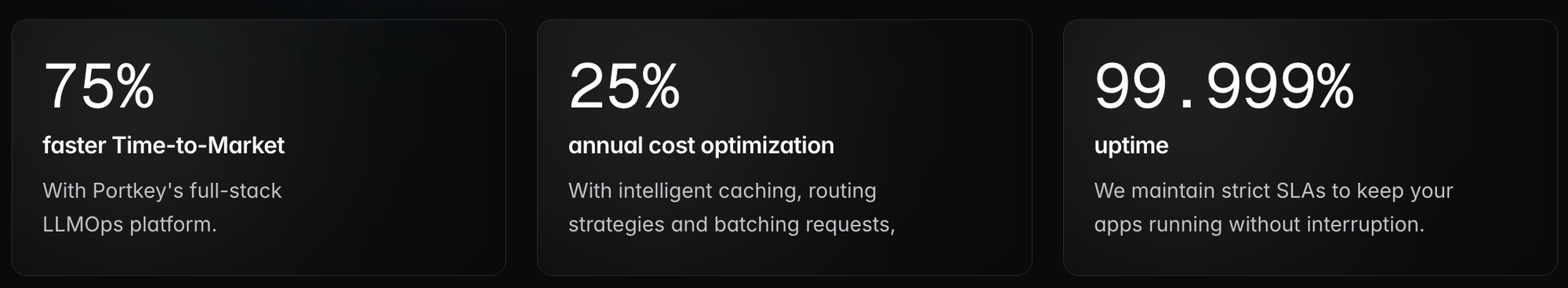

Portkey integrates with 1,600+ models across all major LLM providers and modalities, including custom or private models. With new models and provider updates arriving every day, Portkey stays forward-compatible, so your infrastructure doesn’t have to be rebuilt every time a new model launches or a provider adds new regions, pricing, or features.

Centralized governance

Portkey becomes the single entry point for all LLM calls across teams and use cases. It provides a hierarchical system for managing teams, resources, and access within your AI development environment.

Monitor and control usage and spending across all teams from a single, unified view.

Built-in reliability

Portkey keeps your LLM workloads resilient, with support for batching, model failover, retries, fallback, smart routing, and multi-region access. If a model or provider goes down or a rate limit is hit, requests can be retried or rerouted to another model or provider instead of failing.
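To make the retry-then-fallback idea concrete, here is a minimal sketch of the general pattern in plain Python. It is not Portkey's configuration format or SDK. `ProviderError`, the provider functions, and the retry and backoff parameters are hypothetical stand-ins: each provider is tried in order, transient failures are retried with exponential backoff, and the request only fails if every provider is exhausted.

```python
import time
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error a provider client raises on an outage or rate limit."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.5,
) -> Tuple[str, str]:
    """Try each provider in order, retrying transient failures with exponential backoff.

    Returns (provider_name, completion). Raises RuntimeError if every provider fails.
    """
    errors = []
    for name, call in providers:
        for attempt in range(retries_per_provider + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                # Wait before retrying the same provider: 0.5s, 1s, 2s, ...
                if attempt < retries_per_provider:
                    time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Usage: a primary provider that is always rate-limited, and a healthy backup.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("429 rate limit")


def backup(prompt: str) -> str:
    return f"summary of: {prompt}"


provider, text = complete_with_fallback(
    "claim #123 notes",
    [("primary", flaky_primary), ("backup", backup)],
    backoff_seconds=0.0,
)
print(provider, "->", text)  # backup -> summary of: claim #123 notes
```

A gateway applies this kind of logic centrally, so individual teams don't each reimplement it in their own services.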

Seamless integration with your existing stack

Whether you’re building on GCP, Azure, or AWS, Portkey fits directly into your cloud and your network. No rewrites, no compromises.

Guardrails against hallucinations and PII leakage

Detect, redact, and validate responses in real time. Portkey’s built-in guardrails help catch hallucinated answers, protect sensitive data, and enforce output formats, all before a response reaches your app.
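As an illustration of what a redaction guardrail does, the sketch below replaces a few PII patterns with typed placeholders and reports which types it found. It uses simple regular expressions and is not how Portkey's guardrails are implemented. The `PII_PATTERNS` table and its three patterns are hypothetical and deliberately narrow, so real PII detection would need much broader coverage.

```python
import re
from typing import List, Tuple

# Hypothetical, deliberately simple patterns; production PII detection needs far more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text: str) -> Tuple[str, List[str]]:
    """Replace each match with a [TYPE] placeholder and list the PII types found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


redacted, found = redact_pii(
    "Member jane.doe@example.com, SSN 123-45-6789, called from 555-123-4567."
)
print(redacted)  # Member [EMAIL], SSN [SSN], called from [PHONE].
print(found)     # ['EMAIL', 'SSN', 'PHONE']
```

Running a check like this at the gateway means it applies to every request and response, not just to the apps whose teams remembered to add it.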

Full observability for every request

Track usage, cost, latency, and errors at the request level. Portkey makes every LLM call visible and traceable.
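Request-level tracking amounts to recording a structured log entry for every LLM call and aggregating those entries afterwards. The sketch below shows that idea with a hypothetical `UsageTracker` wrapper that records team, model, tokens, latency, cost, and errors, then rolls cost up per team. The model names, `PRICE_PER_1K_TOKENS` values, and the `(text, tokens)` return shape of the wrapped function are all assumptions for illustration. This is not Portkey's logging API.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical per-1K-token prices in USD; real prices vary by provider and model.
PRICE_PER_1K_TOKENS = {"model-a": 0.50, "model-b": 2.00}


@dataclass
class RequestLog:
    team: str
    model: str
    tokens: int
    latency_ms: float
    cost_usd: float
    error: Optional[str] = None


@dataclass
class UsageTracker:
    logs: List[RequestLog] = field(default_factory=list)

    def call(self, team: str, model: str,
             fn: Callable[[str], Tuple[str, int]], prompt: str) -> str:
        """Run fn(prompt) -> (text, tokens) and log one entry, even if it raises."""
        start = time.perf_counter()
        tokens, error = 0, None
        try:
            text, tokens = fn(prompt)
            return text
        except Exception as exc:
            error = repr(exc)
            raise
        finally:
            latency_ms = (time.perf_counter() - start) * 1000
            cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
            self.logs.append(RequestLog(team, model, tokens, latency_ms, cost, error))

    def cost_by_team(self) -> Dict[str, float]:
        """Sum logged cost per team."""
        totals: Dict[str, float] = defaultdict(float)
        for log in self.logs:
            totals[log.team] += log.cost_usd
        return dict(totals)


tracker = UsageTracker()
tracker.call("claims", "model-a", lambda p: (f"summary: {p}", 1200), "claim notes")
tracker.call("support", "model-b", lambda p: ("reply", 500), "member question")
print(tracker.cost_by_team())  # {'claims': 0.6, 'support': 1.0}
```

The `finally` block ensures that failed requests are logged as well. Those failures are exactly the ones that go unnoticed when each team logs (or doesn't log) on its own.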

Enterprise-grade security

Our platform is compliant with leading security standards, including SOC 2, ISO 27001, GDPR, and HIPAA. Role-based access control, API key rotation, SSO, and live-streamed audit logs give you what you need to stay ready for internal audits.

Why Portkey is the right AI gateway for you

I tested everything, but the only one I continue using is Portkey. It was the simplest one. I used it just as a proxy at first, then for prompt versioning and threshold-based tracing. Now we can see all the LLM calls tied to a ticket.

Pablo Pazos, CEO, Barkibu

With 30 million policies a month, managing over 25 GenAI use cases became a pain. Portkey helped with prompt management, tracking costs per use case, and ensuring our keys were used correctly. It gave us the visibility we needed into our AI operations.

Prateek Jogani, CTO, Qoala