Bring Your Agents to Production with Portkey

Portkey now natively integrates with LangChain, CrewAI, AutoGen, and other major agent frameworks, making your agent workflows production-ready.

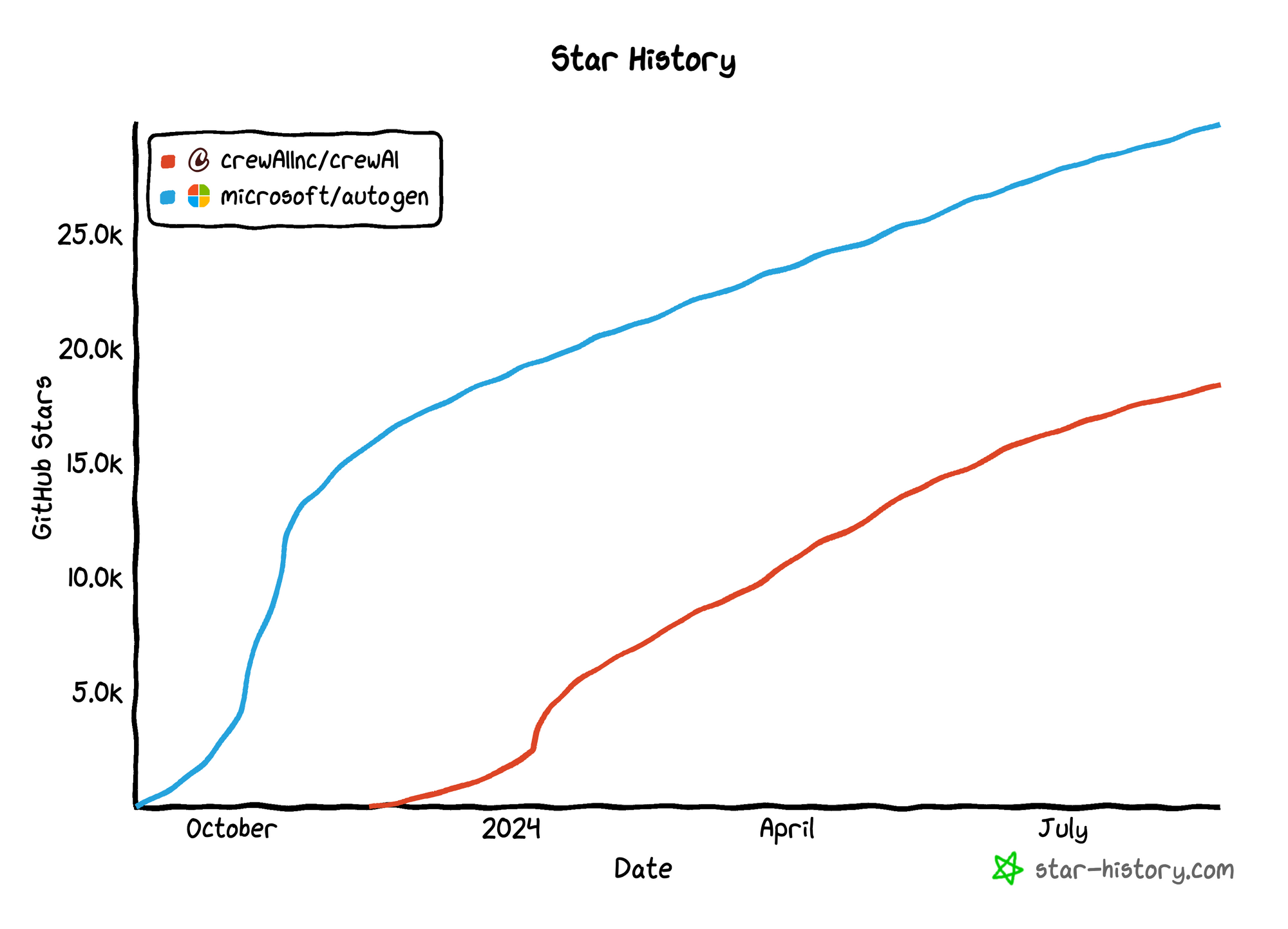

AI agents are here, and the number of companies building and using them is growing rapidly. Just take a look at the GitHub star history for CrewAI and AutoGen:

Andrew Ng captured this rise perfectly:

"AI agent workflows will drive massive progress in AI this year — perhaps even more than the next generation of foundation models. This is an important trend, and I urge everyone who works in AI to pay attention to it."

While these frameworks and the underlying LLMs keep getting better, there's still a last-mile problem with taking agents to prod:

AI Agents Are Fragile

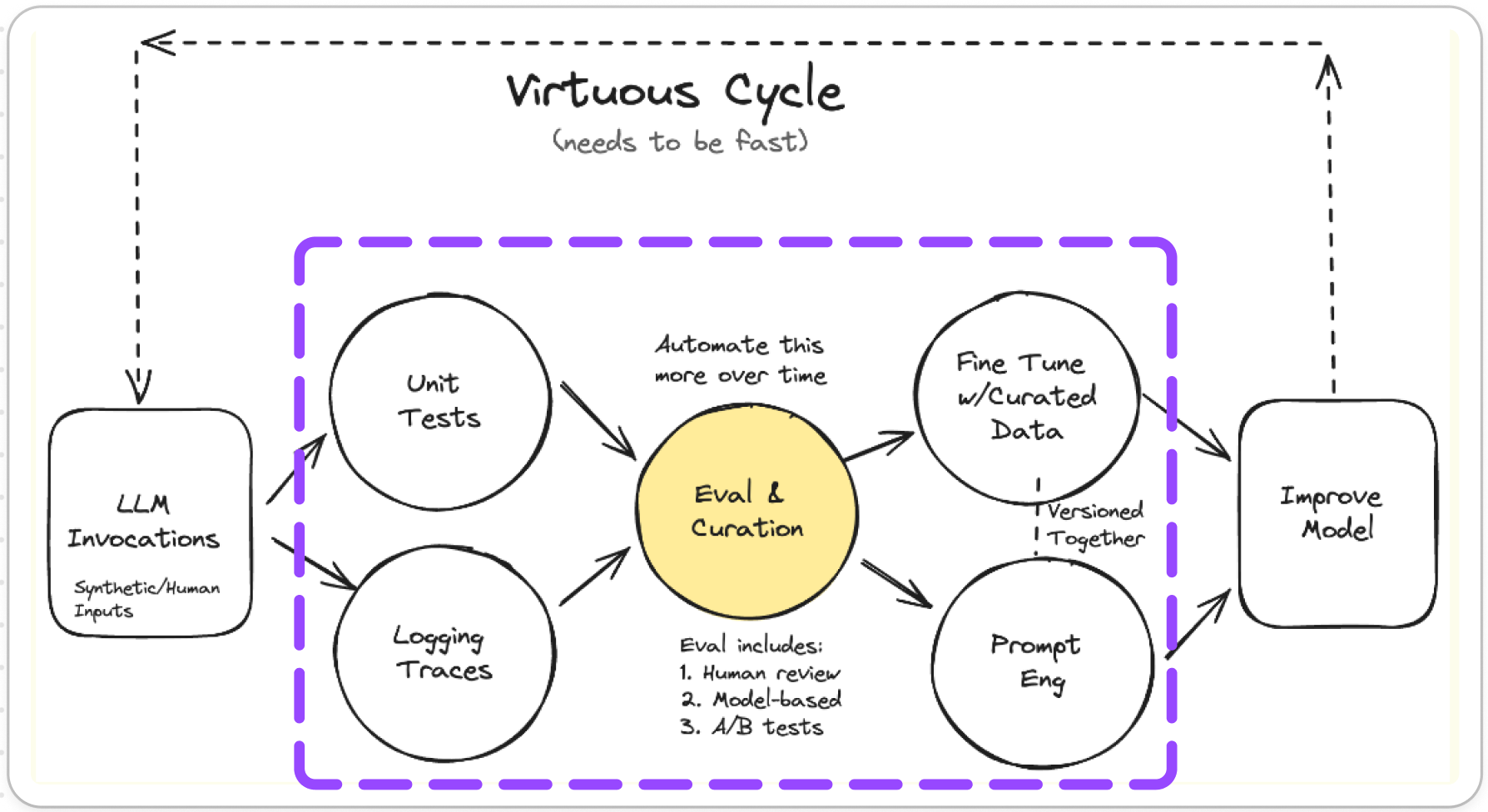

In his article Your AI Product Needs Evals, Hamel Husain highlights a key issue: rapid iteration is the most critical factor in moving your agent from PoC to production.

Iterating Quickly == Success

According to Hamel, the success of an AI agent hinges on how quickly developers can:

- Evaluate quality: evals and curation

- Debug issues: logging and traces, unit tests

- Change the system's behavior: prompt engineering

This is the virtuous cycle of AI development.

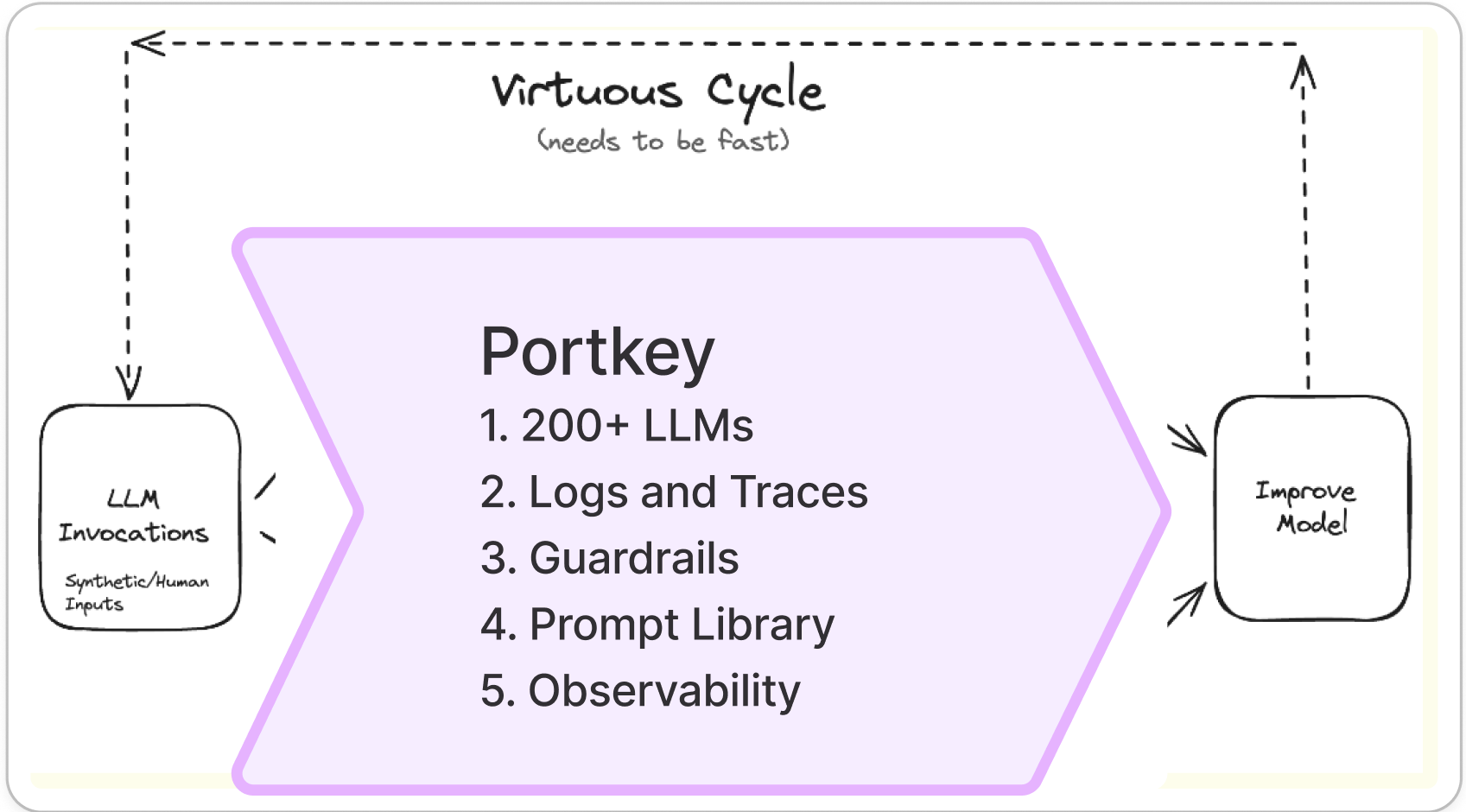

At Portkey, we've built tools to accelerate this virtuous cycle. Portkey's features tackle each part of Hamel's cycle, turning the slow, manual process of refining agents into a fast, automated loop.

We're expanding our mission to give you the tools you need to take your AI agent from proof of concept (PoC) to production. We've got you covered.

How Portkey Accelerates Your Agents to Production

1. Use Multiple LLMs

Don't limit your agent to a single model. The AI landscape is evolving rapidly, and new models emerge constantly. Your agent needs flexibility to stay competitive. Best practices include:

- Integrate multiple LLM providers

- Regularly evaluate new models

- Match tasks to the most suitable LLM

Portkey lets you seamlessly connect to 200+ LLMs. Simply change the provider and API key in the ChatOpenAI object:

    from langchain_openai import ChatOpenAI
    from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

    llm = ChatOpenAI(
        api_key="api-key",
        base_url=PORTKEY_GATEWAY_URL,
        default_headers=createHeaders(
            provider="azure-openai",  # Switch models seamlessly across 200+ LLMs
            api_key="PORTKEY_API_KEY",
            virtual_key="AZURE_VIRTUAL_KEY",
        ),
    )
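
To act on "match tasks to the most suitable LLM", one option is a small routing table that picks the provider settings per task before they are handed to createHeaders. This is a minimal sketch under stated assumptions: the task names, the virtual key names, and the helper header_kwargs_for are hypothetical and exist only for illustration.

```python
# Hypothetical routing table: task names and virtual keys are illustrative,
# not values that Portkey defines.
ROUTES = {
    "summarize": {"provider": "openai", "virtual_key": "OPENAI_VIRTUAL_KEY"},
    "long_context": {"provider": "anthropic", "virtual_key": "ANTHROPIC_VIRTUAL_KEY"},
}
DEFAULT_ROUTE = {"provider": "azure-openai", "virtual_key": "AZURE_VIRTUAL_KEY"}


def header_kwargs_for(task: str, portkey_api_key: str = "PORTKEY_API_KEY") -> dict:
    """Return keyword arguments for createHeaders() for the given task type."""
    # Unknown task types fall back to the default route.
    route = ROUTES.get(task, DEFAULT_ROUTE)
    return {"api_key": portkey_api_key, **route}
```

With this in place, a call such as createHeaders(**header_kwargs_for("summarize")) would route summarization through one provider while every other task uses the default.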

2. Debug with Precision

AI agents are complex. Without proper visibility, issues remain hidden. You need to solve edge cases to get your Agent to prod.

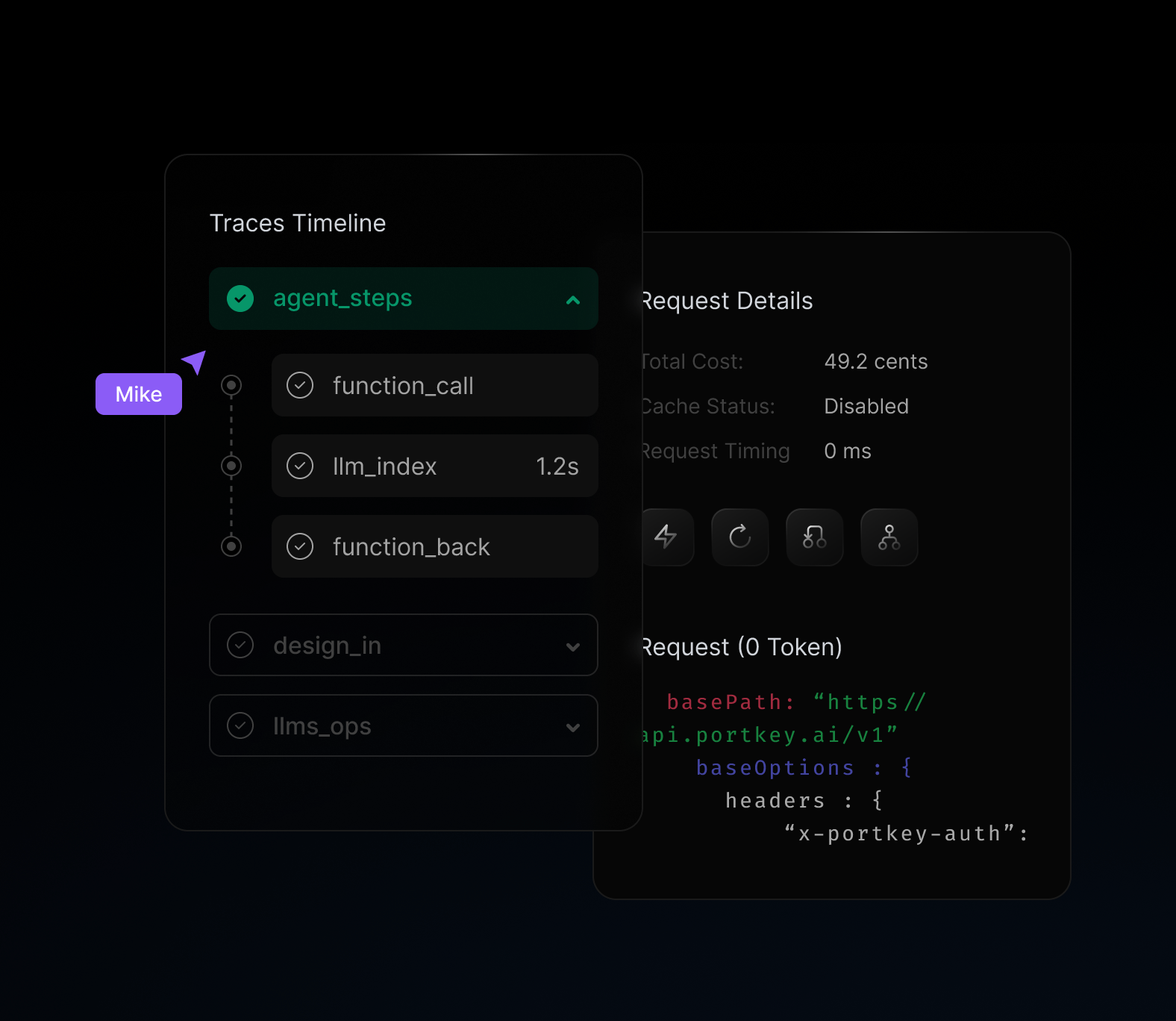

Portkey's traces-and-logs view acts as your AI detective kit, offering X-ray vision into your agent. Track every decision and action, and identify and solve problems faster. No more guesswork, just clear, actionable insights to refine your agent's performance.
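
Traces are most useful when every LLM call in one agent run shares an identifier. The sketch below generates one ID per run and reuses it for each step. The header name x-portkey-trace-id is an assumption here and should be confirmed against the Portkey docs; the helper new_trace_headers is hypothetical.

```python
import uuid


def new_trace_headers(run_name: str) -> dict:
    """Build one set of extra headers shared by every LLM call in an agent run."""
    trace_id = f"{run_name}-{uuid.uuid4().hex[:8]}"
    # Assumed header name; confirm against the Portkey docs before relying on it.
    return {"x-portkey-trace-id": trace_id}


headers = new_trace_headers("research-agent")
step_1_headers = dict(headers)  # e.g. the planning call
step_2_headers = dict(headers)  # e.g. the tool-using call
```

Because both steps carry the same ID, their logs can be grouped into a single trace rather than appearing as unrelated requests.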

3. Set Robust Guardrails

Protect your agent and users with strong safeguards. AI agents in production need guardrails. I can't stress this enough. An unchecked LLM may expose sensitive data or exhibit bias.

Portkey's Guardrails ecosystem acts as a supervisor for your AI. It verifies agent requests, controls inputs and outputs, and ensures your AI operates within predefined ethical and safety boundaries.

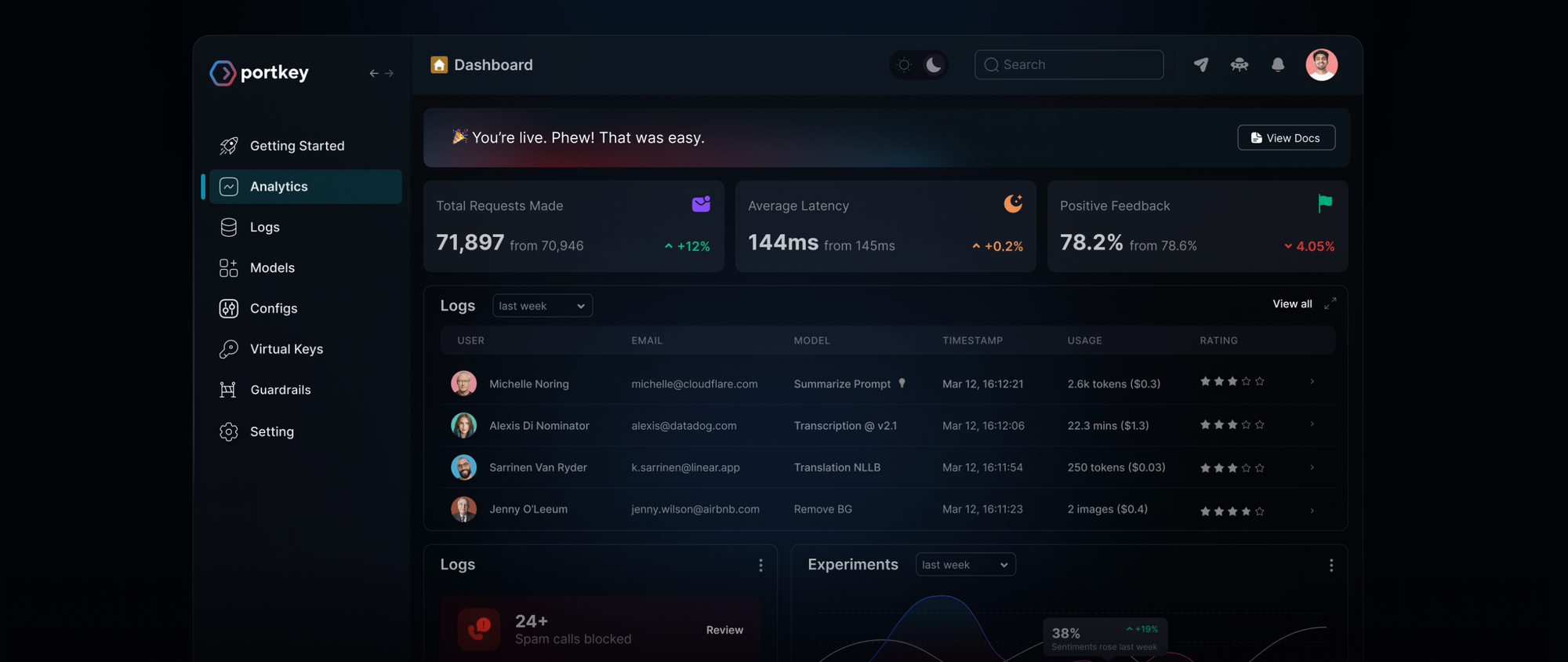
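
To make the idea of an output check concrete, here is a minimal sketch of the kind of rule a guardrail applies to a response before it reaches the user. It is plain Python for illustration only, not Portkey's Guardrails API; the function name, the email pattern, and the length limit are all assumptions.

```python
import re

# Simple illustrative pattern; real PII detection needs far more than this.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def check_output(text: str, max_chars: int = 2000) -> tuple[bool, list[str]]:
    """Return (passed, reasons) for a single agent response."""
    reasons = []
    if EMAIL_PATTERN.search(text):
        reasons.append("contains an email address")
    if len(text) > max_chars:
        reasons.append("response too long")
    return (not reasons, reasons)
```

A failed check can then block the response, trigger a retry, or log the request for review, depending on how strict the agent needs to be.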

4. Monitor Your Agent in Real Time

AI agents can be costly. Multiple moving parts make them complex. You need to have a bird's-eye view of your entire operation.

Portkey's observability suite acts as your AI control center. It offers a 20,000-foot view of your agent, tracking everything from costs and token usage to 40+ key performance metrics.
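
As a rough picture of what cost and token tracking involves, the sketch below rolls up per-call usage by agent. The model names, the prices, and the record fields are hypothetical placeholders, not Portkey's data model or any provider's real pricing.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real prices vary by model.
PRICE_PER_1K = {"model-a": 0.50, "model-b": 2.00}


def summarize_usage(calls: list[dict]) -> dict:
    """Aggregate token counts and estimated cost per agent."""
    totals = defaultdict(lambda: {"tokens": 0, "cost_usd": 0.0})
    for call in calls:
        entry = totals[call["agent"]]
        entry["tokens"] += call["tokens"]
        entry["cost_usd"] += call["tokens"] / 1000 * PRICE_PER_1K[call["model"]]
    return dict(totals)


calls = [
    {"agent": "planner", "model": "model-a", "tokens": 2000},
    {"agent": "planner", "model": "model-b", "tokens": 1000},
    {"agent": "writer", "model": "model-a", "tokens": 4000},
]
usage = summarize_usage(calls)
```

Even this toy rollup shows why a per-agent view matters: one agent in a multi-agent run can account for most of the spend.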

5. Build Reliable Agents

Agent runs are complex. You need a strong foundation to build your AI pipelines. Relying on a single provider like OpenAI or Anthropic can disrupt your agent's workflow when that provider has an outage.

Portkey has built-in reliability features like fallbacks, load balancing, retries, caching, and much more to help you build a rock-solid base. It keeps your AI standing, no matter what.

Scale with ease. Handle traffic spikes. Maintain performance under pressure. It's the bedrock your AI can rely on.

    {
      "retry": {
        "attempts": 5
      },
      "strategy": {
        "mode": "loadbalance" // Your AI's personal safety net
      },
      "targets": [
        {
          "provider": "openai",
          "api_key": "OpenAI_API_Key"
        },
        {
          "provider": "anthropic",
          "api_key": "Anthropic_API_Key"
        }
      ]
    }
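
Conceptually, retries combined with fallbacks amount to the loop below. This is an illustration of the idea in plain Python, not Portkey's gateway implementation; call_with_fallback and the target callables are hypothetical stand-ins for real provider clients.

```python
from typing import Callable, Sequence


def call_with_fallback(
    targets: Sequence[Callable[[str], str]], prompt: str, attempts: int = 5
) -> str:
    """Try each target in order, retrying up to `attempts` times before moving on."""
    last_error = None
    for target in targets:
        for _ in range(attempts):
            try:
                return target(prompt)
            except Exception as error:  # a real client would catch narrower errors
                last_error = error
    raise RuntimeError("all targets failed") from last_error
```

Handling this at the gateway level means the agent code does not need to carry its own retry loops around every LLM call.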

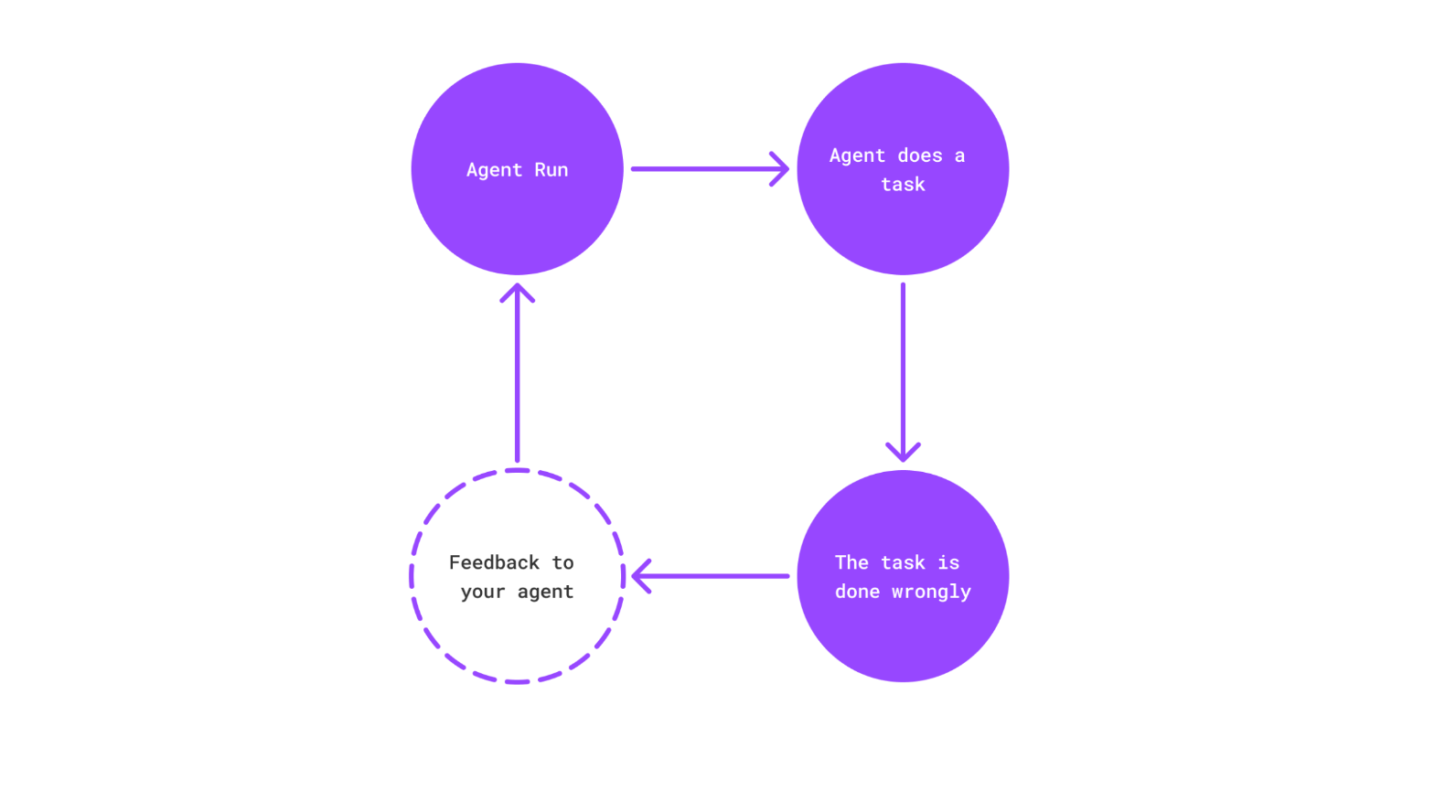

Continuous Improvement - Feedback to your Agent

Improve your Agent runs by capturing qualitative & quantitative user feedback on your requests. Portkey's Feedback API provides a simple way to get weighted feedback from customers on any request you served, at any stage in your app.

By capturing weighted feedback from users, you gain valuable data that can be used to fine-tune your LLMs. This continuous feedback loop ensures that your Agent is constantly evolving.
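
As an example of what weighted feedback buys you, the sketch below combines several feedback entries into one score. The field names value and weight and the function weighted_score are assumptions for illustration, not the Feedback API's schema.

```python
def weighted_score(feedback: list[dict]) -> float:
    """Weighted average of feedback values; each item has 'value' and 'weight'."""
    total_weight = sum(item["weight"] for item in feedback)
    if total_weight == 0:
        raise ValueError("no weighted feedback to aggregate")
    return sum(item["value"] * item["weight"] for item in feedback) / total_weight


feedback = [
    {"value": 1, "weight": 1.0},   # e.g. an explicit thumbs-up
    {"value": -1, "weight": 0.5},  # e.g. a weaker implicit signal
    {"value": 1, "weight": 0.5},
]
score = weighted_score(feedback)
```

Weights let a strong signal, such as an explicit rating, count for more than a weak one, such as a copied response.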

Get Started

Whether you're working with CrewAI, AutoGen, LangChain, LlamaIndex, or building your own agent, you can natively integrate Portkey with leading agent frameworks and take them to prod. See the docs for details.

LangChain

    pip install -qU langchain-openai portkey-ai

    from langchain_openai import ChatOpenAI
    from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

    llm1 = ChatOpenAI(
        api_key="OpenAI_API_Key",
        base_url=PORTKEY_GATEWAY_URL,
        default_headers=createHeaders(
            provider="openai",
            api_key="PORTKEY_API_KEY",
        ),
    )

AutoGen

    pip install -qU pyautogen portkey-ai

    from autogen import AssistantAgent, UserProxyAgent, config_list_from_json
    from portkey_ai import PORTKEY_GATEWAY_URL, createHeaders

    config = [
        {
            "api_key": "OPENAI_API_KEY",
            "model": "gpt-3.5-turbo",
            "base_url": PORTKEY_GATEWAY_URL,
            "api_type": "openai",
            "default_headers": createHeaders(
                api_key="PORTKEY_API_KEY",
                provider="openai",
            ),
        }
    ]

LlamaIndex

    pip install -qU llama-agents llama-index portkey-ai

    from llama_index.llms.openai import OpenAI
    from portkey_ai import PORTKEY_GATEWAY_URL, createHeaders

    gpt_4o_config = {
        "provider": "openai",  # Use the provider of your choice
        "api_key": "YOUR_OPENAI_KEY",
        "override_params": {"model": "gpt-4o"},
    }

    gpt_4o = OpenAI(
        api_base=PORTKEY_GATEWAY_URL,
        default_headers=createHeaders(
            api_key="PORTKEY_API_KEY",  # Replace with your Portkey API key
            config=gpt_4o_config,
        ),
    )

ControlFlow

    import controlflow as cf

    from langchain_openai import ChatOpenAI
    from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

    llm = ChatOpenAI(
        api_key="OpenAI_API_Key",
        base_url=PORTKEY_GATEWAY_URL,
        default_headers=createHeaders(
            provider="openai",  # Choose your provider
            api_key="PORTKEY_API_KEY",
        ),
    )

CrewAI

    pip install -qU crewai portkey-ai

    from langchain_openai import ChatOpenAI
    from portkey_ai import createHeaders, PORTKEY_GATEWAY_URL

    llm = ChatOpenAI(
        api_key="OpenAI_API_Key",
        base_url=PORTKEY_GATEWAY_URL,
        default_headers=createHeaders(
            provider="openai",  # Choose your provider
            api_key="PORTKEY_API_KEY",
        ),
    )

Phidata

    pip install phidata portkey-ai

    from phi.llm.openai import OpenAIChat
    from portkey_ai import PORTKEY_GATEWAY_URL, createHeaders

    llm = OpenAIChat(
        base_url=PORTKEY_GATEWAY_URL,
        api_key="OPENAI_API_KEY",  # Replace with your OpenAI key
        default_headers=createHeaders(
            provider="openai",
            api_key="PORTKEY_API_KEY",  # Replace with your Portkey API key
        ),
    )

Conclusion

You could spend months cobbling together fragile AI infrastructure. Or you could use Portkey as your catalyst to build production-ready AI agents, all before your coffee gets cold.