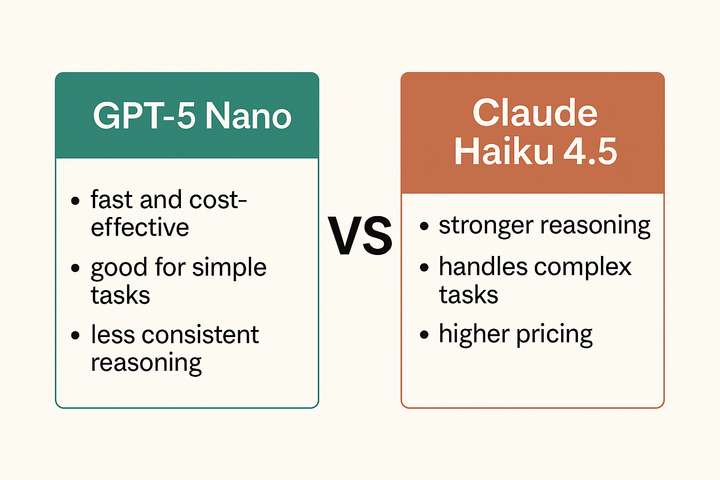

Comparing lean LLMs: GPT-5 Nano and Claude Haiku 4.5

Compare GPT-5 Nano and Claude Haiku 4.5 across reasoning, coding, and cost. See which lightweight model fits your production stack, then test both directly through Portkey’s Prompt Playground or AI Gateway.