The AI governance problem in higher education and how to solve it

From cost control to safe tool access, managing GenAI across campus isn’t simple. This post breaks down the operational challenges and what a centralized AI gateway should solve.

Giving students and faculty access to AI isn’t the hard part anymore. The models are available. The tools are ready. What’s hard is doing it responsibly, without blowing through budgets, exposing credentials, or creating security gaps across campus.

Most universities don’t just need API keys. They need a way to provision access based on course enrollment. They need guardrails that work out of the box. They need to give students tools like Cursor and Claude Code without compromising oversight.

This post breaks down the real governance challenges institutions face, and what a centralized solution needs to look like to solve them.

The governance challenge: balancing access, safety, and scale

The moment you try to open up access to these tools, a specific set of challenges comes up.

Managing and sharing model credentials across departments or courses quickly becomes chaotic. Keys get hardcoded into scripts, shared over email, reused across different contexts. Revoking or rotating them is nearly impossible.

Even worse, multiple users often end up sharing the same keys, which makes it impossible to attribute usage to a specific user or course. You can’t cap usage for a single class, set a quota per student, or even estimate which department is responsible for the month’s bill. That makes cost management reactive instead of proactive.

Not every student needs the same level of access. But managing different access levels for undergraduates, graduate students, and research assistants, let alone keeping them updated across semesters, is a logistical nightmare when done manually.

Students switch courses, graduate, or drop out. But in most AI setups, their access doesn’t change unless someone explicitly revokes it, so former students can keep using institutional tools and models indefinitely.

Without SSO and directory sync, AI systems sit outside the university’s core identity infrastructure. That leads to separate logins, siloed user lists, and a constant drift between actual enrollment and system access.

LLMs can also return inappropriate, biased, or unsafe responses, but most setups have no consistent way to apply guardrails across tools or models. Even if some teams implement filters manually, others might skip them entirely, leaving the institution exposed.

Apps like Cursor, Claude Code, LibreChat, and OpenWebUI are how students want to interact with AI today. But these tools often connect directly to model providers, bypassing any university-wide governance or monitoring. That means there’s no visibility, no policy enforcement, and no way to stop misuse or overuse when it happens.

Solving any one of these is hard. What universities need is a centralized solution that handles them all without compromising ease of use for students or control for administrators.

What a centralized solution should do

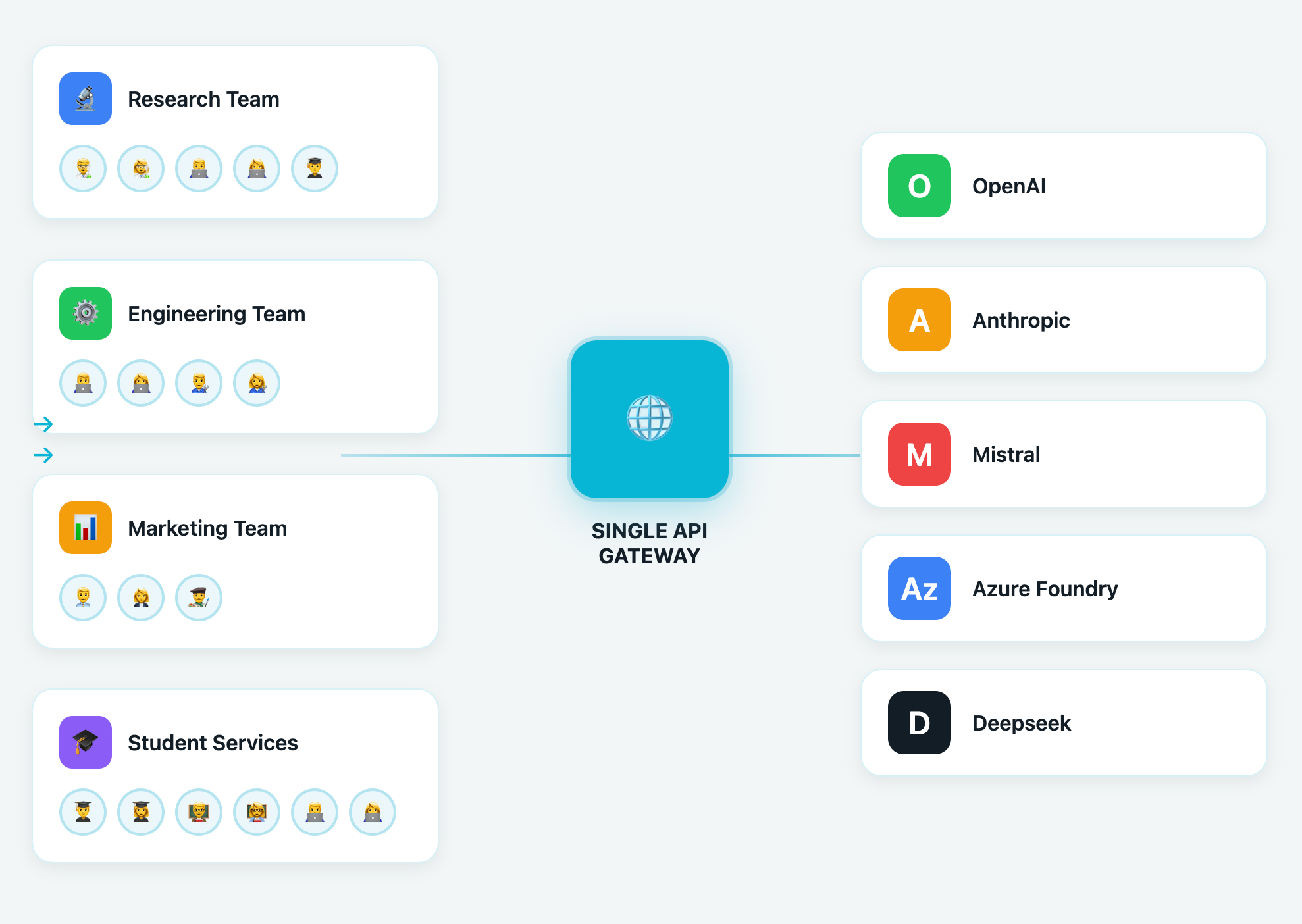

It starts with access. Every model request, whether to Claude, Gemini, Mistral, or an open-source LLM, should route through a central gateway. This gateway handles authentication, abstracts away provider credentials, and enforces usage controls automatically. Instead of juggling individual API keys or trying to guess at departmental budgets, universities can track usage in one place, apply quotas, and avoid overspending before it happens.
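To make the gateway's role concrete, here is a minimal sketch of the access layer it describes: users hold a gateway-issued "virtual key" instead of a provider secret, each key carries a budget, and spend rolls up by course. The names `VirtualKey`, `Gateway`, and `QuotaExceeded` are hypothetical, and charging an up-front cost estimate is a simplifying assumption; this is an illustration of the idea, not any product's actual API.

```python
from dataclasses import dataclass


@dataclass
class VirtualKey:
    """A hypothetical gateway-issued key. Users hold this, never the provider secret."""
    key_id: str
    owner: str          # student or faculty identifier
    course: str         # used to attribute spend to a course or department
    budget_usd: float   # hard cap for this key
    spent_usd: float = 0.0
    active: bool = True


class QuotaExceeded(Exception):
    """Raised when a request would push a key past its budget."""


class Gateway:
    def __init__(self, provider_secrets: dict[str, str]) -> None:
        # Provider credentials live only inside the gateway process.
        self._provider_secrets = provider_secrets
        self._keys: dict[str, VirtualKey] = {}

    def issue_key(self, vk: VirtualKey) -> None:
        self._keys[vk.key_id] = vk

    def revoke(self, key_id: str) -> None:
        # Keep the record for spend reports, but refuse further requests.
        if key_id in self._keys:
            self._keys[key_id].active = False

    def authorize(self, key_id: str, provider: str, estimated_cost_usd: float) -> str:
        """Check the key and its budget, reserve the cost, and return the
        provider credential the gateway attaches when forwarding the request."""
        vk = self._keys.get(key_id)
        if vk is None or not vk.active:
            raise PermissionError("unknown or revoked key")
        if vk.spent_usd + estimated_cost_usd > vk.budget_usd:
            raise QuotaExceeded(f"{vk.owner} would exceed ${vk.budget_usd:.2f}")
        # Reserve the estimate up front; a real gateway would reconcile it
        # against actual token usage once the provider responds.
        vk.spent_usd += estimated_cost_usd
        return self._provider_secrets[provider]

    def spend_by_course(self) -> dict[str, float]:
        """Roll up spend per course, including revoked keys."""
        totals: dict[str, float] = {}
        for vk in self._keys.values():
            totals[vk.course] = totals.get(vk.course, 0.0) + vk.spent_usd
        return totals
```

Because the budget check happens before the request is forwarded, overspending is refused rather than discovered on the monthly bill, and revoking one key never requires rotating the shared provider credential.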

But access alone isn’t enough. That gateway also needs to apply safety guardrails in real time. It should detect prompt injections, block jailbreak attempts, redact sensitive data, and flag outputs that violate policy. These rules should be customizable: broad defaults for undergraduates, stricter controls for high-risk research groups, and opt-outs where justified. The goal isn’t to micromanage every request, but to build a policy layer that institutions can trust.
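A per-workspace guardrail layer could be sketched as follows. This is a deliberately simple illustration, assuming regex-based redaction of email addresses and US Social Security numbers and a phrase list for prompt injection; production systems typically use trained classifiers, and the names `GuardrailPolicy` and `apply_input_guardrails` are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Toy detectors: real deployments would use dedicated PII and injection classifiers.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "disregard your system prompt",
)


@dataclass(frozen=True)
class GuardrailPolicy:
    """Hypothetical per-workspace policy; an approved opt-out flips a flag."""
    redact_pii: bool = True
    block_injection: bool = True


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reasons: list[str] = field(default_factory=list)


def apply_input_guardrails(prompt: str, policy: GuardrailPolicy) -> GuardrailResult:
    """Run input checks before a prompt is forwarded to any model."""
    lowered = prompt.lower()
    if policy.block_injection and any(m in lowered for m in INJECTION_MARKERS):
        return GuardrailResult(False, prompt, ["possible prompt injection"])
    text, reasons = prompt, []
    if policy.redact_pii:
        # subn returns (new_text, number_of_replacements).
        text, n_email = EMAIL.subn("[EMAIL]", text)
        text, n_ssn = US_SSN.subn("[SSN]", text)
        if n_email + n_ssn:
            reasons.append(f"redacted {n_email + n_ssn} PII span(s)")
    return GuardrailResult(True, text, reasons)
```

Since the policy is a value attached to a workspace, an undergraduate course can use the defaults while a research group with a justified exception runs with `GuardrailPolicy(redact_pii=False)`, and both still pass through the same code path.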

Access also needs to reflect enrollment and role. A single AI environment doesn’t work when students span hundreds of courses and levels. What’s needed is workspace isolation: separate environments for each course, lab, or research group, each with its own set of tools, models, and policies. Students in a generative design course might get multimodal tools. A CS research group might get access to raw API endpoints. An English class might get only a chat UI. With proper separation, this becomes manageable and auditable.
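One way to picture workspace isolation is as a lookup that every request must pass: the user must be a member, and both the model and the access surface must be allowed for that workspace. The workspace IDs, model aliases, and surface names below are hypothetical stand-ins for the three examples in the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workspace:
    name: str
    models: frozenset[str]      # model aliases this workspace may call
    surfaces: frozenset[str]    # how members reach them, e.g. "chat_ui" or "raw_api"
    members: frozenset[str]


# Hypothetical workspaces mirroring the course and lab examples.
WORKSPACES = {
    "DES-210": Workspace("Generative Design", frozenset({"multimodal-model", "image-model"}),
                         frozenset({"chat_ui"}), frozenset({"dana"})),
    "CS-LAB": Workspace("CS Research Group", frozenset({"code-model", "multimodal-model"}),
                        frozenset({"chat_ui", "raw_api"}), frozenset({"raj"})),
    "ENG-101": Workspace("Intro to Writing", frozenset({"chat-model"}),
                         frozenset({"chat_ui"}), frozenset({"emma"})),
}


def is_allowed(workspace_id: str, user: str, model: str, surface: str) -> bool:
    """Allow a request only if membership, model, and surface all match the workspace."""
    ws = WORKSPACES.get(workspace_id)
    if ws is None:
        return False
    return user in ws.members and model in ws.models and surface in ws.surfaces
```

Denying by default (an unknown workspace returns `False`) keeps the separation auditable: every allowed request can be traced to an explicit workspace entry.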

And since courses change every semester, that separation has to be dynamic. The system should integrate with enrollment data to automatically create workspaces, assign students, and revoke access when the term ends. No admin should have to manually add or remove hundreds of users every few months.
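The enrollment-driven lifecycle can be reduced to a reconciliation step run on each feed update: create the course workspace if it is new, add newly enrolled students, remove anyone who dropped, and empty the workspace once the term ends. The `Section` record and the shape of the enrollment feed are assumptions for illustration; a real integration would read from the campus student information system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Section:
    """One course section as reported by a hypothetical enrollment feed."""
    course_id: str
    term_end: date
    enrolled: frozenset[str]


def sync_section(workspaces: dict[str, set[str]], section: Section,
                 today: date) -> tuple[set[str], set[str]]:
    """Reconcile one course workspace with its roster; returns (added, removed)."""
    # Create the workspace the first time the course appears in the feed.
    members = workspaces.setdefault(section.course_id, set())
    # After the term ends, nobody should keep access.
    desired = set(section.enrolled) if today <= section.term_end else set()
    added, removed = desired - members, members - desired
    # Mutate in place so the workspaces dict reflects the new membership.
    members |= added
    members -= removed
    return added, removed
```

Because the function computes the difference between desired and current state, it is safe to rerun on every sync: a second run with an unchanged roster adds and removes nothing.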

Behind all of this is identity. The system needs to integrate with existing SSO and directory infrastructure, such as Azure AD or whatever the campus uses, so that access is managed based on real user data. SCIM sync keeps roles up to date and ensures access maps cleanly onto university systems.
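As a sketch of what SCIM sync feeds into, the function below reads a SCIM 2.0 User resource in the core schema defined by RFC 7643 (`userName`, `active`, and the multi-valued `groups` attribute) and maps directory groups to gateway roles. The group names and role names are hypothetical, and some identity providers push membership through separate Group resources instead; the same group-to-role mapping would apply there.

```python
import json
from typing import Optional

# Hypothetical mapping from directory group names to gateway roles.
ROLE_BY_GROUP = {
    "faculty": "instructor",
    "grad-students": "graduate",
    "undergrads": "undergraduate",
}
# If a user is in several groups, the first match in this order wins.
ROLE_PRIORITY = ("instructor", "graduate", "undergraduate")


def role_from_scim_user(payload: str) -> tuple[str, Optional[str]]:
    """Map a SCIM 2.0 User resource to (userName, role).

    A role of None means the user should have no AI access."""
    user = json.loads(payload)
    # Treat a missing or false `active` flag as deprovisioned (a conservative choice).
    if user.get("active") is not True:
        return user["userName"], None
    groups = {g.get("display") for g in user.get("groups", [])}
    roles = {ROLE_BY_GROUP[g] for g in groups if g in ROLE_BY_GROUP}
    for role in ROLE_PRIORITY:
        if role in roles:
            return user["userName"], role
    return user["userName"], None
```

When the identity provider sets `active` to false, for example after graduation, the next sync resolves the user to no role, and the gateway stops accepting their requests without any manual cleanup.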

Finally, students shouldn’t just get access to models; they should also get access to the tools that make those models useful. Apps like Cursor, Claude Code, LibreChat, and OpenWebUI are becoming staples in how students interact with AI. But instead of blocking these tools or forcing workarounds, institutions need a way to allow them safely. That means routing tool traffic through the gateway, applying the same guardrails and policies, and giving admins visibility into usage patterns.
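For command-line tools, "routing tool traffic through the gateway" often comes down to overriding the base URL and credential the tool reads from its environment. The sketch below assumes Claude Code's `ANTHROPIC_BASE_URL` and `ANTHROPIC_AUTH_TOKEN` variables and the `OPENAI_BASE_URL` and `OPENAI_API_KEY` variables read by the official OpenAI SDKs; the gateway URL is hypothetical, and each tool's current documentation (and the gateway's expected paths and headers) should be checked before relying on this.

```python
import os
from collections.abc import Mapping
from typing import Optional

# Hypothetical campus gateway endpoint.
GATEWAY_URL = "https://ai-gateway.example.edu"


def gateway_env(virtual_key: str,
                base_env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Build an environment that points AI tools at the gateway instead of the provider."""
    env = dict(os.environ if base_env is None else base_env)
    # Claude Code: send Anthropic API traffic to the gateway, authenticating
    # with the gateway-issued key rather than a provider key.
    env["ANTHROPIC_BASE_URL"] = GATEWAY_URL
    env["ANTHROPIC_AUTH_TOKEN"] = virtual_key
    # Clients built on the official OpenAI SDKs read these variables.
    env["OPENAI_BASE_URL"] = f"{GATEWAY_URL}/v1"
    env["OPENAI_API_KEY"] = virtual_key
    # Drop any personal provider key so the tool cannot bypass the gateway with it.
    env.pop("ANTHROPIC_API_KEY", None)
    return env
```

A launcher could then start a tool with something like `subprocess.run(["claude"], env=gateway_env(key))`. GUI apps such as Cursor, LibreChat, and OpenWebUI are usually pointed at a custom endpoint through their own settings or config files instead, but the principle is the same: the tool holds only a gateway key.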

In short, a centralized governance layer needs to handle all of it: access, safety, identity, provisioning, and tools. Not as disconnected systems, but as one cohesive interface for managing AI at campus scale.

Portkey: AI infrastructure built for education

Portkey solves these governance challenges with a centralized AI gateway built specifically for large-scale institutions. It’s now the official Internet2 NET+ AI Gateway, trusted by universities like NYU and Princeton to securely deploy GenAI across campus.

With Portkey, institutions get a single control point for all AI traffic across models, tools, and users. You no longer need to hand out API keys. Instead, every request is routed through Portkey, where it’s authenticated, logged, and policy-enforced.

Guardrails come built-in. You can filter outputs, block injections, redact sensitive data, or flag unsafe completions automatically and in real time. These protections can be customized per workspace, course, or user group.

Every student or faculty member gets access based on their role and enrollment. Workspaces are isolated by course, department, or project. And as students move across semesters, Portkey automatically provisions or deactivates access by syncing with your enrollment systems.

You can plug into your campus-wide identity system to manage access centrally. And you can expose safe, governed access to modern AI tools like LibreChat, Claude Code, Cursor, or OpenWebUI without giving up control or visibility.

Whether it’s providing access to 1,600+ LLMs, enforcing safety and cost limits, or giving students the tools they need to build with AI, Portkey gives universities the infrastructure to do it responsibly.

If you’re looking to give your institution secure, structured access to GenAI, Portkey is built for exactly that. As the official Internet2 NET+ AI Gateway, we help universities roll out AI safely and at scale, with zero custom infrastructure.

Curious? You can either get started yourself or book a demo with us.