What is tree of thought prompting?

Large language models (LLMs) keep getting better, and so do the ways we work with them. Tree of thought prompting is a new technique that helps LLMs solve complex problems. It works by breaking down the model's thinking into clear steps, similar to how humans work through difficult problems. This approach helps LLMs tackle challenging tasks more effectively by exploring different paths to find the best solution.

What is tree of thought prompting?

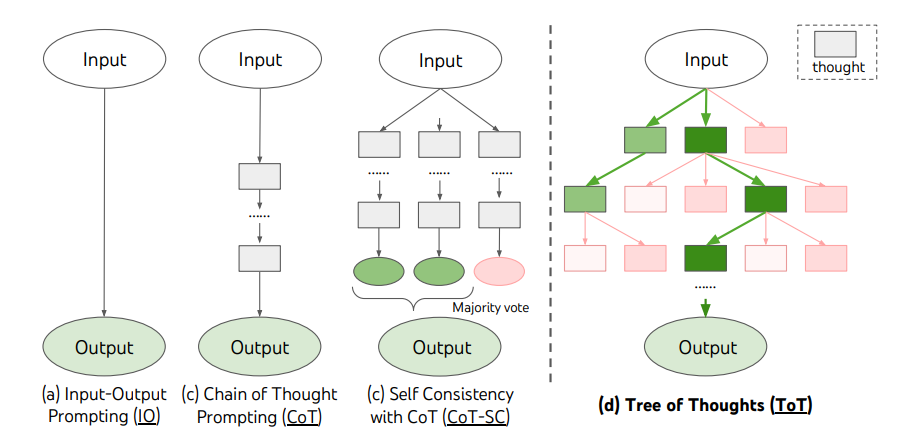

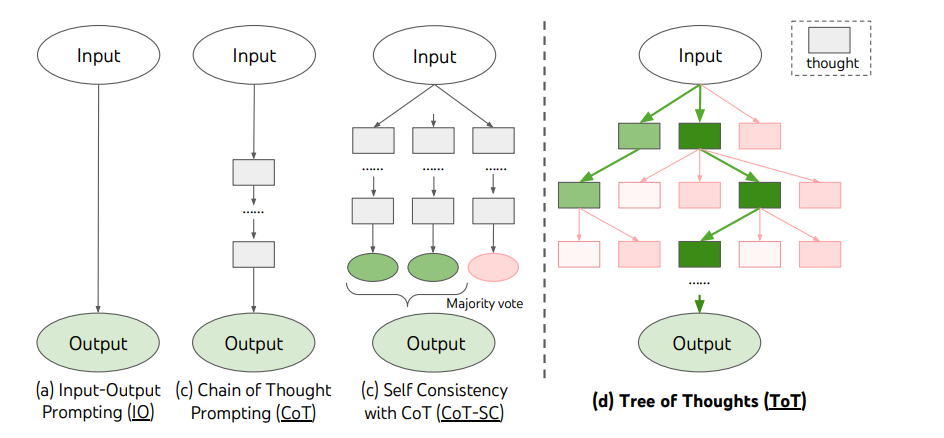

Tree of thought prompting is a prompt engineering technique that organizes the reasoning process of an LLM into a branching structure, much like a decision tree. Instead of moving straight from question to answer, the model explores multiple potential paths, evaluating each before committing to the most promising one.

This approach mirrors how humans tackle complex problems: by brainstorming, weighing options, and iterating through potential solutions. It departs from traditional single-step or few-shot prompting, which often assumes a direct path to the answer. Tree of thought prompting, by contrast, accepts the added complexity of exploring several paths, which makes it particularly suited to tasks requiring deep reasoning or multi-step planning.

How tree of thought prompting works

Here's how tree-of-thought prompting works in practice:

- Branching solutions: The model generates multiple potential answers or intermediate steps based on a given prompt. Each branch represents a different line of reasoning or approach to the problem.

- Evaluating outcomes: The branches are assessed based on predefined criteria, such as accuracy, relevance, or feasibility. This evaluation can be automated or guided by additional prompts.

- Pruning and selection: Less promising branches are pruned, and the model focuses on the most viable paths, iterating as needed until an optimal solution is reached.

Imagine using tree of thought prompting to plan a marketing campaign. The model might generate several approaches: focusing on social media ads, influencer partnerships, or traditional media. Each path is then evaluated for potential ROI, audience reach, and feasibility. After pruning weaker ideas, the model refines the most promising strategy into a detailed plan.
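The three steps above can be sketched as a single round of branch, evaluate, and prune. This is a minimal illustration, not a reference implementation: the scores in `TOY_SCORES` are made-up numbers standing in for whatever an LLM or a human reviewer would assign, and `prune` is a hypothetical helper name.

```python
from typing import Callable, Dict, List

def prune(candidates: List[str], score: Callable[[str], float], keep: int) -> List[str]:
    """Rank every branch by its score and keep the `keep` best ones."""
    ranked = sorted(candidates, key=score, reverse=True)
    return ranked[:keep]

# Hypothetical evaluation results (assumed values, not real campaign data).
TOY_SCORES: Dict[str, float] = {
    "social media ads": 0.7,
    "influencer partnerships": 0.8,
    "traditional media": 0.4,
}

# 1. Branching: in a real system these would come from the model.
branches = list(TOY_SCORES)

# 2. Evaluating and 3. pruning: look up each score, keep the top two.
survivors = prune(branches, lambda b: TOY_SCORES[b], keep=2)
print(survivors)
```

In a full pipeline this round would repeat: the surviving branches are expanded into more detailed plans, scored again, and pruned again.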

Core framework of tree-of-thought prompting

The ToT framework breaks down into four key elements that work together to enable deliberate decision-making:

Thought Decomposition

The first challenge is breaking down complex problems into "thoughts": units of text that serve as intermediate steps toward a solution. This decomposition requires a careful balance. Each thought must be substantial enough for the LLM to evaluate meaningfully, yet small enough that the model can generate diverse, high-quality options. The structure also needs to match the problem's natural breakpoints.

For example, when solving mathematical problems, a thought might be a single equation step. For creative writing, it could be a paragraph-level outline. The key is finding the right granularity that enables both creative exploration and reliable evaluation.
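One way to make the idea of a "thought" concrete is to store a partial solution as the problem plus the sequence of thoughts so far. The sketch below assumes a thought is one equation step, as in the math example; `ThoughtState`, `extend`, and `render` are illustrative names, not part of any library.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class ThoughtState:
    """A node in the tree: the problem plus the thoughts chosen so far."""
    problem: str
    thoughts: Tuple[str, ...] = ()

    def extend(self, thought: str) -> "ThoughtState":
        # Return a new child node; the parent stays unchanged so that
        # sibling branches can share it.
        return ThoughtState(self.problem, self.thoughts + (thought,))

    def render(self) -> str:
        # Text form of the state, suitable for pasting into a prompt.
        steps = "\n".join(f"Step {i + 1}: {t}" for i, t in enumerate(self.thoughts))
        return f"Problem: {self.problem}\n{steps}".rstrip()

root = ThoughtState("Solve 2x + 3 = 11")
s1 = root.extend("2x = 8")   # one thought = one equation step
s2 = s1.extend("x = 4")
print(s2.render())
```

Changing the granularity only changes what goes into each `thought` string: a single equation here, a paragraph-level outline for a writing task.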

Thought Generation

Tree of thought implements two different strategies for exploring possible solutions:

Independent Sampling works like parallel brainstorming - generating multiple separate thoughts simultaneously. This approach is great when dealing with open-ended tasks like creative writing, where diversity of ideas is crucial.

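Sequential Proposal asks the model to propose several candidate thoughts one after another in the same context, so each new proposal is generated with the earlier ones in view and is less likely to repeat them. This proves especially effective for highly structured problems like mathematical reasoning or puzzle-solving, where each thought is short and tightly constrained.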
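The difference between the two strategies shows up mainly in how the model is called. The sketch below assumes independent sampling means several separate calls with the same prompt, and sequential proposal means one call that lists several candidates in a row, so later candidates are written with earlier ones in context. The `LLM` type, the prompt wording, and `fake_llm` are all hypothetical stand-ins so the example runs without a real model.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # assumed interface: prompt in, completion out

def sample_thoughts(llm: LLM, state: str, k: int) -> List[str]:
    """Independent sampling: k separate calls that share one prompt."""
    prompt = f"{state}\nWrite one possible next step:"
    return [llm(prompt).strip() for _ in range(k)]

def propose_thoughts(llm: LLM, state: str, k: int) -> List[str]:
    """Sequential proposal: one call that lists up to k distinct next steps."""
    prompt = f"{state}\nList {k} different possible next steps, one per line:"
    lines = [line.strip() for line in llm(prompt).splitlines()]
    return [line for line in lines if line][:k]

def fake_llm(prompt: str) -> str:
    # Canned replies; a real model would return varied text.
    if prompt.endswith("one per line:"):
        return "4 + 9 = 13\n10 - 4 = 6\n9 * 10 = 90"
    return "4 + 9 = 13"

state = "Numbers: 4 9 10 13"
print(sample_thoughts(fake_llm, state, k=3))
print(propose_thoughts(fake_llm, state, k=3))
```

With a real model, independent sampling relies on sampling randomness for diversity, while the listing prompt asks for diversity explicitly.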

State Evaluation

The framework's evaluation mechanism decides which states are worth expanding, using one of two approaches:

Independent Evaluation examines each potential state on its own merits, assessing how promising it looks for reaching the final goal. This might involve checking mathematical validity, narrative coherence, or logical soundness.

Comparative Voting puts different states head-to-head and has the model select the best paths forward. This helps identify subtle quality differences that might be missed when each state is judged in isolation.
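Both evaluation styles can be sketched as small functions around a model call. The label set (`sure`, `likely`, `impossible`), its numeric mapping, the prompt wording, and the `fake_judge` stub are assumptions chosen for illustration; a real system would tune all of them to the task.

```python
from collections import Counter
from typing import Callable, List

LLM = Callable[[str], str]  # assumed interface: prompt in, completion out

# Assumed mapping from a verbal judgement to a numeric value.
VALUE_MAP = {"sure": 1.0, "likely": 0.5, "impossible": 0.0}

def value_state(llm: LLM, state: str, n_samples: int = 3) -> float:
    """Independent evaluation: score one state on its own, averaged over samples."""
    prompt = f"{state}\nCan this reach the goal? Answer sure, likely, or impossible:"
    answers = [llm(prompt).strip().lower() for _ in range(n_samples)]
    return sum(VALUE_MAP.get(a, 0.0) for a in answers) / n_samples

def vote_states(llm: LLM, states: List[str], n_votes: int = 3) -> List[int]:
    """Comparative voting: show all states together and count votes per state."""
    numbered = "\n".join(f"{i + 1}. {s}" for i, s in enumerate(states))
    prompt = f"{numbered}\nWhich option is most promising? Reply with its number:"
    votes: Counter = Counter()
    for _ in range(n_votes):
        reply = llm(prompt).strip()
        # Ignore replies that are not a valid option number.
        if reply.isdigit() and 1 <= int(reply) <= len(states):
            votes[int(reply) - 1] += 1
    return [votes[i] for i in range(len(states))]

def fake_judge(prompt: str) -> str:
    # Canned replies so the example runs without a model.
    return "2" if "Reply with its number" in prompt else "likely"

print(value_state(fake_judge, "4 + 9 = 13 (left: 10 13 13)"))
print(vote_states(fake_judge, ["plan A", "plan B", "plan C"]))
```

Independent values are comparable across calls and fit numeric thresholds; vote counts only rank the states that were shown together.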

Integrating Classical Search Algorithms

The final piece of the puzzle involves leveraging time-tested search algorithms to explore the solution space efficiently:

Breadth-first search (BFS) works well for problems with limited depth, systematically exploring all possibilities at each level before moving deeper. This is effective for tasks that require considering multiple parallel approaches.

Depth-first search (DFS) is better at deeper exploration, following promising paths to their conclusion before backtracking if needed. This strategy helps manage memory constraints while still enabling thorough exploration of complex solution spaces.

Both approaches can be enhanced with pruning strategies to focus computational resources on the most promising paths, making the overall process more efficient without sacrificing effectiveness.
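The two search strategies, each with a simple pruning rule, can be sketched on a toy problem: pick three numbers from 1 to 3 whose sum is 7. Here `expand` and `score` play the roles of thought generation and state evaluation; in a real system both would call the model. The beam width, the threshold, and the toy scoring function are arbitrary choices for this sketch.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]
TARGET, STEPS = 7, 3

def expand(state: State) -> List[State]:
    """Thought generation stand-in: append 1, 2, or 3."""
    return [state + (n,) for n in (1, 2, 3)]

def score(state: State) -> float:
    """State evaluation stand-in: closer to the target sum is better."""
    return -abs(TARGET - sum(state))

def is_goal(state: State) -> bool:
    return len(state) == STEPS and sum(state) == TARGET

def bfs(root: State, depth: int, beam: int) -> State:
    """Expand level by level, keeping only the `beam` best states per level."""
    frontier = [root]
    for _ in range(depth):
        children = [c for s in frontier for c in expand(s)]
        frontier = sorted(children, key=score, reverse=True)[:beam]
    return frontier[0]

def dfs(state: State, depth: int, threshold: float) -> Optional[State]:
    """Follow the best child first; skip weak children; backtrack on failure."""
    if is_goal(state):
        return state
    if depth == 0:
        return None
    for child in sorted(expand(state), key=score, reverse=True):
        if score(child) < threshold:
            continue  # pruned: not promising enough to explore
        found = dfs(child, depth - 1, threshold)
        if found is not None:
            return found
    return None  # every child failed, so the caller tries its next option

bfs_result = bfs((), depth=STEPS, beam=2)
dfs_result = dfs((), depth=STEPS, threshold=-5)
print(bfs_result, dfs_result)
```

BFS holds a whole level of states in memory at once, while DFS holds only the current path, which is the trade-off the text above describes.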

Together, these components create a framework that enables language models to approach problem-solving more like humans do - with the ability to explore multiple paths, evaluate options, and adjust course as needed. This marks a significant advancement over simpler prompting methods, opening new possibilities for AI-assisted problem-solving across a wide range of domains.

Benefits of tree of thought prompting

When LLMs explore multiple paths instead of just one, they catch problems and opportunities they might otherwise miss. This helps avoid common pitfalls and leads to more solid results. Some problems need multiple steps to be solved properly. Tree of thought prompting is helpful here because it maps out different approaches and follows them systematically. This structured approach helps break down tricky problems into manageable pieces.

Also, by examining different paths instead of rushing to the first solution, LLMs often uncover creative approaches that wouldn't be obvious with simpler prompting methods.

Challenges of tree of thought prompting

While this technique can substantially improve how LLMs tackle complex problems, it's important to understand both its capabilities and limitations.

When you run tree of thought prompting, you're asking your LLM to explore multiple solution paths rather than one. This multi-path exploration comes with a catch: every branch that is generated and evaluated costs additional model calls. For a self-hosted model, that typically means more GPU time and higher memory usage; for a hosted API, it means more requests, more tokens, and higher latency.
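A rough way to see the extra cost is to count model calls for a breadth-first search. The estimate below assumes one call per generated thought and a fixed number of evaluation calls per thought; real systems that batch proposals or votes into a single prompt will need fewer calls. The function name and parameters are illustrative.

```python
def estimate_llm_calls(depth: int, beam: int, k: int, evals_per_state: int = 1) -> int:
    """Count generation plus evaluation calls for a beam-limited BFS.

    depth: number of levels in the tree
    beam: states kept after pruning at each level
    k: thoughts generated per kept state
    evals_per_state: evaluation calls spent on each generated thought
    """
    total = 0
    frontier = 1  # the search starts from a single root state
    for _ in range(depth):
        generated = frontier * k
        total += generated                     # generation calls
        total += generated * evals_per_state   # evaluation calls
        frontier = min(beam, generated)        # pruning caps the next level
    return total

# A single direct prompt is 1 call; this configuration needs far more.
print(estimate_llm_calls(depth=3, beam=5, k=5, evals_per_state=3))
```

With these example settings the count is 220 calls, which is why pruning width and evaluation budget are usually the first parameters to tune.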

Setting up effective tree of thought prompts takes careful planning too. You need clear evaluation metrics to help the LLM judge which solution paths are worth pursuing and which should be dropped. This isn't just about writing good prompts - it's about designing a decision framework that guides the LLM toward meaningful solutions while avoiding computational dead ends.

There's also a tricky balance between specialization and flexibility. When you fine-tune your prompts for specific problems, you risk creating a system that works brilliantly for known scenarios but not for new challenges. This specialization-generalization tradeoff needs constant attention, especially if you're building systems that need to handle diverse use cases.

These challenges shape how and when you should deploy tree of thought prompting. For some applications, the improved problem-solving capabilities justify the extra computational cost and setup complexity. For others, simpler prompting strategies might be more practical.

The key is matching the prompt engineering technique to your specific needs while keeping resource constraints and long-term maintainability in mind.

Tree of thought prompting represents a different approach to reasoning and problem-solving. By mirroring human decision-making processes, it opens new possibilities for tackling complex tasks and generating creative solutions. Whether you're building AI applications for healthcare, finance, or any other industry, tree of thought prompting is a concept worth exploring.