We Tracked $93M in LLM Spend Last Year. Now the Data is Yours.

Accurate pricing for 2,000+ models across 40+ providers. Free API, no auth required.

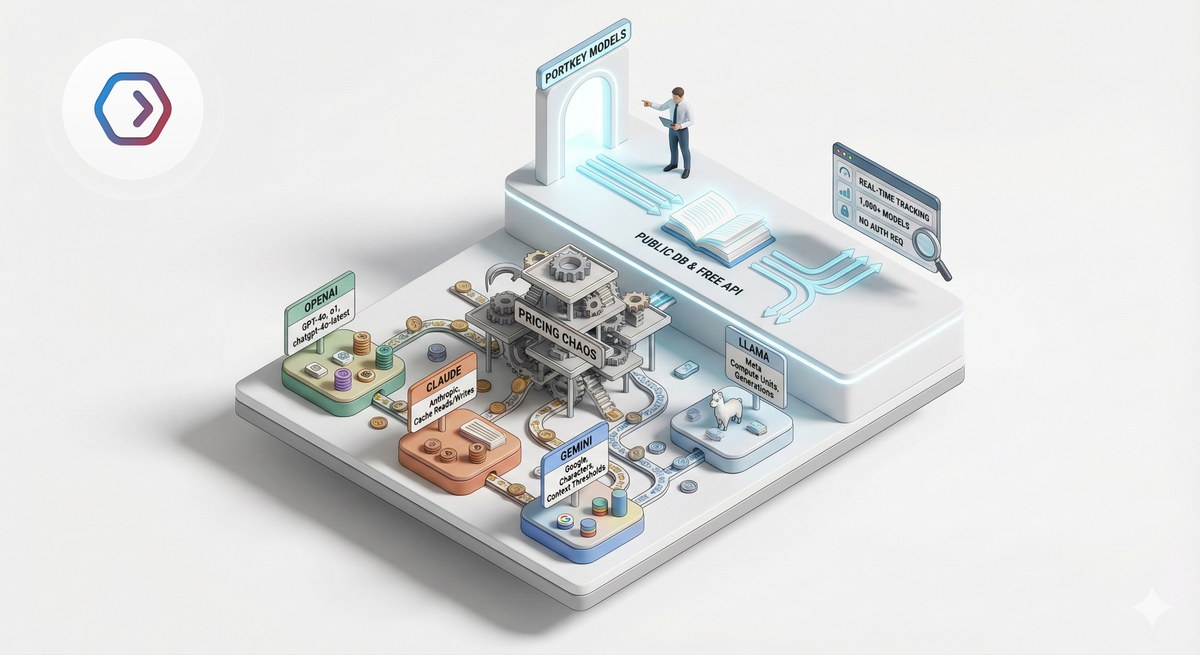

LLM pricing is a mess.

Every provider does it differently. OpenAI charges per token, Google charges per character, others use "generations" or "compute units" or whatever they made up that quarter. The simple "input costs X, output costs Y" model breaks the moment you account for thinking tokens, cache reads, context thresholds, and per-request fees.

So every team building on LLMs ends up maintaining its own spreadsheet. It's stale by Friday.

Here's why:

The Naming Problem. OpenAI has gpt-5, gpt-5.2-pro-2025-12-11, o1, o3-mini: all different models, all different prices. Good luck explaining to finance why the same "gpt-4" cost 3x more this month.
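One practical consequence: a price lookup has to key on the exact model ID. The sketch below is hypothetical (the helper names and the fallback policy are ours, not Portkey's or OpenAI's). It assumes dated snapshots end in a `-YYYY-MM-DD` suffix, as in gpt-5.2-pro-2025-12-11, and only falls back to the undated base name when no exact entry exists.

```python
from __future__ import annotations

import re
from typing import Optional

# Hypothetical pattern: a dated snapshot ends in "-YYYY-MM-DD".
SNAPSHOT_RE = re.compile(r"^(?P<base>.+?)-(?P<date>\d{4}-\d{2}-\d{2})$")


def split_snapshot(model_id: str) -> tuple[str, Optional[str]]:
    """Split 'gpt-5.2-pro-2025-12-11' into ('gpt-5.2-pro', '2025-12-11')."""
    match = SNAPSHOT_RE.match(model_id)
    if match is None:
        return model_id, None  # no date suffix, e.g. "o3-mini"
    return match.group("base"), match.group("date")


def lookup_price(prices: dict[str, float], model_id: str) -> Optional[float]:
    """Return the price for model_id, or None if nothing matches."""
    # Exact ID first: a snapshot may be priced differently from its alias.
    if model_id in prices:
        return prices[model_id]
    # Otherwise fall back to the undated base name (a policy choice, not a rule).
    base, _ = split_snapshot(model_id)
    return prices.get(base)
```

Falling back silently is a judgment call. A stricter version would raise instead, so an unknown snapshot surfaces as an error rather than as a guessed price.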

The Units Problem. OpenAI charges per token. Google charges per character. Anthropic charges per token but with separate rates for "cache creation" vs "cache reads." Cohere uses "generations" and "classifications" and "summarization units." Amazon Bedrock prices the same Claude model differently than Anthropic direct.
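Because the unit differs per provider, a rate card has to carry its unit alongside the price. A minimal sketch, assuming a hypothetical `Rate` record and usage counts keyed by unit name: it bills each provider in its own unit and refuses to guess a characters-to-tokens conversion, since that ratio depends on the tokenizer.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rate:
    """One billing line: a price and the unit it is quoted in (hypothetical schema)."""
    unit: str               # e.g. "token" or "character"
    usd_per_million: float  # price per million of `unit`


def line_cost(rate: Rate, usage: dict[str, int]) -> float:
    """Bill usage in the provider's own unit; never convert between units."""
    if rate.unit not in usage:
        # Refuse to guess a characters-per-token ratio: it depends on the tokenizer.
        raise KeyError(f"usage has no count for billing unit {rate.unit!r}")
    return usage[rate.unit] * rate.usd_per_million / 1_000_000
```

With placeholder prices (not any provider's real rates), `line_cost(Rate("token", 2.0), {"token": 1_000_000})` returns 2.0, while the same usage billed against a `Rate("character", ...)` raises instead of silently mixing units.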

The Hidden Dimensions Problem. The sticker price is "input/output tokens." The actual bill includes thinking tokens, cache writes vs. reads, context thresholds, per-request fees, and multimodal surcharges. On Claude Sonnet, Anthropic charges $3.75/M to write to cache but only $0.30/M to read from it, a 12.5x gap. Gemini 2.5 Pro charges $1.25/M under 200K context, $2.50/M above. None of this is on the marketing page.
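Both of these dimensions are easy to get wrong in code. This sketch uses the Gemini 2.5 Pro input rates quoted above and Anthropic's published Claude Sonnet cache rates ($3.75/M to write, $0.30/M to read, assuming 5-minute cache writes). It also assumes Gemini picks the tier from the total prompt size and applies it to every prompt token, rather than charging only the tokens past 200K at the higher rate. Function and constant names are illustrative.

```python
# Gemini 2.5 Pro input pricing quoted above, USD per million tokens.
GEMINI_25_PRO_THRESHOLD = 200_000
GEMINI_25_PRO_LOW = 1.25   # prompts up to 200K tokens
GEMINI_25_PRO_HIGH = 2.50  # prompts above 200K tokens

# Claude Sonnet prompt-caching rates, USD per million tokens
# (assumed: 5-minute cache writes).
SONNET_CACHE_WRITE = 3.75
SONNET_CACHE_READ = 0.30


def gemini_input_cost(prompt_tokens: int) -> float:
    # Assumption: the tier is picked from the total prompt size and applied
    # to every prompt token, not just the tokens beyond the threshold.
    if prompt_tokens <= GEMINI_25_PRO_THRESHOLD:
        rate = GEMINI_25_PRO_LOW
    else:
        rate = GEMINI_25_PRO_HIGH
    return prompt_tokens * rate / 1_000_000


def sonnet_cache_cost(write_tokens: int, read_tokens: int) -> float:
    # Writes and reads are separate line items with very different rates.
    return (write_tokens * SONNET_CACHE_WRITE
            + read_tokens * SONNET_CACHE_READ) / 1_000_000
```

Under that assumption the threshold is a cliff: a 200,001-token prompt costs slightly more than twice a 200,000-token one ($0.5000025 vs. $0.25).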

And lastly, the Velocity Problem. Pricing isn't static. DeepSeek dropped R1 pricing from $0.55 to $0.14 input in weeks. OpenAI cut GPT-4 class pricing by 80% in a year. Google releases new Gemini tiers mid-quarter with no announcement. If your pricing data is a week old, it's wrong.
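The arithmetic behind the velocity problem, using the DeepSeek R1 input prices quoted above: an estimate still running on the old price overstates input costs by almost 4x. The helper names are illustrative.

```python
def percent_drop(old_price: float, new_price: float) -> float:
    """Percentage decrease from old_price to new_price."""
    return 100 * (old_price - new_price) / old_price


def overstatement(stale_price: float, current_price: float) -> float:
    """How many times too high an estimate is when it uses a stale price."""
    return stale_price / current_price


# DeepSeek R1 input prices quoted above, USD per million tokens.
OLD_R1_INPUT, NEW_R1_INPUT = 0.55, 0.14

print(f"price drop: {percent_drop(OLD_R1_INPUT, NEW_R1_INPUT):.1f}%")
# price drop: 74.5%
print(f"stale estimate: {overstatement(OLD_R1_INPUT, NEW_R1_INPUT):.2f}x too high")
# stale estimate: 3.93x too high
```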

Of course, we at Portkey are not the first to take this on. Credit where it's due, there are a lot of good efforts:

Theo's GitHub Gist: A viral, 280+ CSV of model prices. But the comments tell the real story: "could you add Gemini 2.5 Pro?", "Did 4o just up their pricing?", "alibaba cloud offers qwen, but its pricing page is horrible." It's crowdsourced, manually updated, and perpetually chasing changes. Good for a quick reference.

LiteLLM's Pricing JSON: The most comprehensive static dataset out there. Covers hundreds of models with input/output costs, context windows, and capabilities. The problem: it's a JSON file in a GitHub repo. You parse it yourself. There's no API. When Anthropic adds a new caching tier, you wait for a PR to merge.

OpenRouter's /models API: Actually a proper API with real-time data. But it requires authentication — you need an OpenRouter account and API key. Fine if you're already routing through OpenRouter. A barrier if you just want pricing data for your own cost calculator.

Most efforts here have been side projects that go stale when the maintainer gets busy. In January alone, 111 out of 302 tracked models on one site had price changes. Keeping up is a full-time job.

The pattern is clear: everyone builds their own thing, it's accurate for a few weeks, then it drifts. There's no canonical source. No API you can just call. No dataset comprehensive enough to handle the weird edge cases.

We Built It. Now It's Yours.

We built a database to solve this internally. It powers cost attribution for 200+ enterprises running 400B+ tokens through Portkey every day.

That's roughly $250,000 in LLM spend tracked every day.

At that scale, a 1% discrepancy isn't a rounding error. It's a disaster.
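To put the 1% figure in dollars, a back-of-the-envelope check using the headline $93M annual figure and the roughly $250,000 daily figure. The helper name is illustrative, and "mis-attributed" here simply means spend multiplied by a uniform error rate.

```python
ANNUAL_SPEND_USD = 93_000_000  # tracked last year (headline figure)
DAILY_SPEND_USD = 250_000      # tracked per day (rounded figure)


def misattributed(spend_usd: float, error_rate: float) -> float:
    """Dollars off when every cost is wrong by a uniform error_rate."""
    return spend_usd * error_rate


print(f"{ANNUAL_SPEND_USD / 365:,.0f} USD/day implied by the annual figure")
# 254,795 USD/day implied by the annual figure
print(f"{misattributed(DAILY_SPEND_USD, 0.01):,.0f} USD/day at 1% error")
# 2,500 USD/day at 1% error
print(f"{misattributed(ANNUAL_SPEND_USD, 0.01):,.0f} USD/year at 1% error")
# 930,000 USD/year at 1% error
```

The two headline numbers agree: $93M spread over 365 days is about $255K per day, and a 1% error on that is close to a million dollars a year.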

Today, we're open-sourcing all of it.

Browse: portkey.ai/models

A searchable interface for 2,000+ models across 40+ providers. Filter by capability, modality, or provider. We track multiple billing dimensions — input tokens, output tokens, cached reads, thinking tokens, audio, images, web search, the works. One place. Always current.

See what's actually running: portkey.ai/rankings

Real usage data from production traffic across Portkey users. Which models are consuming the most tokens. Which are serving the most requests. Provider market share — not from surveys, from actual API calls. Updated daily.

Query it:

Free API. No auth required. One curl per model, or one per provider:

```shell
curl https://api.portkey.ai/model-configs/pricing/openai/gpt-5

curl https://configs.portkey.ai/pricing/openai.json
```
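The same two endpoints can be called from code without an API key. A minimal sketch using only the Python standard library; the helper names are hypothetical, the URL patterns are inferred from the two curl examples (provider and model slotted into the path), and we assume both endpoints return JSON without relying on any particular schema.

```python
import json
import urllib.request

# URL patterns inferred from the curl examples above.
BASE_MODEL_URL = "https://api.portkey.ai/model-configs/pricing"
BASE_PROVIDER_URL = "https://configs.portkey.ai/pricing"


def model_pricing_url(provider: str, model: str) -> str:
    """Per-model endpoint, e.g. .../pricing/openai/gpt-5."""
    return f"{BASE_MODEL_URL}/{provider}/{model}"


def provider_pricing_url(provider: str) -> str:
    """Per-provider file, e.g. .../pricing/openai.json."""
    return f"{BASE_PROVIDER_URL}/{provider}.json"


def fetch_json(url: str, timeout: float = 10.0):
    """GET a URL and parse the body as JSON (no auth header is sent)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


# Example (makes a network call):
#   data = fetch_json(model_pricing_url("openai", "gpt-5"))
#   print(json.dumps(data, indent=2))
```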

Contribute: github.com/portkey-ai/models

Raw JSON. MIT licensed. Community maintained.

Every model is verified and cross-referenced against provider documentation. This is the same data our enterprise customers bet their cost attribution on.

Later this week, we're open-sourcing the pricing agent that keeps this fresh automatically.

Trust, but verify. The data is live. The API is free. The repo is open.