What are AI guardrails?

Learn how to implement AI guardrails to protect your enterprise systems. Explore key safety measures, real-world applications, and practical steps for responsible AI deployment.

AI systems have become powerful tools in our tech stack, but their outputs and behaviors can be unpredictable. Teams are finding that without proper boundaries, AI can generate harmful content, rack up unnecessary costs, or make decisions that don't match business requirements.

AI guardrails address these challenges by creating clear boundaries for how AI systems operate. Whether you're scaling up your AI deployment or just starting to think about AI safety, understanding these core concepts will help you build more reliable systems.

What are AI guardrails?

Building AI systems requires more than just good models - it requires robust safety measures. AI guardrails are the technical and operational controls that keep your AI systems in check throughout their lifecycle. From data processing to decision-making to final outputs, these measures work together to maintain system reliability and safety.

Key characteristics of AI Guardrails

Proactive and Reactive Controls: The best defense is a mix of prevention and quick response. Proactive measures stop problems before they start - like input validation and resource limits. Reactive controls kick in when something slips through, helping your team catch and fix issues fast.

Dynamic Adaptation: AI systems evolve quickly, and your guardrails must keep pace. This means building flexible monitoring systems that can adapt to new use cases, model behaviors, and regulatory requirements without major rewrites.

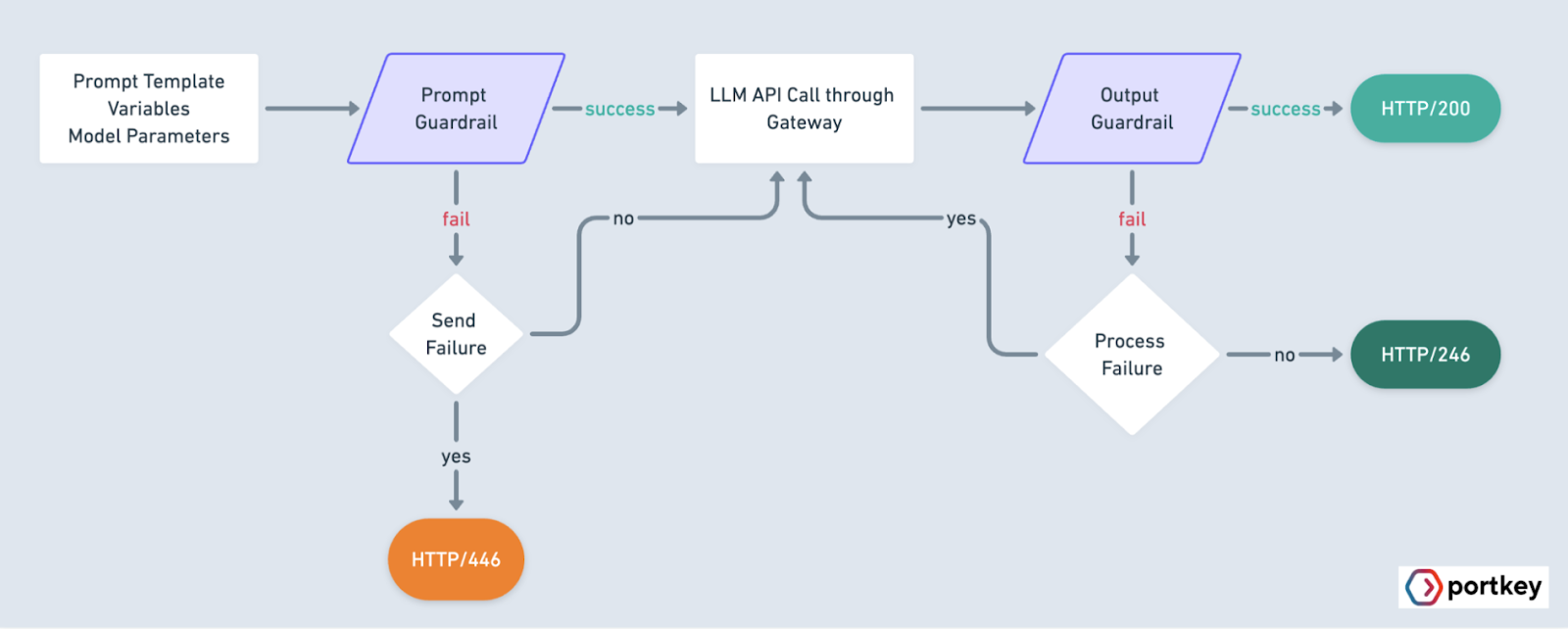

Multi-Layer Protection: Effective guardrails work at every stage of your AI pipeline. Input controls validate and sanitize data before it hits your models. Runtime monitoring watches for unexpected behaviors or resource spikes. Output checks screen results before they reach users.
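To make the layering concrete, here is a minimal sketch in Python. It assumes a model is just a function that takes a prompt and returns text. Every name in it (`run_with_guardrails`, `max_length`, `forbid_terms`, `echo_model`) is hypothetical and made up for illustration, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# A check takes text and returns an error message, or None if the text passes.
Check = Callable[[str], Optional[str]]


@dataclass
class GuardrailResult:
    allowed: bool
    stage: str   # "input", "runtime", "output", or "ok"
    detail: str  # reason for blocking, or the model's response when allowed


def run_with_guardrails(
    prompt: str,
    model: Callable[[str], str],
    input_checks: List[Check],
    output_checks: List[Check],
) -> GuardrailResult:
    # Layer 1: validate the input before it reaches the model.
    for check in input_checks:
        error = check(prompt)
        if error:
            return GuardrailResult(False, "input", error)

    # Layer 2: contain failures from the model call itself.
    try:
        response = model(prompt)
    except Exception as exc:
        return GuardrailResult(False, "runtime", f"model call failed: {exc}")

    # Layer 3: screen the output before it reaches the user.
    for check in output_checks:
        error = check(response)
        if error:
            return GuardrailResult(False, "output", error)

    return GuardrailResult(True, "ok", response)


def max_length(limit: int) -> Check:
    """Hypothetical input check: reject text longer than `limit` characters."""
    def check(text: str) -> Optional[str]:
        return f"text exceeds {limit} characters" if len(text) > limit else None
    return check


def forbid_terms(terms: List[str]) -> Check:
    """Hypothetical output check: reject text containing any listed term."""
    def check(text: str) -> Optional[str]:
        lowered = text.lower()
        for term in terms:
            if term.lower() in lowered:
                return f"blocked term: {term}"
        return None
    return check


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call, used only for this example."""
    return f"You said: {prompt}"
```

Calling `run_with_guardrails("hello", echo_model, [max_length(20)], [forbid_terms(["password"])])` returns an allowed result, while an over-long prompt is stopped at the input stage and never reaches the model. A real deployment would likely add timeouts and resource limits to the runtime layer.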

The pillars of AI Guardrails

Your first line of defense starts with what goes into your AI system. Input guardrails make sure incoming data won't throw your model off track or cause problems down the line. Teams typically use input validation to catch obvious issues, block malicious, biased, or irrelevant data, filter content based on context, and apply domain-specific rules.

What comes out of your AI is just as important as what goes in. Output guardrails clean up AI responses to make sure they comply with ethical and business standards before they reach users. This means checking if responses make sense, scanning for harmful content, and collecting user feedback to spot problems.

Running AI at scale means keeping an eye on the nuts and bolts. Operational guardrails track how your system performs, stop it from using too many resources, and keep it in line with security rules - minimizing risks and costs. A common example is tracking token usage in large language models - nobody wants a surprise bill because their AI got chatty.
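As a rough illustration of the token-tracking idea, the sketch below keeps a running count against a fixed limit and refuses requests that would go over. The `TokenBudget` class and `estimate_tokens` helper are hypothetical, and the four-characters-per-token estimate is only a crude assumption; real tokenizers give different counts.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push usage past the configured limit."""


class TokenBudget:
    """Tracks token usage against a fixed limit (hypothetical helper)."""

    def __init__(self, limit: int) -> None:
        if limit <= 0:
            raise ValueError("limit must be positive")
        self.limit = limit
        self.used = 0

    def remaining(self) -> int:
        return self.limit - self.used

    def can_spend(self, tokens: int) -> bool:
        return 0 <= tokens <= self.remaining()

    def record(self, tokens: int) -> None:
        # Refuse the request instead of silently going over budget.
        if tokens < 0:
            raise ValueError("tokens must be non-negative")
        if not self.can_spend(tokens):
            raise BudgetExceeded(
                f"requested {tokens} tokens, only {self.remaining()} remaining"
            )
        self.used += tokens


def estimate_tokens(text: str) -> int:
    # Crude assumption: about 4 characters per token. Use the provider's
    # reported usage or a real tokenizer for accurate numbers.
    return max(1, len(text) // 4)
```

In practice you would probably keep one budget per user, team, or API key and reset it on a schedule, but the core check stays the same: compare before you spend.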

Why AI Guardrails are essential for enterprises

For businesses deploying AI systems, guardrails aren't just nice to have - they're fundamental to responsible AI development. Let's look at why these safeguards matter across different aspects of enterprise operations.

Organizations can't afford the fallout from AI systems gone wrong. Strong GenAI guardrails mitigate risk by catching potential problems early and preventing AI from generating harmful or biased content that could damage your brand. When your AI system interacts with customers or processes sensitive data, these protections help spot and stop problematic outputs before they reach users. This proactive approach saves teams from scrambling to fix issues after they've already affected users or business operations.

The AI regulatory landscape keeps shifting, with new rules emerging across different regions and industries. Your AI systems need to stay compliant with everything from GDPR's data protection requirements to HIPAA's healthcare privacy rules. GenAI Guardrails help track and enforce these requirements automatically. They make sure your AI handles data appropriately and maintains proper documentation of its decision-making processes. This systematic approach helps teams adapt quickly when new regulations come into play.

Beyond prevention and compliance, smart guardrails improve how your AI systems run. By monitoring resource usage and setting appropriate limits, they keep costs under control and prevent system overload. Teams can spot inefficiencies early and optimize their AI workflows. These controls also stop bad actors from abusing your systems, protecting both your resources and your results.

Trust becomes the foundation of successful AI deployment. When users interact with AI systems, they need to know they can count on the results. Guardrails help deliver consistent, reliable experiences that build confidence in your AI solutions. By maintaining high standards for AI outputs and catching potential issues early, you show users and stakeholders that your AI systems are trustworthy partners, not black boxes. This trust becomes especially important as AI takes on more critical roles in business operations.

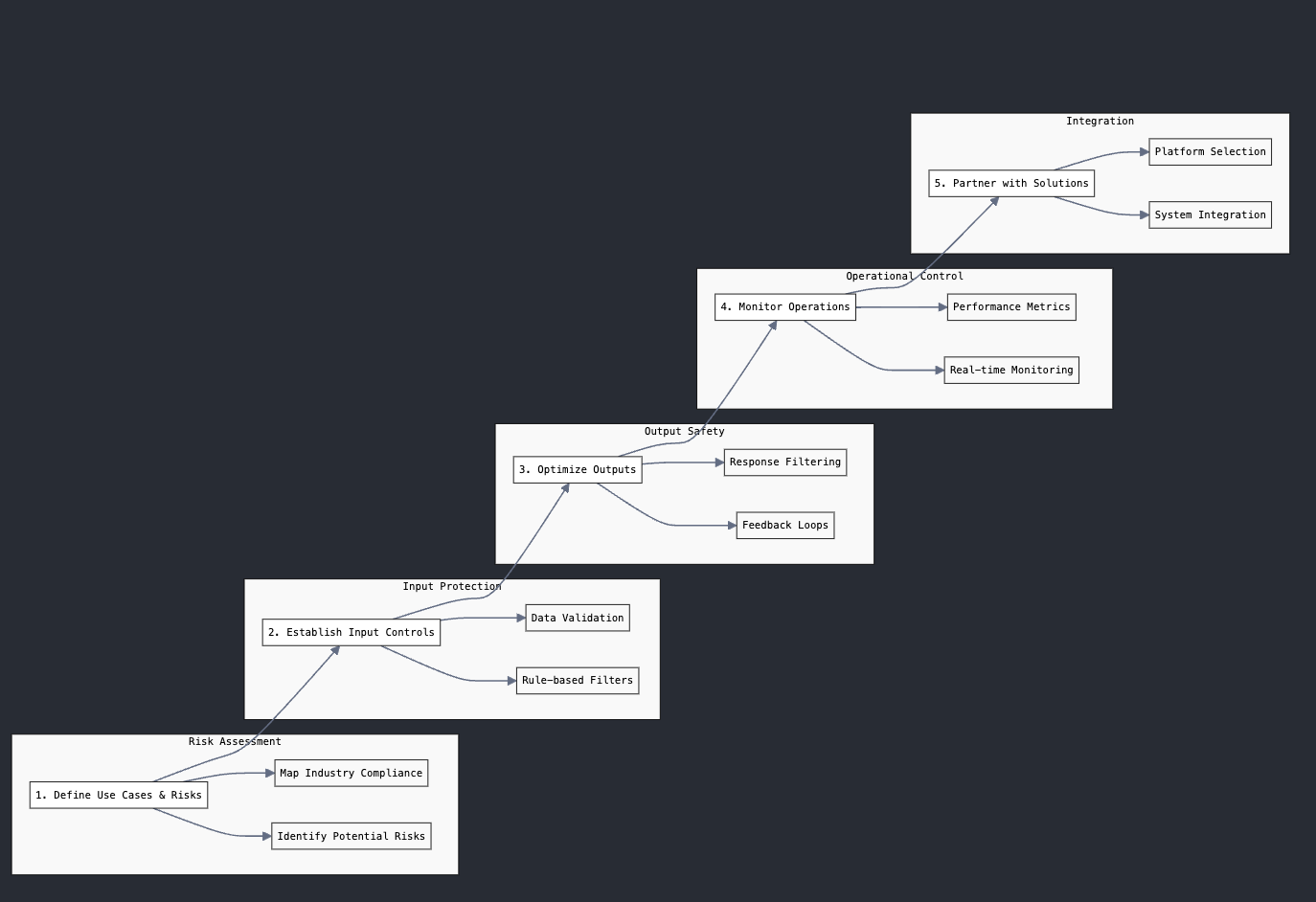

Steps to implement AI Guardrails at scale

Setting up AI guardrails starts with understanding your specific use cases and risks. Teams need to map out exactly how their AI systems will be used and what could go wrong. This means looking at your use cases from every angle - where could bias creep in? What sensitive data might your system handle? What industry rules do you need to follow? Getting clear on these questions shapes your entire guardrail strategy.

Once you know what you're protecting against, it's time to build robust input controls. This goes beyond basic data validation. You'll need filters that understand your business context, rules that match your industry's standards, and checks that catch subtle problems in incoming data. These controls act as your first line of defense against misuse or problematic inputs.

Output quality needs constant attention. Start by putting filters in place that catch obvious issues in AI responses. Then set up ways to test different approaches and collect feedback about what works. Real users often find edge cases your team didn't think of, so make it easy to learn from their experiences and improve your systems.

Running AI at scale means keeping a close watch on how everything performs. Set up monitoring that shows you what's happening in real-time - from response times to resource usage to error rates. Good monitoring helps you spot problems fast and fix them before they affect users. Look for patterns in your logs and metrics that point to areas needing improvement.
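One simple way to picture this kind of monitoring is a rolling window over recent requests. The sketch below is a hypothetical `RollingMonitor` that tracks latency and error rate and reports when either crosses a threshold. The default thresholds are placeholder assumptions, not recommendations.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class RequestRecord:
    latency_ms: float
    ok: bool


class RollingMonitor:
    """Keeps the most recent requests and flags threshold breaches."""

    def __init__(
        self,
        window: int = 100,
        max_error_rate: float = 0.05,
        max_avg_latency_ms: float = 2000.0,
    ) -> None:
        # deque(maxlen=...) drops the oldest record once the window is full.
        self.records: Deque[RequestRecord] = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.max_avg_latency_ms = max_avg_latency_ms

    def record(self, latency_ms: float, ok: bool) -> None:
        self.records.append(RequestRecord(latency_ms, ok))

    def error_rate(self) -> float:
        if not self.records:
            return 0.0
        failures = sum(1 for r in self.records if not r.ok)
        return failures / len(self.records)

    def avg_latency_ms(self) -> float:
        if not self.records:
            return 0.0
        return sum(r.latency_ms for r in self.records) / len(self.records)

    def alerts(self) -> List[str]:
        # Return one human-readable message per breached threshold.
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append(f"error rate {self.error_rate():.0%} above limit")
        if self.avg_latency_ms() > self.max_avg_latency_ms:
            found.append(f"average latency {self.avg_latency_ms():.0f} ms above limit")
        return found
```

A production setup would usually send these numbers to a metrics system rather than hold them in memory, but the idea carries over: define thresholds, measure continuously, and alert on breaches.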

The right tools make a big difference in successfully scaling GenAI guardrails. Rather than building everything from scratch, consider working with platforms designed for AI safety. Tools like Portkey can handle many common guardrails needs out of the box, letting your team focus on challenges specific to your business.

Real-World Applications of AI Guardrails

In healthcare, AI guardrails play a vital role in diagnostic systems. Medical teams need AI that can help spot patterns in patient data while staying within strict safety bounds. These GenAI guardrails check incoming patient data for quality and completeness and then validate outputs against established medical guidelines. For example, when an AI suggests a diagnosis, guardrails make sure it's based on solid evidence and flag cases needing human review. This keeps AI as a helpful tool in the diagnostic process while maintaining patient safety.

Financial services firms face unique challenges when deploying AI for investment advice. These systems need to understand complex market data while following strict regulatory rules. Guardrails in this space watch for recommendations that might break financial regulations or push clients toward unsuitable investments.

Retail AI systems, particularly shopping assistants, need guardrails to keep recommendations fair and helpful. These systems process vast amounts of customer data to make suggestions, but they need to avoid biases that might unfairly promote certain products or discriminate against user groups. GenAI Guardrails here check for diversity in recommendations, monitor for manipulative tactics, and make sure that suggestions match each customer's actual needs and preferences rather than just pushing high-margin items.

Challenges in setting up AI Guardrails

Setting up AI guardrails means balancing innovation and safety. Too many restrictions can stifle innovation, while too few can lead to problems. Finding this sweet spot means understanding both your AI's capabilities and your business needs, then crafting rules that protect without restricting useful functionality.

AI threats don't stand still, and neither can your defenses. New ways to misuse AI systems pop up regularly, from clever prompt injection attacks to subtle ways of extracting sensitive data. Your guardrail systems need built-in flexibility to adapt and handle emerging threats. This means regular updates to safety rules, monitoring for new types of attacks, and quick responses when you spot problems.

Enterprise scale brings its own set of challenges. When you're running multiple AI models across different teams and regions, keeping everything in check gets complicated fast. Each use case might need its own set of rules, each region its own compliance checks, and each team its own monitoring setup. Managing all these moving parts while maintaining consistent safety standards takes careful planning and the right tools.

Teams need systems that can grow with their AI deployment while keeping everything secure and compliant.

How Portkey strengthens AI Guardrails

Portkey takes on AI safety challenges with a practical set of tools built for real-world use.

Text safety starts with pattern matching. Using regex checks, teams can spot and handle specific text patterns in both incoming requests and AI outputs. This helps catch everything from sensitive data patterns to potentially harmful content before it causes problems.
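To show what a regex check can look like in general, here is a small sketch using Python's standard `re` module. It does not show Portkey's own API. The pattern names and the patterns themselves are simplified assumptions for illustration and will miss many real-world formats.

```python
import re
from typing import Dict, List

# Simplified, illustrative patterns. Real deployments need broader coverage.
PATTERNS: Dict[str, re.Pattern] = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_matches(text: str) -> List[str]:
    """Return the names of all patterns that appear in the text."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(text)]


def redact(text: str) -> str:
    """Replace every match with a labeled placeholder."""
    for name, pattern in PATTERNS.items():
        text = pattern.sub(f"[{name.upper()} REDACTED]", text)
    return text
```

The same two functions can run on incoming requests and on model outputs. Whether you block, redact, or just log a match is a policy decision that depends on your use case.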

For teams working with structured data, JSON schema validation ensures AI responses stay in the right format. This keeps your data clean and your integrations running smoothly. When you need to watch for code in content - whether it's SQL queries, Python scripts, or TypeScript - Portkey's code detection tools help maintain boundaries around what kinds of content your AI can work with.
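The structured-output idea can be sketched with the standard library alone. The example below is a simplified stand-in for full JSON Schema validation: it only checks that the response parses and that a few required fields have the expected types. The field names (`answer`, `confidence`, `sources`) are hypothetical, and this is not Portkey's implementation.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical expected shape: field name -> accepted Python types.
# Note: bool is a subclass of int in Python, so True would pass as a number.
EXPECTED_FIELDS: Dict[str, tuple] = {
    "answer": (str,),
    "confidence": (int, float),
    "sources": (list,),
}


def validate_response(raw: str) -> Tuple[bool, List[str]]:
    """Return (is_valid, errors) for a raw model response string."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, [f"invalid JSON: {exc.msg}"]

    if not isinstance(data, dict):
        return False, ["top-level value must be an object"]

    errors = []
    for field, accepted in EXPECTED_FIELDS.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], accepted):
            errors.append(f"wrong type for field: {field}")
    return (not errors), errors
```

When validation fails, a common pattern is to retry the request or fall back to a safe default rather than pass malformed data downstream. For anything beyond flat structures, a dedicated JSON Schema validator is the more robust choice.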

Sometimes off-the-shelf tools aren't enough. That's why Portkey works with your existing custom guardrails. Your team's specialized safety checks can plug right into the system, giving you the best of both worlds - proven tools plus your own custom safeguards.

The platform doesn't stop at basic checks. With over 20 deterministic guardrails and smart LLM-based protections, it watches for subtle issues like nonsense outputs or attempts to manipulate your AI system. These tools work together to help teams deploy AI responsibly, keeping risks in check without slowing down development. See how we work with Enterprise AI teams.

Building a safer AI future with guardrails

AI is becoming central to how businesses work, but its power needs smart controls. When teams put good guardrails in place, they not only protect against risks - they build AI systems their users can really count on. These safeguards help catch problems early, keep systems running smoothly, and adapt to new challenges as they come up.

Getting started with AI safety doesn't have to be overwhelming. Tools like Portkey help teams put solid guardrails in place without rebuilding everything from scratch. Whether you're just starting with AI or looking to make your existing systems more robust, the right guardrails can help you move forward with confidence.

Ready to build safer AI systems? Let's talk