What are MCP connectors?

MCP connectors simplify how LLMs and agents access tools, but tool-specific implementations create fragmentation. See how an AI gateway unifies production-ready MCP connectors.

As LLMs evolve into agents, tools have become essential, powering everything from search and RAG to code execution and database queries. But wiring tools into agents or model runtimes is still a fragmented, manual process.

That’s the problem MCP (Model Context Protocol) solves: a standard way to expose and consume tools across models, agents, and environments. It defines how tools are described, how they are called, and how their results are returned, making them portable and interoperable.

MCP connectors take this further by simplifying how tools get connected. Instead of building custom logic for every runtime, connectors act as a bridge between your LLM or agent and one or more MCP servers, making tool calls seamless and consistent.

What MCP connectors solve

MCP gives you a standard for exposing tools, but you still need a way to connect those tools to your agents or models in production. That’s where things get tricky.

To use an MCP tool, you’d need to:

– Fetch the tool spec manually

– Handle auth and permissions per request

– Format inputs and parse outputs correctly

– Retry, log, and manage errors yourself
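For a sense of what that involves, here is a minimal Python sketch of the first few steps done by hand. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol's discovery and invocation methods. The server URL, the token, and the `search_docs` tool are hypothetical, and a real session would also begin with an `initialize` handshake and need an actual HTTP client; both are omitted so the sketch runs offline.

```python
import itertools
import json
from typing import Dict, Optional

SERVER_URL = "https://tools.example.com/mcp"  # hypothetical endpoint
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP uses for every message."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def auth_headers(token: str) -> Dict[str, str]:
    """Step 2: auth is the caller's job, attached to every HTTP request."""
    return {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
        # The Streamable HTTP transport expects clients to accept both formats.
        "Accept": "application/json, text/event-stream",
    }


def parse_tool_result(response: dict) -> str:
    """Steps 3-4: unwrap a tools/call response into text, raising on errors."""
    if "error" in response:
        # Protocol-level failure, e.g. unknown tool or invalid params.
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "\n".join(
        block["text"] for block in result.get("content", []) if block.get("type") == "text"
    )
    if result.get("isError"):
        # Tool-level failure: the call went through, but the tool reported an error.
        raise RuntimeError(f"tool error: {text}")
    return text


# Step 1: discover what the server offers.
list_tools = jsonrpc_request("tools/list")

# Step 3: format the input the way the tool's input schema expects.
call_tool = jsonrpc_request(
    "tools/call", {"name": "search_docs", "arguments": {"query": "rate limits"}}
)

# A hard-coded response shaped like an MCP tools/call result (no network involved).
sample = {
    "jsonrpc": "2.0",
    "id": call_tool["id"],
    "result": {"content": [{"type": "text", "text": "Limit: 60 requests/min"}], "isError": False},
}

print("POST", SERVER_URL, json.dumps(call_tool))
print(parse_tool_result(sample))
```

Every one of these pieces (request IDs, headers, argument shapes, error unwrapping) is something your own code owns when there is no connector.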

This gets even more complex when you’re working with multiple models, agents, or tool servers. Every tool call becomes a small orchestration problem, and the effort multiplies fast.

MCP connectors solve this. They handle the glue work of connecting your runtime to any MCP-compliant tool server, standardizing how tools are discovered, invoked, and governed. So instead of re-implementing the protocol yourself, you get a clean interface that just works.
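A connector wraps those steps behind a single call. The class below is a hypothetical sketch, not any particular product's implementation: it takes an injected transport function (faked here so the example runs offline), caches tool discovery, attaches auth once, and retries transient failures before returning plain text. `MCPConnector`, `TransientError`, and the `get_weather` tool are illustrative names.

```python
import itertools
import logging
from typing import Callable, Dict, List, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("mcp-connector")

# A transport sends one JSON-RPC message with headers and returns the decoded response.
Transport = Callable[[dict, Dict[str, str]], dict]


class TransientError(Exception):
    """Raised by a transport for failures worth retrying (timeouts, 5xx)."""


class MCPConnector:
    """Hypothetical connector: hides discovery, auth, formatting, and retries."""

    def __init__(self, transport: Transport, token: str, max_retries: int = 2):
        self._send = transport
        self._headers = {"Authorization": f"Bearer {token}"}  # set once, reused
        self._max_retries = max_retries
        self._ids = itertools.count(1)
        self._tools: Optional[Dict[str, dict]] = None

    def _rpc(self, method: str, params: Optional[dict] = None) -> dict:
        message = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            message["params"] = params
        for attempt in range(self._max_retries + 1):
            try:
                response = self._send(message, self._headers)
                break
            except TransientError as exc:
                log.warning("%s failed (attempt %d): %s", method, attempt + 1, exc)
                if attempt == self._max_retries:
                    raise
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]

    def tools(self) -> List[str]:
        # Discover once, then serve later lookups from the cache.
        if self._tools is None:
            result = self._rpc("tools/list")
            self._tools = {tool["name"]: tool for tool in result["tools"]}
        return sorted(self._tools)

    def call(self, name: str, **arguments) -> str:
        if name not in self.tools():
            raise KeyError(f"unknown tool: {name}")
        result = self._rpc("tools/call", {"name": name, "arguments": arguments})
        return "".join(c.get("text", "") for c in result["content"] if c["type"] == "text")


def fake_server(flaky_calls: int = 1) -> Transport:
    """In-memory stand-in for a remote MCP server that fails the first N requests."""
    state = {"failures_left": flaky_calls}

    def send(message: dict, headers: Dict[str, str]) -> dict:
        assert headers["Authorization"].startswith("Bearer ")
        if state["failures_left"] > 0:
            state["failures_left"] -= 1
            raise TransientError("simulated timeout")
        if message["method"] == "tools/list":
            tools = [{"name": "get_weather", "inputSchema": {"type": "object"}}]
            return {"jsonrpc": "2.0", "id": message["id"], "result": {"tools": tools}}
        if message["method"] == "tools/call":
            city = message["params"]["arguments"]["city"]
            content = [{"type": "text", "text": f"Sunny in {city}"}]
            return {"jsonrpc": "2.0", "id": message["id"], "result": {"content": content}}
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": message["id"], "error": error}

    return send


connector = MCPConnector(fake_server(), token="demo-token")
print(connector.call("get_weather", city="Paris"))  # first attempt fails, retry succeeds
```

Injecting the transport keeps the connector independent of how bytes reach the server, which is part of why one interface can sit in front of many servers.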

What are MCP connectors

MCP connectors let you connect directly to remote MCP servers without writing your own client logic or running a separate MCP client. Instead of handling the protocol implementation yourself, the connector takes care of it, directly within the model runtime or system that’s making the request.

In practice, this means your LLM or agent can call tools hosted on MCP servers without additional infrastructure. No extra middleware, no separate orchestration layers, just a direct path from model to tool, handled by the connector.

MCP connectors abstract away the protocol details while preserving everything MCP offers: standardized tool definitions, a consistent request and response format, and model-friendly interfaces. They make it dramatically easier to integrate and scale tools across different systems, without coupling them to specific runtimes. Here’s how they help:

- Zero orchestration overhead: No need to run or manage a separate MCP client. Connect to remote tools directly from your model or agent runtime.

- Tool reuse across models and agents: Expose a tool once on an MCP server and use it everywhere, across OpenAI, Claude, open-source agents, or custom runtimes.

- Multi-server flexibility: Call tools across multiple MCP servers in the same request, with connectors handling routing and resolution automatically.

- Unified governance: Apply auth, usage controls, logging, and monitoring at the connector level, not per runtime or per tool.

- Faster experimentation and deployment: Spin up new tools or swap models without rewriting plumbing or re-implementing the protocol.
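Multi-server routing mostly comes down to keeping an index of which server owns which tool. In this hypothetical sketch, in-memory `FakeServer` objects stand in for real MCP servers, and tools are namespaced as `server.tool` so that two servers can both expose a `search` tool without colliding. The naming scheme and class names are assumptions for illustration, not part of the MCP spec.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

ToolFn = Callable[..., str]


@dataclass
class FakeServer:
    """Hypothetical stand-in for one MCP server: a name plus its tools."""
    name: str
    tools: Dict[str, ToolFn] = field(default_factory=dict)


class MultiServerRouter:
    """Aggregates tools from several servers and routes each call to its owner."""

    def __init__(self, servers: List[FakeServer]):
        self._index: Dict[str, Tuple[FakeServer, str]] = {}
        for server in servers:
            for tool_name in server.tools:
                # Namespace as "server.tool" so identical tool names can't collide.
                self._index[f"{server.name}.{tool_name}"] = (server, tool_name)

    def list_tools(self) -> List[str]:
        return sorted(self._index)

    def call(self, qualified_name: str, **arguments) -> str:
        try:
            server, tool_name = self._index[qualified_name]
        except KeyError:
            raise KeyError(f"no server exposes {qualified_name!r}") from None
        return server.tools[tool_name](**arguments)

    def call_many(self, calls: List[Tuple[str, dict]]) -> List[str]:
        # One request fanning out to tools that live on different servers.
        return [self.call(name, **args) for name, args in calls]


github = FakeServer("github", {"search": lambda query: f"3 issues match {query!r}"})
docs = FakeServer("docs", {"search": lambda query: f"2 pages match {query!r}"})
router = MultiServerRouter([github, docs])

print(router.list_tools())
print(router.call_many([("github.search", {"query": "oauth"}), ("docs.search", {"query": "oauth"})]))
```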

The challenges of working with tool-specific MCP connectors

While MCP connectors simplify integration, they often come bundled with specific tools or runtimes, and that introduces its own set of challenges:

- Inconsistent formats and behaviors

Different tool providers may implement connectors slightly differently, leading to edge-case handling and compatibility issues. - Fragmented auth and configuration

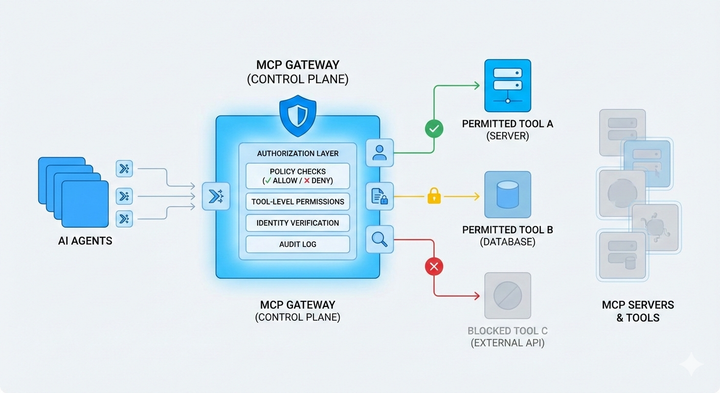

Each connector might require its own authentication method, environment variables, or client setup, increasing overhead. - Lack of central governance

When connectors are distributed across tools, there’s no unified way to enforce policies, log activity, or manage access across your stack. - Difficult to scale across environments

Connecting multiple MCP servers or sharing tools across model runtimes becomes harder without a central coordination layer.
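To make the fragmentation concrete, here is the same remote MCP server declared for two providers' built-in connectors. The payloads are plain dictionaries, not SDK calls. Their field names follow OpenAI's Responses API remote MCP tool and Anthropic's MCP connector beta as we understand them at the time of writing; both may have changed since, so treat them as illustrative and check the current provider docs. The URL, token, server label, and `<model-id>` values are placeholders.

```python
import json

# Placeholders for one hypothetical remote MCP server.
SERVER_URL = "https://tools.example.com/mcp"
TOKEN = "replace-me"


def openai_responses_payload(prompt: str) -> dict:
    # Remote MCP server declared as an entry in the Responses API "tools" list.
    return {
        "model": "<model-id>",
        "input": prompt,
        "tools": [{
            "type": "mcp",
            "server_label": "internal_tools",
            "server_url": SERVER_URL,
            "headers": {"Authorization": f"Bearer {TOKEN}"},  # auth as raw headers
            "require_approval": "never",
        }],
    }


def anthropic_messages_payload(prompt: str) -> dict:
    # Remote MCP server declared in a separate "mcp_servers" list (beta feature;
    # the request must also send the "anthropic-beta: mcp-client-2025-04-04" header).
    return {
        "model": "<model-id>",
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": prompt}],
        "mcp_servers": [{
            "type": "url",
            "url": SERVER_URL,
            "name": "internal_tools",
            "authorization_token": TOKEN,  # auth as a dedicated field
        }],
    }


openai_body = openai_responses_payload("Summarize open incidents")
anthropic_body = anthropic_messages_payload("Summarize open incidents")
print(json.dumps(openai_body["tools"][0], indent=2))
print(json.dumps(anthropic_body["mcp_servers"][0], indent=2))
```

Same server, same token, yet different field names, different places in the request, and different auth conventions: multiply that by every runtime and tool in your stack and the configuration drift described above follows.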

In other words, connectors solve the right problem, but scattered, tool-specific implementations limit their impact at scale.

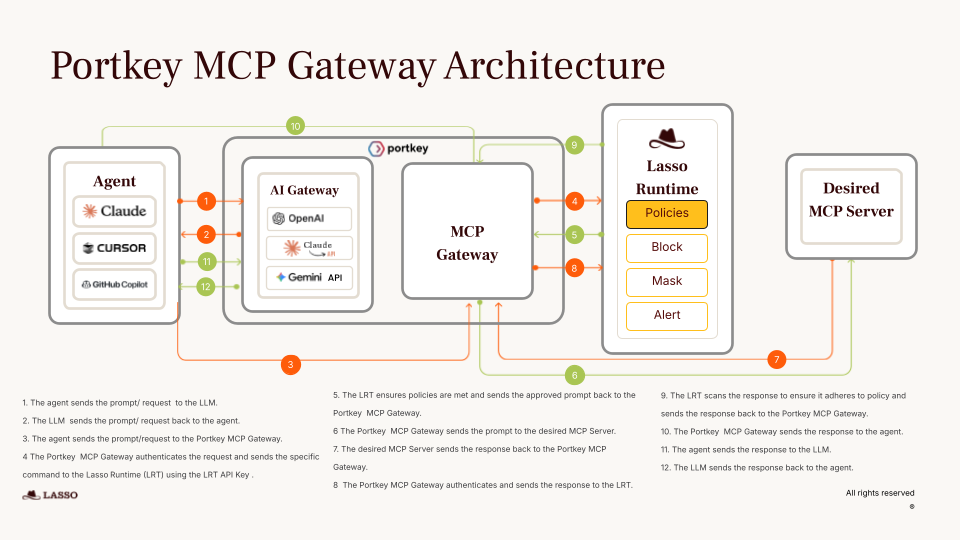

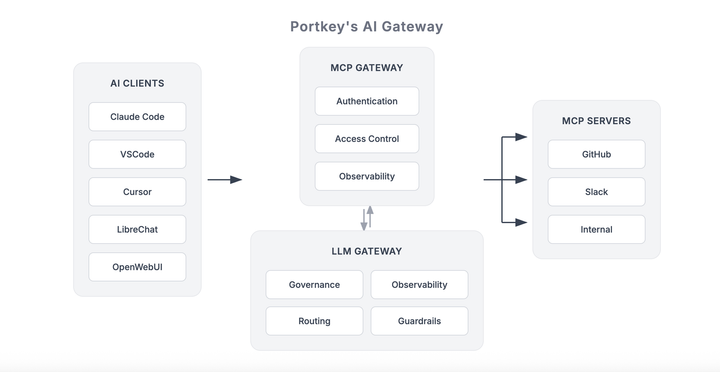

How Portkey brings MCP connectors to the AI Gateway

Instead of wiring in separate clients or managing tool-specific connectors, you can now connect to any MCP server through Portkey. The gateway handles the connection layer for you:

- Route requests across multiple MCP servers in a single call

- Apply unified authentication and OAuth flows

- Enforce governance across every tool call: logging, usage control, and access policies

- Work out of the box with Claude, OpenAI, and open-source runtimes like LibreChat and OpenWebUI

Whether you're using agents or direct model calls, you get a single, consistent interface for tool access with zero orchestration or protocol handling on your side.
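Centralizing governance means every tool call passes through one choke point that checks policy, counts usage, and writes an audit record before routing. The sketch below illustrates that idea only. It is not Portkey’s API: `Policy`, `GovernedGateway`, `echo_route`, and the caller and tool names are all hypothetical, and the routing function is a stand-in for whatever actually executes the call.

```python
import time
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Any function that actually executes a tool call, e.g. a multi-server router.
Route = Callable[[str, dict], str]


@dataclass
class Policy:
    allowed_tools: Set[str]
    max_calls: int


@dataclass
class GovernedGateway:
    """Hypothetical gateway layer: one place for access control, quotas, and audit logs."""
    route: Route
    policies: Dict[str, Policy]
    usage: Counter = field(default_factory=Counter)
    audit_log: List[dict] = field(default_factory=list)

    def call(self, caller: str, tool: str, arguments: dict) -> str:
        policy = self.policies.get(caller)
        decision = "allowed"
        if policy is None or tool not in policy.allowed_tools:
            decision = "denied: not permitted"
        elif self.usage[caller] >= policy.max_calls:
            decision = "denied: quota exceeded"
        # Every attempt is logged, whether or not it goes through.
        self.audit_log.append({"ts": time.time(), "caller": caller, "tool": tool, "decision": decision})
        if decision != "allowed":
            raise PermissionError(f"{caller} -> {tool}: {decision}")
        self.usage[caller] += 1
        return self.route(tool, arguments)


def echo_route(tool: str, arguments: dict) -> str:
    # Stand-in for the real routing layer.
    return f"{tool} ran with {arguments}"


gateway = GovernedGateway(
    route=echo_route,
    policies={"support-agent": Policy(allowed_tools={"docs.search"}, max_calls=2)},
)

print(gateway.call("support-agent", "docs.search", {"query": "refunds"}))
for tool in ("github.search", "docs.search", "docs.search"):
    try:
        gateway.call("support-agent", tool, {"query": "refunds"})
    except PermissionError as err:
        print(err)
print([entry["decision"] for entry in gateway.audit_log])
```

Because the checks live in one layer rather than in each connector, tightening a quota or revoking a tool becomes a single policy change instead of a per-runtime rollout.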

Get early access

MCP connectors on Portkey’s AI Gateway are launching soon. Book a demo to get a sneak peek and early access.