Zero-Shot vs. Few-Shot Prompting: Choosing the Right Approach for Your AI Model

Explore the differences between zero-shot and few-shot prompting to optimize your AI model's performance. Learn when to use each technique for efficiency, accuracy, and cost-effectiveness.

The way we interact with AI models significantly influences their performance. Prompt engineering, the practice of crafting the input we provide to these models, is critical in shaping their outputs. Among the various prompting techniques, zero-shot and few-shot prompting stand out as two popular approaches that cater to different needs.

This blog will explore the intricacies of zero-shot and few-shot prompting, comparing their strengths and limitations.

Understanding Zero-Shot Prompting

Zero-shot prompting is a technique in natural language processing where an AI model generates responses to user queries without receiving any examples in the prompt. Instead of being shown specific instances of the task, the model relies on its understanding of language, context, and general knowledge acquired during its training phase.

For example, if a user asks, "What are the benefits of renewable energy?", a zero-shot model can generate a coherent and informative response even if it has never been explicitly trained on that exact question. The model draws on the knowledge it acquired during training to address the query in context, with no sample answers to imitate.
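
As a rough illustration, the zero-shot request described above can be expressed as a chat-style message list that contains only an instruction and the question. This is a minimal sketch: the `role`/`content` layout mirrors a convention used by many chat completion APIs, while the function name and the default system instruction are assumptions made for this example, not part of any specific SDK.

```python
from typing import Dict, List


def build_zero_shot_messages(
    question: str,
    system_instruction: str = "You are a helpful assistant.",  # illustrative default
) -> List[Dict[str, str]]:
    """Return a message list with no worked examples, only the task itself."""
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": question},
    ]


messages = build_zero_shot_messages("What are the benefits of renewable energy?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The resulting list would then be passed to whichever model client you use; nothing in it tells the model what a good answer looks like beyond the instruction itself.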

Advantages of zero-shot prompting

- Efficiency: It eliminates the need to curate and provide numerous examples for every new task.

- Speed: Since the model does not need to process example inputs, zero-shot prompting often results in faster response times. This matters in applications such as chatbots, where quick interaction is crucial.

- Versatility: Whether it’s general knowledge queries, creative writing, summarizing information or even generating images with an AI image generator, zero-shot models can often provide reasonable outputs without needing task-specific training.

Limitations of zero-shot prompting

- Lower Accuracy: While zero-shot models can handle a wide array of queries, they may struggle with highly specific or nuanced tasks. Without contextual examples, the model might misinterpret the intent of the prompt or generate less accurate responses.

- Dependency on Training Data: The effectiveness of zero-shot prompting heavily relies on the quality and diversity of the training data. If the model has not encountered similar concepts during its training, its responses may lack depth or accuracy.

- Contextual Ambiguity: In cases where prompts are vague or ambiguous, zero-shot prompting can lead to unpredictable outcomes. The model may draw from various unrelated knowledge areas, resulting in responses that do not align with user expectations.

Understanding Few-Shot Prompting

Few-shot prompting is an approach that provides an AI model with a handful of example prompts and responses to guide its output. Unlike zero-shot prompting, few-shot prompting includes a series of examples in the prompt that clarify the desired response style, tone, and structure.

For instance, if a user wants the model to respond in a formal tone for customer support emails, they could include a few examples of similar formal responses within the prompt. By seeing these examples, the model gains a better understanding of how it should respond to the specific input, leading to more consistent and accurate outputs.

Few-shot prompting essentially primes the model, creating a pseudo-training session that lets it adapt quickly to new contexts or specialized tasks without requiring extensive re-training.
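
The formal customer support scenario above might be assembled roughly as follows. This is a sketch under stated assumptions: the example emails, the instruction text, and the function name are invented for illustration, and the `role`/`content` message layout is a common chat API convention rather than a requirement of any particular provider.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(
    instruction: str,
    examples: List[Tuple[str, str]],
    new_input: str,
) -> List[Dict[str, str]]:
    """Place worked (input, output) pairs ahead of the real input."""
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        # Each example is shown as a past user turn and the ideal reply to it.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real query goes last, so the model continues the established pattern.
    messages.append({"role": "user", "content": new_input})
    return messages


# Hypothetical examples demonstrating the desired formal tone.
formal_examples = [
    ("my order is late", "Dear Customer, we apologize for the delay and are investigating your order."),
    ("refund pls", "Dear Customer, thank you for contacting us. Your refund request has been received."),
]

few_shot_messages = build_few_shot_messages(
    "Reply to customer emails in a formal, courteous tone.",
    formal_examples,
    "the app keeps crashing",
)
print(len(few_shot_messages))
```

No model weights change here; the examples only occupy space in the prompt, which is why the same base model can be steered toward a different tone by swapping the example list.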

Advantages of few-shot prompting

- Improved Accuracy: Few-shot prompting helps the model generate more accurate responses by establishing a clearer context through examples.

- Adaptability to Specific Tasks: Providing examples allows the model to align more closely with specific requirements, enhancing its performance on domain-specific tasks.

- Reduced Risk of Misinterpretation: By setting clear expectations, few-shot prompting can minimize misunderstandings in complex tasks where a vague prompt might lead the model astray. This approach helps the model understand both the tone and style of the desired output.

Limitations of few-shot prompting

- Increased Computational Load: Since few-shot prompting involves processing example inputs along with the main prompt, it can be more computationally demanding than zero-shot prompting and may lead to increased costs, particularly when running large-scale or high-volume applications.

- Longer Response Times: Providing and processing examples in each prompt can lead to longer response times. This delay might impact user experience in real-time applications where immediate feedback is essential.

- Limited Examples for Complex Tasks: For tasks that are especially intricate, a few examples may not fully capture the depth or nuances required for the best possible output. In these cases, even few-shot prompting might fall short, and a more extensive fine-tuning process could be necessary.
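
To make the computational load point concrete, the sketch below estimates how much extra prompt text a set of examples adds to every request. It uses whitespace-separated word counts as a crude stand-in for tokens, which is an assumption: real tokenizers count differently, so treat the numbers as relative rather than exact. The example reviews and the daily request volume are hypothetical.

```python
from typing import List, Tuple


def rough_token_count(text: str) -> int:
    """Crude proxy for tokens: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def few_shot_overhead(examples: List[Tuple[str, str]], requests_per_day: int) -> Tuple[int, int]:
    """Return (extra units per request, extra units per day) added by the examples."""
    extra_per_request = sum(
        rough_token_count(example_input) + rough_token_count(example_output)
        for example_input, example_output in examples
    )
    return extra_per_request, extra_per_request * requests_per_day


# Hypothetical few-shot examples for a review classifier.
examples = [
    ("The battery died after two days.", "negative"),
    ("Works exactly as described.", "positive"),
]

per_request, per_day = few_shot_overhead(examples, requests_per_day=10_000)
print(per_request, per_day)  # 12 120000
```

Because the examples are resent with every call, the overhead scales linearly with request volume, which is why short, carefully chosen examples tend to matter more in high-volume applications.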

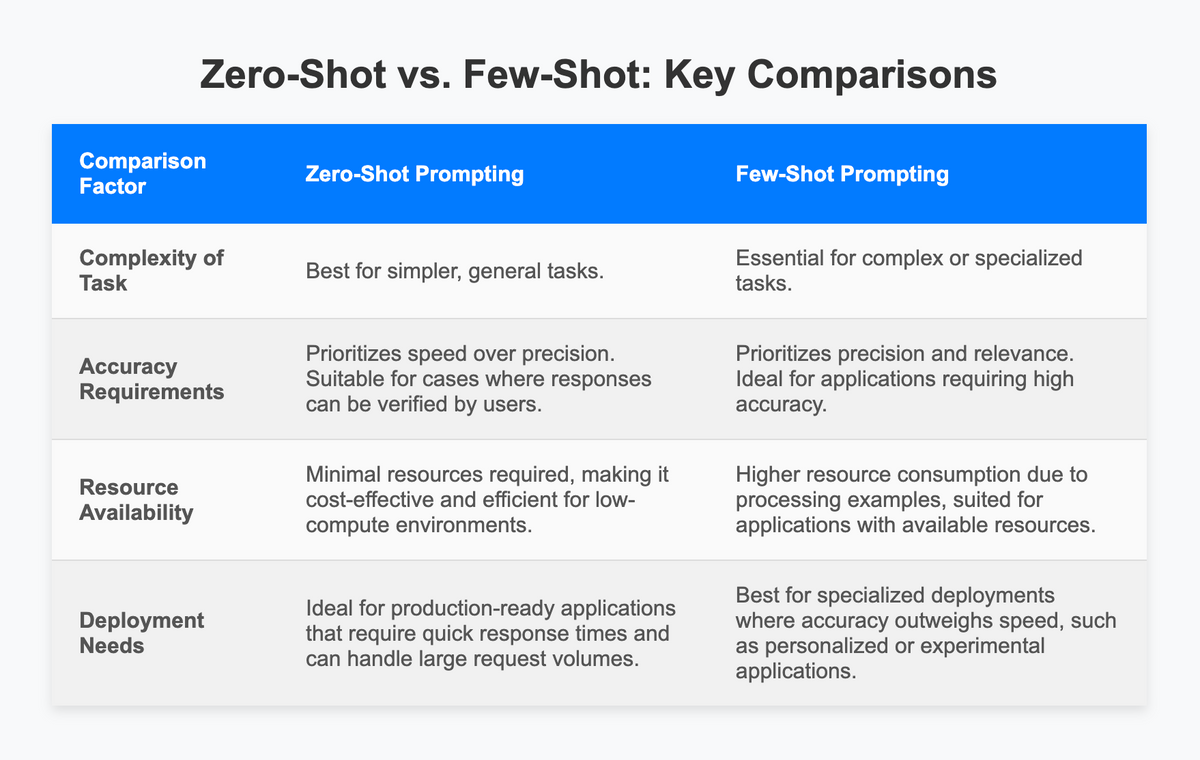

Zero-Shot vs. Few-Shot: Key Comparisons

| Comparison Factor | Zero-Shot Prompting | Few-Shot Prompting |
|---|---|---|
| Complexity of Task | Best for simpler, general tasks. Examples: general knowledge questions, open-ended brainstorming, language translation. | Essential for complex or specialized tasks. Examples: tasks requiring structured responses, specific tone or style, detailed context like technical support. |
| Accuracy Requirements | Prioritizes speed over precision. Suitable for cases where responses can be verified by users. | Prioritizes precision and relevance. Ideal for applications requiring high accuracy, like customer service or specialized content generation. |
| Resource Availability | Minimal resources required, making it cost-effective and efficient for low-compute environments. | Higher resource consumption due to processing examples, suited for applications with available resources. |
| Deployment Needs | Ideal for production-ready applications that require quick response times and can handle large request volumes. | Best for specialized deployments where accuracy outweighs speed, such as personalized or experimental applications. |
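
The comparison above can be read as a simple decision rule. The function below is one possible encoding of it, offered as a starting heuristic rather than a definitive policy; the parameter names and the returned strings are assumptions made for this sketch.

```python
def suggest_prompting_style(
    specialized_task: bool,
    accuracy_critical: bool,
    tight_latency_or_budget: bool,
) -> str:
    """Map the comparison factors onto a suggested prompting style."""
    if specialized_task or accuracy_critical:
        if tight_latency_or_budget:
            # Examples are still worth including, but each one costs time and compute.
            return "few-shot (keep examples few and short)"
        return "few-shot"
    # Simple, general tasks where speed and cost dominate.
    return "zero-shot"


print(suggest_prompting_style(specialized_task=False, accuracy_critical=False, tight_latency_or_budget=True))
print(suggest_prompting_style(specialized_task=True, accuracy_critical=True, tight_latency_or_budget=False))
```

In practice the suggestion is best treated as a first guess to be checked against measured results on your own data.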

Zero-Shot Prompting Use Cases

1. General-Purpose Chatbots

- Zero-shot prompting is well-suited for general-purpose chatbots, where queries are often simple, and responses can be generated without deep customization.

2. Straightforward Q&A

- For factual or general knowledge questions, zero-shot prompting can be effective without needing examples. This is ideal in applications where questions are straightforward and don’t require complex logic.

3. Language Translation

- Basic language translation tasks can often be handled well with zero-shot prompting, as many models have been trained on multilingual data, making them capable of translating without extra guidance. Example: A travel app offering instant translations for common phrases or simple sentences between languages, where nuanced interpretation isn’t essential.

4. Content Summarization

- Zero-shot prompting can handle summarizing content like news articles or blog posts, especially when a simple, concise summary is sufficient without extensive customization.

Few-Shot Prompting Use Cases

1. Sentiment Analysis

- Few-shot prompting helps improve accuracy in sentiment analysis, particularly in cases where language may be ambiguous or require context to interpret correctly. Example: An e-commerce company using sentiment analysis to classify the tone of customer reviews (e.g., positive, neutral, negative).

2. Specific Q&A

- When Q&A tasks require detailed and accurate answers, few-shot prompting provides essential context.

3. Specialized Customer Support Applications

- Few-shot prompting can refine customer support interactions, especially when responding to complex or sensitive issues that require particular phrasing, empathy, or a consistent tone. Example: A healthcare chatbot that guides patients through initial symptom checks with an empathetic, clear tone and avoids providing misleading information.

4. Product Recommendations

- In cases where personalized product recommendations need context, few-shot prompting can help the model understand which aspects of products or user preferences to emphasize. Example: An online retail assistant recommending products based on user preferences and past behavior.

5. Custom Content Generation

- Few-shot prompting works well for generating custom content like marketing copy, personalized messages, or instructional content, where tone, style, or structure is crucial. Example: A content generation tool for social media managers creating promotional posts with the brand’s specific tone.

Portkey’s prompt engineering feature empowers teams to explore both zero-shot and few-shot prompting styles and evaluate what works best for their specific needs. With a user-friendly interface and advanced prompt engineering tools, Portkey.ai lets you set up, test, and refine prompts with real-time feedback.

Key benefits of using Portkey.ai for zero-shot and few-shot experimentation include:

- Side-by-Side Comparison: Seamlessly toggle between zero-shot and few-shot prompting, allowing direct comparisons to see which approach yields the most relevant responses.

- Custom Prompt Libraries: Build and store libraries of example prompts for few-shot setups, making it easy to reuse and refine prompts across different applications.

- Performance Metrics: Track accuracy, response time, and user satisfaction across different prompting techniques, enabling data-driven insights into what’s working.

- A/B Testing Tools: Test multiple prompt variations in real-world scenarios, helping you fine-tune each approach based on live data.
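
Independent of any particular tool, the side-by-side comparison idea can be sketched in a few lines: run the same labeled inputs through a zero-shot and a few-shot prompt and compare accuracy. Everything here is an assumption for illustration, including the prompt wording, the sample reviews, and especially `stub_model`, a keyword-matching placeholder that stands in for a real model call. It is not Portkey's API, and its scores say nothing about how a real model would behave.

```python
from typing import Callable, List, Tuple

INSTRUCTION = "Classify the sentiment as positive or negative."
# Hypothetical few-shot examples.
SHOTS = [("Loved it, works perfectly.", "positive"), ("Broke within a week.", "negative")]


def zero_shot_prompt(text: str) -> str:
    return f"{INSTRUCTION}\nReview: {text}\nSentiment:"


def few_shot_prompt(text: str) -> str:
    shots = "\n".join(f"Review: {review}\nSentiment: {label}" for review, label in SHOTS)
    return f"{INSTRUCTION}\n{shots}\nReview: {text}\nSentiment:"


def stub_model(prompt: str) -> str:
    """Placeholder for a real model call: inspects only the final review."""
    last_review = prompt.rsplit("Review:", 1)[1]
    return "positive" if "great" in last_review.lower() else "negative"


def accuracy(
    build_prompt: Callable[[str], str],
    model_fn: Callable[[str], str],
    labeled_data: List[Tuple[str, str]],
) -> float:
    """Fraction of inputs where the model's label matches the expected label."""
    correct = 0
    for text, expected in labeled_data:
        prediction = model_fn(build_prompt(text)).strip().lower()
        if prediction == expected:
            correct += 1
    return correct / len(labeled_data)


# Hypothetical labeled evaluation set.
eval_set = [
    ("Great value for the price.", "positive"),
    ("Stopped charging after a month.", "negative"),
]

zero_shot_accuracy = accuracy(zero_shot_prompt, stub_model, eval_set)
few_shot_accuracy = accuracy(few_shot_prompt, stub_model, eval_set)
print(zero_shot_accuracy, few_shot_accuracy)
```

Swapping `stub_model` for a function that calls your actual model, and `eval_set` for real labeled data, turns this into a basic comparison harness; response time could be tracked the same way by timing each call.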

By centralizing prompt management and offering in-depth analytics, Portkey.ai provides a powerful environment for maximizing AI performance through continuous experimentation. See firsthand which prompting style best aligns with your team’s goals—and optimize accordingly.