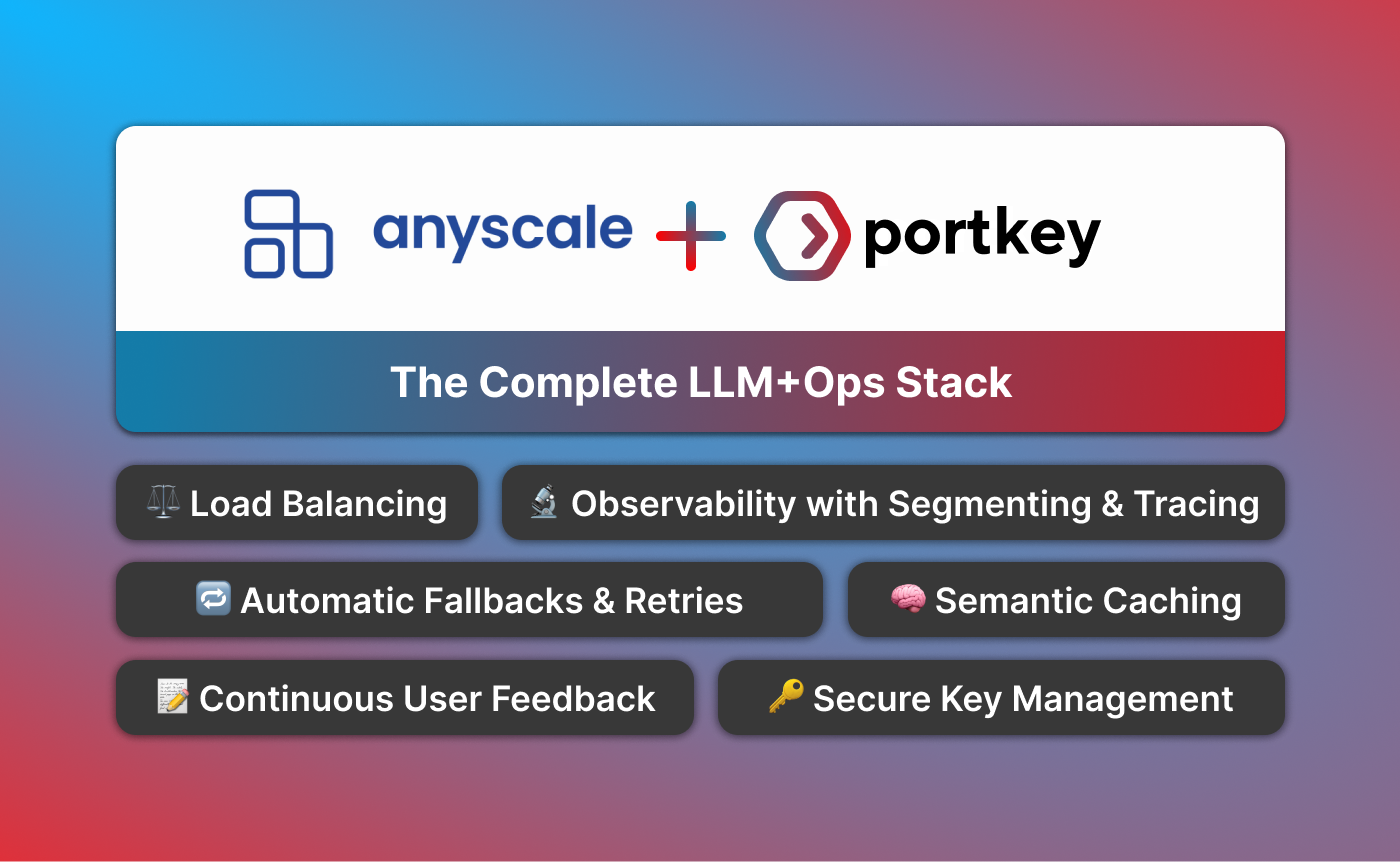

Anyscale's OSS Models + Portkey's Ops Stack

The landscape of AI development is rapidly evolving, and open-source Large Language Models (LLMs) have emerged as a key foundation for building AI applications.

Anyscale has been a game-changer here, offering fast, low-cost APIs for Llama 2, Mistral, and other OSS models.

But to harness the full potential of these LLMs, developers need a robust LLMOps stack that combines reliability, security, fine-tuning, and observability. This is where Portkey and Anyscale Endpoints come together!

Used together, Portkey and Anyscale Endpoints offer a comprehensive LLMOps stack for developing AI applications with open-source LLMs. Portkey provides observability, a model management and improvement suite, security, and reliability, while Anyscale Endpoints offers open LLMs as a service, private deployment, and fine-tuning. The result is a powerful toolkit for developers.

Anyscale Endpoints: Streamlining LLM Serving and Fine-Tuning

Anyscale Endpoints brings efficiency to the table:

- Private Deployment: Deploy LLM endpoints in your cloud environment, a key feature for maintaining data privacy and governance.

- Fine-Tuning: Tailor open-source models with your data, an essential step for developing bespoke applications that balance cost and performance.

- State-of-the-Art Open LLMs: Access a range of optimized open-source models, allowing you to choose the best fit for your project scale and requirements.

Portkey: Enhancing Your Development Workflow

Portkey is a developer-centric platform that supports the full lifecycle of your AI applications. Here's how it can benefit your development process:

- Observability: Keep a close eye on your LLM applications with real-time monitoring, which is key to timely troubleshooting and optimization.

- Security and Compliance: As concerns about data security grow, Portkey's robust security protocols and its compliance with SOC2, HIPAA, and GDPR become invaluable.

- AI Gateway: Portkey's gateway integrates with Anyscale and other LLM providers, offering features like semantic caching and automated fallbacks for better reliability and cost management.

- Model Management & Improvement: Efficiently manage prompt templates and leverage Portkey's logging capabilities for targeted model fine-tuning on Anyscale.
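To make the idea of automated fallbacks concrete, here is a minimal sketch of the pattern in plain Python. It is not Portkey's implementation or API: `complete_with_fallback`, `AllProvidersFailed`, `flaky_primary`, and `stable_backup` are hypothetical names invented for illustration, and the two provider functions are stand-ins for real LLM clients.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the fallback chain has failed."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each provider in order and return (provider_name, completion)."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:
            # Assumption: any exception triggers a fallback. A production
            # gateway would likely fall back only on selected error types.
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for real provider clients.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def stable_backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, text = complete_with_fallback(
    "Hello", [("primary", flaky_primary), ("backup", stable_backup)]
)
print(used, text)  # prints "backup echo: Hello"
```

The point of a gateway is that this retry-and-reroute logic lives in one place rather than in every application that calls an LLM.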

Combining Portkey and Anyscale Endpoints

By integrating Portkey with Anyscale Endpoints, you create a comprehensive LLMOps stack that not only covers all aspects of AI application development but also streamlines the process, allowing you to focus on innovation and efficiency.

Ready to boost your AI development process? Get started with Portkey + Anyscale!