Bringing GenAI to the classroom

Discover how top universities like Harvard and Princeton are scaling GenAI access responsibly across campus and how Portkey is helping them manage cost, privacy, and model access through Internet2’s service evaluation program.

Universities are scrambling to adapt as AI rapidly changes how we create and share knowledge. It's no longer just a topic for computer science departments—AI literacy is becoming essential across all disciplines.

In this post, we'll dive into the real-world applications of generative AI in education today, examine the major hurdles universities are facing, and look at how leading institutions are navigating these challenges successfully. If you're developing AI tools that might find applications in education, or are just curious about this rapidly evolving space, you'll find some valuable insights here.

Why GenAI belongs in today's classroom

Generative AI is quickly becoming foundational, much like computer literacy was in the early 2000s. But this is about more than helping computer science students write better code or researchers craft better papers. It's about giving every student powerful tools to learn, create, and solve problems, regardless of their field of study.

Universities are recognizing this shift and moving to integrate AI across the academic experience. Students want to use AI tools in their day-to-day learning. Faculty see value in automating repetitive tasks. And administrators are eyeing ways to make operations more efficient.

GenAI is no longer confined to labs or advanced CS classes. It’s showing up in humanities, law, business, and the arts—helping students ideate faster, summarize better, and create more freely.

The challenges in bringing AI to the classroom

While the potential of generative AI in education is exciting, universities aren't finding implementation to be straightforward. The reality is that schools are facing several complex challenges that go well beyond simply giving students access to an LLM.

Students and faculty are naturally eager to use the most powerful models available—whether that's GPT-4, Claude, Gemini, or others. But this widespread access raises important questions about oversight. How can a university ensure ethical use when hundreds or thousands of users are interacting with these systems daily?

Privacy and data protection present another significant hurdle. When students interact with AI systems, they often share sensitive personal information or academic work. Universities need robust guarantees that these inputs won't be stored, shared with third parties, or misused, especially when working with external API providers who may have different data policies.

New models and versions keep appearing almost every week. Universities simply can't afford to rebuild their integration systems every time a significant update rolls out. They need a flexible layer that can handle this complexity without constant re-engineering.

Cost management is proving to be another major challenge. AI usage patterns can spike unpredictably, particularly when students begin exploring models with large context windows or conducting extensive research. Without proper visibility into usage and appropriate limits, budgets that seemed reasonable can quickly spiral out of control.

Finally, there's the gap in infrastructure and technical expertise. Most universities don't have the engineering resources to build a secure AI stack from scratch. Faculty members want AI tools that "just work" without requiring them to become prompt engineers or system administrators themselves.

Today, many universities are tackling this department by department. Each school or research group often sets up its own AI subscriptions, leading to duplicated efforts, inconsistent access, and unused credits sitting idle. There's no centralized view of usage or spend, making it hard to optimize.

How Portkey is helping top universities solve this

All universities are navigating the same GenAI challenge: how to scale access to powerful AI tools while keeping security, privacy, and costs in check.

It’s not enough to offer AI to one department. Universities want to democratize access across campus—from business schools to engineering labs—without creating chaos in the process. That’s exactly where Portkey comes in.

One of the biggest advantages Portkey offers is centralized access to multiple AI models. Instead of managing separate subscriptions and integrations for GPT-4, Claude, Mistral, Gemini, and others, universities can connect to all these models through a single, unified API. This immediately eliminates the fragmentation that makes large-scale AI deployment so complex. New models are also added to Portkey as they are released, so teams can try them alongside the models they already use.
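To make the idea of a single entry point concrete, here is a minimal sketch of a gateway that routes requests to different providers by model name. It is an illustration only, not Portkey's API: the `ModelGateway` class, the `register` and `complete` methods, and the model names are all hypothetical, and the providers are stand-in functions rather than real model calls.

```python
from typing import Callable, Dict

# Hypothetical provider adapter: takes a prompt, returns generated text.
ProviderFn = Callable[[str], str]


class ModelGateway:
    """Toy gateway that routes a request to a registered provider by model name."""

    def __init__(self) -> None:
        self._providers: Dict[str, ProviderFn] = {}

    def register(self, model: str, provider: ProviderFn) -> None:
        # Adding a new model is one registration call, not a new integration.
        self._providers[model] = provider

    def complete(self, model: str, prompt: str) -> str:
        if model not in self._providers:
            raise KeyError(f"unknown model: {model}")
        return self._providers[model](prompt)


# Stand-in providers (assumptions); a real adapter would call a vendor SDK.
gateway = ModelGateway()
gateway.register("model-a", lambda prompt: f"[model-a] {prompt}")
gateway.register("model-b", lambda prompt: f"[model-b] {prompt}")

reply = gateway.complete("model-a", "Summarize chapter 3")
```

Callers only ever talk to `gateway.complete`, so swapping or adding a model does not change application code.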

When it comes to responsible use, Portkey provides organization-wide guardrails that give administrators peace of mind. Universities can enforce consistent usage policies, block specific types of inputs or outputs, and implement moderation filters across all LLM interactions. The platform's role-based access control (RBAC) capabilities allow institutions to set different permission levels for administrators, faculty, and students, ensuring that each user only has access to the models and features appropriate for their role.
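As a rough illustration of how role-based access and an input filter might combine, the sketch below checks a request against a role-to-model map and a blocklist before it would be forwarded. The role names, model names, blocked terms, and the `authorize` function are assumptions made for this example; they do not describe Portkey's actual configuration format.

```python
from typing import Tuple

# Hypothetical policy: which models each role may call.
ROLE_MODELS = {
    "admin": {"model-a", "model-b", "model-c"},
    "faculty": {"model-a", "model-b"},
    "student": {"model-a"},
}

# Hypothetical input guardrail: terms that should not appear in prompts.
BLOCKED_TERMS = ("password", "social security number")


def is_model_allowed(role: str, model: str) -> bool:
    # Unknown roles get an empty permission set.
    return model in ROLE_MODELS.get(role, set())


def passes_input_guardrail(prompt: str) -> bool:
    lowered = prompt.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def authorize(role: str, model: str, prompt: str) -> Tuple[bool, str]:
    """Return (allowed, reason) for a single request."""
    if not is_model_allowed(role, model):
        return False, "model not permitted for role"
    if not passes_input_guardrail(prompt):
        return False, "prompt blocked by input guardrail"
    return True, "ok"
```

Keeping the policy in one place is what lets the same rules apply to every department, rather than each tool enforcing its own.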

On the cost side, administrators can set specific budgets for each department, track consumption in real time, and prevent overuse before it happens. This addresses two common problems: unused credits that go to waste and unexpected bills that blow the budget.
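The budget logic described here can be sketched in a few lines. This is a simplified model under stated assumptions, not Portkey's implementation: the `DepartmentBudget` class and its fields are hypothetical, and amounts are tracked in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass
class DepartmentBudget:
    """Hypothetical per-department spending cap, tracked in cents."""

    limit_cents: int
    spent_cents: int = 0

    def remaining_cents(self) -> int:
        return self.limit_cents - self.spent_cents

    def try_charge(self, cost_cents: int) -> bool:
        """Record the cost if it fits under the cap; refuse it otherwise."""
        if cost_cents < 0:
            raise ValueError("cost must be non-negative")
        if self.spent_cents + cost_cents > self.limit_cents:
            return False  # request would exceed the budget, so block it
        self.spent_cents += cost_cents
        return True


# Example: a department with a 50.00 budget (values are illustrative).
history = DepartmentBudget(limit_cents=5000)
first = history.try_charge(4200)   # fits under the cap
second = history.try_charge(1000)  # would exceed the cap, so it is refused
```

Checking the cap before the request is sent is what prevents overuse "before it happens", as opposed to discovering it on the next invoice.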

Portkey also includes built-in observability and comprehensive audit logs. Every single request gets logged with detailed metadata, enabling teams to not only analyze usage patterns but also maintain a complete audit trail for compliance and governance purposes. These logs provide the transparency needed for administrative oversight and can be invaluable during security reviews or when addressing potential misuse.
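For a sense of what a per-request audit record with metadata could look like, the sketch below writes each request as one JSON line and then aggregates token usage by department. The field names and both helper functions are assumptions chosen for illustration; Portkey's actual log schema may differ.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, Optional


def make_audit_record(
    user_id: str,
    department: str,
    model: str,
    prompt_tokens: int,
    completion_tokens: int,
    timestamp: Optional[str] = None,
) -> str:
    """Serialize one request as a JSON line (hypothetical schema)."""
    record = {
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "department": department,
        "model": model,
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
    }
    return json.dumps(record, sort_keys=True)


def tokens_by_department(lines: Iterable[str]) -> Dict[str, int]:
    """Sum prompt and completion tokens per department from JSON-line records."""
    totals: Dict[str, int] = defaultdict(int)
    for line in lines:
        record = json.loads(line)
        totals[record["department"]] += (
            record["prompt_tokens"] + record["completion_tokens"]
        )
    return dict(totals)


log = [
    make_audit_record("u1", "law", "model-a", 120, 80, "2025-01-01T00:00:00+00:00"),
    make_audit_record("u2", "law", "model-b", 50, 50, "2025-01-01T00:05:00+00:00"),
    make_audit_record("u3", "arts", "model-a", 10, 30, "2025-01-01T00:07:00+00:00"),
]
usage = tokens_by_department(log)
```

The same records serve both purposes the paragraph mentions: aggregated, they show usage patterns; kept individually, they form the audit trail.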

Perhaps most importantly for resource-constrained IT departments, Portkey doesn't require a heavy infrastructure investment. It integrates smoothly with existing university systems, which means IT teams don't have to build or maintain complex AI infrastructure from scratch.

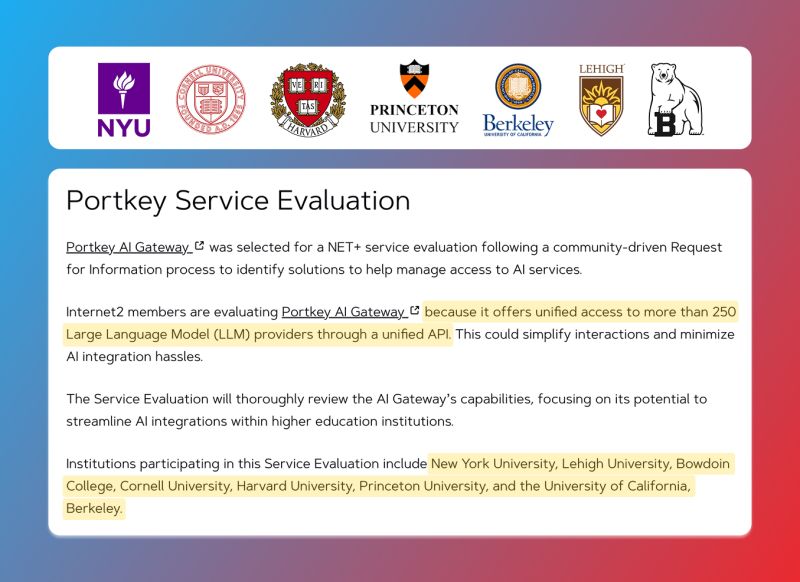

Several leading universities, including Harvard, Princeton, Cornell, and UC Berkeley, are now evaluating Portkey as their official AI gateway.

By participating in Internet2’s service evaluation program, Portkey is committed to building not just what works technically, but what aligns with the security, compliance, and governance standards expected in academia.

The path forward

Generative AI isn’t a future skill—it’s a present-day requirement. Universities that move fast to embed AI across their academic fabric will be the ones best preparing students for the real world.

But speed without strategy is risky. That’s why institutions need a platform that gives them flexibility, visibility, and control—all without slowing innovation.

At Portkey, we’re proud to help leading universities take this leap responsibly. Whether you’re rolling out AI tools across departments or just starting to explore how LLMs fit into your campus strategy, we’re here to help.

If you're a university leader, faculty member, or IT decision-maker trying to scale AI access, let’s talk. We’d love to share what we've learned from working with top-tier institutions and show you how Portkey can support your mission.