What is an AI Gateway and how to choose one in 2026

Learn what an AI gateway is, why organizations use it, and how to evaluate solutions for governance, access, and reliability.

AI use cases inside organizations have multiplied rapidly. What began with a handful of teams experimenting is now sprawling across customer support, research, product development, and internal operations.

To facilitate easier adoption, support growth at scale, and enforce governance across these expanding use cases, organizations need AI gateways.

An AI gateway has emerged as the control plane for this complexity. It standardizes access to providers, enforces policies, and gives organizations the visibility they need to scale AI responsibly.

In 2025, AI gateways are no longer optional. They’ve become a critical part of the AI stack.

What is an AI gateway

An AI gateway sits between your applications and the growing ecosystem of AI providers. Instead of every team wiring directly into different APIs, the gateway provides a single standard interface and adds the operational scaffolding needed to run AI at scale.

The core functions most gateways provide today include:

- Standardized access across providers: Connect once and route to OpenAI, Anthropic, AWS Bedrock, Google Vertex AI, and more without custom integrations.

- Routing and load balancing: Distribute traffic across models or providers to optimize for cost, performance, or availability.

- Governance and guardrails: Enforce policies on access, apply safety filters, and ensure compliance with data regulations.

- Monitoring and logging: Capture every request and response with detailed metadata for observability, debugging, and audits.
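To make these core functions concrete, here is a minimal sketch of the "connect once" idea: one entry point that dispatches to provider adapters and logs every request and response. The class name `MiniGateway`, the provider labels, and the stub adapters are all hypothetical stand-ins for illustration; they are not any vendor's actual API.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical adapter type: takes a prompt, returns the model's text.
ProviderFn = Callable[[str], str]


@dataclass
class MiniGateway:
    """Illustrative only: a single interface in front of several providers."""
    providers: Dict[str, ProviderFn]
    log: List[dict] = field(default_factory=list)

    def complete(self, provider: str, prompt: str) -> str:
        """Dispatch to the named provider and record the call."""
        if provider not in self.providers:
            raise KeyError(f"unknown provider: {provider}")
        start = time.perf_counter()
        text = self.providers[provider](prompt)
        # Every request and response is captured with basic metadata.
        self.log.append({
            "provider": provider,
            "prompt": prompt,
            "response": text,
            "latency_s": time.perf_counter() - start,
        })
        return text


# Stub adapters stand in for real provider SDK calls (assumption).
gateway = MiniGateway(providers={
    "provider_a": lambda prompt: f"[a] {prompt}",
    "provider_b": lambda prompt: f"[b] {prompt}",
})
```

Application code calls `gateway.complete(...)` and never touches a provider SDK directly, which is what lets policies and logging live in one place.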

By 2025, expectations of gateways have expanded. Beyond these basics, organizations now look for support with agent orchestration, MCP (Model Context Protocol) compatibility, and advanced cost governance. These capabilities make the gateway a long-term platform, not just a routing layer.

Key factors to evaluate when choosing an AI gateway

Not all gateways are built the same. As AI usage spreads across an organization, the gateway becomes a foundational layer that impacts reliability, security, and costs. Here are the factors that matter most when choosing one in 2025:

1. Multi-provider coverage

Your teams need flexibility. Look for a gateway that supports all major providers (OpenAI, Anthropic, AWS Bedrock, Google Vertex, Cohere, and emerging players) without requiring new integrations each time. Support for different API formats (like messages vs. chat.completions) and multimodal workloads (text, image, video) is just as important.
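As an illustration of what handling different API formats involves, the sketch below converts a chat.completions-style request into a messages-style one. It assumes text-only content and relies on two well-known differences: the messages format carries the system prompt as a top-level `system` field and expects `max_tokens` to be set. The function name and the default of 1024 are hypothetical choices, and a real gateway would handle many more fields.

```python
from typing import Any, Dict, List


def chat_completions_to_messages(
    request: Dict[str, Any], default_max_tokens: int = 1024
) -> Dict[str, Any]:
    """Convert a chat.completions-style request to a messages-style request.

    Simplified sketch: handles plain-text messages only.
    """
    system_parts: List[str] = []
    turns: List[Dict[str, str]] = []
    for msg in request["messages"]:
        if msg["role"] == "system":
            # System prompts move out of the message list.
            system_parts.append(msg["content"])
        else:
            turns.append({"role": msg["role"], "content": msg["content"]})

    converted: Dict[str, Any] = {
        "model": request["model"],
        "messages": turns,
        "max_tokens": request.get("max_tokens", default_max_tokens),
    }
    if system_parts:
        converted["system"] = "\n\n".join(system_parts)
    return converted
```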

2. Performance and reliability

As usage scales, performance can make or break adoption. Evaluate latency, throughput, and SLA commitments. The right gateway should handle billions of tokens daily with low latency, while offering redundancy and failover across regions.
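Failover, in its simplest form, means trying an ordered list of targets until one succeeds. The sketch below shows that pattern with hypothetical stub functions in place of real provider or region endpoints; production gateways would typically add timeouts, retries with backoff, and health checks on top.

```python
from typing import Callable, List, Tuple

Target = Tuple[str, Callable[[str], str]]


def call_with_failover(targets: List[Target], prompt: str) -> Tuple[str, str]:
    """Try each target in order; return (target_name, response) from the first success."""
    errors: List[str] = []
    for name, call in targets:
        try:
            return name, call(prompt)
        except Exception as exc:  # illustrative: a real gateway would be more selective
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all targets failed: " + "; ".join(errors))


# Hypothetical stubs: the primary is down, the backup answers.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary region unavailable")


def stable_backup(prompt: str) -> str:
    return f"backup answered: {prompt}"


served_by, answer = call_with_failover(
    [("primary", flaky_primary), ("backup", stable_backup)], "ping"
)
```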

3. Governance and security

A gateway is only useful if it helps keep AI usage safe and compliant. Critical features include:

- Role-based access and identity management (SSO, SCIM)

- Budget and rate limits per team or department

- Guardrails for prompt injection, unsafe outputs, and compliance frameworks

- Regional data residency and sovereignty controls to meet regulatory needs
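Of the controls above, per-team budgets and rate limits are the easiest to sketch in code. The example below is a simplified illustration with hypothetical names (`TeamPolicy`, `PolicyEnforcer`): it uses a fixed one-minute window for rate limiting and a running spend total that is never reset, whereas a real system would persist state and reset budgets each billing period.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class TeamPolicy:
    monthly_budget_usd: float
    max_requests_per_minute: int


class PolicyEnforcer:
    """Illustrative in-memory check of budget and rate limits per team."""

    def __init__(self, policies: Dict[str, TeamPolicy]):
        self.policies = policies
        self.spend = {team: 0.0 for team in policies}
        # Per team: (minute index, requests seen in that minute).
        self.windows: Dict[str, Tuple[int, int]] = {team: (0, 0) for team in policies}

    def check(self, team: str, estimated_cost_usd: float, now_s: float) -> Tuple[bool, str]:
        """Return (allowed, reason); record the request only if it is allowed."""
        policy = self.policies[team]
        if self.spend[team] + estimated_cost_usd > policy.monthly_budget_usd:
            return False, "budget exceeded"

        minute = int(now_s // 60)
        window_minute, count = self.windows[team]
        if window_minute != minute:
            count = 0  # a new minute starts a fresh window
        if count >= policy.max_requests_per_minute:
            return False, "rate limit exceeded"

        self.windows[team] = (minute, count + 1)
        self.spend[team] += estimated_cost_usd
        return True, "ok"
```

Passing the current time in as `now_s` keeps the sketch deterministic; in practice it would come from a clock such as `time.time()`.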

4. Cost and usage management

AI spend grows fast. The gateway should give you real-time visibility into usage and costs across teams and providers. Features like caching, batching, and smart routing are must-haves to cut costs without slowing adoption.
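Caching is the most direct of these savings: an identical request does not need to be paid for twice. Below is a minimal exact-match cache, assuming a hypothetical `call_model(model, prompt)` function that stands in for the billable provider call. Real gateways may also offer semantic caching, expiry, and size limits, none of which are shown here.

```python
import hashlib
import json
from typing import Callable, Dict


class CachedClient:
    """Illustrative exact-match response cache keyed on (model, prompt)."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self.call_model = call_model  # hypothetical billable call
        self.cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def complete(self, model: str, prompt: str) -> str:
        # Hash the request so the key has a fixed size regardless of prompt length.
        key = hashlib.sha256(json.dumps([model, prompt]).encode("utf-8")).hexdigest()
        if key in self.cache:
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        result = self.call_model(model, prompt)
        self.cache[key] = result
        return result
```

Tracking `hits` and `misses` gives a simple hit rate, which is the number that determines how much spend the cache actually avoids.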

5. Observability and debugging

When things break, you need to know why. A strong gateway provides unified logs for every request and integrates with your monitoring stack (OpenTelemetry, Datadog, Splunk, etc.). End-to-end traces that connect model calls, tool invocations, and downstream systems are vital for debugging and governance.
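The idea behind an end-to-end trace is that every step of a request shares one trace ID, so model calls and tool invocations can be stitched together afterwards. The sketch below shows this with a hypothetical `Trace` class built on the standard library only; a production setup would more likely emit spans through OpenTelemetry or a vendor SDK.

```python
import time
import uuid
from contextlib import contextmanager
from typing import Any, Dict, Iterator, List


class Trace:
    """Illustrative trace: all spans share a single trace_id."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: List[Dict[str, Any]] = []

    @contextmanager
    def span(self, name: str, **attributes: Any) -> Iterator[Dict[str, Any]]:
        record: Dict[str, Any] = {
            "trace_id": self.trace_id,
            "name": name,
            "attributes": attributes,
            "status": "ok",
        }
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record["status"] = f"error: {exc}"
            raise
        finally:
            # Recorded on success and on failure alike.
            record["duration_s"] = time.perf_counter() - start
            self.spans.append(record)


trace = Trace()
with trace.span("llm_call", model="example-model"):
    pass  # the model request would happen here
with trace.span("tool_invocation", tool="search"):
    pass  # the downstream tool call would happen here
```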

6. Integrations with AI apps and agent frameworks

A gateway should fit seamlessly into your existing stack, not force you to rebuild it. Look for native integrations with popular agent frameworks like LangChain, LlamaIndex, or Haystack, as well as support for enterprise AI applications. This ensures teams can connect their agents, workflows, and internal systems directly through the gateway without custom engineering overhead.

7. Support for private LLM deployments

Many organizations are moving beyond public APIs and running models inside private clouds for security, compliance, or cost reasons. An enterprise-ready gateway must support LLM deployments on AWS, GCP, and Azure, alongside public providers. This allows organizations to unify governance and monitoring across both external APIs and internal deployments in a single control layer.

8. Future-readiness

The AI stack is evolving quickly. Choose a gateway that’s compatible with new standards like the Model Context Protocol (MCP), supports agent workflows, and provides extensibility through APIs and plugins. Future-readiness ensures you won’t be locked into yesterday’s architecture.

Checklist for decision makers

When assessing AI gateways, ask these questions to ensure you’re choosing one that will scale with your organization’s needs:

❓ Does it support a broad range of providers — OpenAI, Anthropic, Bedrock, Vertex, Cohere — as well as new models and formats?

❓ Can it guarantee performance with low latency, high throughput, and clear SLAs?

❓ Does it offer enterprise-grade governance — SSO/SCIM, role-based access, budgets, rate limits, and guardrails?

❓ Will it give you real-time visibility into costs and usage, with optimizations like caching and batching built in?

❓ Does it provide detailed logs, OpenTelemetry integration, and full end-to-end traces for observability?

❓ Can it integrate easily with agent frameworks like LangChain and LlamaIndex, and work with existing AI applications?

❓ Does it support private LLM deployments on AWS, GCP, and Azure in addition to public APIs?

❓ Is it future-ready, with MCP compatibility, agent orchestration, and extensibility through plugins and APIs?

If the answer is “no” for too many of these, the gateway may not be the right fit.

Why Portkey is the right choice for enterprises

Choosing the right gateway comes down to whether the platform can handle real-world scale, governance, and evolving AI adoption.

Portkey was designed to meet these demands end-to-end, and today it powers AI usage for both Fortune 500 enterprises and leading universities.

Broad provider and deployment support

Portkey connects to 1,600+ LLMs, including custom and fine-tuned models, across providers. This means enterprises can bring both public models and internal models under a single layer of governance, without teams needing to re-integrate every time.

Enterprise-grade governance and guardrails

Customers use Portkey to enforce strict access policies at scale. Role-based access (via SSO and SCIM), per-department budgets, and usage quotas make it possible to govern hundreds of teams while preventing key sprawl. On top of that, Portkey provides guardrails for prompt injection, unsafe outputs, and compliance frameworks, ensuring AI usage is safe across classrooms, research, and production environments.

Prompt management

As AI adoption grows, managing prompts becomes as important as managing code. Portkey provides versioning, collaboration, and central storage for prompts, so teams can experiment without losing control. Paired with evaluation integrations (like Promptfoo), this makes it easier to test, iterate, and deploy production-ready prompts while keeping them auditable and governed.

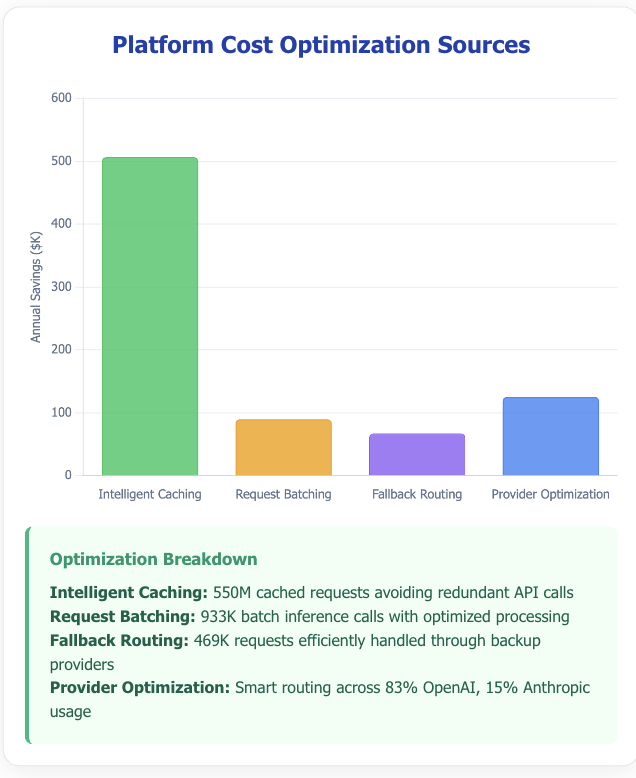

Cost optimization at scale

One food delivery platform scaled from 37M to over 2B monthly requests on Portkey, cutting effective spend growth by half through caching, batching, and routing optimization. Dashboards give finance and procurement teams real-time spend visibility across every provider and department.

Deep observability and debugging

For organizations running complex agent workflows, Portkey provides unified traces that connect LLM calls to downstream actions, errors, and latencies. This makes debugging failures far simpler than piecing together logs from different systems.

Future-readiness

Portkey is one of the first gateways to add MCP compatibility, letting enterprises securely expose internal and external MCP servers with unified authentication and discovery. Reach out to us for early access!

It also integrates with agent frameworks like LangChain and LlamaIndex, so teams can keep building with their existing tools.

Customer momentum

- A top-5 global university uses Portkey to govern AI adoption across classrooms, research labs, and student services.

- A consumer platform with 1,000+ engineers standardized its AI access on Portkey, reducing credential sprawl and saving millions in projected annual spend.

- Multiple Internet2 member universities are rolling out Portkey as their official AI access layer.

With Portkey, organizations get the control plane that makes AI adoption secure, governable, and cost-effective at scale.

Making the right choice in 2025

AI is now woven into the fabric of how organizations operate — from research and classrooms to customer products and enterprise workflows. With this expansion, the gateway you choose becomes one of the most important infrastructure decisions you’ll make.

The right gateway will determine how easily your teams adopt new models, how securely you can govern usage, and how effectively you manage costs at scale. More than just a connector, it’s the platform that decides whether AI becomes a source of innovation or a source of risk.

Portkey is built to be that platform. By combining provider flexibility, governance, observability, cost control, and future-ready features like MCP and agent integrations, Portkey gives enterprises and universities a proven foundation for scaling AI.

Book a demo with Portkey to see how leading organizations are already using the AI gateway to govern, optimize, and grow their AI adoption.