Scaling LibreChat for enterprise use: tracking, visibility, and governance

LibreChat is powerful, but lacks the control and visibility enterprises need. Learn how Portkey makes LibreChat production-ready with governance, observability, and security guardrails.

LibreChat has quickly become a favorite among developers and AI teams looking to build custom chat interfaces on top of large language models. It’s open-source, flexible, and supports multiple providers out of the box — including OpenAI, Anthropic, and more.

Many teams start by spinning up LibreChat in their internal environments to help with tasks like drafting content, summarizing documents, answering FAQs, or writing code. It’s fast to set up, easy to extend, and doesn’t require signing up for a hosted service, making it a popular choice for early experiments and lightweight use cases.

But as usage grows and more teams start relying on it day to day, you need something more.


But LibreChat alone isn’t enough for enterprises

While LibreChat works well for early experimentation, it starts to fall short when multiple teams begin using it across an organization.

Once LibreChat is deployed, there’s no easy way to track who’s using it, how often, or what it’s being used for. All usage flows through the same shared interface, so teams can’t account for usage or break down costs and spend by team or user.

That kind of opacity might be manageable in a small team. But for an enterprise, where usage needs to be predictable, traceable, and governed, it becomes a blocker.

There's also no accountability on costs. Because LibreChat doesn’t track usage by user or team, it’s impossible to map prompt activity to actual spend. Finance teams are left in the dark, only finding out about cost overruns after the invoice hits.

Then there’s model governance. LibreChat lets anyone select models and adjust parameters freely. For enterprises with internal policies, security standards, or procurement constraints, that kind of freedom introduces unnecessary risk.

Finally, LibreChat offers no built-in guardrails, so there is no way to flag when someone pastes sensitive data into a prompt, or when a model returns something inappropriate. And when something goes wrong, for example a model fails or a request times out, there's no audit trail to debug what happened.

So while LibreChat is a powerful tool, it doesn’t come with the control, oversight, or safety nets that enterprises need to deploy it at scale.

Portkey solves these challenges

This is where Portkey comes in.

Portkey integrates directly with LibreChat, no major code changes required, and fills in the missing pieces that enterprises care about: control, visibility, and accountability.

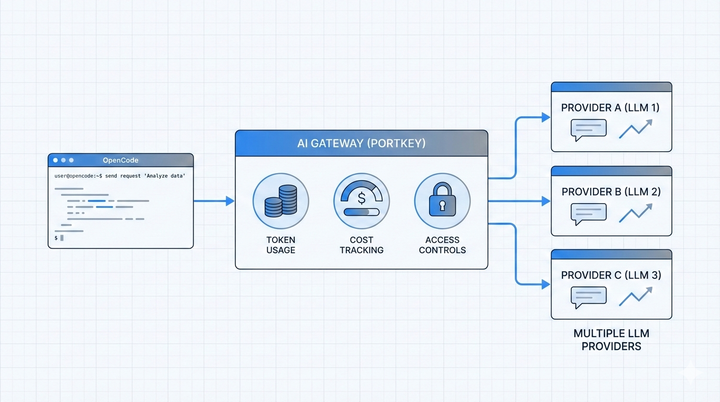

Instead of every user in LibreChat hitting model APIs directly, Portkey sits in the middle as a unified AI gateway. All requests flow through it, giving teams the ability to track, manage, and secure every interaction without slowing anyone down.

Here’s what you get:

- You get full visibility into usage, down to the user, model, and prompt level. Every interaction is logged and enriched with metadata, so you can see which team is using what, and why.

- You get clarity on spend — Portkey tracks costs in real time across users and teams, helping you prevent overruns before they happen. No more chasing invoices or trying to retroactively assign usage.

- You regain control over model access — enforce which providers or models are allowed, set soft or hard rate limits, and apply routing rules across your org.

- You add guardrails without rewriting LibreChat — enable PII detection, block unsafe prompts, and meet compliance requirements with minimal setup.

- You improve reliability — Portkey adds retries, timeouts, and fallback logic to model calls. When something breaks, you get detailed traces to debug it quickly.
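To make the reliability point concrete, here is a minimal sketch of what a retry-plus-fallback gateway config could look like, built as a plain Python dictionary and serialized to JSON. The field names (`strategy`, `targets`, `retry`, `virtual_key`) are assumptions based on Portkey's gateway config format and should be checked against the current Portkey documentation. The virtual key names are placeholders, and `build_gateway_config` is a hypothetical helper, not part of any SDK.

```python
import json


def build_gateway_config(primary_key: str, fallback_key: str, retry_attempts: int = 3) -> dict:
    """Build a config that retries failed calls and falls back to a second target.

    Hypothetical helper: the schema below is an assumption, not a verified contract.
    """
    return {
        # Try targets in order, moving to the next one when a call fails.
        "strategy": {"mode": "fallback"},
        # Retry a failing request this many times before giving up on a target.
        "retry": {"attempts": retry_attempts},
        "targets": [
            {"virtual_key": primary_key},
            {"virtual_key": fallback_key},
        ],
    }


# Placeholder virtual key names, for illustration only.
config = build_gateway_config("openai-virtual-key", "anthropic-virtual-key")
print(json.dumps(config, indent=2))
```

Keeping this logic in a gateway-side config rather than in application code is what lets the behavior change without touching LibreChat itself.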

How the integration works

Integrating Portkey with LibreChat takes just a few minutes.

All you need to do is replace your model provider API key with a Portkey Virtual Key, and update the librechat.yaml config to route requests through Portkey. From there, you can attach metadata for user-level tracking, enforce policies, and enable guardrails, all without changing LibreChat’s codebase.
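As a rough illustration of what routing through Portkey involves, the sketch below builds the request headers a gateway-bound call would carry: a Portkey API key, a Virtual Key in place of the provider key, and a metadata payload for user-level tracking. The header names, the `_user` metadata key, and the base URL are assumptions drawn from Portkey's public conventions, so verify them against the current docs. In LibreChat, the equivalent values would typically sit in a custom endpoint entry in librechat.yaml. `portkey_headers` and all key values are hypothetical.

```python
import json

# Assumed gateway base URL; this replaces the provider's own API base URL.
PORTKEY_BASE_URL = "https://api.portkey.ai/v1"


def portkey_headers(api_key: str, virtual_key: str, user: str, team: str) -> dict:
    """Return headers for a request routed through the gateway (hypothetical helper)."""
    # Metadata travels as a JSON string; "_user" and "team" are illustrative keys.
    metadata = {"_user": user, "team": team}
    return {
        "x-portkey-api-key": api_key,
        "x-portkey-virtual-key": virtual_key,
        "x-portkey-metadata": json.dumps(metadata),
    }


# Placeholder credentials and identities, for illustration only.
headers = portkey_headers(
    api_key="PORTKEY_API_KEY",
    virtual_key="OPENAI_VIRTUAL_KEY",
    user="alice@example.com",
    team="marketing",
)
for name, value in headers.items():
    print(f"{name}: {value}")
```

Because the user and team travel with every request, usage and spend can be attributed on the gateway side without any change to the chat interface.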

What enterprises can now do

Once LibreChat is routed through Portkey, it becomes much more than a front end for LLMs: it becomes a governable, trackable, production-grade tool.

Here’s what teams are now able to do:

- Roll out LibreChat org-wide without losing oversight. Usage is logged, attributed, and auditable, with no more black-box prompts.

- Track usage patterns across teams. Spot spikes, see who’s using which models, and optimize workflows based on real data.

Portkey's analytics dashboard

- Manage costs proactively, not reactively. Set up real-time budget limits or alerts per team, so finance teams stay informed before overruns happen.

- Enforce internal policies, whether that means restricting certain models, applying safety filters, or preventing prompt misuse.

- Debug faster when something breaks. Every failure or timeout comes with a trace ID, logs, and visibility into what exactly went wrong.

Portkey's detailed logging

In short, LibreChat becomes something teams can safely use and platform teams can confidently approve.

LibreChat is now enterprise-ready

LibreChat is an excellent way to get started with LLM interfaces — it’s fast, flexible, and easy to deploy. But for enterprises, ease of use isn’t enough.

You need to know who’s using it, what it’s costing, whether it’s compliant, and if it’s reliable at scale. Without that, it’s just another tool running in the shadows.

Portkey gives you the visibility, control, and safeguards to confidently scale LibreChat across your org — without changing how your teams use it.

If your team is already experimenting with LibreChat, it’s time to make it production-ready.