Using Prompt Chaining for Complex Tasks

Master prompt chaining to break down complex AI tasks into simple steps. Learn how to build reliable workflows that cut errors and are easier to maintain in your language model applications.

Complex problems require structured solutions. While single prompts work well for straightforward tasks, they quickly become unwieldy when handling multi-step operations. Enter prompt chaining, a method that breaks sophisticated tasks into a series of focused, manageable steps.

For tasks with many moving parts, prompt chaining gives us a clear path forward, letting each step build on the one before it. From business tasks to customer service to data work, this method helps create reliable processes where precision matters.

If you're working with advanced LLM workflows, getting a grip on prompt chaining is key. We suggest starting with our guide to prompt engineering for a solid foundation.

What is Prompt Chaining?

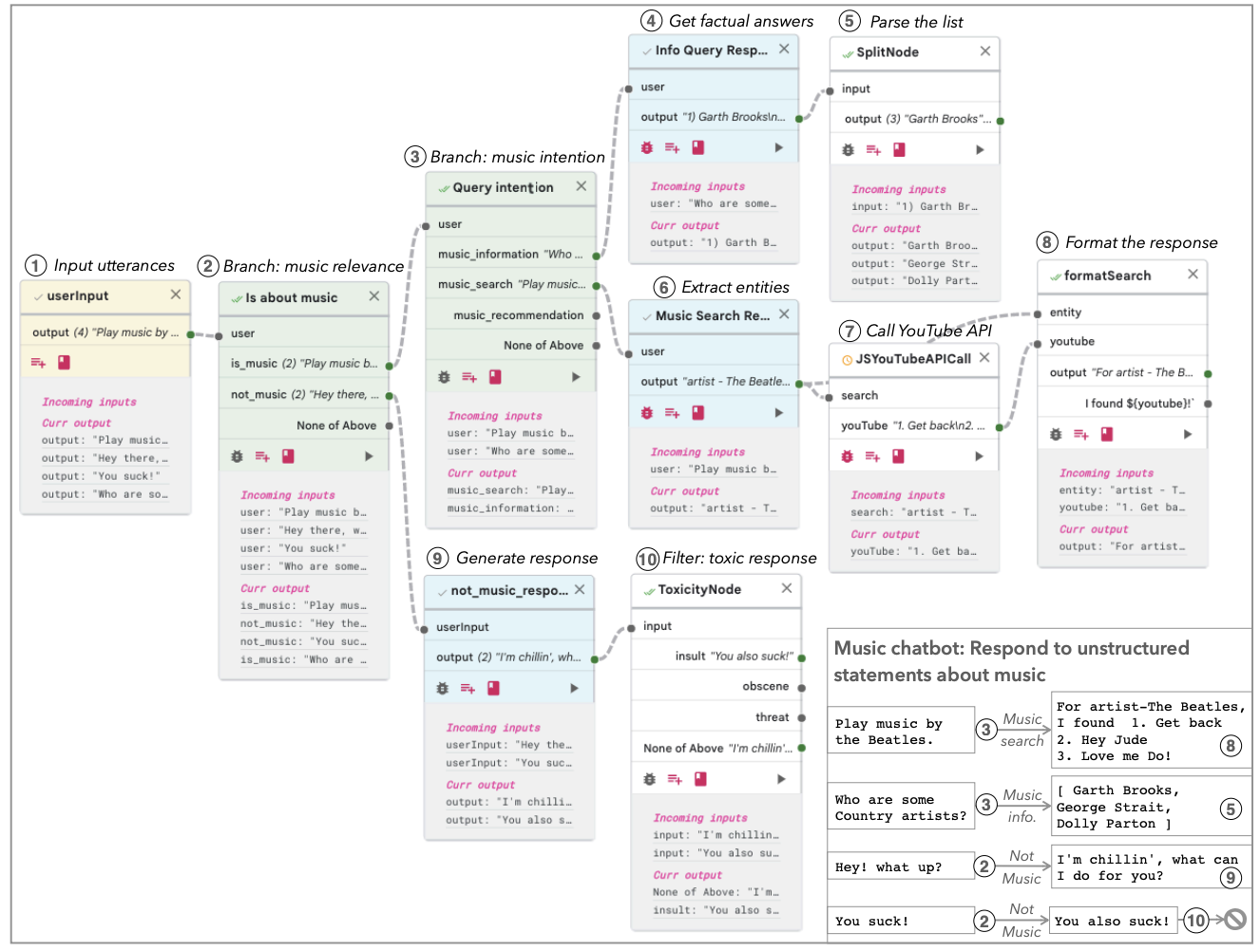

Put simply, prompt chaining links prompts together to tackle complex jobs. Rather than cramming everything into one big prompt, you split the work into clear, focused steps. Each step creates an output that feeds into the next one. This makes complex workflows easier to follow and helps catch issues early, since you can check each intermediate output before it moves on.
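Here is a minimal Python sketch of the idea, assuming each step is a plain function from text to text. The `extract_issue` and `draft_reply` steps are hypothetical stand-ins: they use a keyword check and string formatting where a real chain would send a prompt to a model. `run_chain` feeds each output into the next step.

```python
from typing import Callable, List

# A step takes the previous step's output and returns its own output.
Step = Callable[[str], str]


def run_chain(steps: List[Step], initial_input: str) -> str:
    """Run the steps in order, feeding each output into the next step."""
    result = initial_input
    for step in steps:
        result = step(result)
    return result


# Hypothetical stand-in steps: a real chain would send one focused prompt to a model here.
def extract_issue(message: str) -> str:
    return "order delayed" if "late" in message.lower() else "other"


def draft_reply(issue: str) -> str:
    return f"Thanks for reaching out about: {issue}."


print(run_chain([extract_issue, draft_reply], "My package is late again"))
# prints: Thanks for reaching out about: order delayed.
```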

Prompt chaining brings real benefits. By breaking tasks into smaller pieces, you're less likely to miss things or make mistakes. Plus, since each prompt has its own job, you can tweak any part without messing up the whole system. This building-block approach also matches how people naturally solve problems, making it easier to handle tricky decision points.

Prompt Chaining Benefits

The real power of prompt chaining shows up when you're working on complex tasks that need careful handling. Small, focused steps mean fewer mistakes: each prompt can do its job well without being overloaded with instructions.

The building-block setup means you can fix or improve any part without rebuilding everything from scratch. This really helps when you're running big processes that need regular updates. Best of all, breaking complex tasks into clear steps gives you a workflow that mirrors how a person would work through the problem, with an explicit decision at each point.

When Should You Use It?

Prompt chaining works best when you need tight control over multi-step processes, especially when each part depends on what came before. This method lets you move through complex tasks step by step, with each prompt handling its piece and adjusting based on earlier results.

Take customer support: one prompt figures out what the customer needs, another pulls up their info, and a third writes a personalized response. Each step builds on what came before, making the final answer more helpful.

This approach also shines in situations where different steps might need different handling. In medical questions, for instance, one prompt might look at symptoms while another suggests next steps like tests. Because an earlier step can decide which prompt runs next, the chain adapts to each case instead of forcing every request down the same path.
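Here is a rough sketch of that kind of branching. It assumes a first prompt that returns a label and a lookup table that picks the next prompt. Everything here is hypothetical: the labels, the keyword-based `classify` stand-in, and the two handler functions would each be a model call in practice.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def classify(question: str) -> str:
    """Hypothetical stand-in for a classification prompt that labels the request."""
    text = question.lower()
    return "next_steps" if ("test" in text or "scan" in text) else "symptoms"


# Hypothetical stand-ins for two specialised prompts; real versions would call a model.
def review_symptoms(question: str) -> str:
    return f"[symptom review] {question}"


def suggest_next_steps(question: str) -> str:
    return f"[next-step suggestions] {question}"


ROUTES: Dict[str, Handler] = {
    "symptoms": review_symptoms,
    "next_steps": suggest_next_steps,
}


def route(question: str) -> str:
    """Run the classifier, then the prompt its label selects (default: symptoms)."""
    label = classify(question)
    handler = ROUTES.get(label, review_symptoms)
    return handler(question)


print(route("Should I get a blood test?"))
# prints: [next-step suggestions] Should I get a blood test?
```

Keeping the routes in a dictionary makes adding a new branch a one-line change. Unknown labels fall back to a default handler rather than crashing the chain.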

Building Effective Prompt Chains

Let's walk through how to create prompt chains that work well. You'll need to break down your task and keep track of information between steps.

1. Breaking Down the Task

Start by splitting your big task into clear pieces. For a customer support workflow, you might break it down like this:

- Find the main issue: Pick out keywords that show what the customer needs

- Create a basic response: Pull up or write a response that fits the issue

- Make it personal: Add the customer's name, order details, and other specific info

Each of these becomes one prompt in your chain, with each step's output flowing into the next one.
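The three prompts above might be wired together as shown below. This is a sketch under assumptions: the template wording is illustrative, and `llm` is any caller-supplied function that sends a prompt and returns text. That could be a real model client, or the hypothetical `fake_llm` stand-in used here for a dry run.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # any function that sends a prompt and returns text

# Illustrative templates, one per step of the chain.
FIND_ISSUE = "Name the customer's main issue in a few words.\n\nMessage: {message}"
BASE_REPLY = "Write a short, polite support reply for this issue: {issue}"
PERSONALIZE = "Rewrite this reply for {name}, mentioning order {order_id}.\n\nReply: {reply}"


def support_chain(llm: LLM, message: str, name: str, order_id: str) -> str:
    issue = llm(FIND_ISSUE.format(message=message))  # step 1: find the main issue
    reply = llm(BASE_REPLY.format(issue=issue))  # step 2: uses step 1's output
    # Step 3: personalize the step 2 reply with customer details.
    return llm(PERSONALIZE.format(name=name, order_id=order_id, reply=reply))


# Fake model for a dry run: records each prompt and returns a numbered placeholder.
sent: List[str] = []


def fake_llm(prompt: str) -> str:
    sent.append(prompt)
    return f"<output {len(sent)}>"


print(support_chain(fake_llm, "Where is my parcel?", "Ana", "A-1001"))  # prints: <output 3>
```

Passing the model in as a parameter makes each step easy to test offline before you connect a real provider.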

2. Planning Inputs and Outputs

Every step needs to know what it's getting and what it should produce. This helps keep the whole process running smoothly and makes it easier to fix problems when they pop up.

Here's what that might look like:

- Step 1 gives us: "Customer asking about product stock"

- Step 2 takes that and makes: A basic stock check response

- Step 3 uses both to create: A personal response with the customer's details
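One way to make those inputs and outputs explicit is to give each step a small typed result. The sketch below uses Python dataclasses. The class names, fields, and keyword-based stand-in logic are hypothetical, and each function would wrap one prompt in a real chain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    """Step 1 output: what the customer is asking about."""
    category: str  # e.g. "stock_inquiry"
    summary: str


@dataclass(frozen=True)
class Draft:
    """Step 2 output: a generic reply for that category."""
    text: str


# Hypothetical stand-in implementations; each would wrap one prompt in a real chain.
def classify_issue(message: str) -> Issue:
    category = "stock_inquiry" if "stock" in message.lower() else "general"
    return Issue(category=category, summary=message.strip())


def draft_response(issue: Issue) -> Draft:
    if issue.category == "stock_inquiry":
        return Draft("Let me check current stock levels for you.")
    return Draft("Thanks for getting in touch.")


def personalize(issue: Issue, draft: Draft, customer_name: str) -> str:
    # Step 3 consumes both earlier outputs, as in the list above.
    return f"Hi {customer_name}, {draft.text} (Re: {issue.summary})"


issue = classify_issue("Is the desk lamp in stock?")
print(personalize(issue, draft_response(issue), "Sam"))
# prints: Hi Sam, Let me check current stock levels for you. (Re: Is the desk lamp in stock?)
```

Step 3's signature names both earlier results. If they don't match, you get an error at the step boundary rather than a vague answer at the end.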

Prompt chaining examples

Let's see how this works in practice with report writing:

- Step 1 - Finding Key Points: Prompt: "Pull out the main findings from Q3 sales." Result: "Q3 saw 12% more sales, mostly from product Y."

- Step 2 - Making Suggestions: Prompt: "Based on Q3 results, what should we do next?" Result: "Ramp up product Y production and look for new markets."

- Step 3 - Writing It Up: Prompt: "Put together a report with these findings and ideas." Result: A clear report that ties everything together.

Each prompt has its job, and they work together to create something useful.
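The report workflow can also be expressed as data. Each step names its output, and later templates refer to earlier outputs by name. This sketch uses Python `str.format` placeholders and a caller-supplied `llm` function. The `first_line` fake model and the sample CSV data are hypothetical and exist only so the flow can run without a model.

```python
from typing import Callable, Dict, List, Tuple

LLM = Callable[[str], str]

# (output name, prompt template); templates may reference any earlier output by name.
REPORT_STEPS: List[Tuple[str, str]] = [
    ("findings", "Pull out the main findings from Q3 sales.\n\nData:\n{sales_data}"),
    ("suggestions", "Based on Q3 results, what should we do next?\n\nResults:\n{findings}"),
    ("report", "Put together a report with these findings and ideas.\n\n"
               "Findings:\n{findings}\n\nIdeas:\n{suggestions}"),
]


def run_steps(llm: LLM, steps: List[Tuple[str, str]], inputs: Dict[str, str]) -> Dict[str, str]:
    """Fill each template from everything produced so far, then store the result."""
    state = dict(inputs)
    for name, template in steps:
        state[name] = llm(template.format(**state))
    return state


def first_line(prompt: str) -> str:
    # Fake model: echoes the instruction line so the data flow is visible.
    return prompt.splitlines()[0]


result = run_steps(first_line, REPORT_STEPS, {"sales_data": "region,units\nnorth,120"})
print(result["report"])  # prints: Put together a report with these findings and ideas.
```

With this layout, adding a step is a list edit. A template that references an output no earlier step has produced fails immediately with a `KeyError`.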

Optimize your prompt chains

1. Focus and Clarity: Each prompt should handle one clear task. Like a good recipe, simpler steps lead to better results:

- Extract the issue: "Order delayed" rather than "Customer seems unhappy about timing and wants an update about their order status"

- Keep outputs clean: Pass forward only what the next step needs

- Use consistent formats: If step 2 expects a category label, step 1 should provide exactly that
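For the consistent-format point, here is a sketch of how step 1 can be held to an exact label set before step 2 sees it. The label names and prompt wording are hypothetical. The idea is to list the allowed values in the prompt, then normalize and check the reply in code.

```python
# Hypothetical label set that step 2 expects.
ALLOWED_LABELS = ("order_delayed", "refund_request", "stock_inquiry")

# Step 1's prompt names the exact labels step 2 expects.
CLASSIFY_PROMPT = (
    "Classify the customer's main issue. Reply with exactly one of: "
    + ", ".join(ALLOWED_LABELS)
    + ".\n\nMessage: {message}"
)


def normalize_label(raw: str) -> str:
    """Coerce step 1's raw text into one allowed label, or fail loudly."""
    label = raw.strip().lower().rstrip(".").replace(" ", "_")
    if label not in ALLOWED_LABELS:
        raise ValueError(f"step 1 returned an unexpected label: {raw!r}")
    return label


print(normalize_label(" Order delayed. "))  # prints: order_delayed
```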

2. Error Handling: Build safeguards into your chain:

- Add validation checks between steps

- Create fallback responses for common issues

- Include clear error messages that help identify where things went wrong
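The safeguards above might be combined into a small wrapper like this one. It is a sketch, not a library API. `run_step`, `ChainStepError`, and the default retry count are hypothetical names and values chosen for illustration.

```python
from typing import Callable, Optional


class ChainStepError(Exception):
    """Error that records which step of the chain failed."""

    def __init__(self, step: str, reason: str) -> None:
        super().__init__(f"step '{step}' failed: {reason}")
        self.step = step


def run_step(
    name: str,
    fn: Callable[[str], str],
    data: str,
    validate: Callable[[str], bool],
    fallback: Optional[str] = None,
    retries: int = 1,
) -> str:
    """Run one step, retry on invalid output, then fall back or raise."""
    output = ""
    for _ in range(max(retries, 0) + 1):
        output = fn(data)
        if validate(output):  # validation check between steps
            return output
    if fallback is not None:  # fallback response for a known failure mode
        return fallback
    raise ChainStepError(name, f"invalid output {output!r}")


# Usage: an empty extraction falls back to a generic label instead of breaking step 2.
label = run_step("extract_issue", lambda text: "", "Where is my order?",
                 validate=lambda out: bool(out.strip()), fallback="unknown")
print(label)  # prints: unknown
```

Without a `fallback`, an invalid output raises `ChainStepError`. Its message and `step` attribute point at the failing step.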

3. Information Management: Balance completeness with efficiency:

- Pass essential context between steps, such as the customer ID, key dates, and the main issue

- Trim redundant data that won't be used downstream

- Structure data consistently throughout the chain
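Here is a sketch of trimming and structuring context between steps, assuming the chain keeps a shared dictionary. The field names, the `STEP_INPUTS` map, and the sample values are hypothetical. Serialization uses the standard `json` module with sorted keys, so every prompt sees fields in the same order.

```python
import json
from typing import Any, Dict, Iterable

# Hypothetical map of which context fields each downstream step actually reads.
STEP_INPUTS = {
    "draft_response": ["main_issue"],
    "personalize": ["customer_id", "order_date", "main_issue"],
}


def select_context(context: Dict[str, Any], needed: Iterable[str]) -> Dict[str, Any]:
    """Keep only the fields the next step uses; fail if any are missing."""
    keys = list(needed)
    missing = [k for k in keys if k not in context]
    if missing:
        raise KeyError(f"missing context fields: {missing}")
    return {k: context[k] for k in keys}


def as_prompt_block(fields: Dict[str, Any]) -> str:
    """Serialize the same way every time so prompts see a consistent structure."""
    return json.dumps(fields, sort_keys=True)


# Hypothetical sample context for one request.
context = {
    "customer_id": "C-42",
    "order_date": "2024-09-30",
    "main_issue": "order_delayed",
    "raw_transcript": "(long chat log not needed downstream)",
}
print(as_prompt_block(select_context(context, STEP_INPUTS["personalize"])))
# prints: {"customer_id": "C-42", "main_issue": "order_delayed", "order_date": "2024-09-30"}
```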

4. Context Preservation: Critical details should flow through the entire process:

- Maintain key identifiers across all steps

- Track important timestamps or version numbers

- Keep relevant history for the final output
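One way to carry identifiers, versions, and history through a whole run is a single context object passed to every step. The `ChainContext` class and its fields below are hypothetical. The sketch assumes each run has one request ID, one customer ID, and one prompt-set version.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass
class ChainContext:
    """Carried through every step so identifiers and history are never dropped."""
    request_id: str  # key identifier for this run
    customer_id: str  # key identifier for the customer
    prompt_version: str  # which version of the prompt set produced this run
    # Each entry is (step name, UTC timestamp, output).
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def record(self, step: str, output: str) -> None:
        """Append a step's output with a UTC timestamp."""
        self.history.append((step, datetime.now(timezone.utc).isoformat(), output))

    def last_output(self) -> str:
        return self.history[-1][2] if self.history else ""

    def trace(self) -> str:
        """Compact step history for the final output or for debugging."""
        return " -> ".join(step for step, _, _ in self.history)


ctx = ChainContext(request_id="R-001", customer_id="C-42", prompt_version="v3")
ctx.record("extract_issue", "order_delayed")
ctx.record("draft_response", "Sorry your order is running late.")
print(ctx.request_id, ctx.prompt_version, ctx.trace())
# prints: R-001 v3 extract_issue -> draft_response
```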

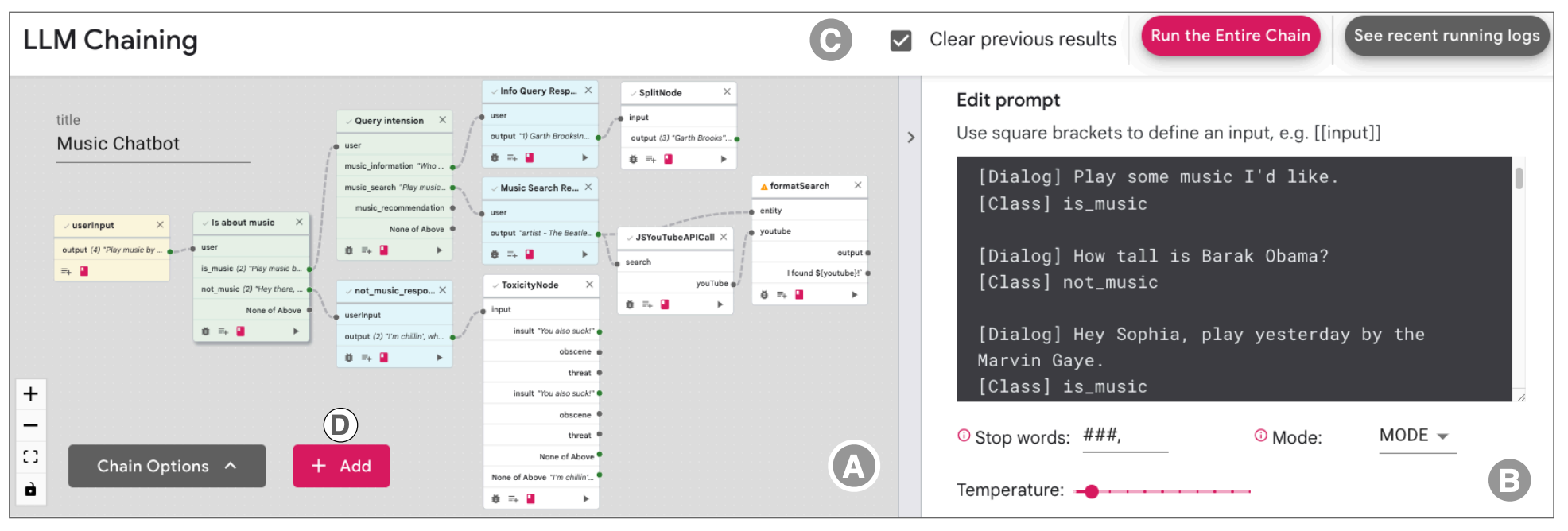

Using Portkey AI for Prompt Management

When running many prompt chains, you need tools that can handle the complexity. Portkey's LLM Gateway helps keep track of context between prompts without repeating yourself.

Portkey allows you to create multiple prompt templates that work together by passing the output of one template as the input to another. Each prompt template can serve a different role, such as extracting key details, refining responses, or guiding decision-making.

You can then string these templates together in your code, making one prompt completion call after another. This structured approach supports dynamic, multi-step AI workflows with better context retention and more efficient automation, without hand-managing every link in the chain yourself.

This flexibility helps businesses build solid workflows that can handle complex tasks well. Through Portkey's prompt library, developers can manage and improve their prompts in one place, creating better chains that get work done faster and more accurately.

Common Questions About Prompt Chaining

1. What exactly is prompt chaining?

It connects prompts in a sequence where each step's output helps the next step do its job. This works great for breaking big tasks into manageable pieces.

2. How does prompt chaining improve task automation?

Prompt chaining enhances accuracy, flexibility, and modularity in workflows. Each prompt in the chain can be independently optimized, providing an overall boost in efficiency and reliability.

3. Can prompt chaining retain context between prompts?

Yes. Context is retained by passing the relevant outputs and identifiers from one prompt into the next. Portkey's prompt engineering capabilities help keep that essential information available across the chain, so each prompt builds on the previous one.

Prompt chaining is a practical way to tackle complex tasks. Check out our prompt engineering guide to learn more, and see how Portkey can make your workflows smoother.