Prompt engineering vs. fine-tuning: What’s better for your use case?

Discover the key differences between prompt engineering and model fine-tuning. Learn when to use each approach, how to measure effectiveness and the best tools for optimizing LLM performance.

You're building an app with large language models, and you've hit that familiar crossroads: Do you spend time crafting better prompts, or should you roll up your sleeves and fine-tune the model?

Prompt engineering vs. fine-tuning: Let's break down both approaches and figure out what makes sense for your specific needs.

Prompt engineering

Prompt engineering optimizes pre-trained LLM outputs through precise input instructions for tasks like summarization, classification, and question answering - all without modifying the model's parameters.

Development teams often choose prompt engineering for its rapid iteration cycles. You can design, test, and refine prompts within hours, making it particularly valuable during initial prototyping phases. The ability to repurpose a single model for multiple tasks through varied prompts adds notable flexibility to your tech stack.

Iterating on prompts can be easier with a Prompt Playground. Switch between models, adjust parameters through an intuitive interface, and let Playground handle all the versioning automatically. You can compare different prompt versions side by side, track performance across various test cases, and identify which variations consistently produce the best outputs. Whether you're tweaking temperature settings, adjusting system prompts, or testing entirely new approaches, you'll see the impact instantly.

Still, prompt engineering brings specific technical challenges. Small variations can significantly impact output consistency. Since you're working within the boundaries of the model's pre-trained knowledge, some specialized tasks might remain out of reach. The non-deterministic nature of LLM responses also makes systematic debugging of edge cases more complex than traditional software testing.

In production environments, prompt engineering powers zero-shot and few-shot learning applications in customer service automation. Many teams use chain-of-thought prompting to break down complex reasoning tasks into manageable steps. The technique also proves valuable in RAG systems, where dynamically generated prompts enhance information retrieval and response generation.

Fine-tuning

Model fine-tuning updates pre-trained LLM weights using labeled, domain-specific data. This process reshapes the model's underlying knowledge representation to excel at specialized tasks and domain-specific language patterns. The technique builds upon transfer learning principles, preserving the model's broad capabilities while sharpening its performance in targeted areas.

Fine-tuning gives engineering teams granular control over model behavior and output quality. The process substantially improves the handling of domain-specific terminology and concepts, making it particularly valuable in specialized fields. Models gain the ability to understand industry jargon, follow domain-specific reasoning patterns, and maintain high accuracy even with unusual inputs or edge cases.

The technical demands of fine-tuning shape its practical implementation. Teams need access to substantial computing resources and high-quality training data. Development cycles stretch longer as models require careful training and validation. Once deployed, fine-tuned models need regular maintenance - retraining with new data to maintain performance and adapt to shifting patterns in your domain.

See how vision-enhanced fine-tuning can improve your AI application.

Prompt engineering vs. fine-tuning - Key considerations for developers

| Factor | Prompt Engineering | Fine-tuning |

|---|---|---|

| Task Complexity | ||

| Simple Tasks |

✓ Excellent for summarization, Q&A, basic classification

Best suited for general-purpose tasks

|

Limited advantages over prompt engineering

May be overkill for basic tasks

|

| Complex Tasks |

× Limited by base model capabilities

Cannot exceed original model performance

|

✓ Better for specialized domain tasks

Excels in domain-specific applications

|

| Domain Knowledge |

× Restricted to pre-trained knowledge

Cannot learn new domain patterns

|

✓ Can learn domain-specific patterns

Adapts to specialized terminology and concepts

|

| Resources | ||

| Infrastructure |

✓ No special hardware needed

Works with standard API calls

|

× Requires GPUs/TPUs for training

Significant computing resources needed

|

| Storage |

✓ Minimal storage requirements

Only prompts need to be stored

|

× Large storage for model weights

Multiple GB per model version

|

| Ongoing Costs |

✓ API costs only

Predictable usage-based pricing

|

× Training and maintenance costs

Includes compute, storage, and updates

|

| Development | ||

| Setup Time |

✓ Minutes to hours

Rapid implementation cycles

|

× Days to weeks

Requires extensive preparation

|

| Iteration Speed |

✓ Immediate testing and updates

Quick experimentation cycles

|

× Hours/days per training cycle

Long feedback loops

|

| Debugging |

× Hard to trace output issues

Less predictable behavior

|

✓ More predictable behavior

Clear training signals

|

| Scalability | ||

| Task Diversity |

✓ One model for multiple tasks

Flexible application

|

× Typically task-specific

Limited to trained domains

|

| Data Requirements |

✓ Zero to few examples needed

Minimal data preparation

|

× Large labeled datasets required

Extensive data collection needed

|

| Performance Ceiling |

× Limited by base model

Cannot exceed original capabilities

|

✓ Can exceed base model

Potential for specialized excellence

|

| Maintenance | ||

| Updates |

✓ Quick prompt modifications

Immediate changes possible

|

× Requires periodic retraining

Resource-intensive updates

|

| Version Control |

✓ Simple prompt versioning

Easy to track changes

|

× Complex model versioning

Requires specialized tooling

|

| Deployment |

✓ Lightweight deployment

Simple API integration

|

× Heavy deployment pipeline

Complex infrastructure needed

|

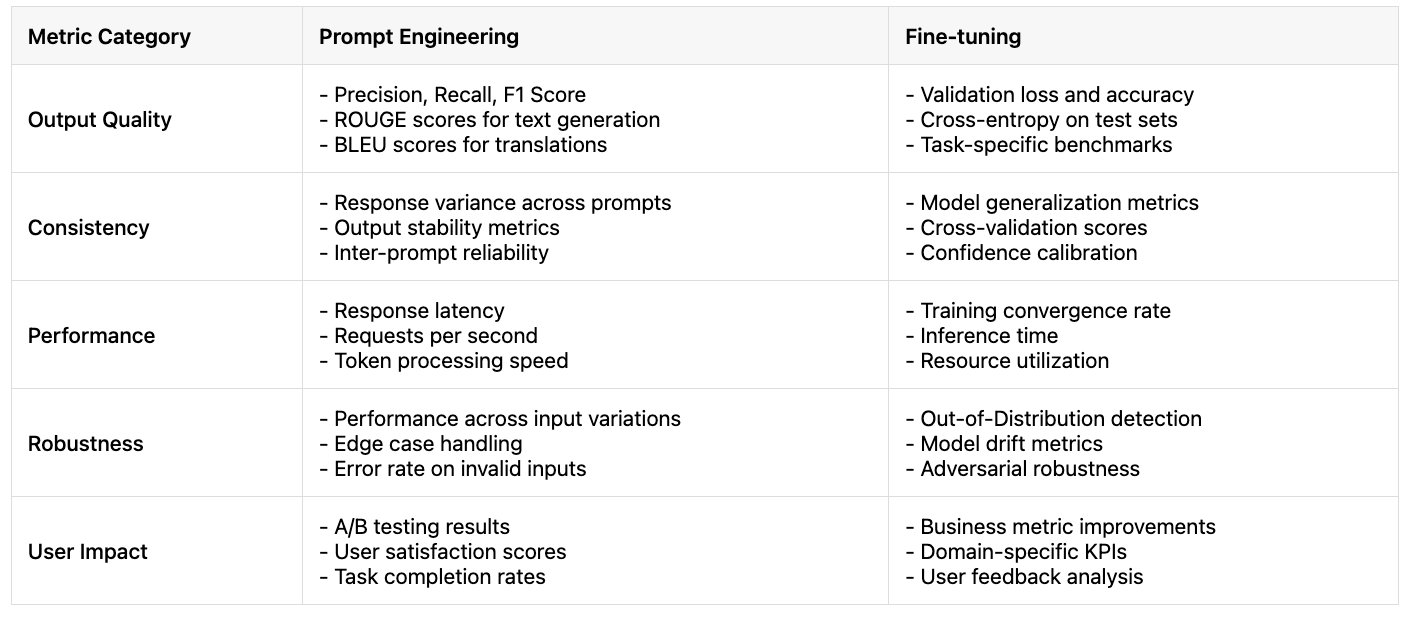

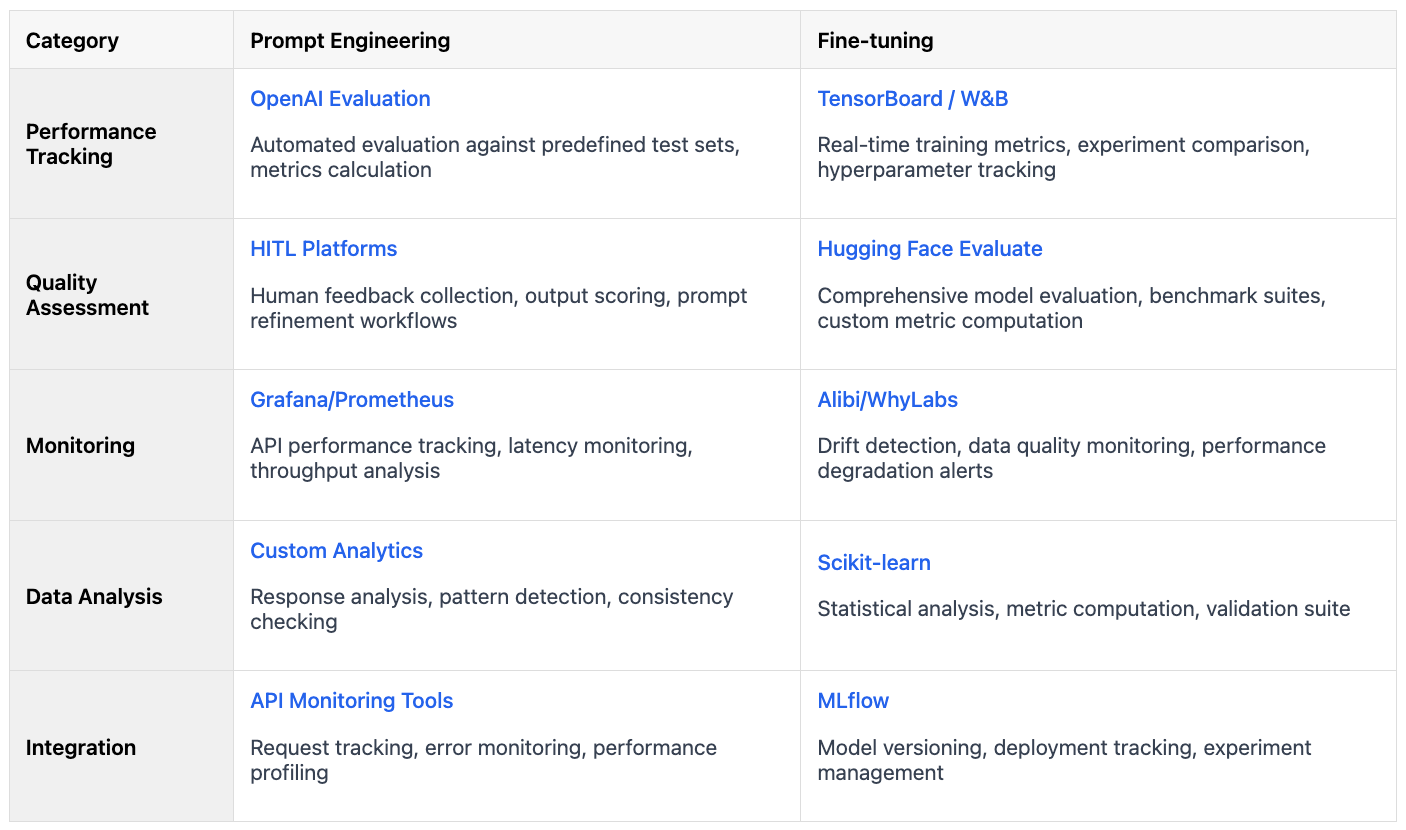

Prompt engineering vs. fine-tuning - How to measure output effectiveness

For prompt engineering, measuring response accuracy requires systematic evaluation using standard NLP metrics. Track precision, recall, and F1 scores for classification tasks, and implement response consistency checks across similar prompts. Performance monitoring should include latency measurements and throughput analysis at your target scale. Quantitative metrics should be supplemented with structured user feedback through controlled A/B tests.

Fine-tuned models require more comprehensive evaluation frameworks. Track validation loss and accuracy during training, but pay special attention to generalization metrics on completely unseen data. Implement drift detection systems to monitor performance degradation over time. OOD detection becomes crucial - test your model against inputs that diverge from the training distribution to understand its operational boundaries.

Both approaches benefit from automated monitoring pipelines that track these metrics continuously in production. Set up alerting thresholds based on your application's requirements and establish clear intervention protocols when performance degrades beyond acceptable levels.

The hybrid approach for developers

The hybrid approach starts with prompt engineering for rapid prototyping and validation. This phase lets you quickly test concepts and refine your approach without significant resource investment. Once you've validated core functionality, transition to fine-tuning for production-grade performance.

A practical example is building a domain-specific question-answering system:

- Use RAG with prompt engineering for efficient document retrieval and context preparation

- Process this context through a fine-tuned model specialized in your domain

- Generate detailed, consistent responses that combine broad knowledge with domain expertise

This approach balances development speed with production performance, leveraging each technique where it works best. The initial prompt engineering phase informs your fine-tuning strategy, while the final hybrid system maintains flexibility for handling edge cases.