Self-Consistency Improves Chain of Thought Reasoning in Language Models - Summary

arXiv URL: https://arxiv.org/abs/2203.11171

Authors: Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed Chi, Sharan Narang, Aakanksha Chowdhery, Denny Zhou

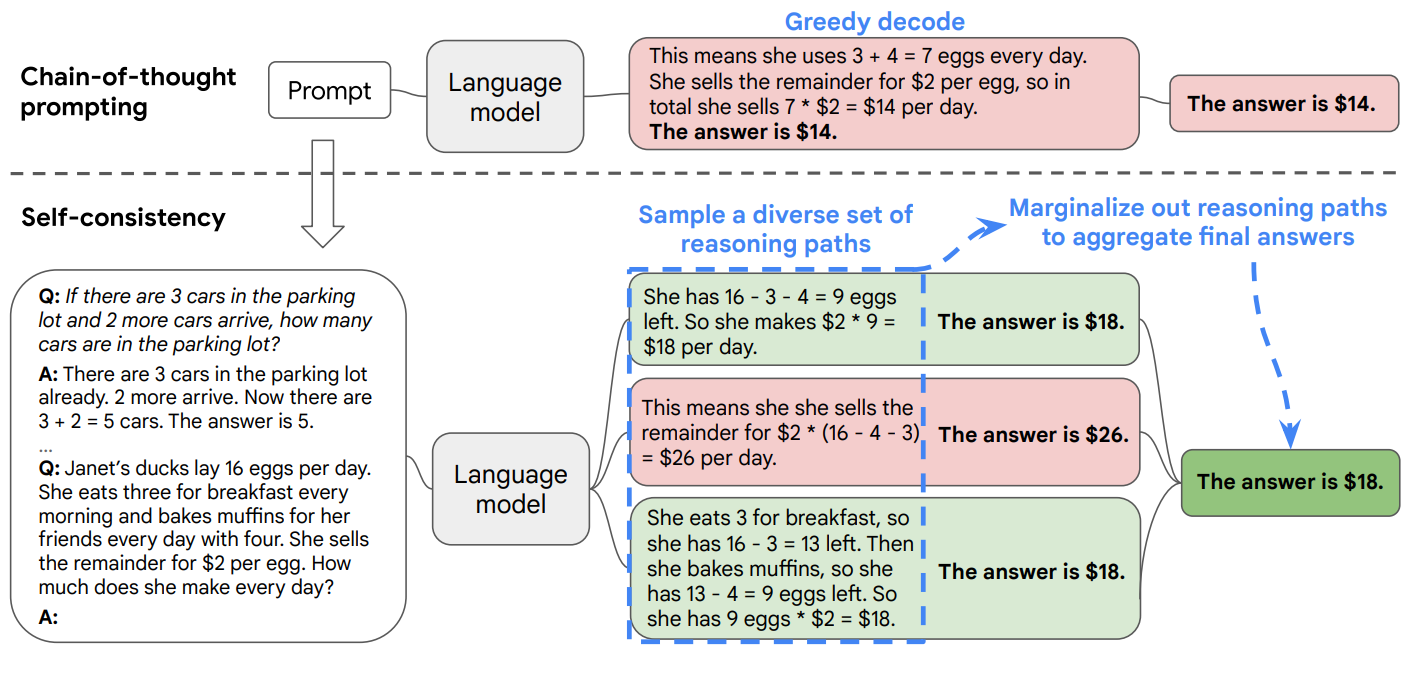

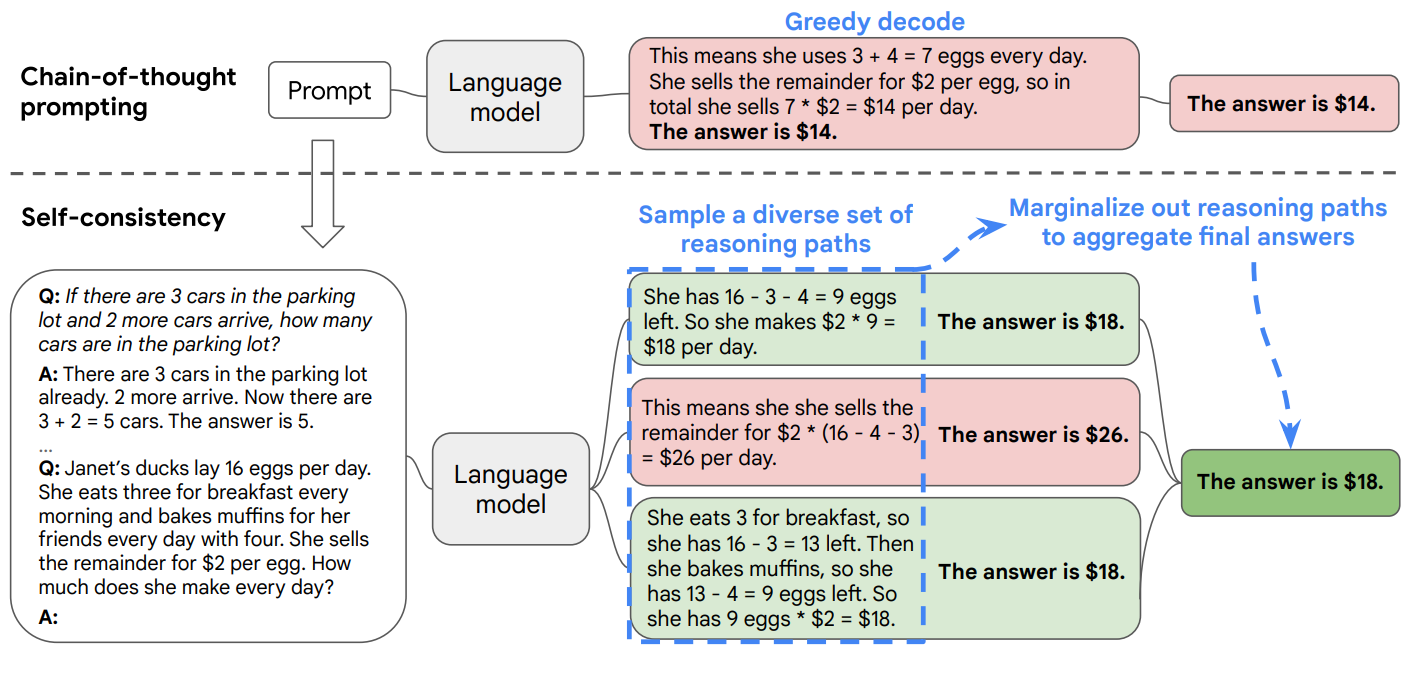

The paper proposes self-consistency, a new decoding strategy that replaces the naive greedy decoding used in chain-of-thought prompting to improve language models on complex reasoning tasks. Self-consistency first samples a diverse set of reasoning paths and then selects the most consistent answer by marginalizing out the sampled reasoning paths; in its simplest form, this is a majority vote over the final answers of the sampled paths. The proposed method boosts the performance of chain-of-thought prompting on popular arithmetic and commonsense reasoning benchmarks by a significant margin, including GSM8K (+17.9%), SVAMP (+11.0%), AQuA (+12.2%), StrategyQA (+6.4%), and ARC-challenge (+3.9%).

Key Insights & Learnings:

- Self-consistency is a novel decoding strategy that improves the performance of chain-of-thought prompting in language models for complex reasoning tasks.

- The proposed method first samples a diverse set of reasoning paths and then selects the most consistent answer by marginalizing out the sampled reasoning paths.

- Self-consistency boosts the performance of chain-of-thought prompting on popular arithmetic and commonsense reasoning benchmarks by a significant margin.

- Self-consistency achieves new state-of-the-art levels of performance when used with PaLM-540B or GPT-3.

What are the advantages of self-consistency on chain of thought reasoning?

- Self-consistency leverages the intuition that a complex reasoning problem often admits several different ways of thinking that all lead to the same correct answer

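- More robust than single-path (greedy) decoding, because a mistake in one sampled reasoning path can be outvoted by other paths that reach the correct answer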

- The proposed method is entirely unsupervised, works off-the-shelf with pre-trained language models, requires no additional human annotation, and avoids any additional training, auxiliary models, or fine-tuning.

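- Can provide uncertainty estimates based on answer consistency: the fraction of sampled paths that agree on the final answer tends to be higher when that answer is correct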
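The consistency-based uncertainty estimate in the last bullet can be computed directly from the sampled answers. The sketch below is an assumption-laden illustration: the helper names and the 0.5 abstention threshold are hypothetical choices, not values from the paper.

```python
from collections import Counter
from typing import List, Tuple


def majority_with_consistency(answers: List[str]) -> Tuple[str, float]:
    """Return the majority answer and the fraction of sampled paths that agree with it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)


def is_low_confidence(answers: List[str], threshold: float = 0.5) -> bool:
    """Flag a prediction as uncertain when agreement falls below a (hypothetical) threshold."""
    _, consistency = majority_with_consistency(answers)
    return consistency < threshold


if __name__ == "__main__":
    sampled = ["18", "18", "26", "18", "17"]  # final answers from 5 hypothetical paths
    print(majority_with_consistency(sampled))  # ('18', 0.6)
    print(is_low_confidence(["18", "26", "17"]))  # True: only 1 of 3 paths agree
```

Because the agreement fraction is a by-product of the vote, it adds no extra model calls; how to pick the threshold would depend on the task.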

What are the limitations of self-consistency?

- Requires more computation than single-path methods, since the cost grows with the number of sampled reasoning paths

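- In its basic form, only applicable to problems whose final answer comes from a fixed answer set (e.g., a number or a multiple-choice option), so that answers from different paths can be compared and counted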

- Language models can sometimes generate incorrect or nonsensical reasoning paths
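The fixed-answer-set limitation shows up as soon as answers are counted: the vote only works if answers from different paths can be matched exactly. The sketch below assumes numeric answers and uses a hypothetical `normalize_numeric` helper to map surface variants onto one canonical form before voting; answers that are not numbers (such as free-form text) fall outside the answer set and are dropped.

```python
from collections import Counter
from typing import List, Optional


def normalize_numeric(answer: str) -> Optional[str]:
    """Map surface variants such as "$18", "18.0", and "18" to one canonical string.

    Returns None for answers that are not numbers (e.g. free-form text).
    """
    cleaned = answer.strip().replace("$", "").replace(",", "")
    try:
        value = float(cleaned)
    except ValueError:
        return None
    return str(int(value)) if value.is_integer() else str(value)


def vote(raw_answers: List[str]) -> Optional[str]:
    """Majority vote over normalized answers, ignoring ones that cannot be normalized."""
    normalized = [n for n in (normalize_numeric(a) for a in raw_answers) if n is not None]
    if not normalized:
        return None
    return Counter(normalized).most_common(1)[0][0]


if __name__ == "__main__":
    raw = ["$18", "18.0", "18", "26", "eighteen"]
    print(vote(raw))  # prints 18; without normalization every raw string is distinct
```

Without the normalization step, the five raw strings would each receive one vote and no answer would win, which is why open-ended outputs need some other notion of consistency.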

Terms Mentioned: chain-of-thought prompting, language models, self-consistency, decoding strategy, reasoning paths, marginalizing, arithmetic reasoning, commonsense reasoning, state-of-the-art

Technologies / Libraries Mentioned: Google Research, UL2-20B, GPT-3-175B, LaMDA-137B, PaLM-540B