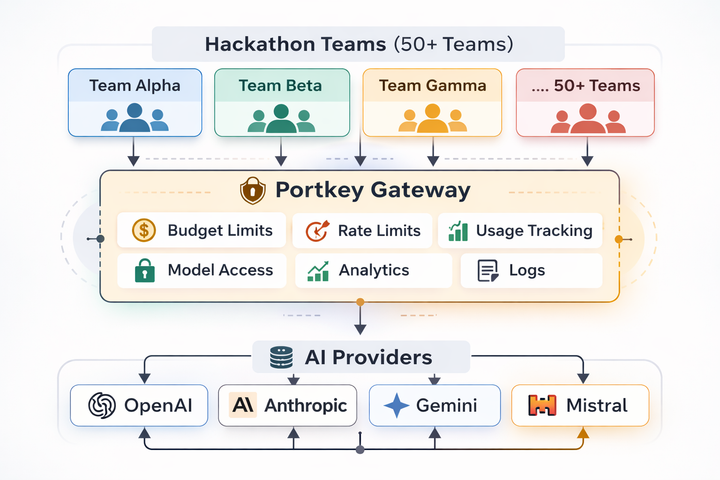

How to host an AI hackathon without losing control of your keys or budget

Running an AI hackathon means providing dozens (or hundreds) of teams with access to expensive LLM APIs while maintaining cost control, fair usage, and visibility into what's happening.

Without proper infrastructure, you risk:

- Budget blowouts: A single runaway script consuming your entire API budget
- No