Open Sourcing Guardrails on the Gateway Framework

We are solving the *biggest missing component* in taking AI apps to production: you can now enforce LLM behavior and route requests with precision, in one go.

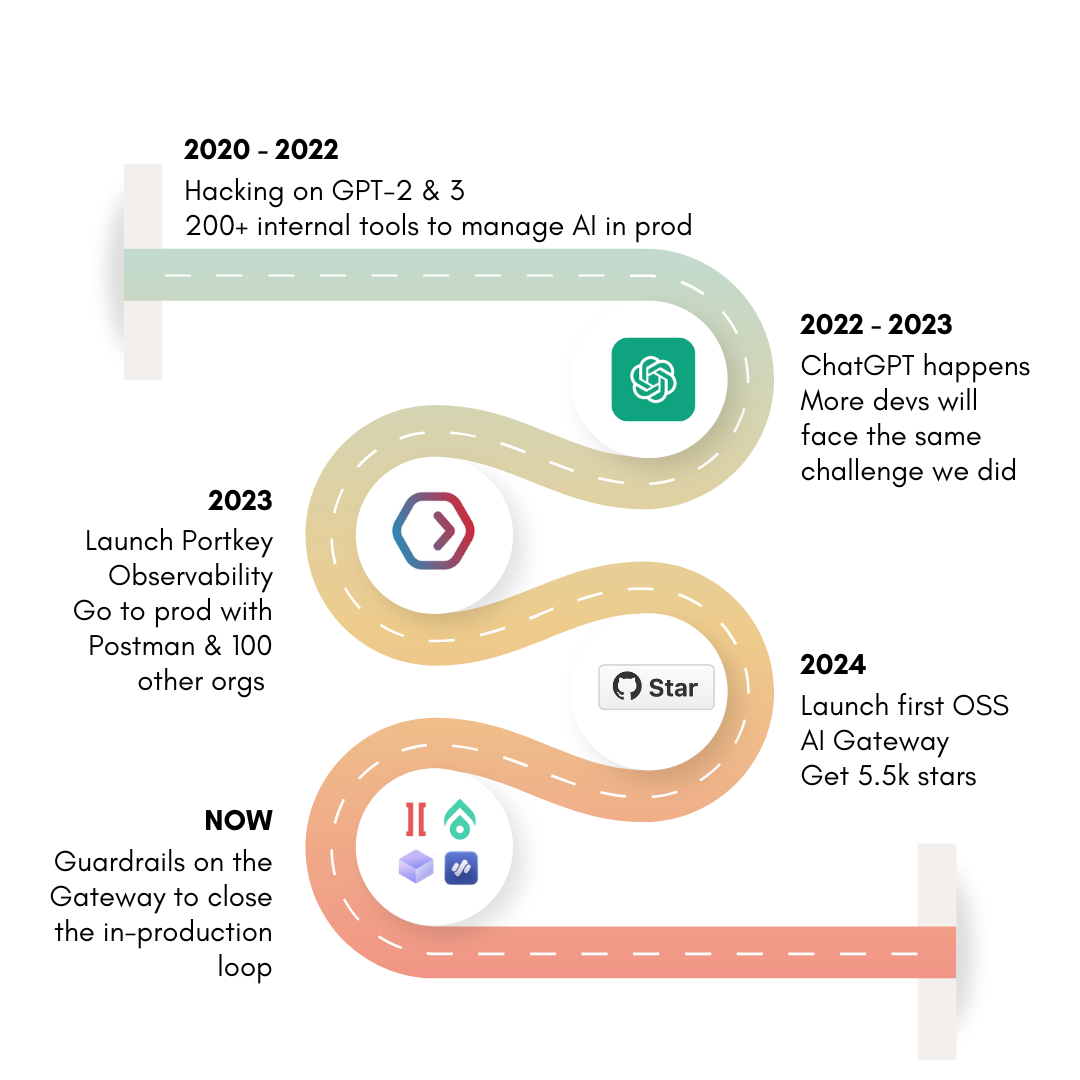

About a year ago, we started Portkey to solve a problem we had faced ourselves: the lack of adequate tooling to confidently put LLM apps in production. It showed up in many ways back when we were experimenting with GPT-2 and GPT-3 and building apps at million-user scale:

- There was no way to debug LLM requests

- You couldn't see request-level cost data

- You couldn't iterate fast on prompts without involving devs

- When you wanted to try out a new model, it took forever to integrate

- And finally, the LLM output itself was often not reliable enough to use in production

We started building Portkey to solve these "ops" challenges, and we did it our own way, with an opinionated, open source AI Gateway: https://git.new/portkey

Portkey Today Moves the Needle

With the Portkey Gateway, we now process billions of LLM tokens every day and help hundreds of companies take their AI apps to production.

Teams use Portkey to debug fast, iterate fast, handle API failures, and much more!

But the Problem Remains

We built the Gateway so that it does not affect core LLM behavior in any way. Portkey performs request/response transformations across 200+ different LLMs today while making requests to them more robust. But the core LLM behavior itself remains as unpredictable as ever:

- Outputs can be complete hallucinations or factually inaccurate

- Outputs could be biased

- Outputs could violate privacy or data protection norms

- Outputs could be harmful to the org

This is the Biggest Missing Component

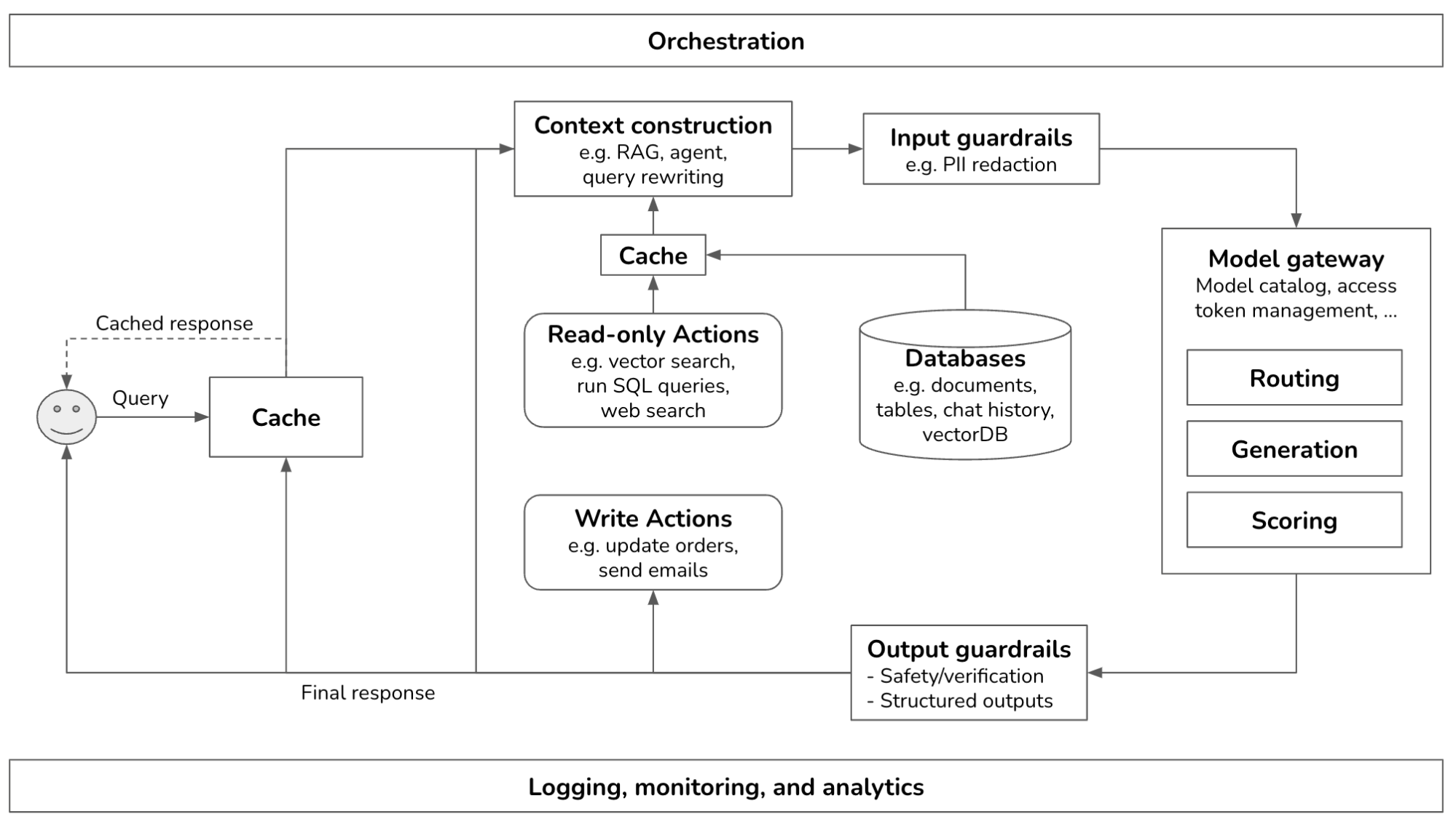

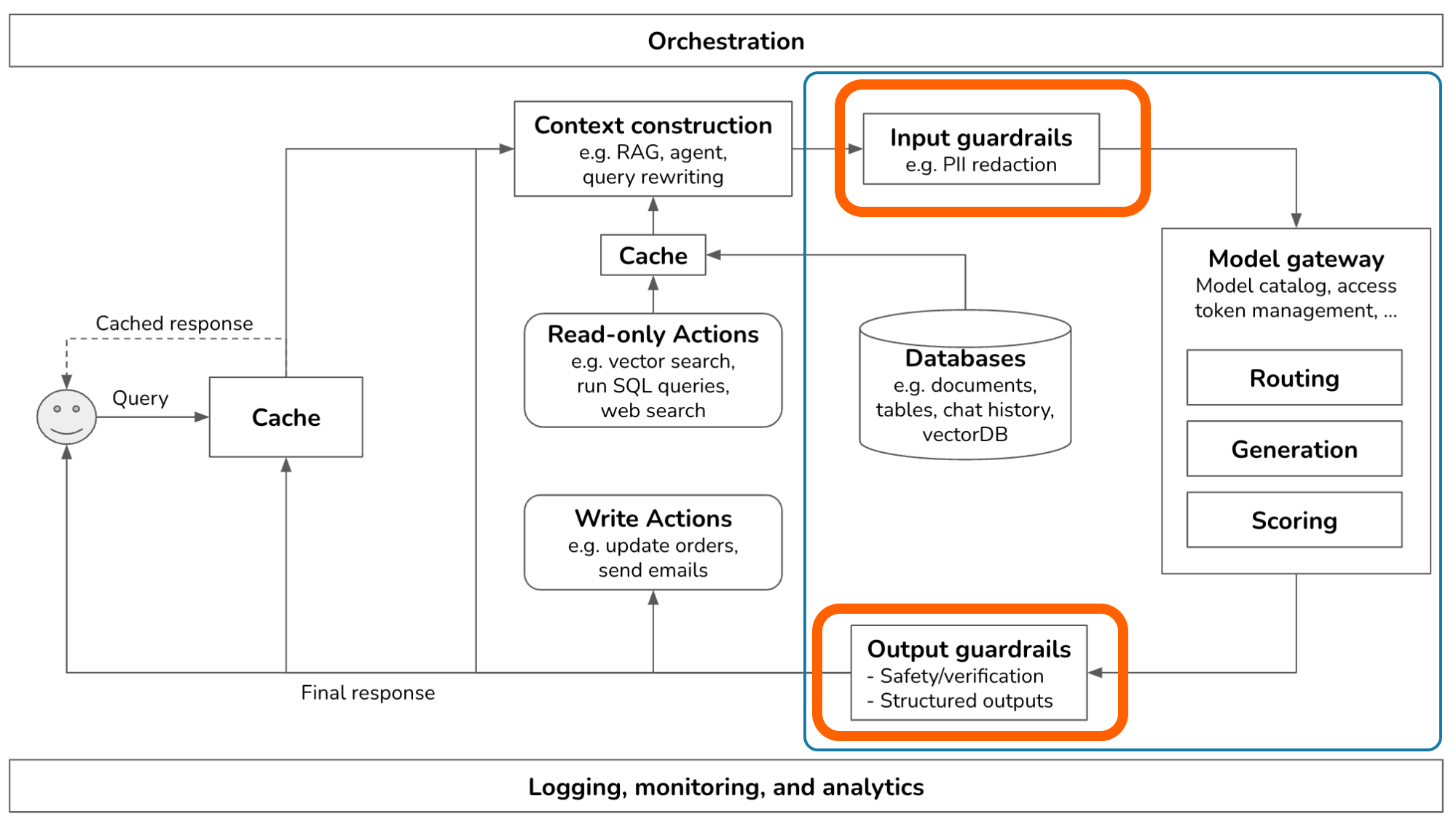

When it comes to taking AI apps to production, this, we believe, is THE BIGGEST missing component. Chip Huyen recently wrote a definitive guide on "Building a Gen AI Platform", and she points it out as well:

Now, look closely:

What Happens When You Bring Guardrails Inside the Gateway?

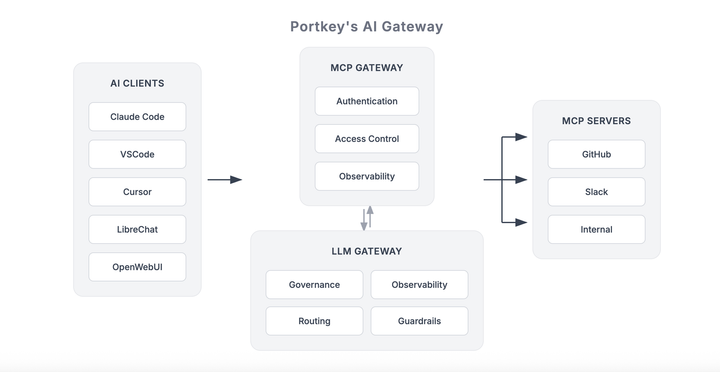

Guardrails are systems that help control and guide LLM outputs. By integrating them into the Gateway, we can create a powerful solution:

You can orchestrate your LLM requests based on the Guardrail's verdict, whether the check runs on the input or on the output, and handle LLM behavior in real time EXACTLY as you want.
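To make the idea concrete, here is a minimal Python sketch of verdict-based orchestration. It is an illustration only, not Portkey's implementation or API: every name below (`Verdict`, `route`, `no_email`, `max_length`, the `model-a`/`model-b` providers) is hypothetical, the guardrails are toy checks, and the "providers" are stub functions standing in for real LLM calls. Input guardrails can deny a request before any model is called; output guardrails can reject a response and trigger a fallback to the next provider.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Verdict:
    passed: bool
    reason: str = ""

# Hypothetical guardrail interface: take some text, return a pass/fail verdict.
Guardrail = Callable[[str], Verdict]
# Hypothetical provider: a (name, call) pair, where call maps a prompt to a completion.
Provider = Tuple[str, Callable[[str], str]]

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")

def no_email(text: str) -> Verdict:
    """Toy privacy check: fail if the text contains something shaped like an email address."""
    if EMAIL_RE.search(text):
        return Verdict(False, "possible email address")
    return Verdict(True)

def max_length(limit: int) -> Guardrail:
    """Toy input check: fail if the text is longer than `limit` characters."""
    def check(text: str) -> Verdict:
        if len(text) > limit:
            return Verdict(False, f"longer than {limit} characters")
        return Verdict(True)
    return check

def first_failure(text: str, checks: List[Guardrail]) -> Optional[str]:
    """Return the reason from the first failing check, or None if all pass."""
    for check in checks:
        verdict = check(text)
        if not verdict.passed:
            return verdict.reason
    return None

@dataclass
class GatewayResult:
    status: str                     # "ok", "input_denied", or "output_denied"
    text: str                       # the completion, or the denial reason
    provider: Optional[str] = None  # which provider produced an accepted output

def route(prompt: str, providers: List[Provider],
          input_checks: List[Guardrail],
          output_checks: List[Guardrail]) -> GatewayResult:
    # Input guardrails run first; a failure stops the request before any model call.
    reason = first_failure(prompt, input_checks)
    if reason is not None:
        return GatewayResult("input_denied", reason)
    last_reason = "no providers configured"
    for name, call in providers:
        output = call(prompt)
        # Output guardrails decide whether to return this answer or fall back.
        reason = first_failure(output, output_checks)
        if reason is None:
            return GatewayResult("ok", output, name)
        last_reason = f"{name}: {reason}"
    return GatewayResult("output_denied", last_reason)

if __name__ == "__main__":
    leaky: Provider = ("model-a", lambda p: "Contact me at jane@example.com")
    clean: Provider = ("model-b", lambda p: "Here is a summary without personal data.")
    result = route("Summarize the ticket", [leaky, clean], [max_length(500)], [no_email])
    print(result.status, result.provider)  # ok model-b
```

Falling back to the next provider on a failed output check is just one possible policy in this sketch; a gateway could equally retry the same model, return the verdict to the caller, or log the failure and pass the response through.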

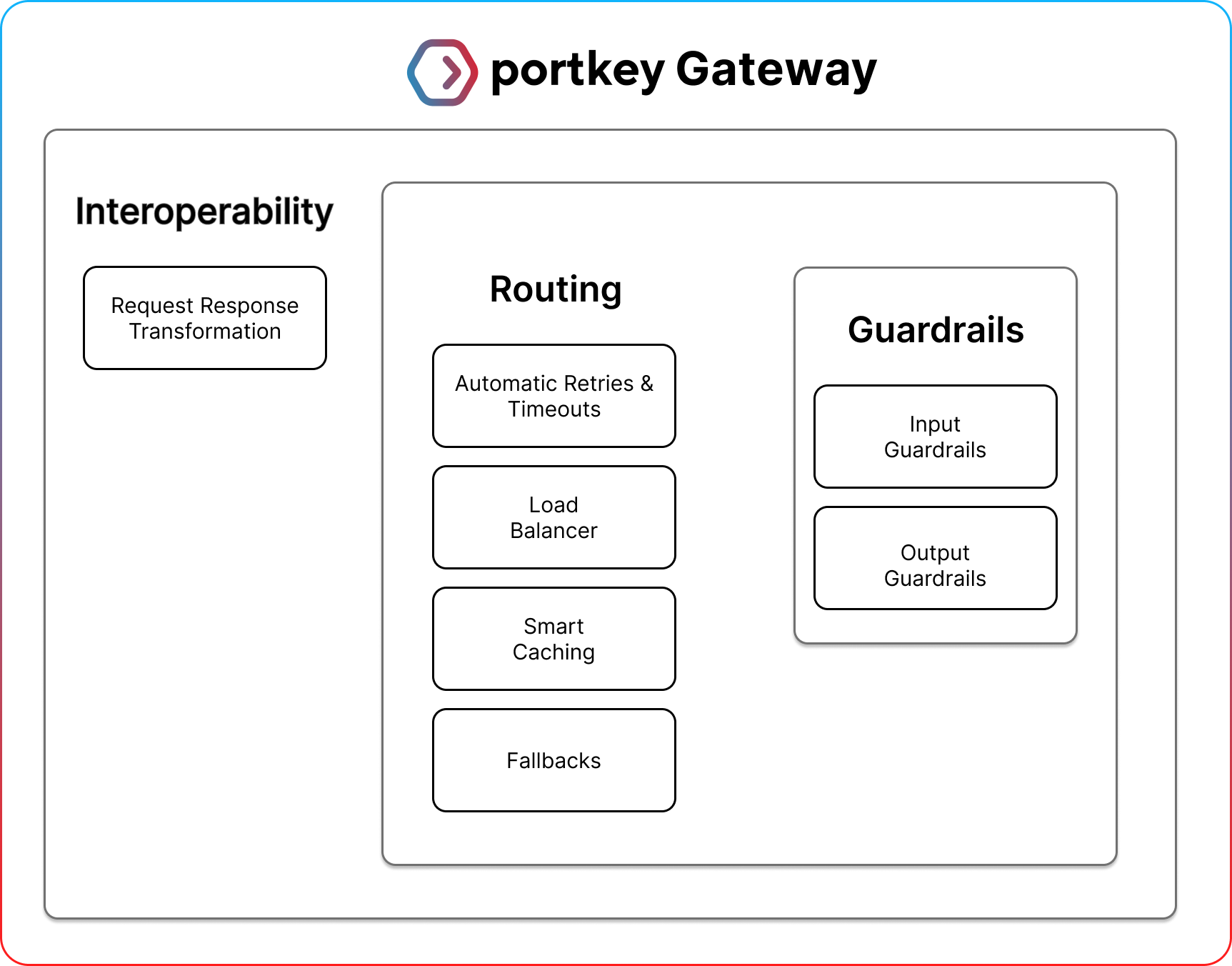

Interoperability, Routing, and Guardrails - combined on the Gateway.

Today, we are excited to announce that we are evolving Portkey's open source AI Gateway to make it work with Guardrails.

But We Are No Experts in Guardrails

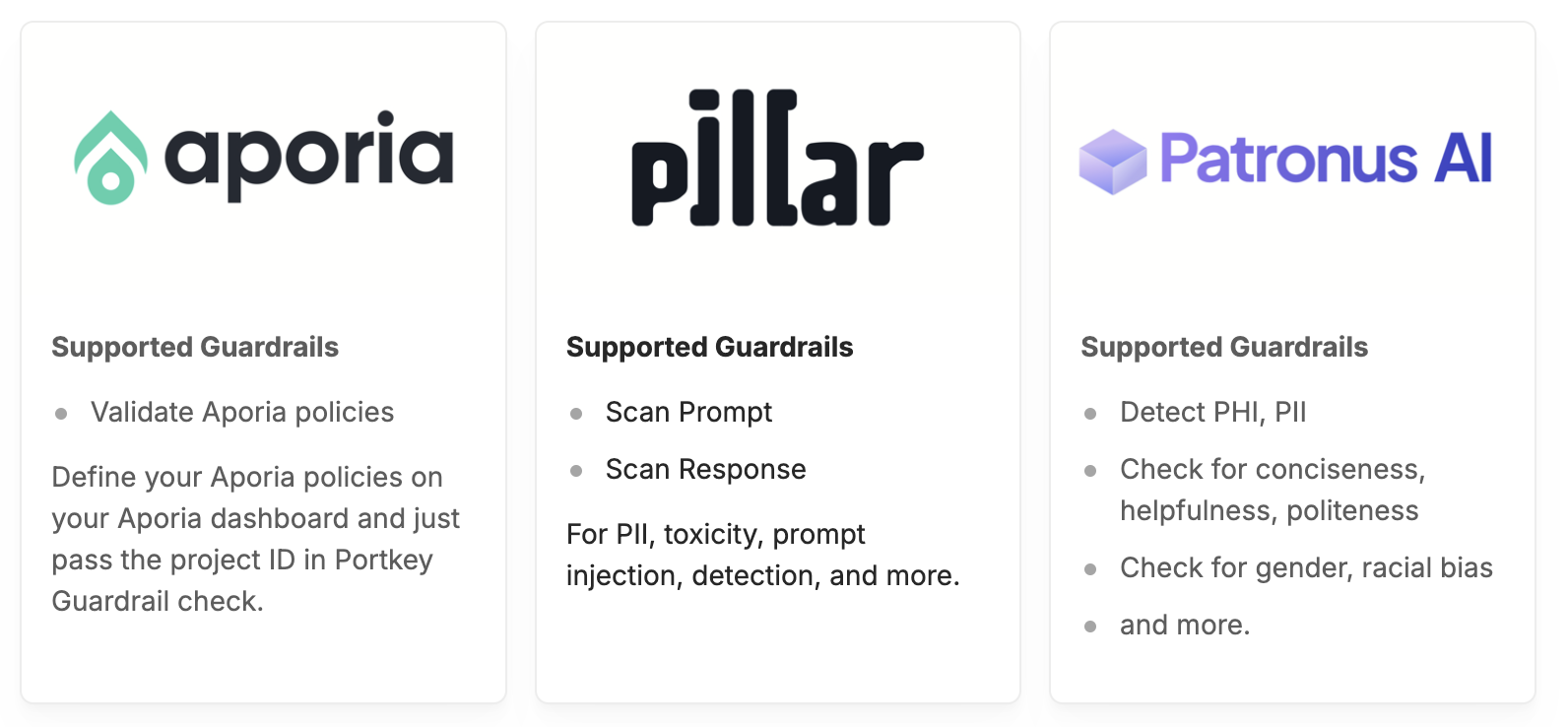

We believe the Gateway is critical tooling that every AI developer will need. However, we recognize that steering and evaluating LLM behavior requires specialized expertise.

That's why we're partnering with some of the world's best AI guardrails platforms to make them available on Portkey Gateway.

Available on Portkey App & on Open Source

Guardrails are now available in our open source repo for you to try out, and for free on Portkey's hosted app.

This is a step towards closing a crucial production gap that so many companies face, and it is just the beginning! As we move forward, continuous learning, adaptation, and collaboration will be key to addressing the complex challenges that lie ahead.

We're excited to see how the community adopts and adapts these concepts, and we look forward to continuing this important conversation about the future of AI APIs!