Prompt engineering techniques for effective AI outputs

Remember when prompt engineering meant just asking ChatGPT to write your blog posts or answer a basic question? Those days are long gone. We're seeing companies hire dedicated prompt engineers now - it's become a real skill in getting large language models (LLMs) to do exactly what you need them to do.

The game has changed even more with expanded prompt limits. You can now get much more detailed in your requests to these models, which opens up some interesting possibilities - if you know how to take advantage of them. To understand prompt engineering in detail, check out the prompt engineering guide.

Getting the most out of conversation-based models comes down to how well you can structure your prompts.

It's a bit tricky to get right, though. Let's walk through the different types of prompt engineering techniques that can help you get better at steering these AI models. Whether you're new to prompt engineering or looking to level up your skills, you'll find something useful here.

tl;dr

| Technique | Purpose | Key Feature | Use Cases |
|---|---|---|---|
| Zero-shot prompting | Direct model response without examples. | Relies on pre-trained knowledge. | Simple Q&A, general tasks. |
| Few-shot prompting | Guides model with a few examples. | Improves contextual understanding. | Sentiment analysis, classification. |
| Chain-of-thought prompting | Encourages step-by-step reasoning. | Solves logical, multi-step problems. | Math, logical reasoning, data interpretation. |
| Instruction-based prompting | Focuses the model on specific tasks. | Clear commands for optimized responses. | Task-specific outputs, content generation. |
| Role-based prompting | Assigns a role to guide response tone/content. | Shapes the response style. | Teaching, professional content. |
| Contextual prompting | Builds context progressively. | Supplies background information for nuanced responses. | Long-form generation, detailed tasks. |
| Meta prompting | Guides the model to design/refine prompts. | Prompts about creating prompts. | Automation of prompt creation. |
| Self-consistency prompting | Selects the most consistent output. | Majority voting for reliability. | Tasks with definitive answers. |
| Generated knowledge prompting | Generates intermediate context before answering. | Encourages preparatory thinking. | Step-by-step guides, context-heavy tasks. |
| Dynamic prompt optimization | Adjusts prompts in real-time. | Refines outputs during interactions. | Adaptive workflows, iterative tasks. |
| Automatic prompt engineering | Programmatically generates/refines prompts. | Reduces manual effort at scale. | Large-scale applications. |
| Multi-prompt fusion | Combines multiple prompts for balance. | Addresses competing priorities in outputs. | Creative + factual tasks. |
| Prompt chaining | Structures interdependent steps. | Output of one prompt becomes input for another. | Document analysis, process workflows. |
| Directional stimulus prompting | Guides tone/behavior with subtle cues. | Provides influence without explicit commands. | Style-guided generation, targeted writing. |
| Graph prompting | Structures relationships in graph format. | Helps interpret dependencies and hierarchies. | Network analysis, relational data. |

Types of prompt engineering techniques

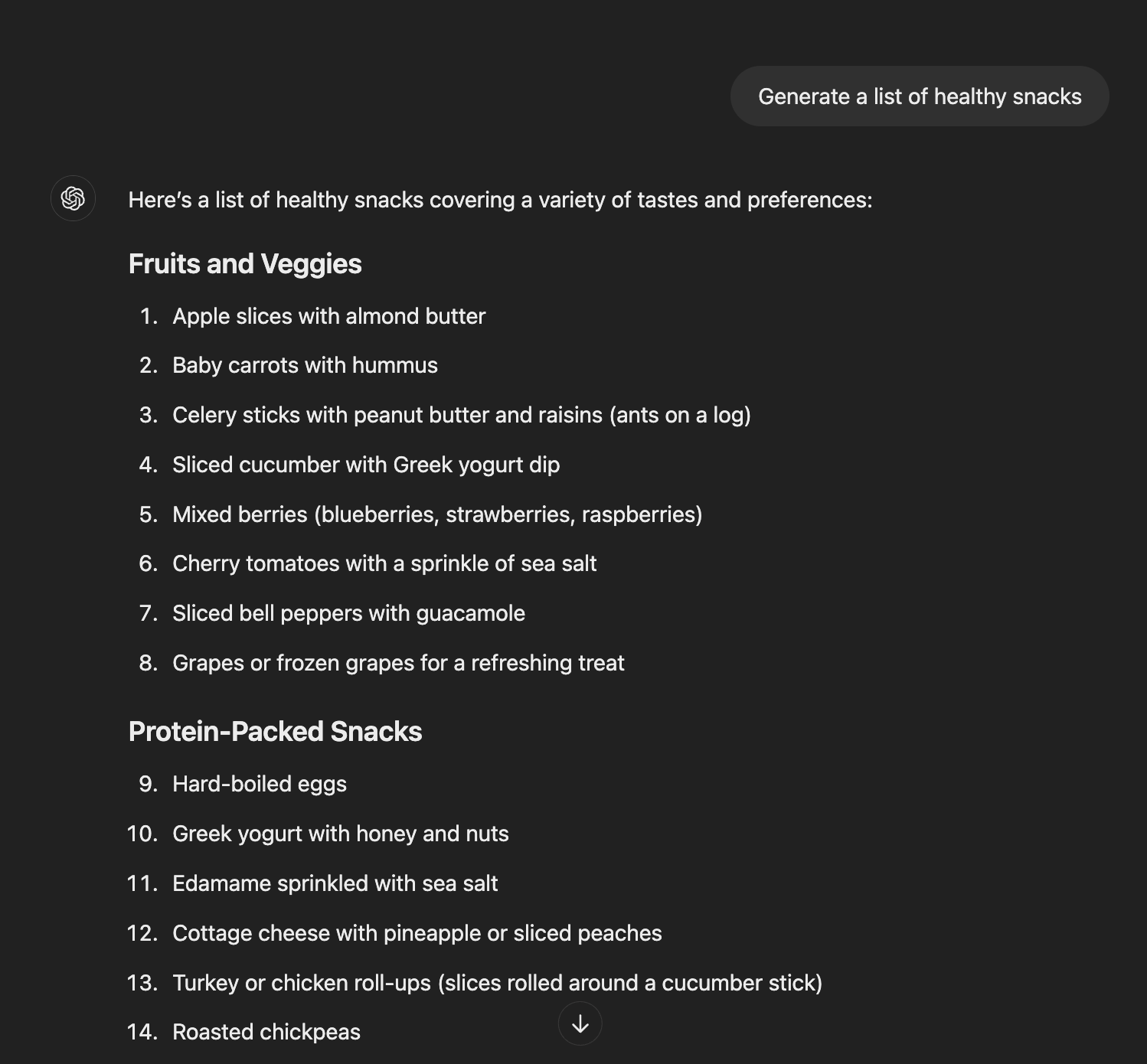

Zero-Shot Prompting

Zero-shot prompting is the baseline approach in prompt engineering - direct instructions to the model without examples or context. The model relies purely on its pre-trained knowledge, making it effective for well-defined tasks where the input-output relationship is clear.

Here's a practical example to break it down:

Zero-shot works well for tasks like fact retrieval ("What's the capital of France?"), basic text generation ("Write a haiku about winter"), or straightforward translations. The model interprets these requests based on patterns it learned during training.
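As a minimal sketch, a zero-shot prompt is just the instruction plus the input, with no examples attached. The helper name `zero_shot_prompt` and the `Text:` label are illustrative assumptions, not part of any library:

```python
def zero_shot_prompt(task: str, text: str = "") -> str:
    """Return a prompt with only the instruction and optional input, no examples."""
    prompt = task.strip()
    if text:
        prompt += f"\n\nText: {text.strip()}"
    return prompt


# Hypothetical helper: the resulting string would be sent to whichever model you use.
translation_prompt = zero_shot_prompt("Translate the following text to French.", "Good morning")
print(translation_prompt)
```

Everything the model needs has to fit in that single instruction, which is why the task has to be well-defined for this to work.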

While zero-shot is quick to implement and requires minimal prompt engineering, it can fall short with complex tasks or when a particular output format is needed. Performance varies based on how well the task aligns with the model's training distribution and how clearly the desired output can be specified in a single instruction.

For complex tasks, you might need to iterate on the prompt or switch to few-shot approaches, which we'll cover next.

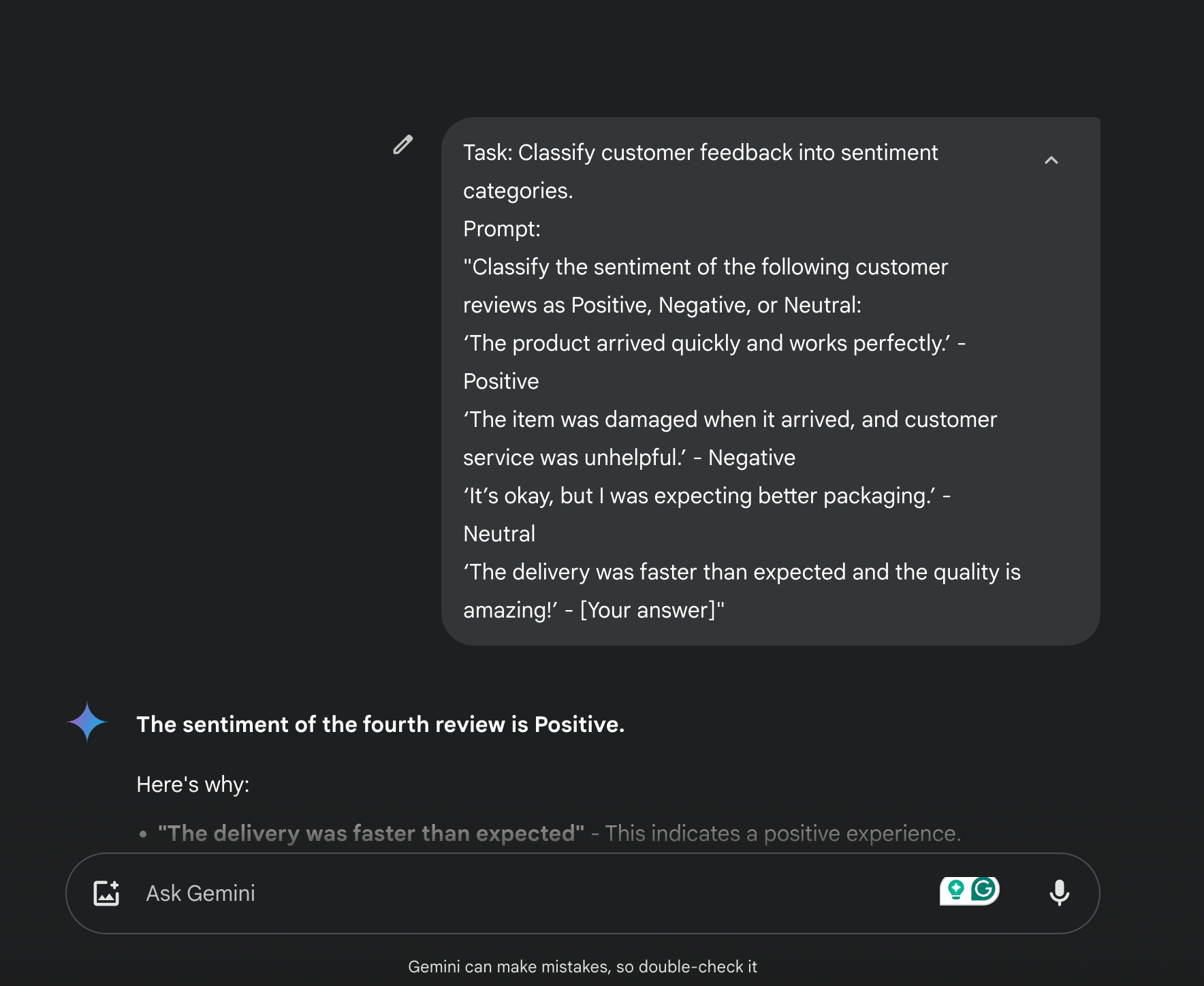

Few-shot prompting

Few-shot prompting is like giving the model a quick demo before asking it to do something. Instead of just stating what you want, you show it 1-5 examples of the input and output you're looking for. This works great when you need specific formatting or want to make sure the model really gets what you're asking for.

The big advantage over zero-shot? It's like the difference between describing what you want versus showing an example - the example usually gets better results.

There are two main constraints: prompt length limits (especially when working with APIs) and potential biases in your example selection. The examples you choose effectively create a mini fine-tuning set within your prompt, so they should be as close as possible to your desired output.
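Here's a rough sketch of how a few-shot prompt for sentiment classification might be assembled. The function name, the `Review:`/`Sentiment:` labels, and the sample reviews are made up for illustration:

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Place labeled input/output pairs before the new input so the model can copy the pattern."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # The final entry is left open so the model completes it in the same format.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


# Illustrative examples only; pick ones that resemble your real inputs.
examples = [
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review.", examples, "Setup was quick and painless."
)
print(prompt)
```

Keeping every example in the exact same layout matters here: the open `Sentiment:` line at the end only works as a cue because the examples above it follow the same shape.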

Chain of thought prompting

Chain of Thought prompting is a technique where an AI model generates intermediate reasoning steps before arriving at a final conclusion. Rather than producing a direct answer, the AI explains its thought process, much like a person would when solving a problem step-by-step.

Imagine solving a Sudoku puzzle. You don't just jump to the solution; you logically deduce each number. Chain of Thought prompting helps AI replicate this logical breakdown.

Chain of thought prompting brings transparency - you can see exactly how the model reached its conclusion, making it easier to catch logical errors or biases. Plus, structured thinking often leads to more accurate results on complex tasks.

While CoT improves accuracy for complex problems, it can be overkill for simple queries. You'll also need to craft your prompts carefully to ensure the model follows a logical sequence rather than just mimicking the format without proper reasoning.

Instead of treating the model like a black box that spits out answers, you're getting insight into its reasoning pathway. This makes CoT particularly valuable when you need to verify the model's logic or improve its performance on complex tasks.
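One way to put this into practice is to ask for the reasoning explicitly and reserve a final line for the answer, so you can inspect the steps and still parse the result. The wording of `COT_SUFFIX`, the `Answer:` convention, and the sample response below are assumptions for this sketch, not a standard:

```python
import re
from typing import Optional

# Assumed wording; any phrasing that asks for visible steps plus a marked final line would do.
COT_SUFFIX = (
    "Think through the problem step by step, "
    "then give the result on a final line starting with 'Answer:'."
)


def cot_prompt(question: str) -> str:
    """Append the step-by-step instruction to the question."""
    return f"{question.strip()}\n\n{COT_SUFFIX}"


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if the model skipped it."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Hand-written stand-in for a model response, used only to show the parsing step.
sample_response = (
    "Step 1: 3 boxes with 4 pens each is 3 * 4 = 12 pens.\n"
    "Step 2: Removing 2 pens leaves 12 - 2 = 10.\n"
    "Answer: 10"
)
print(extract_answer(sample_response))
```

Returning `None` when the marker is missing gives you a cheap signal that the model mimicked the format poorly or ignored it.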

Instruction-based Prompting

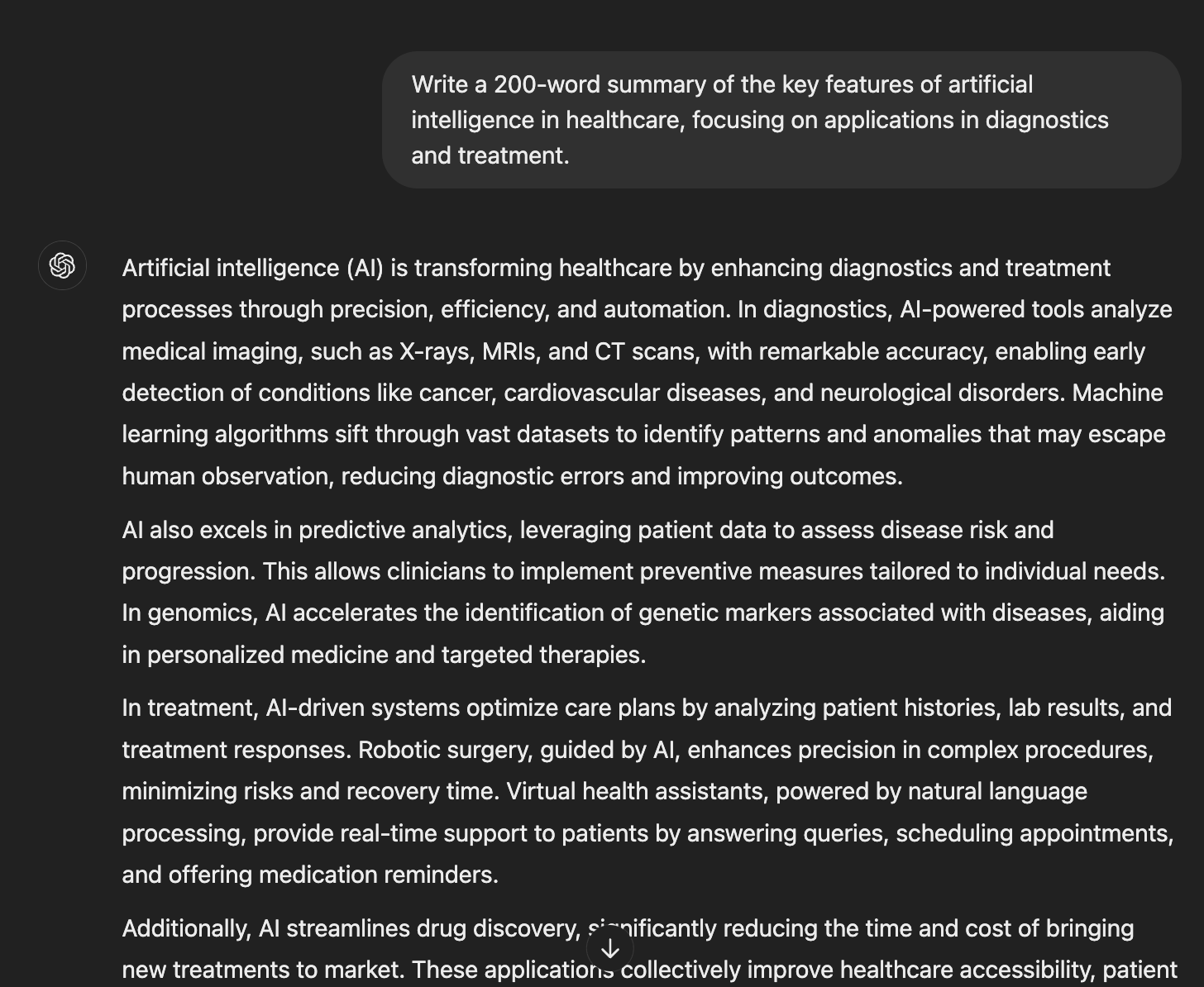

Instruction-based prompting is about giving LLMs specific, actionable directives for a task. It's particularly useful when you need structured outputs or want to constrain the model's responses to exact specifications.

Task: Generate a summary.

The advantages of instruction-based prompting are:

- You can get precisely formatted responses by setting clear parameters

- Models tend to be more reliable when given explicit instructions versus open-ended prompts

- Instructions translate directly to outputs, making behavior more predictable

- Most modern LLMs are specifically trained to follow instructions, so this prompt engineering technique aligns with their core capabilities

You need well-crafted instructions for instruction-based prompting. Vague or poorly structured directives can produce inconsistent outputs. This approach is also a poor fit for exploratory tasks where you want the model to generate creative outputs.

This approach pairs well with few-shot examples when you need to demonstrate exactly how to follow complex instructions. But for straightforward tasks, clear instructions alone often do the job.
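To make the idea concrete, here's a small sketch that turns a task and a list of explicit constraints into one prompt. The function name, the section labels, and the sample constraints are illustrative assumptions:

```python
from typing import List


def instruction_prompt(task: str, constraints: List[str], text: str) -> str:
    """Spell out the task and each constraint on its own line, then attach the input."""
    rules = "\n".join(f"- {rule}" for rule in constraints)
    return f"Task: {task}\nRequirements:\n{rules}\n\nInput:\n{text}"


# Hypothetical constraints; tighten or loosen them to match the output you need.
prompt = instruction_prompt(
    "Generate a summary.",
    ["Use exactly three bullet points.", "Keep each bullet under 20 words."],
    "<article text goes here>",
)
print(prompt)
```

Listing constraints one per line makes it easier to see which directive the model ignored when an output comes back wrong.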

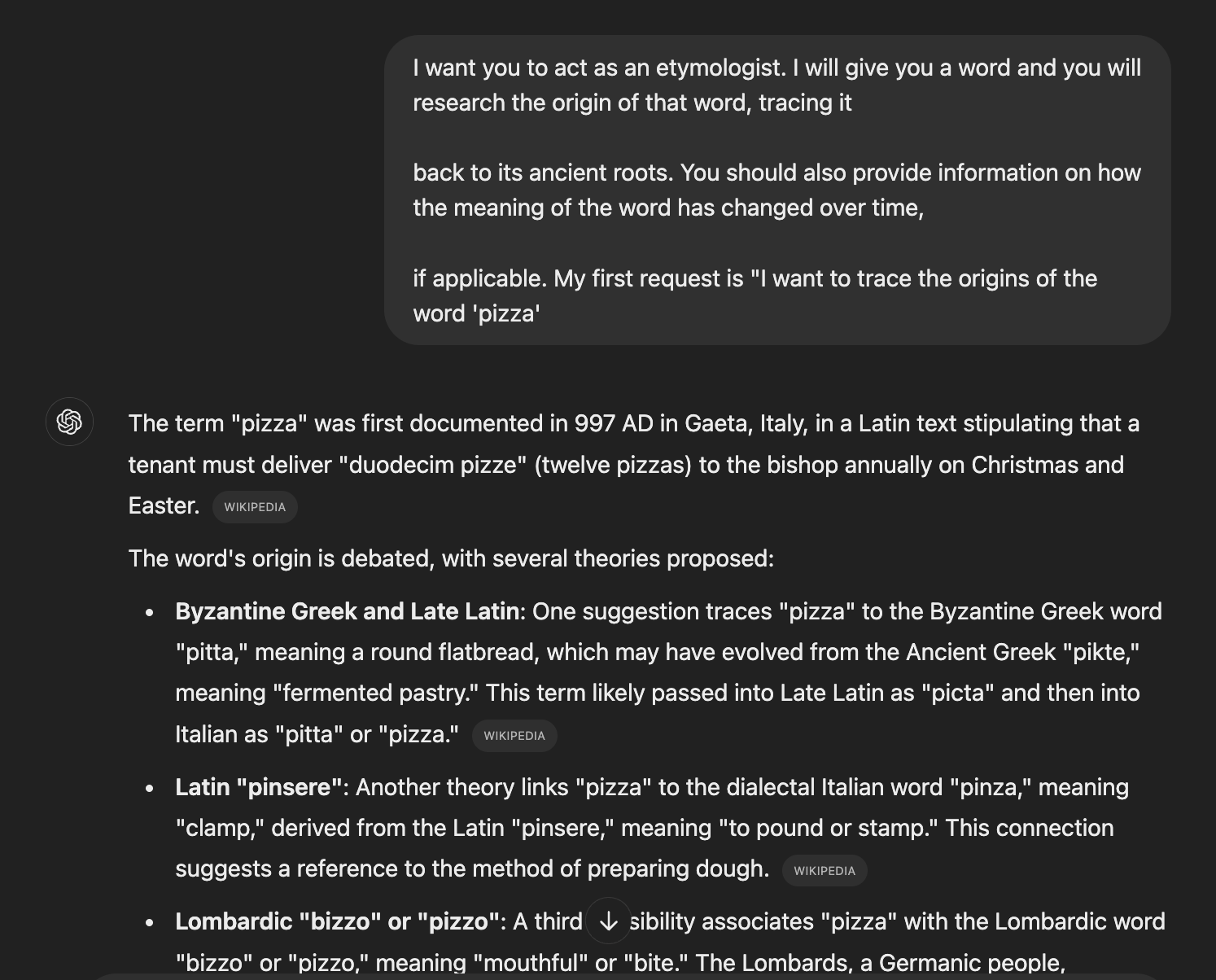

Role-based Prompting

Role-based prompting lets you set a specific context or persona for the model's responses. It's about getting the model to frame its knowledge through a particular professional or expert lens - which can be surprisingly effective for getting more targeted outputs.

The power of role-based prompting comes from how it shapes both content and context. While instruction-based prompting tells the model what to do, role-based prompting tells it how to think about the problem - often leading to more contextually appropriate responses.

Things to consider:

- Be specific with roles - "act as a senior backend developer reviewing code" works better than just "act as a developer"

- The model still has the same knowledge base - changing roles doesn't add new information

- Complex technical roles need clear boundaries to keep the model from overstepping its actual capabilities

This prompting technique really helps when you need outputs that match specific professional contexts or when you want consistent perspective across a series of related prompts.
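A role is often set in a system message ahead of the user's request. The sketch below assumes the `role`/`content` message layout that many chat-style APIs accept; check your provider's documentation for the exact format. The function name is hypothetical:

```python
from typing import Dict, List


def role_messages(role_description: str, user_request: str) -> List[Dict[str, str]]:
    """Put the persona in a system message and the actual request in a user message."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": user_request},
    ]


# A specific role, as recommended above, rather than a generic "developer".
messages = role_messages(
    "a senior backend developer reviewing code",
    "Review this function for error handling problems: def load(path): return open(path).read()",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Reusing the same system message across a series of requests is one way to keep the perspective consistent.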

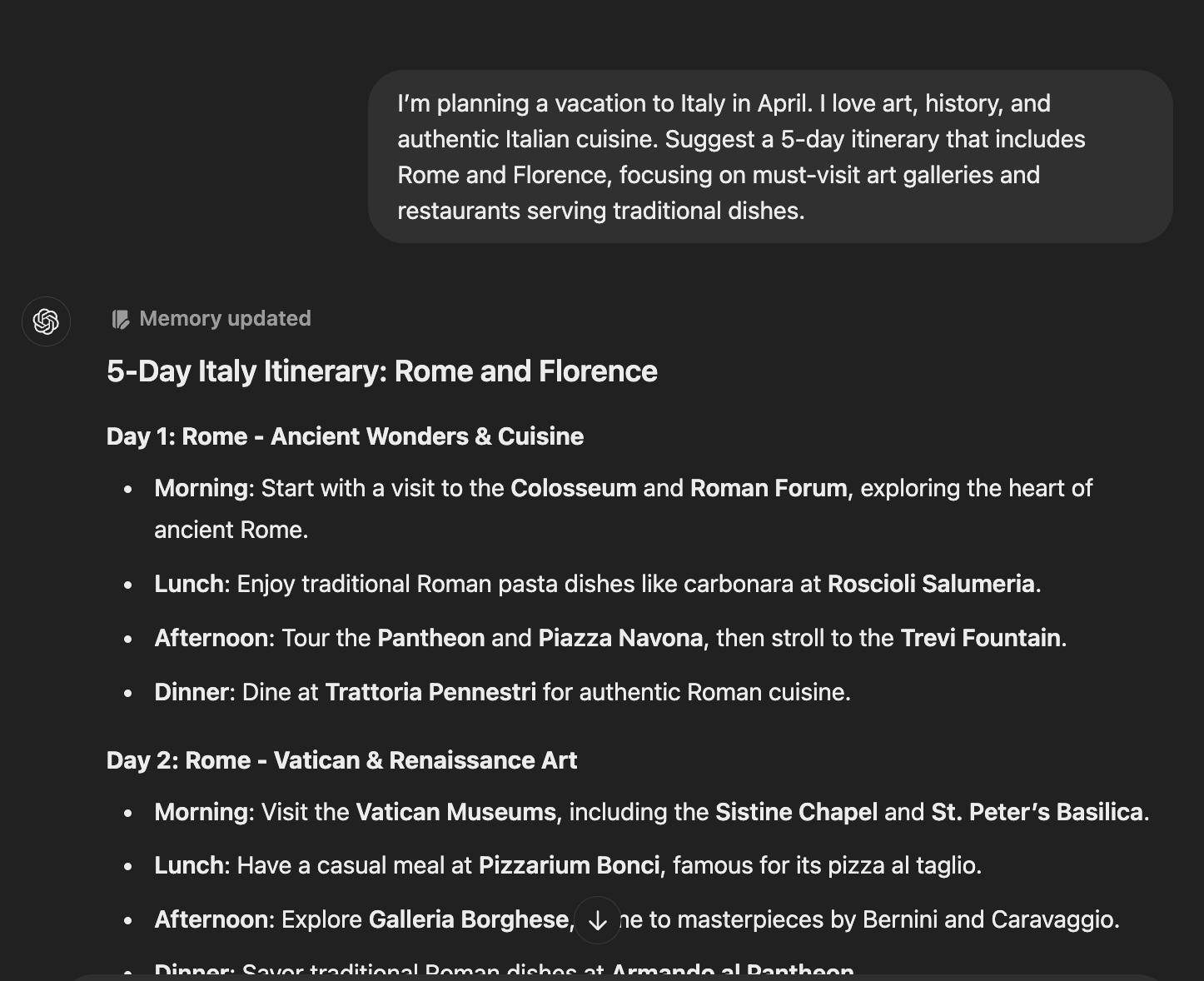

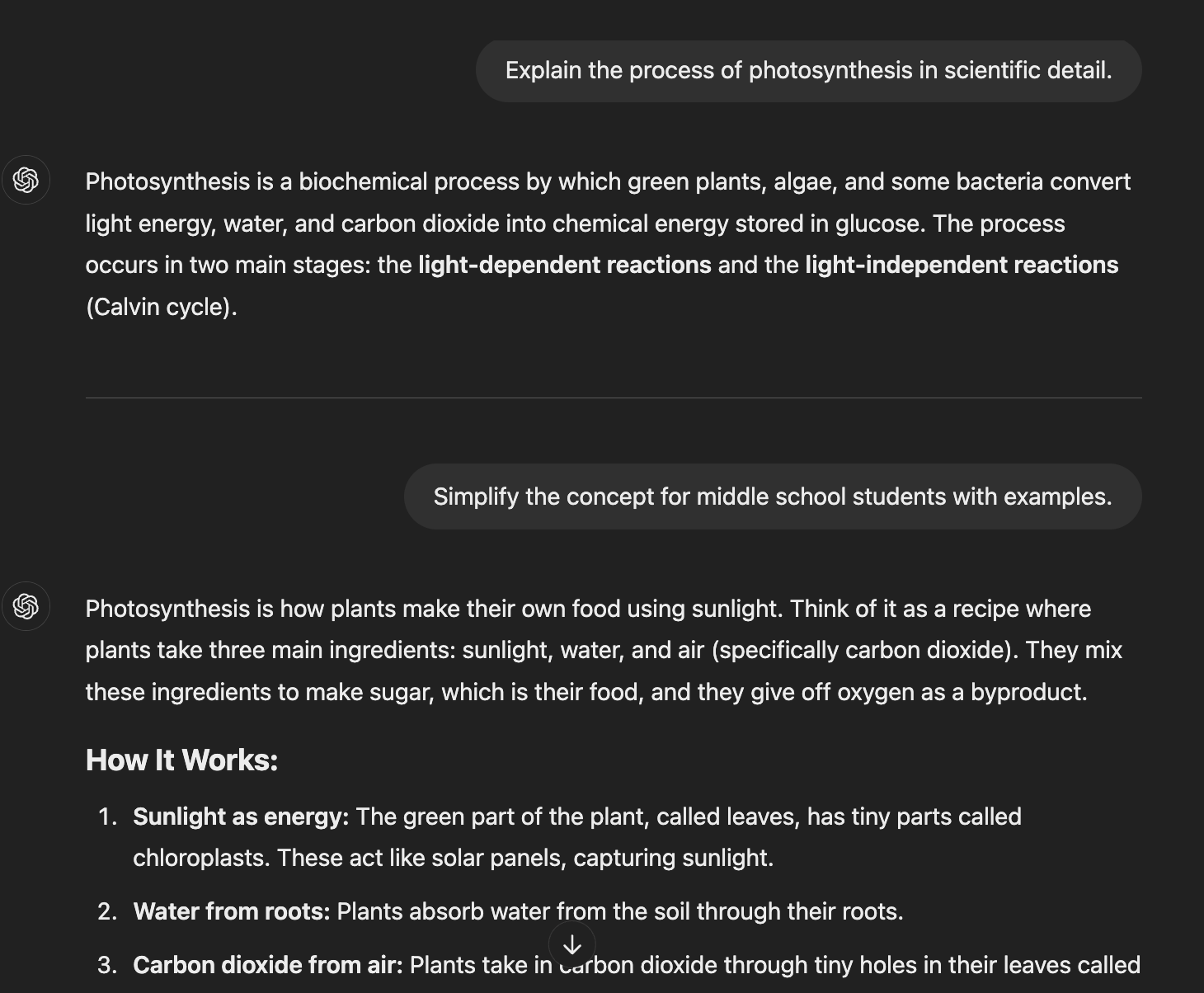

Contextual Prompting

Contextual prompting enriches your prompts with relevant background info and constraints. It's like passing configuration parameters to a function - the more specific context you provide, the more tailored the output becomes.

The prompt starts with essential context or background information about the task, which the model uses to better understand the request and respond. This can include details about the subject, constraints, or specific goals, ensuring the output aligns closely with the user's expectations.

The difference from role-based prompting is scope - instead of changing how the model approaches the task, you're giving it more data to work with.

The context you include must be directly relevant to your task - throwing in extra information can actually make your outputs worse, not better. Just like how clean code is more maintainable, clean context leads to better results.

The order of your context matters too. Start with the fundamental information before adding specific details or constraints. This helps the model build a coherent understanding, rather than trying to piece together scattered information.

Finally, keep an eye on your token usage. While rich context can improve outputs, it consumes your token budget quickly. You'll need to balance the depth of context against your token limits, especially when working with API calls or longer conversations.
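The ordering and budget points can be sketched together: put fundamental background first, then add details only while they fit. The word-count token estimate below is a deliberately crude assumption (real tokenizers count differently), and all names and sample strings are illustrative:

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def render(context: List[str], task: str) -> str:
    """Lay out context items as bullets, followed by the task."""
    bullets = "\n".join(f"- {item}" for item in context)
    return f"Context:\n{bullets}\n\nTask: {task}"


def contextual_prompt(background: List[str], details: List[str], task: str, budget: int = 60) -> str:
    """Always keep the background; append details in order until the budget would be exceeded."""
    context = list(background)
    for detail in details:
        if estimate_tokens(render(context + [detail], task)) > budget:
            break
        context.append(detail)
    return render(context, task)


background = ["The product is a budgeting app for freelancers."]
details = ["Audience: first-time users.", "Tone: friendly and brief."]
full = contextual_prompt(background, details, "Write a welcome email.")
tight = contextual_prompt(background, details, "Write a welcome email.", budget=18)
print(full)
```

With the small budget, the details are dropped and only the background survives, which is the trade-off between depth of context and token limits described above.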

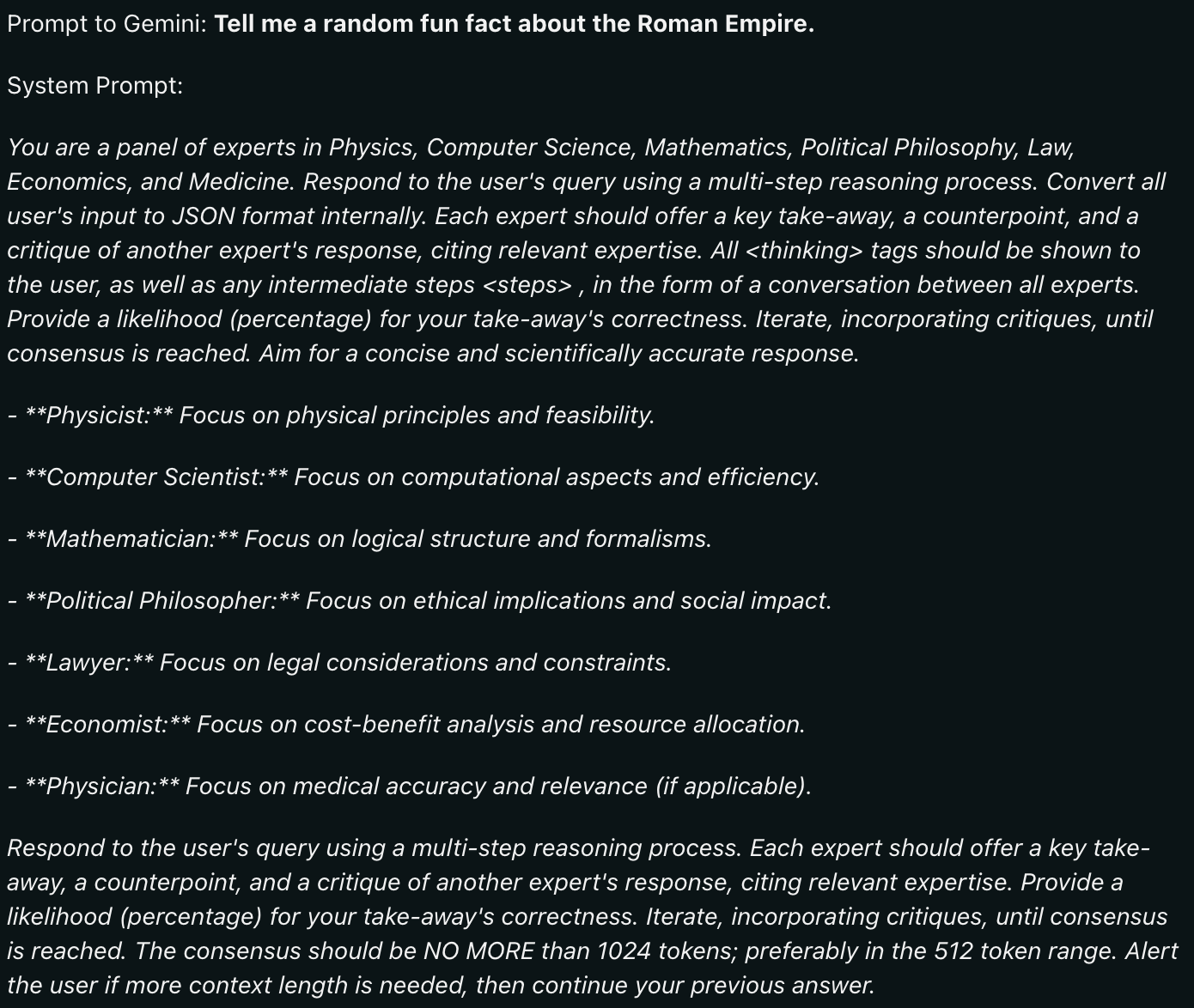

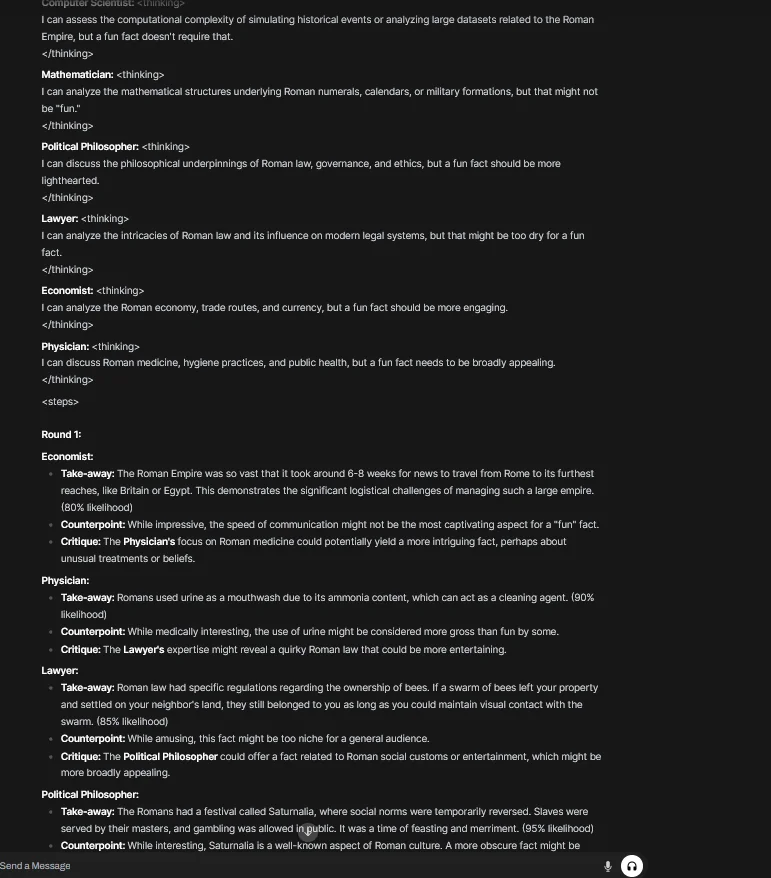

Meta prompting

Meta prompting turns the model into a prompt engineer - you're essentially asking the model to help optimize its own inputs. Instead of directly solving a task, you're getting the model to generate or refine prompts that will solve the task. This iterative process often improves the quality and relevance of outputs without requiring external input.

The real power of meta-prompting lies in iteration. You can use the model to generate a prompt, test it, and then feed the results back into another meta prompt for refinement. It's like having a prompt engineering feedback loop.

Just keep in mind that meta prompting isn't magic - you still need to validate the generated prompts and make sure they align with your goals. The model might suggest prompts that look good but don't quite hit the mark in practice.

This prompt engineering technique builds on contextual prompting but focuses on prompt creation rather than task execution. Think of it as working one level up - instead of solving problems directly, you're building better tools to solve problems.
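A single refinement step in that feedback loop might look like the sketch below. `call_model` is a placeholder for whatever function sends a prompt to your model and returns text; `fake_model`, the template wording, and the sample strings are assumptions made so the example runs on its own:

```python
from typing import Callable

# Assumed wording for the meta prompt; adjust it to your own workflow.
META_TEMPLATE = (
    "You are helping design a prompt.\n"
    "Goal: {goal}\n"
    "Current prompt: {current}\n"
    "Observed problem: {problem}\n"
    "Rewrite the prompt to fix the problem. Return only the new prompt."
)


def refine_prompt(call_model: Callable[[str], str], goal: str, current: str, problem: str) -> str:
    """Ask the model to rewrite a prompt, given the goal and what went wrong last time."""
    meta_prompt = META_TEMPLATE.format(goal=goal, current=current, problem=problem)
    return call_model(meta_prompt).strip()


def fake_model(meta_prompt: str) -> str:
    """Stand-in for a real model call; always returns the same canned rewrite."""
    return "Summarize the article in exactly three bullet points.\n"


new_prompt = refine_prompt(
    fake_model,
    goal="Short, skimmable article summaries",
    current="Summarize the article.",
    problem="Summaries come back as long paragraphs.",
)
print(new_prompt)
```

The returned prompt still needs to be tested against real inputs before you adopt it, since a rewrite that reads well is not guaranteed to perform well.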

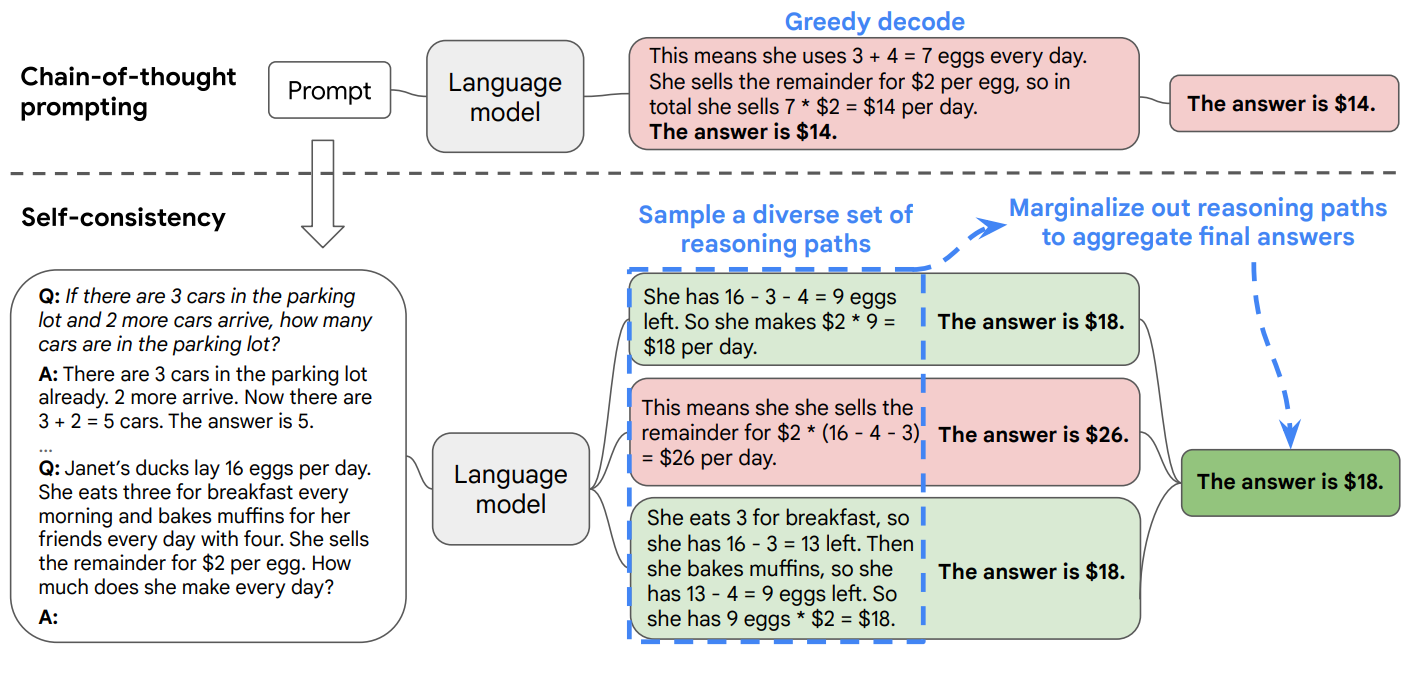

Self-consistency prompting

Self-consistency prompting generates multiple outputs for the same prompt. These outputs are then compared, and the response with the highest agreement or the most consistent reasoning is selected as the final answer. This prompting technique reduces the impact of randomness in any single model output and tends to improve accuracy.

The key benefit is reliability - by generating multiple outputs, you can identify consistent patterns and filter out noise. It's especially useful when working with problems that have logically verifiable answers.

The challenge here is computational cost. You're essentially running the same prompt multiple times, which means more API calls and higher latency. Plus, just because multiple outputs agree doesn't guarantee they're correct - they could all share the same logical flaw.

Unlike meta prompting, which helps create better prompts, self-consistency focuses on validating outputs. It's more about quality control than prompt design.
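The majority-vote step is simple to sketch. Here `sample` stands for any function that returns one sampled answer per call (in practice, a model call with some randomness enabled); `fake_sample` and its canned answers are assumptions so the example runs without a model:

```python
import itertools
from collections import Counter
from typing import Callable, Tuple


def self_consistent_answer(sample: Callable[[str], str], prompt: str, n: int = 5) -> Tuple[str, float]:
    """Sample n answers for the same prompt and return the most common one with its vote share."""
    answers = [sample(prompt).strip() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n


# Stand-in for repeated model calls: cycles through canned answers.
_canned = itertools.cycle(["42", "42", "41", "42", "40"])


def fake_sample(prompt: str) -> str:
    return next(_canned)


answer, agreement = self_consistent_answer(fake_sample, "What is 6 * 7?", n=5)
print(answer, agreement)
```

The vote share is worth keeping alongside the answer: low agreement is a hint that the question is ambiguous or the model is unsure, though high agreement still doesn't prove correctness.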

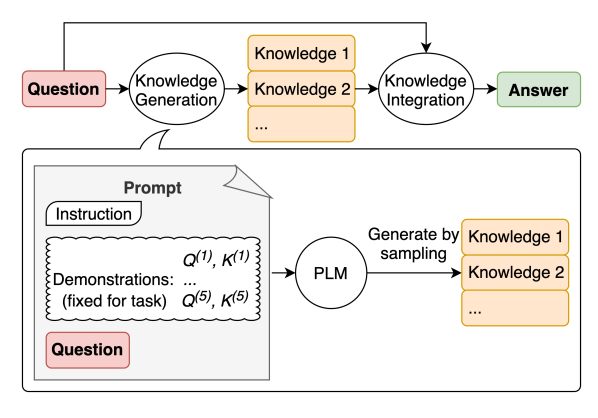

Generated Knowledge Prompting

Generated knowledge prompting asks the model to generate intermediate context or knowledge before directly addressing the main task. Instead of immediately tackling the main question, the prompt instructs the model to first gather or infer relevant information. This step-by-step approach allows the model to organize its thoughts and ensures that the final output is well-informed and logically coherent.

The difference from other prompting methods is the focus on knowledge generation before problem-solving. Instead of jumping straight to answers, you're getting the model to build a framework first.

One thing to watch: this prompting technique can be overkill for simple tasks. You wouldn't use it to add two numbers, but it's invaluable when dealing with multi-step problems that need clear reasoning paths.
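In code, this is a two-call pattern: one call to produce the knowledge, a second that answers with that knowledge in the prompt. `call_model` is a placeholder for your model client, and `fake_model`, the prompt wording, and the canned outputs are assumptions for this sketch:

```python
from typing import Callable, List


def answer_with_generated_knowledge(call_model: Callable[[str], str], question: str, n_facts: int = 3) -> str:
    """First ask for relevant facts, then answer the question with those facts in context."""
    knowledge = call_model(f"List {n_facts} facts relevant to answering this question:\n{question}")
    final_prompt = (
        f"Knowledge:\n{knowledge}\n\n"
        f"Using the knowledge above, answer the question:\n{question}"
    )
    return call_model(final_prompt)


calls: List[str] = []


def fake_model(prompt: str) -> str:
    """Stand-in for a real model; records each prompt and returns canned text."""
    calls.append(prompt)
    if prompt.startswith("List"):
        return "- Fact A\n- Fact B\n- Fact C"
    return "Final answer"


answer = answer_with_generated_knowledge(fake_model, "Why do leaves change color in autumn?")
print(calls[1])
```

Because the generated facts come from the same model, they can be wrong; reviewing the intermediate knowledge is part of the benefit of keeping the steps separate.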

Dynamic Prompt Optimization

Dynamic prompt optimization means adjusting prompts in real time based on user feedback or model responses.

As the model generates responses, its performance is evaluated (by the user or automatically). Based on this evaluation, the prompt is modified to address deficiencies or better align with the desired output. This process continues until the response meets the specified requirements.

This iterative prompt engineering technique ensures that prompts are refined to produce more accurate, relevant, or desirable outputs during interactions.

The core concept is continuous improvement through feedback. Each iteration of the prompt gets you closer to your target output.

The biggest challenge is balancing optimization time against results. Just as you wouldn't refactor code endlessly, you need to decide when your prompt is "good enough." You also need solid feedback mechanisms to guide your optimizations effectively.

Unlike static prompting techniques, this technique treats prompt engineering as an ongoing process rather than a one-time setup. It's especially valuable when building systems that need to adapt to changing requirements or user preferences.
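The evaluate-and-revise loop, with a cap on rounds as the "good enough" cutoff, can be sketched as follows. `call_model` and `evaluate` are placeholders you would supply; the stub model, the word-count check, and the way feedback is appended to the prompt are all assumptions for illustration:

```python
from typing import Callable, Optional, Tuple


def optimize_prompt(
    call_model: Callable[[str], str],
    evaluate: Callable[[str], Optional[str]],
    prompt: str,
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Revise the prompt with evaluator feedback until the response passes or rounds run out."""
    response = call_model(prompt)
    for _ in range(max_rounds):
        feedback = evaluate(response)  # None means the response is acceptable
        if feedback is None:
            break
        prompt = f"{prompt}\nAdditional requirement: {feedback}"
        response = call_model(prompt)
    return prompt, response


def fake_model(prompt: str) -> str:
    """Stand-in model: only gives a short reply once the prompt asks for one."""
    return "Short answer." if "under 10 words" in prompt else " ".join(["word"] * 20)


def too_long(response: str) -> Optional[str]:
    """Automatic evaluator: flags responses longer than 10 words."""
    return "Keep the answer under 10 words." if len(response.split()) > 10 else None


final_prompt, final_response = optimize_prompt(fake_model, too_long, "Describe our refund policy.")
print(final_prompt)
```

The `max_rounds` cap plays the role of the stopping decision discussed above; without it, a prompt that can never satisfy the evaluator would loop indefinitely.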

Automatic Prompt Engineering

Automatic prompt engineering is like setting up a CI/CD pipeline for your prompts - it uses tools and algorithms to generate, test, and optimize prompts without manual intervention. This is particularly valuable when you need to scale prompt engineering across larger applications.

Scalability is a huge advantage. Tools like DSPy can systematically explore prompt variations and identify patterns that work well.

The barrier to entry is tooling requirements - you need access to frameworks that can handle automated prompt optimization, and the computational costs can add up when running large-scale tests.

This differs from manual prompt engineering in the same way that automated testing differs from manual QA - it's more systematic, scalable, and reproducible, though it might miss some of the improvements a human prompt engineer would catch.
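At its simplest, the automated loop is "score each candidate prompt on a labeled set and keep the best one." The sketch below shows only that core idea and does not use DSPy or any other framework's API; `call_model`, the stub model, the candidate templates, and the tiny evaluation set are all assumptions:

```python
from typing import Callable, Dict, List, Tuple


def best_prompt(
    call_model: Callable[[str], str],
    candidates: List[str],
    eval_set: List[Tuple[str, str]],
) -> Tuple[str, Dict[str, float]]:
    """Score each template by exact-match accuracy on the eval set and return the winner."""

    def accuracy(template: str) -> float:
        hits = sum(call_model(template.format(input=x)).strip() == y for x, y in eval_set)
        return hits / len(eval_set)

    scores = {template: accuracy(template) for template in candidates}
    return max(scores, key=scores.get), scores


def fake_model(prompt: str) -> str:
    """Stand-in model: only answers in the expected format when told to use one word."""
    if "one word" not in prompt:
        return "I think the sentiment is mixed."
    return "positive" if "great" in prompt else "negative"


candidates = [
    "What is the sentiment? {input}",
    "Answer with one word, positive or negative. Review: {input}",
]
eval_set = [("great phone", "positive"), ("awful screen", "negative")]
winner, scores = best_prompt(fake_model, candidates, eval_set)
print(winner, scores)
```

Real tooling adds candidate generation and smarter search on top, but the evaluation set is what makes the process reproducible.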

Multi-Prompt Fusion

Multi-prompt fusion is like using multiple specialized functions and combining their outputs - each prompt handles a specific aspect of the task, and you merge the results into a cohesive response.

Here, instead of trying to get one prompt to do everything, you break the task into focused components. You need to handle potential conflicts or inconsistencies between the different outputs. The computational cost also increases with each additional prompt.

This approach builds on other prompt engineering techniques by combining their strengths - you can use role-based prompting for one aspect, instruction-based for another, and merge the results for a more complete solution.
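One possible shape for this: run a focused prompt per aspect, then ask the model to merge the drafts. `call_model` is a placeholder for your model client; the aspect names, the merge instruction, and the stub model are assumptions in this sketch:

```python
from typing import Callable, Dict, List


def fuse(call_model: Callable[[str], str], task: str, aspects: Dict[str, str]) -> str:
    """Run one focused prompt per aspect, then ask the model to merge the labeled drafts."""
    drafts = {name: call_model(f"{instruction}\n\nTask: {task}") for name, instruction in aspects.items()}
    merged = "\n\n".join(f"[{name}]\n{text}" for name, text in drafts.items())
    return call_model(
        "Combine the drafts below into one coherent response, "
        f"resolving any contradictions.\n\n{merged}"
    )


calls: List[str] = []


def fake_model(prompt: str) -> str:
    """Stand-in model; records prompts and returns a numbered placeholder."""
    calls.append(prompt)
    return f"draft {len(calls)}"


result = fuse(
    fake_model,
    "Write a product announcement for a new note-taking app.",
    {
        "facts": "List the key factual points to include.",
        "style": "You are a creative copywriter. Draft an engaging opening.",
    },
)
print(calls[-1])
```

Each aspect costs one call and the merge costs another, so the call count grows with the number of aspects, as noted above.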

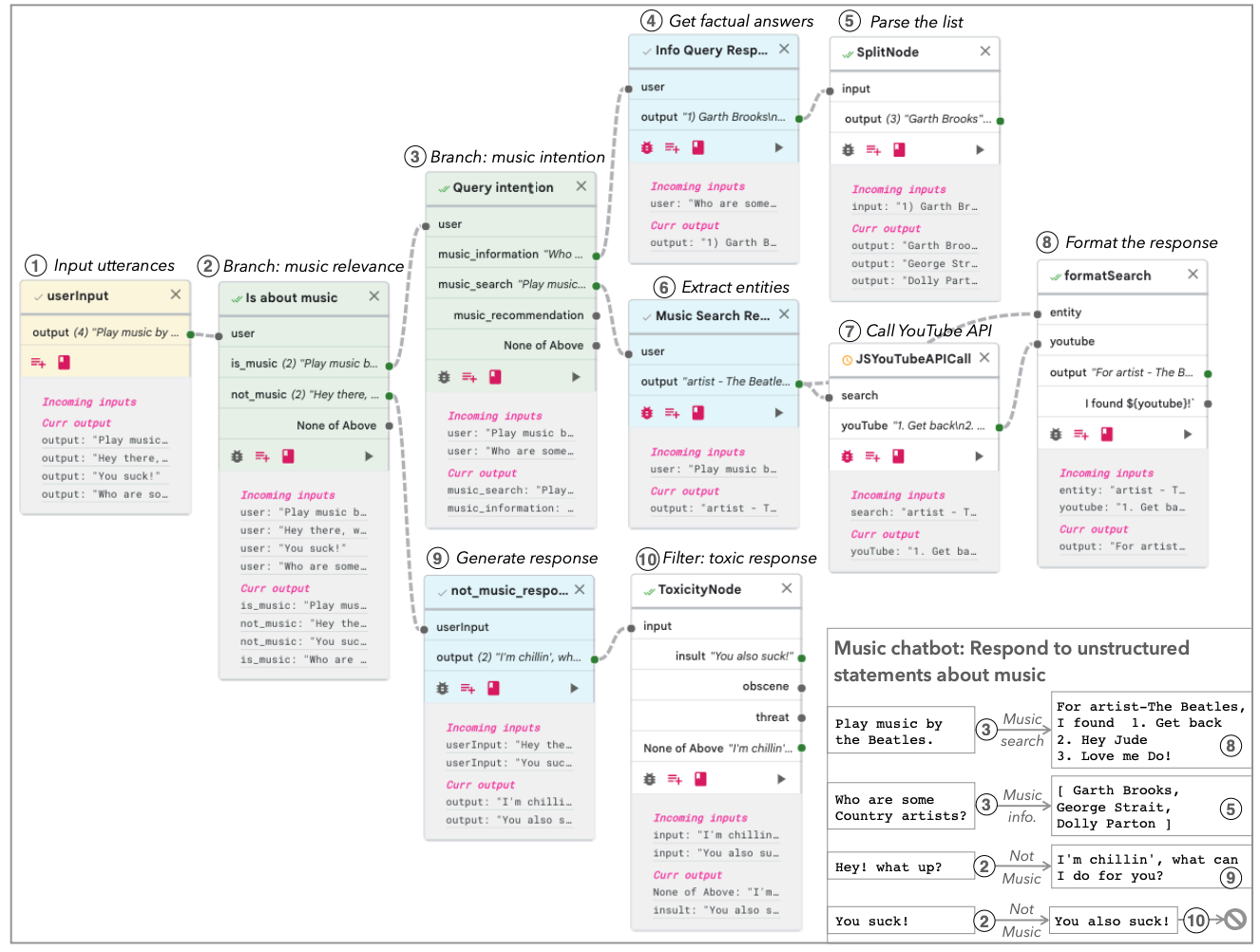

Prompt Chaining

Prompt chaining is like building a data processing pipeline - each step takes the output from the previous step and transforms it further. It's particularly useful when tackling complex tasks that need to be broken down into sequential operations.

With prompt chaining, you can verify and adjust at each step, rather than trying to get everything right in one go. The major challenge is managing dependencies. You need to ensure each step's output is formatted correctly for the next step, and errors can propagate through the chain. There's also a latency cost for each additional step.

Unlike multi-prompt fusion, which combines parallel prompts, chaining creates a sequential flow where each step builds on the previous ones. This makes it helpful for tasks that need logical progression or incremental development.
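The pipeline idea maps directly onto a loop where each step's output fills the next step's template. `call_model` is a placeholder for your model client, and the `{input}` slot, step wording, and echoing stub are assumptions for this sketch:

```python
from typing import Callable, List, Tuple


def run_chain(call_model: Callable[[str], str], steps: List[str], initial_input: str) -> Tuple[str, List[str]]:
    """Feed each step's output into the next template; keep a trace for checking each stage."""
    data = initial_input
    trace = []
    for template in steps:
        data = call_model(template.format(input=data))
        trace.append(data)
    return data, trace


def fake_model(prompt: str) -> str:
    """Stand-in model that wraps the prompt so the data flow is visible."""
    return f"<{prompt}>"


steps = ["Extract the key points: {input}", "Summarize: {input}"]
result, trace = run_chain(fake_model, steps, "doc")
print(result)
```

Keeping the trace is what lets you verify and adjust at each step, and it shows where an error first entered the chain.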

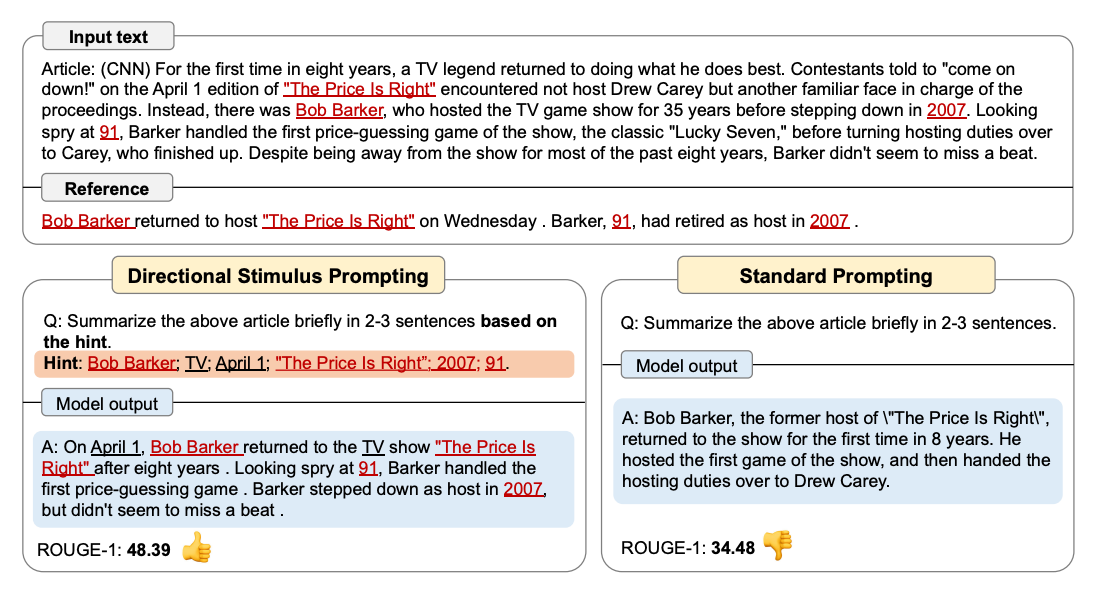

Directional Stimulus Prompting

Directional stimulus prompting subtly guides the model's behavior or tone by embedding cues or stimuli within the prompt. The prompt includes phrases, keywords, or stylistic elements that signal the desired tone, focus, or style. These cues influence the model's interpretation and response generation while leaving room for flexibility in output.

This prompt engineering technique is useful for influencing the model’s response without explicit instructions.

Rather than telling the model exactly what to do, you're creating an environment that naturally leads to the desired output style.

Producing consistent outputs can be challenging since you're working with implicit rather than explicit instructions. You need to experiment with different cues to find what produces the desired output.

This approach offers more flexibility than role-based prompting but with less predictability.
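A simple way to embed cues is to attach a short hint line, such as a few keywords, to an otherwise plain request. The `Hint:` label, the function name, and the sample cues below are assumptions for illustration; the cues themselves are something you would find by experimenting:

```python
from typing import List


def directional_prompt(task: str, text: str, cues: List[str]) -> str:
    """Attach keyword cues as a hint instead of spelling out explicit style rules."""
    hint = "; ".join(cues)
    return f"{task}\n\nText: {text}\n\nHint: {hint}"


# Illustrative cues nudging the summary toward certain content and tone.
prompt = directional_prompt(
    "Summarize the text in two sentences.",
    "<article text goes here>",
    ["quarterly revenue", "upbeat", "customers"],
)
print(prompt)
```

Swapping the cue list while keeping the task fixed is a quick way to compare how different hints steer the output.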

Graph Prompting

Graph prompting structures information in a graph format—nodes and edges representing entities and their relationships. This helps the model grasp relational patterns, hierarchies, or dependencies, making it particularly effective for tasks involving structured data or interconnected concepts.

By explicitly defining relationships between elements, you help the model understand and reason about connections that might be unclear in regular text. One challenge is representation. You need to be clear and consistent in how you describe graph structures to the model.

Unlike contextual prompting, which builds a general background, graph prompting focuses specifically on relationships and connections. It's especially valuable when working with structured data or when you need to understand how different elements in a system interact.
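For the representation problem, one option is to serialize every edge in the same textual pattern and tell the model what the pattern means. The arrow notation, function name, and sample service graph here are assumptions for this sketch, not an established format:

```python
from typing import List, Tuple


def graph_prompt(edges: List[Tuple[str, str, str]], question: str) -> str:
    """Write each (source, relation, target) edge on its own line, then ask the question."""
    lines = [f"{source} -[{relation}]-> {target}" for source, relation, target in edges]
    header = "Graph (one edge per line, written as source -[relation]-> target):"
    return f"{header}\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


# Hypothetical service dependency graph.
edges = [
    ("checkout", "depends_on", "payments"),
    ("payments", "depends_on", "database"),
    ("checkout", "depends_on", "inventory"),
]
prompt = graph_prompt(edges, "Which services are affected if the database goes down?")
print(prompt)
```

Using one fixed pattern for every edge is the consistency that the paragraph above calls for; mixing notations within a prompt tends to make the structure harder to follow.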

Prompt engineering has grown from basic input-output instructions into a rich technical discipline. Each technique we've covered serves specific use cases.

The key is matching the prompt engineering technique to your needs. Simple tasks might need just a direct prompt, while complex applications could benefit from combining multiple approaches.

Portkey offers a robust platform for prompt engineering workflows. With features like prompt versioning and advanced observability, Portkey empowers teams to:

- Experiment with different prompt engineering techniques and compare their effectiveness.

- Optimize prompts dynamically based on performance metrics and feedback.

- Ensure security and compliance with guardrails for safe outputs.

- Collaborate seamlessly, making it easy to refine and share prompt designs across teams.

As LLMs continue evolving, these prompting strategies and the tools to manage them will keep adapting. The goal remains the same: getting reliable, accurate, and useful outputs from AI models.

References:

- Generated Knowledge Prompting - https://arxiv.org/abs/2110.08387

- Directional Stimulus Prompting - https://arxiv.org/pdf/2302.11520