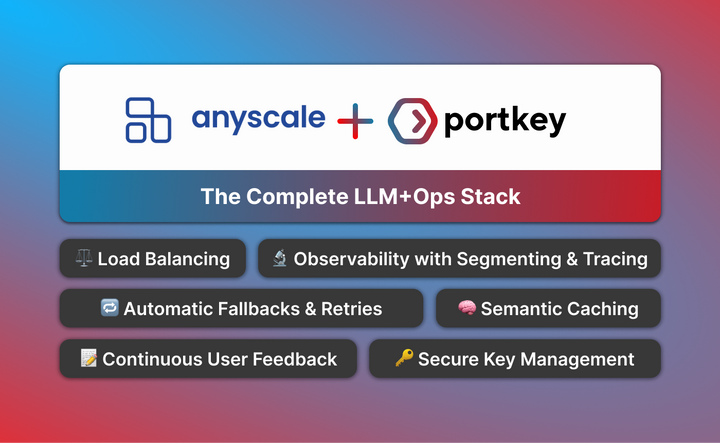

Anyscale's OSS Models + Portkey's Ops Stack

AI development is evolving rapidly, and open-source Large Language Models (LLMs) have emerged as a key foundation for building AI applications.

Anyscale has been a game-changer here, with fast, low-cost APIs for Llama 2, Mistral, and other OSS models.

But to harness the full potential of