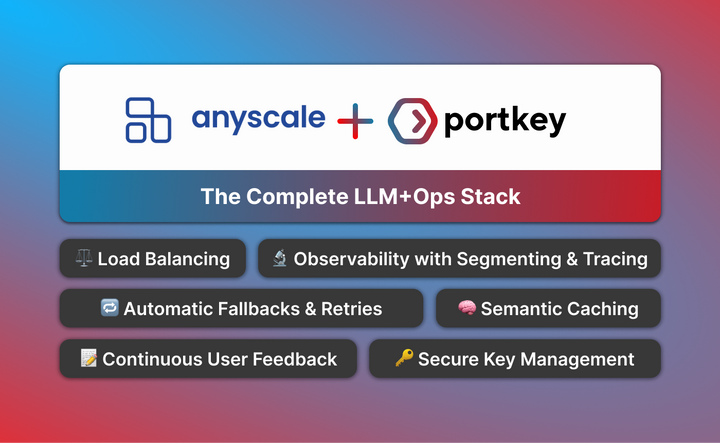

Portkey Goes Multimodal

2024 is the year in which Gen AI innovation and productisation go hand in hand.

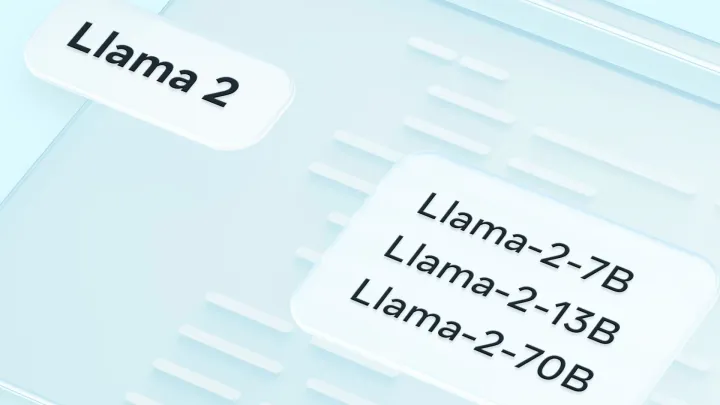

We are seeing companies and enterprises move their Gen AI prototypes to production at a breathtaking pace. At the same time, an exciting shift is taking place in how you can interact with LLMs: completely new