🌖 Announcing $3M Seed Round to Bring LLMs to Production

Portkey is building a full-stack LLMOps platform that empowers AI builders to productionize their Gen AI apps reliably and securely.

When we (Ayush & Rohit) first started building on top of LLMs, back when GPT-3 was all the rage, the LLM paradigm was new, but users' readiness to adopt it and try it out was unprecedented. So, as our apps started to scale, we started running into challenges we hadn't seen before. Challenges like:

- Increasing latencies

- No visibility of requests and errors

- Abuse of our systems

- Rate limiting of requests

- Poor key management

- Privacy complications with client data

- ...and more.

We soon realized that building a prototype on top of LLMs is a very different problem from scaling it to production.

Ayush and I both come from a technical background and have been deep admirers of companies like Cloudflare & Datadog and what they have done to impact the cloud ecosystem. We believe that bringing the DevOps principles of reliability to LLM systems is the key to unlocking the next stage of growth for Gen AI apps.

Today, as we proudly announce our seed round, we are also inspired by another monumental achievement: India's Chandrayaan-3 has become the first lunar mission in the world to land near the moon’s south pole. As we work to fulfil our mission of building a world-class LLMOps platform out of India, we take immense inspiration from what the scientists at ISRO have achieved.

We are raising a $3M seed round led by Dev Khare and Manjot Pahwa from Lightspeed India. Portkey's mission is special - and we are so excited to have some of the most prominent Cloud, Dev GTM, and AI leaders from the industry joining this round - Sanjeev Sisodiya, Adit Parekh, Ankit Gupta, Shyamal Hitesh Anadkat, Manish Jindal, Jake Seid, Oliver Jay, Aakrit Vaish, Pranay Gupta, Sandeep Krishnamurthy, Gaurav Mandlecha.

“We are excited to partner with Portkey, as they supercharge teams developing, deploying and managing LLM-based applications and copilots for businesses and consumers. We are equally excited by the founding team of Rohit and Ayush, whom we’ve known for the past decade through multiple 0 to 1 journeys.”

- Dev Khare, Lightspeed India

Why now?

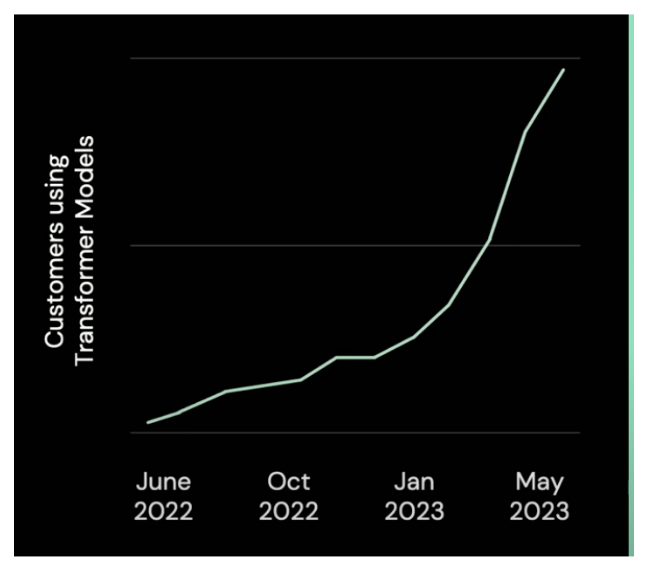

Look at this chart highlighting the unsurprising popularity of OpenAI’s Python SDK.

And then, this one from the Databricks conference keynote on LLM adoption

There’s no denying that teams are really excited about building with LLMs. We’re also at the right maturity stage, where these applications are starting to go to production. But this won’t be possible without the right tools to enable companies to build really smart, complex applications.

One of the major ingredients behind the wave of great SaaS applications launched after 2010 was the rise of tools that let companies build deeper products well. Stripe made payments easier, Cloudflare enabled security, and Datadog enabled debugging across companies.

For Gen AI apps, having a great LLMOps platform accelerates development, feedback collection, and most definitely, revenue. Having worked with over 100 companies and serving millions of requests daily, we’re proud of the feedback we continuously receive from our users.

While the category is still in its infancy, we're ready for a journey filled with growth and insights as the underlying technology evolves.

Below, we've detailed our plans for where we want to go next and where we are today. If you connect with our mission of bringing DevOps principles to the LLM world, join our community of practitioners putting LLMs in production.

What is Portkey?

Portkey is a developer-first full-stack LLMOps platform. We've natively built:

- An Observability layer

- An AI Gateway

- A Prompt Manager

- An Experimentation & Evals framework

- And Security & Compliance protocols

All in all, one unified platform that can be integrated with a line of code in Gen AI apps, making them production-grade, reliable, and secure.

Like Cloudflare, we operate as a gateway between LLM apps and their providers and add production capabilities to existing workflows without adding any latency.

    import OpenAI from 'openai';

    // Route OpenAI SDK calls through Portkey's proxy endpoint.
    const openai = new OpenAI({
      apiKey: 'api_key',
      baseURL: 'https://api.portkey.ai/v1/proxy',
      defaultHeaders: {
        'x-portkey-api-key': 'portkey_api_key',
        'x-portkey-mode': 'proxy openai'
      }
    });

And with this one change, all your LLM requests are logged automatically. You can dive into errors and cost spikes, and debug effortlessly. You can also enable semantic caching for a direct impact of 20% lower costs and 20x faster responses on your LLM calls.
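
To make the idea of semantic caching concrete, here is a minimal sketch, not Portkey's actual implementation. It assumes a toy bag-of-words embedding and a cosine-similarity threshold of 0.8; the names `embed`, `SemanticCache`, and `cachedCompletion` are hypothetical and exist only for illustration. A production system would presumably use a learned embedding model and a vector index instead.

```javascript
// Toy embedding (an assumption for illustration): bag-of-words term counts.
function embed(text) {
  const counts = new Map();
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    counts.set(word, (counts.get(word) ?? 0) + 1);
  }
  return counts;
}

// Cosine similarity between two sparse term-count vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  for (const [word, count] of a) dot += count * (b.get(word) ?? 0);
  const norm = (v) => Math.sqrt([...v.values()].reduce((sum, x) => sum + x * x, 0));
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// Hypothetical cache: returns a stored response when a new prompt is
// similar enough to a previously seen one, even if the wording differs.
class SemanticCache {
  constructor(threshold = 0.8) {
    this.threshold = threshold;
    this.entries = [];
  }

  lookup(prompt) {
    const vector = embed(prompt);
    let best = null;
    let bestScore = 0;
    for (const entry of this.entries) {
      const score = cosineSimilarity(vector, entry.vector);
      if (score > bestScore) {
        best = entry;
        bestScore = score;
      }
    }
    return best !== null && bestScore >= this.threshold ? best.response : undefined;
  }

  store(prompt, response) {
    this.entries.push({ vector: embed(prompt), response });
  }
}

// Only call the model (any async function taking a prompt) on a cache miss.
async function cachedCompletion(cache, prompt, callModel) {
  const hit = cache.lookup(prompt);
  if (hit !== undefined) return { response: hit, cached: true };
  const response = await callModel(prompt);
  cache.store(prompt, response);
  return { response, cached: false };
}
```

In this sketch, a hit skips the model call entirely, which is where the cost and latency savings of a cache would come from. The threshold is a trade-off: set it too low and unrelated prompts share answers, set it too high and the cache behaves like an exact-match lookup.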

“While developing Albus, we were handling over 10K questions daily. The challenges of managing costs, latency, and rate limiting on OpenAI were becoming overwhelming. It was then that Portkey intervened, providing invaluable support through its analytics and semantic caching solutions.”

- Kartik Mandaville, CEO, Springworks

Where are we today?

As of today, Portkey has processed over 50 million LLM requests and is rapidly onboarding more customers from different industries utilizing LLMs - HR tech, content gen, enterprise copilot, dev tools, and more.

We have built one of the most advanced LLM analytics suites in the market, and are the only hosted solution for semantic caching, which has proven to make API requests 20x faster.

We are also proud to be launching a hosted AI Gateway that solves enterprise reliability issues around uptime, errors and rate limiting by balancing requests across different API keys and LLM providers.
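
As a rough illustration of what balancing requests across API keys and providers can look like, the sketch below rotates through a list of targets and falls back to the next one when a call fails (for example, on a rate-limit error). This is an assumption-laden sketch rather than a description of Portkey's gateway: `RoundRobinGateway`, `nextOrder`, and the `{ name, send }` target shape are hypothetical names chosen for this example.

```javascript
// Hypothetical gateway: each target pairs a label (a provider or API key)
// with an async send(prompt) function that calls that provider.
class RoundRobinGateway {
  constructor(targets) {
    if (targets.length === 0) throw new Error('at least one target is required');
    this.targets = targets;
    this.cursor = 0;
  }

  // Returns the targets in the order they should be tried for the next
  // request, then advances the cursor so load is spread across targets.
  nextOrder() {
    const start = this.cursor;
    this.cursor = (this.cursor + 1) % this.targets.length;
    return this.targets.map((_, i) => this.targets[(start + i) % this.targets.length]);
  }

  // Tries each target in turn and returns the first successful response.
  async request(prompt) {
    const errors = [];
    for (const target of this.nextOrder()) {
      try {
        return { target: target.name, response: await target.send(prompt) };
      } catch (err) {
        // Treat any failure (rate limit, timeout, outage) as a reason to fall back.
        errors.push(`${target.name}: ${err.message}`);
      }
    }
    throw new Error(`all targets failed: ${errors.join('; ')}`);
  }
}
```

A caller would construct the gateway with one target per key or provider and call `request(prompt)` as usual. Plain round-robin is the simplest policy to reason about; weighted or latency-aware routing would be natural extensions, though nothing here implies which policy the hosted gateway uses.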

What's next?

One of the most exciting things we’ve realized while building Portkey is that everyone is very, very early. This means we have an unprecedented opportunity to impact how AI gets integrated into our lives and to help shape it fundamentally.

With the landscape changing so much, so fast - we’ll be allocating resources to building the best platform engineering systems to cater to probabilistic models.

Our early customers have been a source of immense real feedback that helps us cut through the noise. We’re actively listening and focused on building as much value as possible for companies that are building with Gen AI. We believe this is the only way to build a sustainable & value-generating business in the LLMOps space.

We are also building partnerships with LLM providers, vector databases, and others in the ecosystem, as these will play a major role in adoption and usefulness.

The Gen AI community globally has been extremely collaborative and helpful, and we’re looking to add more fuel to the revolution.

We are grateful to our early customers who’ve trusted us with their production apps. It is a responsibility we do not take lightly, and over the longer term, along with our customers, we hope to help define how AI is brought to the lives of people all around the world.

Portkey is out of beta. Sign up here and follow us on Twitter (or X) to stay updated with what's happening at Portkey 🪄.