Control Panel for AI Apps

With Portkey's AI Gateway, Prompts, Guardrails, & Observability Suite, thousands of teams ship reliable, cost-efficient, and fast apps.

Enabling 3000+ leading teams to build the future of GenAI

Monitor costs, quality, and latency

Get insights from 40+ metrics and debug with detailed logs and traces.
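
To make request-level logs concrete, here is a minimal sketch of wrapping a model call so each invocation records its latency and outcome. It is not Portkey's implementation or API: `withRequestLog`, the in-memory `logs` array, and the stubbed `fakeModel` are hypothetical names used only for illustration.

```javascript
// Hypothetical in-memory log store; a real gateway would persist these records.
const logs = [];

// Wrap an async LLM call so every invocation records its latency and outcome.
function withRequestLog(name, call) {
  return async (...args) => {
    const start = Date.now();
    try {
      const result = await call(...args);
      logs.push({ name, status: 'ok', latencyMs: Date.now() - start });
      return result;
    } catch (err) {
      logs.push({ name, status: 'error', latencyMs: Date.now() - start, error: String(err) });
      throw err; // re-throw so callers still see the failure
    }
  };
}

// A stubbed model call standing in for a real provider (hypothetical).
const fakeModel = async (prompt) => `echo: ${prompt}`;
const loggedModel = withRequestLog('fake-model', fakeModel);

loggedModel('hello').then((text) => {
  console.log(text); // echo: hello
  console.log(logs[0].status); // ok
});
```

Because the wrapper re-throws errors after logging them, failed calls show up in the log without changing how the calling code handles them.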

Route to 250+ LLMs, reliably

Call any LLM through a single endpoint and set up fallbacks, load balancing, retries, caching, and canary tests effortlessly.
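
A minimal sketch of how fallbacks and retries combine, assuming each provider is an object with a hypothetical `name` and an async `call` method (stubs here, not real SDK clients). It tries providers in order, retries a failing one up to `retries` extra times, and only then falls back to the next. This is not Portkey's routing code; a production version would also add backoff between retries, timeouts, and the load balancing, caching, and canary logic listed above.

```javascript
// Try each provider in order; retry a failing provider before falling back.
// `providers` is a hypothetical list of { name, call } objects.
async function callWithFallback(providers, request, { retries = 2 } = {}) {
  const errors = [];
  for (const provider of providers) {
    for (let attempt = 0; attempt <= retries; attempt++) {
      try {
        const output = await provider.call(request);
        return { provider: provider.name, attempt, output };
      } catch (err) {
        // Record the failure and move on to the next attempt or provider.
        errors.push(`${provider.name}#${attempt}: ${err.message}`);
      }
    }
  }
  throw new Error(`All providers failed: ${errors.join('; ')}`);
}

// Stub providers: the primary always fails, the backup always answers.
const flaky = {
  name: 'primary',
  call: async () => { throw new Error('rate limited'); },
};
const backup = {
  name: 'backup',
  call: async (req) => `answer to "${req.prompt}"`,
};

callWithFallback([flaky, backup], { prompt: 'Say this is a test' }).then((res) => {
  console.log(res.provider); // backup
});
```

Returning the provider name and attempt number alongside the output keeps the fallback path visible, which is what makes such routing debuggable in logs.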

Streamline and scale prompt engineering

Collaboratively build, version, and deploy prompts with multi-environment support, access control, and more.

Enforce reliable LLM behaviour with guardrails

LLMs are unpredictable. With Portkey, you can synchronously run Guardrails on your requests and route them with precision.
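
Conceptually, a synchronous guardrail runs before a request reaches a model, and its verdict decides the route. The sketch below assumes two hypothetical checks (a length limit and a crude email-address filter) and a made-up route name `default-model`; it illustrates the pattern only and does not reflect Portkey's Guardrails configuration format.

```javascript
// Hypothetical guardrail checks: each returns true when the text passes.
const guardrails = [
  { name: 'max-length', check: (text) => text.length <= 2000 },
  { name: 'no-email', check: (text) => !/[\w.+-]+@[\w-]+\.[\w.]+/.test(text) },
];

// Run every check before the request is sent, then pick a route from the verdict.
function routeWithGuardrails(prompt) {
  const failed = guardrails.filter((g) => !g.check(prompt)).map((g) => g.name);
  return failed.length === 0
    ? { route: 'default-model', failed }
    : { route: 'blocked', failed };
}

console.log(routeWithGuardrails('Summarise this article').route); // default-model
console.log(routeWithGuardrails('Email me at jane@example.com').failed); // [ 'no-email' ]
```

Returning the names of the failed checks, not just a pass/fail flag, lets the router choose a different action per failure (block, redact, or send to another model).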

Put your agents in prod

Portkey integrates with LangChain, CrewAI, AutoGen, and other major agent frameworks, making your agent workflows production-ready.

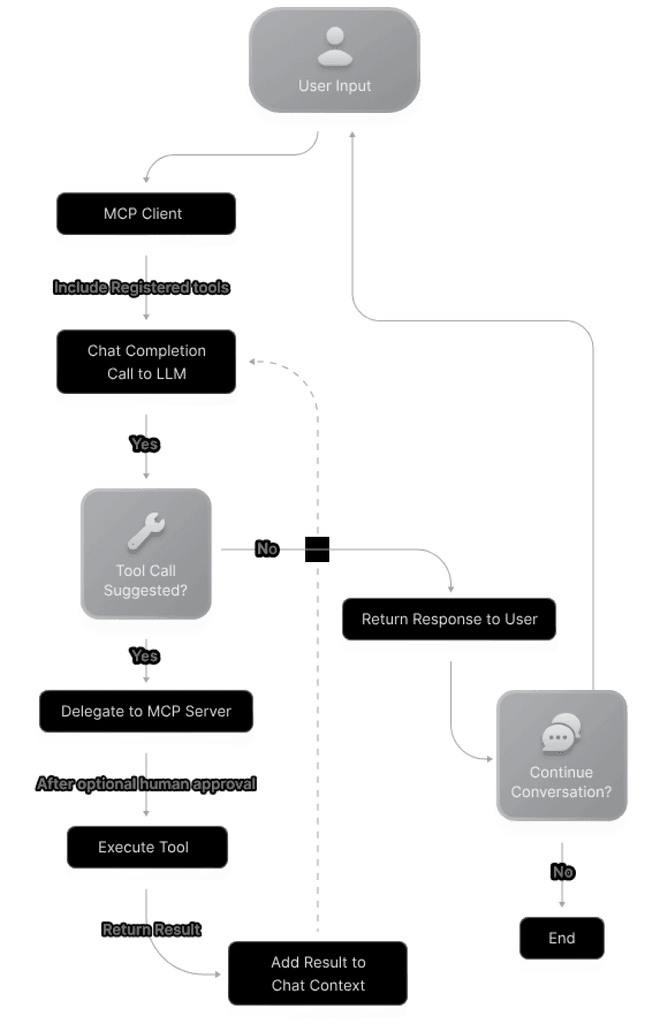

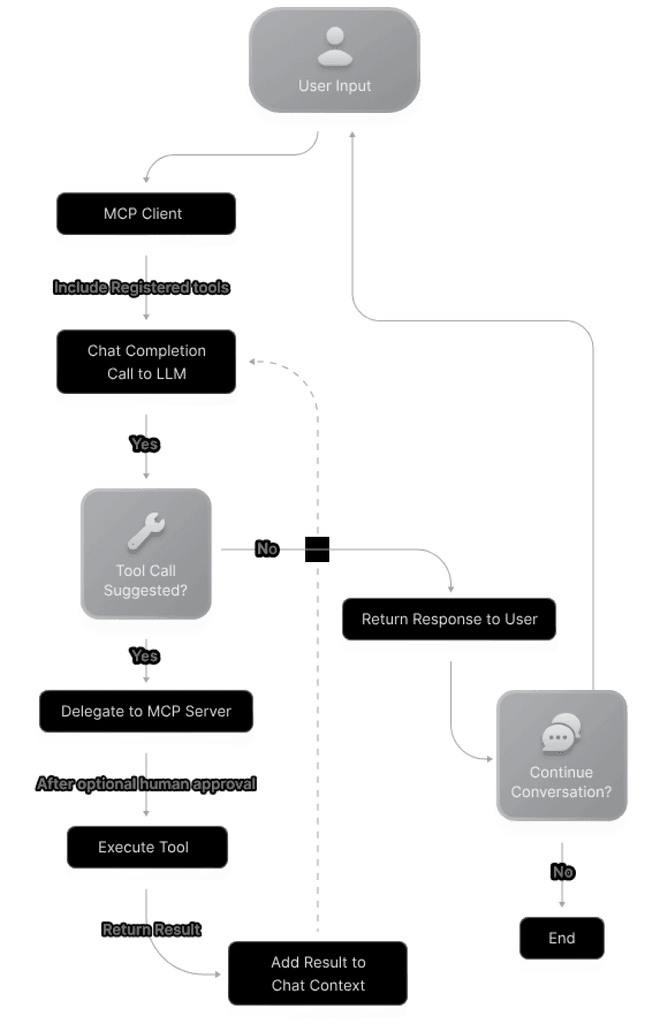

Build agents with access to real-world tools

With Portkey's MCP client, build AI agents that can call 1,000+ verified tools and carry out tasks described in natural language.

“It's the simplest tool I've found for managing prompts and getting insights from our AI models. With Portkey, we can easily understand how our models are performing and make improvements. It gives us confidence to put things in production and scale our business without breaking a sweat. Plus, the team at Portkey is always responsive and helpful.”

Pablo Pazos

Building Barkibu - AI-first pet health insurance

“While developing Albus, we were handling more than 10,000 questions daily. The challenges of managing costs, latency, and rate-limiting on OpenAI were becoming overwhelming; it was then that Portkey intervened and provided invaluable support through their analytics and semantic caching solutions.”

Kartik M

Building Albus - RAG-based community support

“Thank you for the good work you guys are doing. I don't want to work without Portkey anymore.”

Luka Breitig

Building The Happy Beavers - AI Content Generation

Daniel Yuabov

Building Autoeasy

"We are really big fans of what you folks deliver. For us, Portkey IS OpenAI."

Deepanshu S

Building in stealth

"We are using Portkey in staging and production, and it works really well so far. With reporting and observability being so bad on OpenAI and Azure, Portkey really helps get visibility into how and where we are using GPT, which becomes a problem as you start using it at scale within a company and product."

Swapan R

Building Haptik.ai

"I really like Portkey so far. I am getting all the queries I made saved in Portkey out of the box. Integration is super quick if using OpenAI directly. And basic analytics around costing is also useful. OpenAI fails often, and I can set Portkey to fall back to Azure as well."

Siddharth Bulia

Building in stealth

Integrate in a minute

Works with OpenAI and other AI providers out of the box. Natively integrated with LangChain, LlamaIndex, and more.

Node.js

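import Portkey from 'portkey-ai';
// Create a client; the Portkey API key is read from the environment.
const portkey = new Portkey({ apiKey: process.env.PORTKEY_API_KEY });
// Send a chat completion request through Portkey.
const chat = await portkey.chat.completions.create({
  messages: [{ role: 'user', content: 'Say this is a test' }],
  model: 'gpt-4',
});
console.log(chat.choices);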

Build your AI app's control panel now

No Credit Card Needed

SOC 2 Certified

Fanatical Support

30% Faster Launch

With a full-stack ops platform, focus on building your world-domination app. Or, something nice.

99.99% Uptime

We maintain strict uptime SLAs to ensure that you don't go down. When we're down, we pay you back.

40ms Latency

Cloudflare Workers enable our blazing-fast APIs with latencies under 40ms. We won't slow you down.

100% Commitment

We've built & scaled LLM systems for over 3 years. We want to partner and make your app win.

How does Portkey work?

You can integrate Portkey by replacing the OpenAI API base URL in your app with Portkey's API endpoint. Portkey then routes all your requests to OpenAI, giving you control over everything that happens along the way. You can unlock additional value by managing your prompts and parameters in a single place.
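
A sketch of why the integration is a small change: an OpenAI-style chat completion request keeps the same path and body, and only the base URL and a few headers differ. The gateway base URL `https://api.portkey.ai/v1` and the `x-portkey-api-key` / `x-portkey-provider` header names are assumptions to verify against Portkey's documentation; `buildChatRequest` is a hypothetical helper, and nothing is sent over the network here.

```javascript
// Build the request an OpenAI-style client would send for a chat completion.
// Hypothetical helper: only `baseUrl` and the extra headers change between targets.
function buildChatRequest(baseUrl, headers, body) {
  return {
    url: `${baseUrl.replace(/\/+$/, '')}/chat/completions`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json', ...headers },
      body: JSON.stringify(body),
    },
  };
}

const body = { model: 'gpt-4', messages: [{ role: 'user', content: 'Say this is a test' }] };

// Direct to OpenAI.
const direct = buildChatRequest('https://api.openai.com/v1', {
  Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
}, body);

// Through the gateway (assumed base URL and header names; check Portkey's docs).
const viaGateway = buildChatRequest('https://api.portkey.ai/v1', {
  Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
  'x-portkey-api-key': process.env.PORTKEY_API_KEY,
  'x-portkey-provider': 'openai',
}, body);

console.log(viaGateway.url); // https://api.portkey.ai/v1/chat/completions
// Either request could then be sent with fetch(req.url, req.init).
```

Keeping the body identical is the point of the design: the app's request-building code stays unchanged, and only client configuration differs.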

How do you store my data?

Portkey is ISO 27001 and SOC 2 certified, and we're GDPR compliant. We follow security best practices across our services, data storage, and retrieval, and all your data is encrypted in transit and at rest. For enterprises, we offer managed hosting to deploy Portkey inside private clouds. To discuss these options, drop us a note at [email protected]

Will this slow down my app?

No. We actively benchmark Portkey to check for any added latency. With built-in smart caching, automatic failover, and edge compute layers, your users might even notice an overall improvement in your app experience.

Products

© 2024 Portkey, Inc. All rights reserved

HIPAA Compliant

GDPR
