A unified access layer for MCP tools, with no changes to your agents or servers. Self-host, deploy to your cloud, or run fully managed.

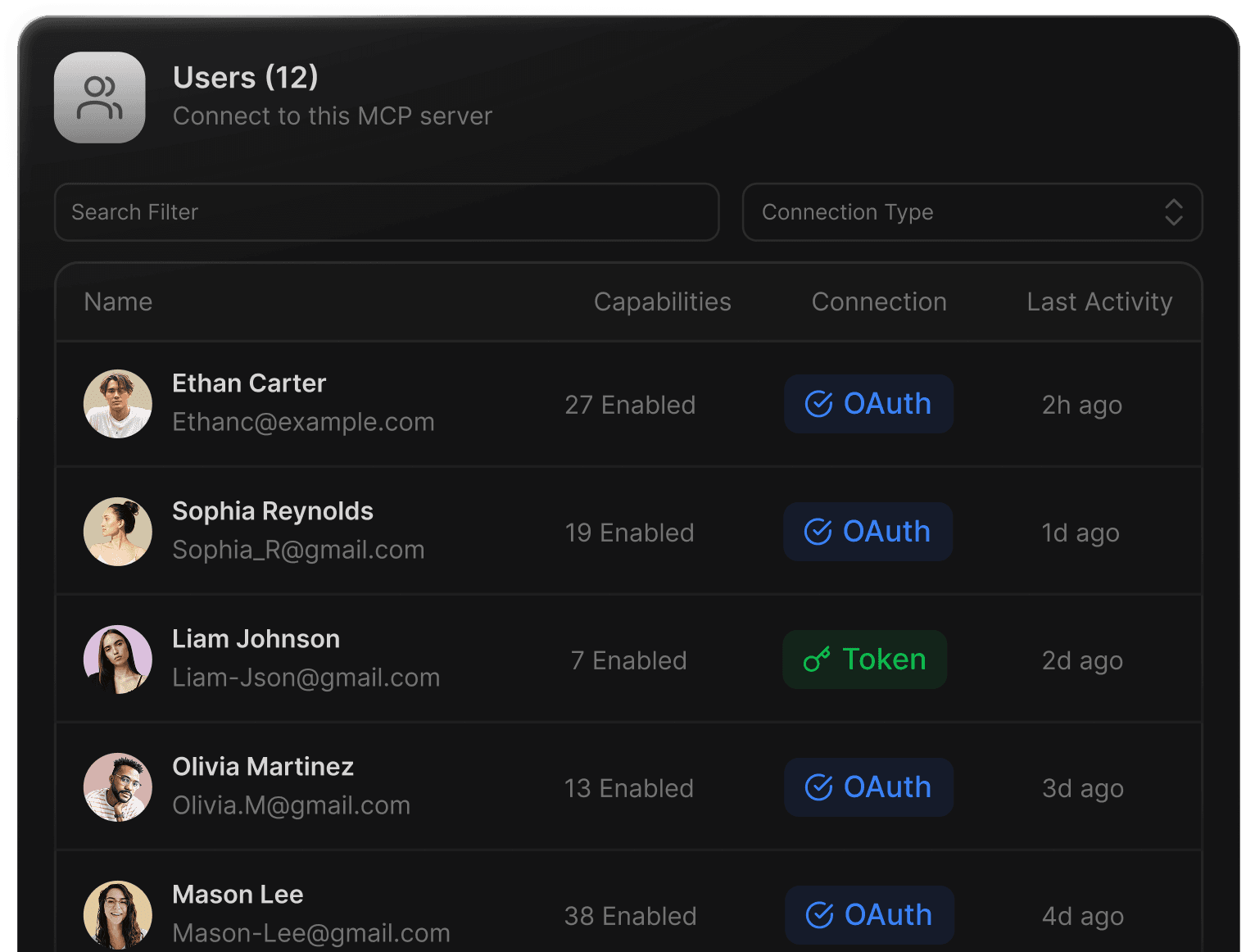

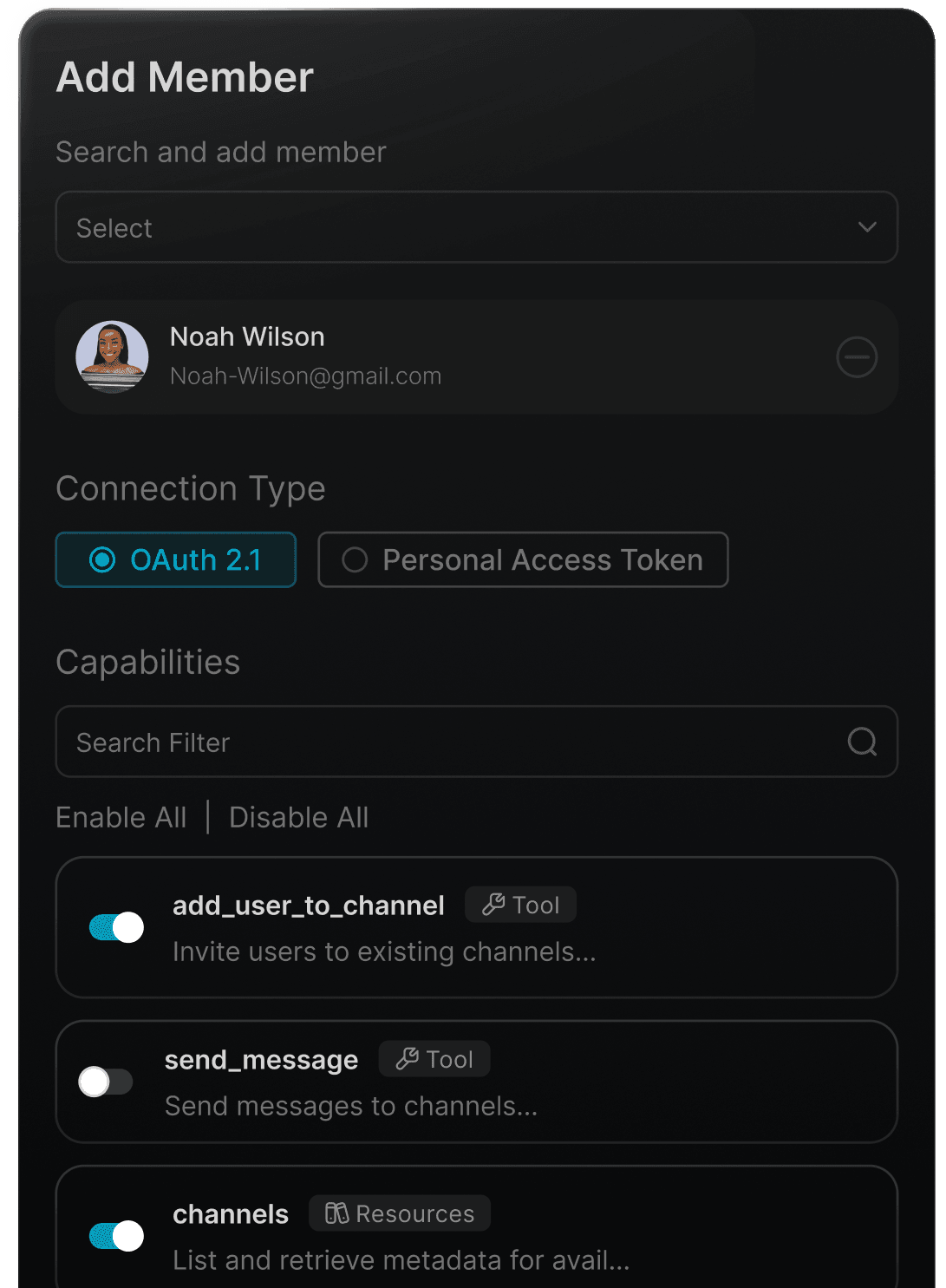

Built-in support for every auth type

Support for OAuth 2.1, API tokens, and header-based auth, all fully configurable

JWT validation, identity forwarding, passthrough headers, and other advanced auth features supported out of the box

Bring your own auth with Okta, Microsoft Entra ID, and more

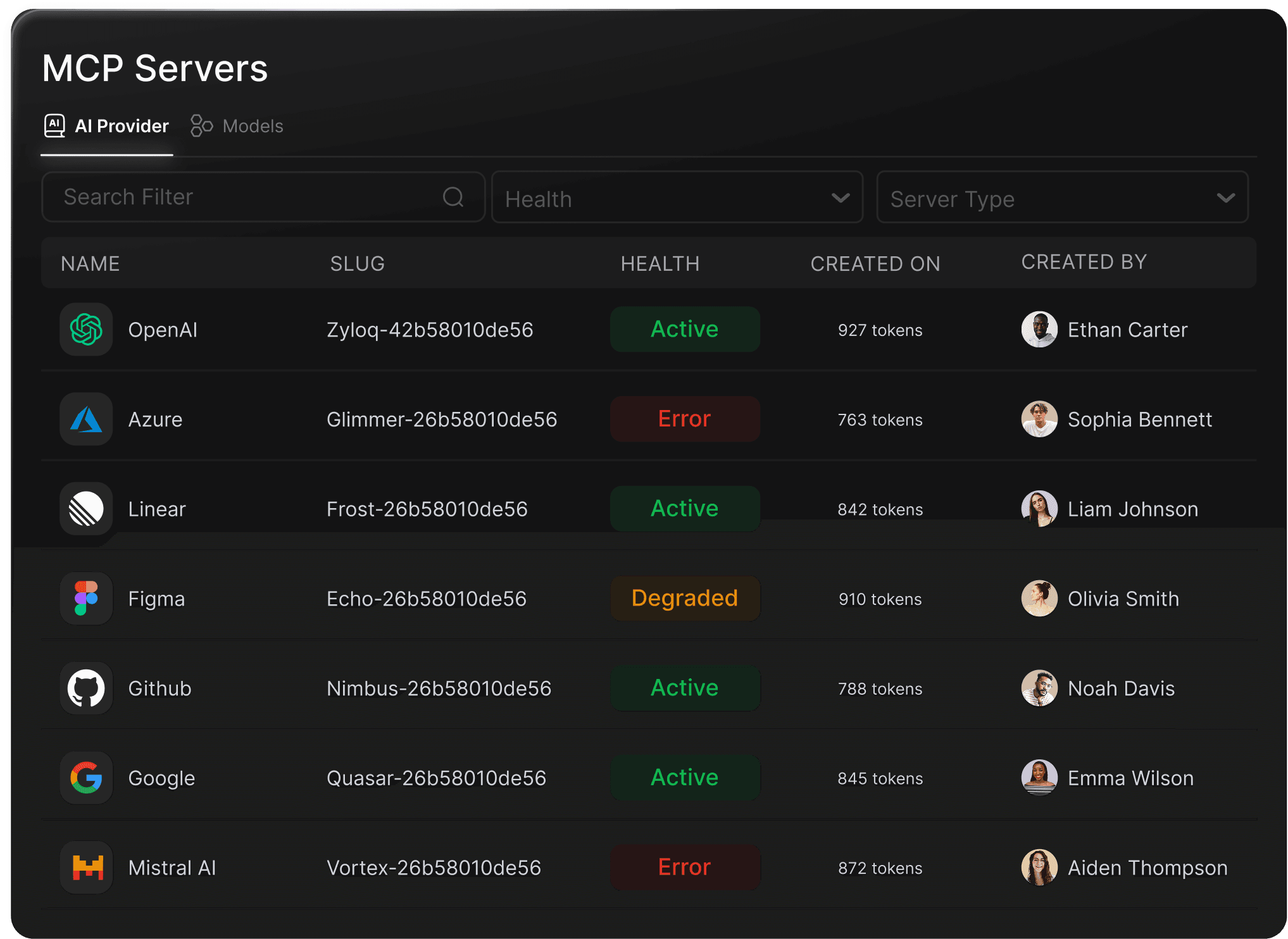

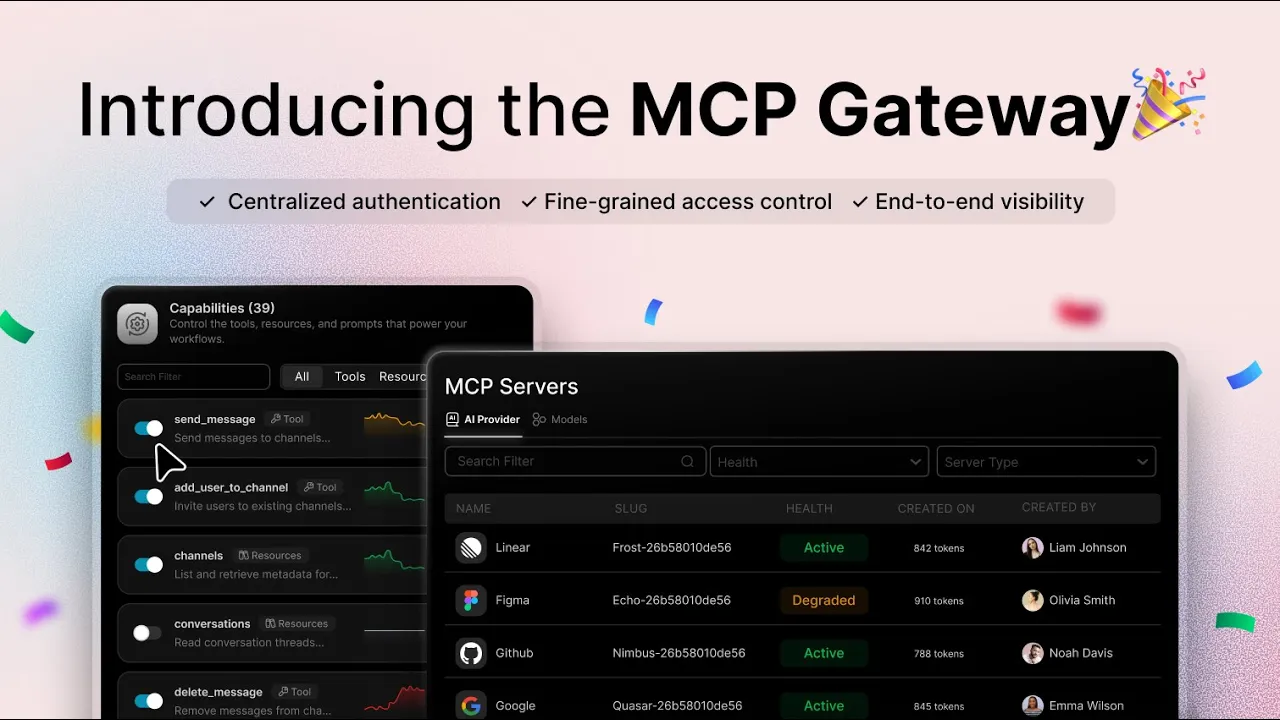

Central registry for servers and tools

View available servers and tools in one place

Scope servers to specific teams to avoid sprawl

Integrate a new MCP server in a few minutes

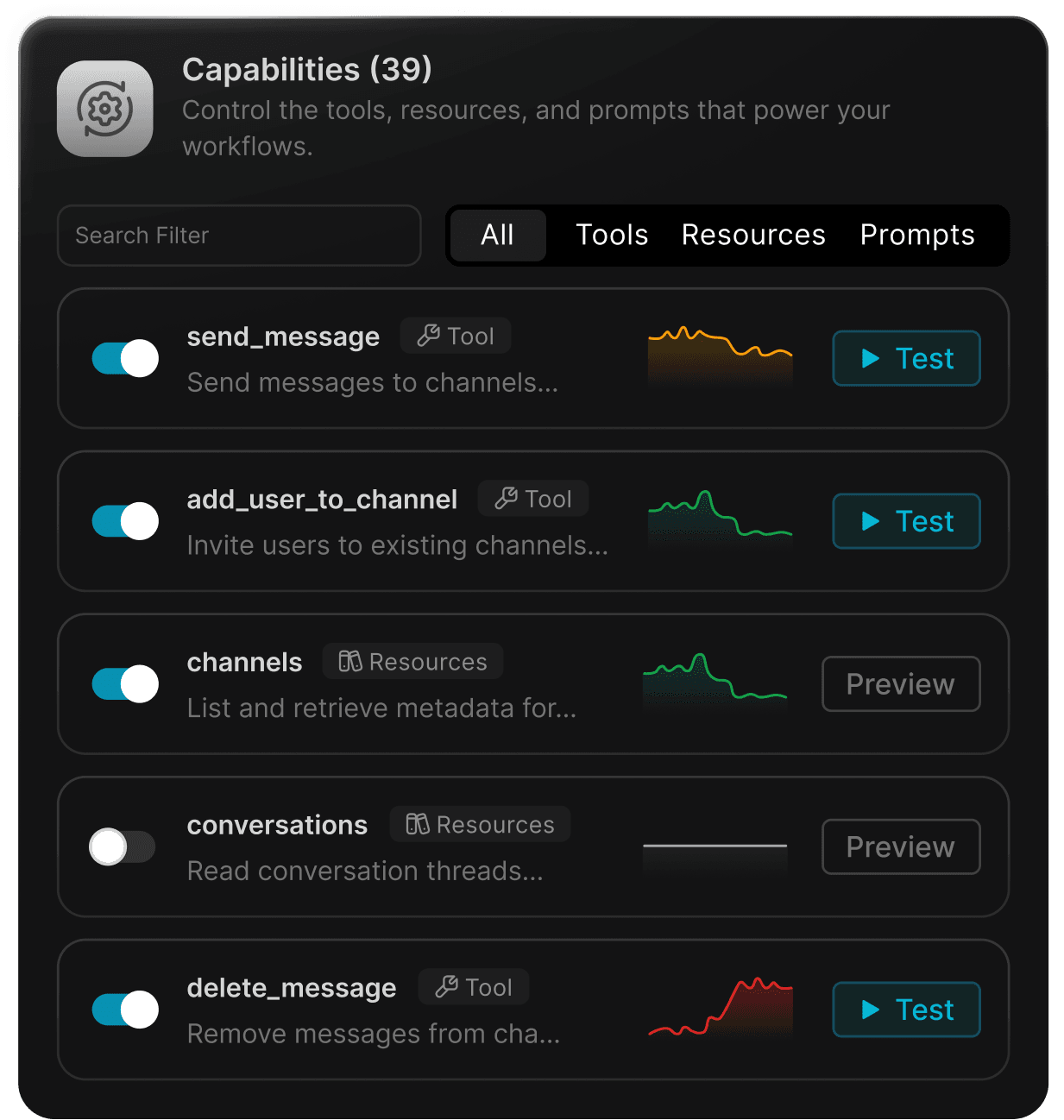

Fine-grained access control for servers and tools

RBAC at the org, workspace, team, or individual-user level, so you can scope access precisely

No shadow tooling: if it isn't approved in the gateway, agents can't use it

Let teams share tools across boundaries without sharing credentials

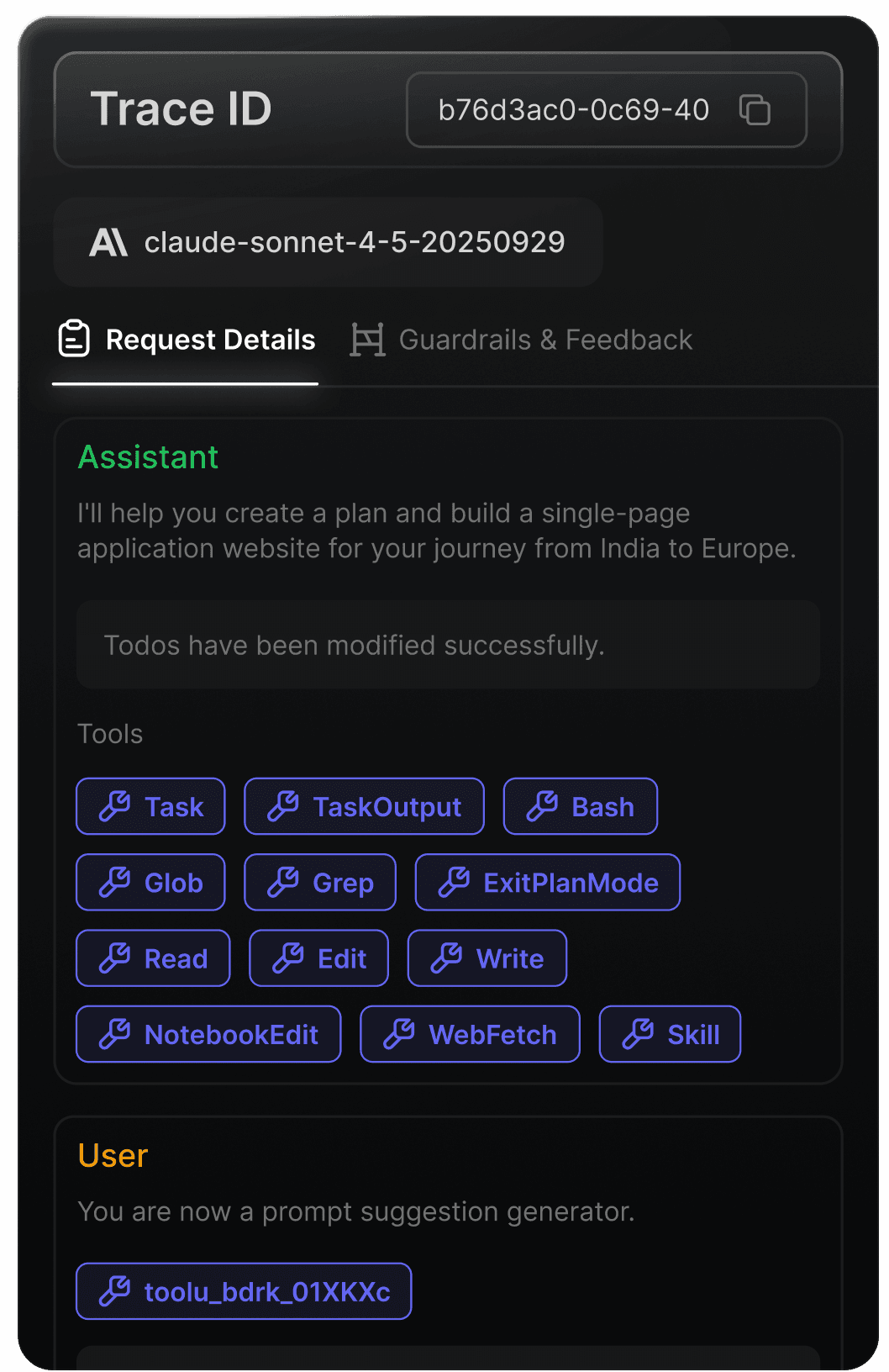

End-to-end observability for agent workflows

Get unified traces and see exactly which tools an agent called

Fast debugging

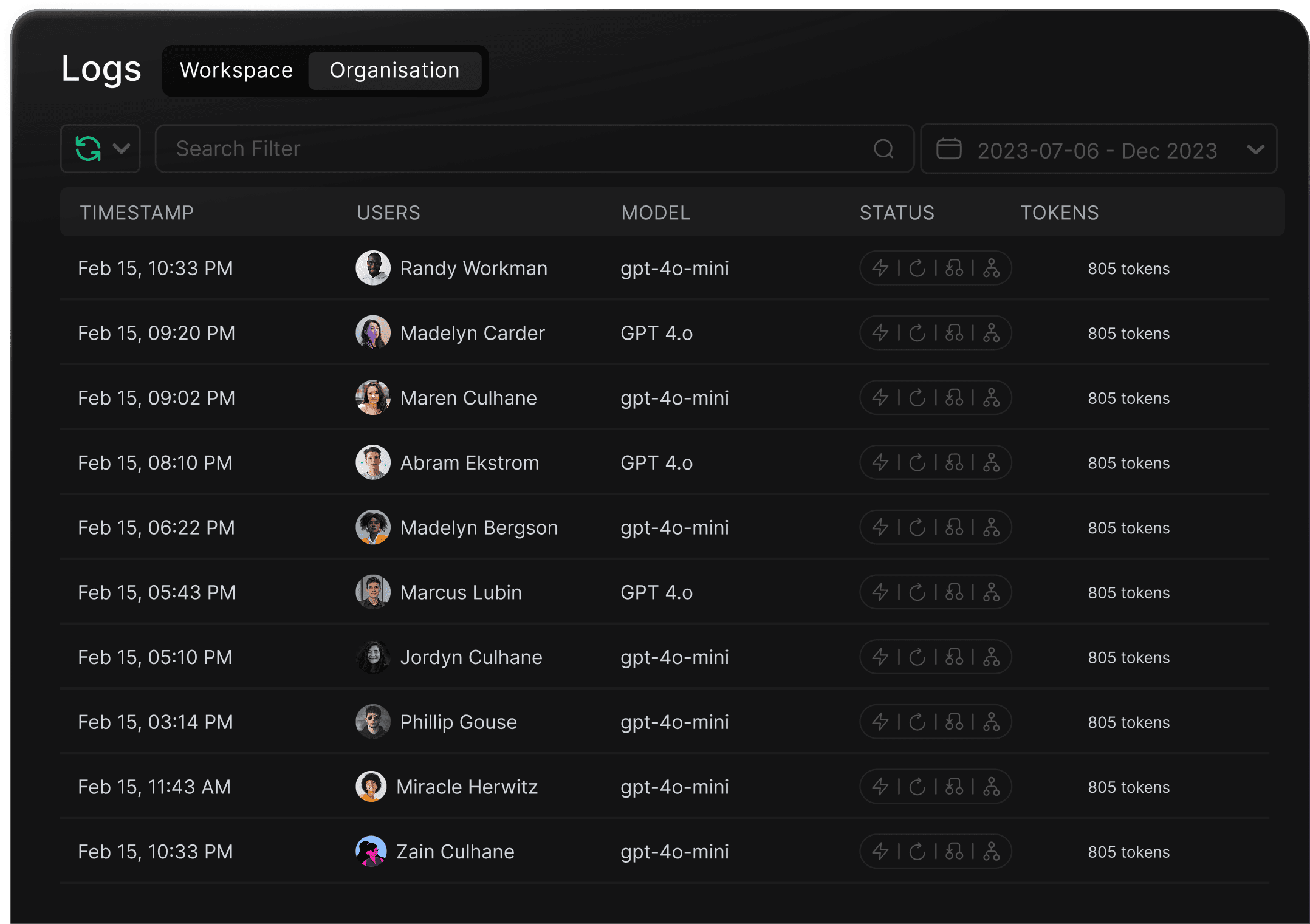

Every tool invocation is audited, logged, and searchable

Usage and performance analytics

Track request volume, latency, and errors across MCP traffic.

Identify slow or failing servers before they impact agents

Monitor usage patterns per tool, per server, and per team

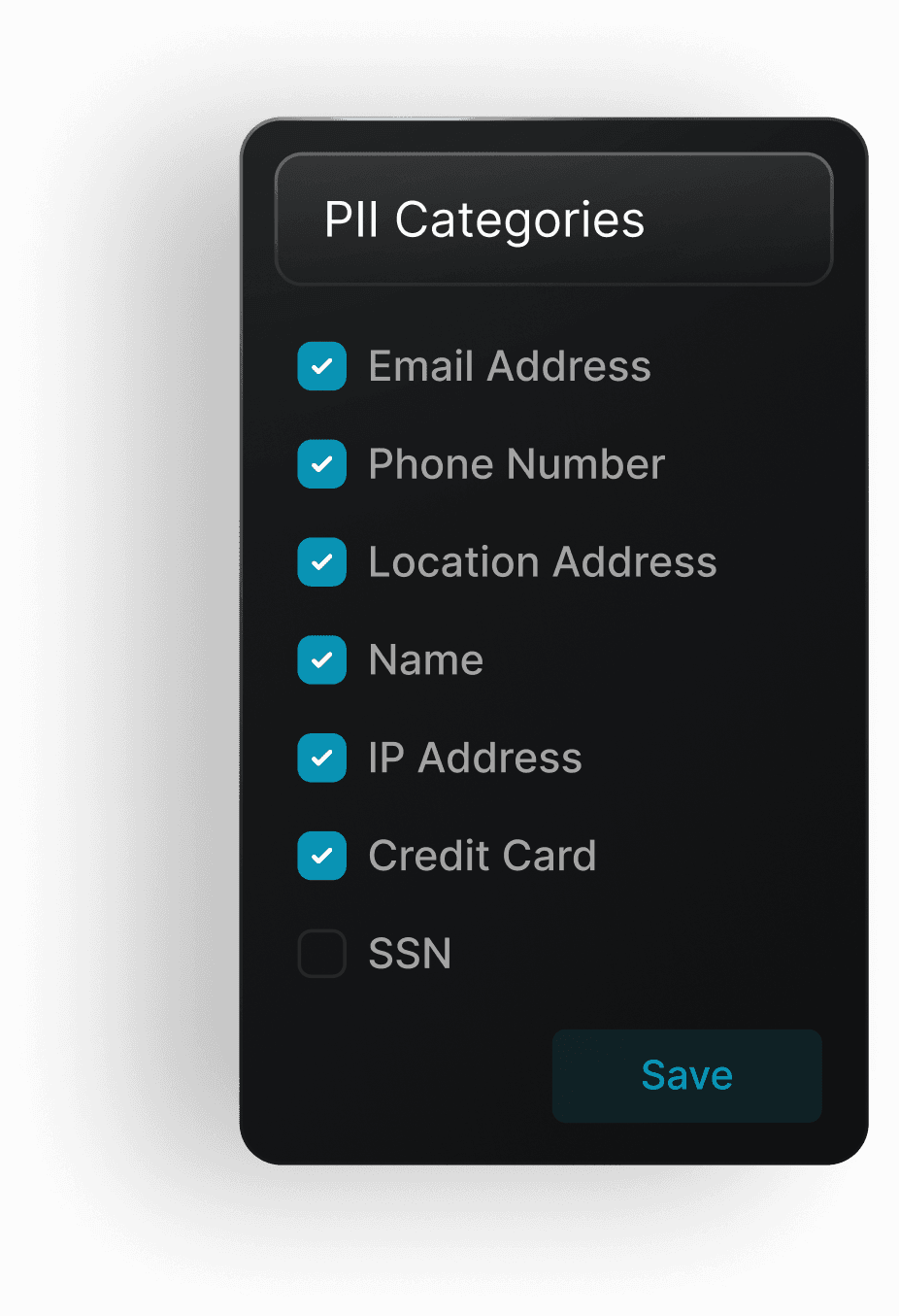

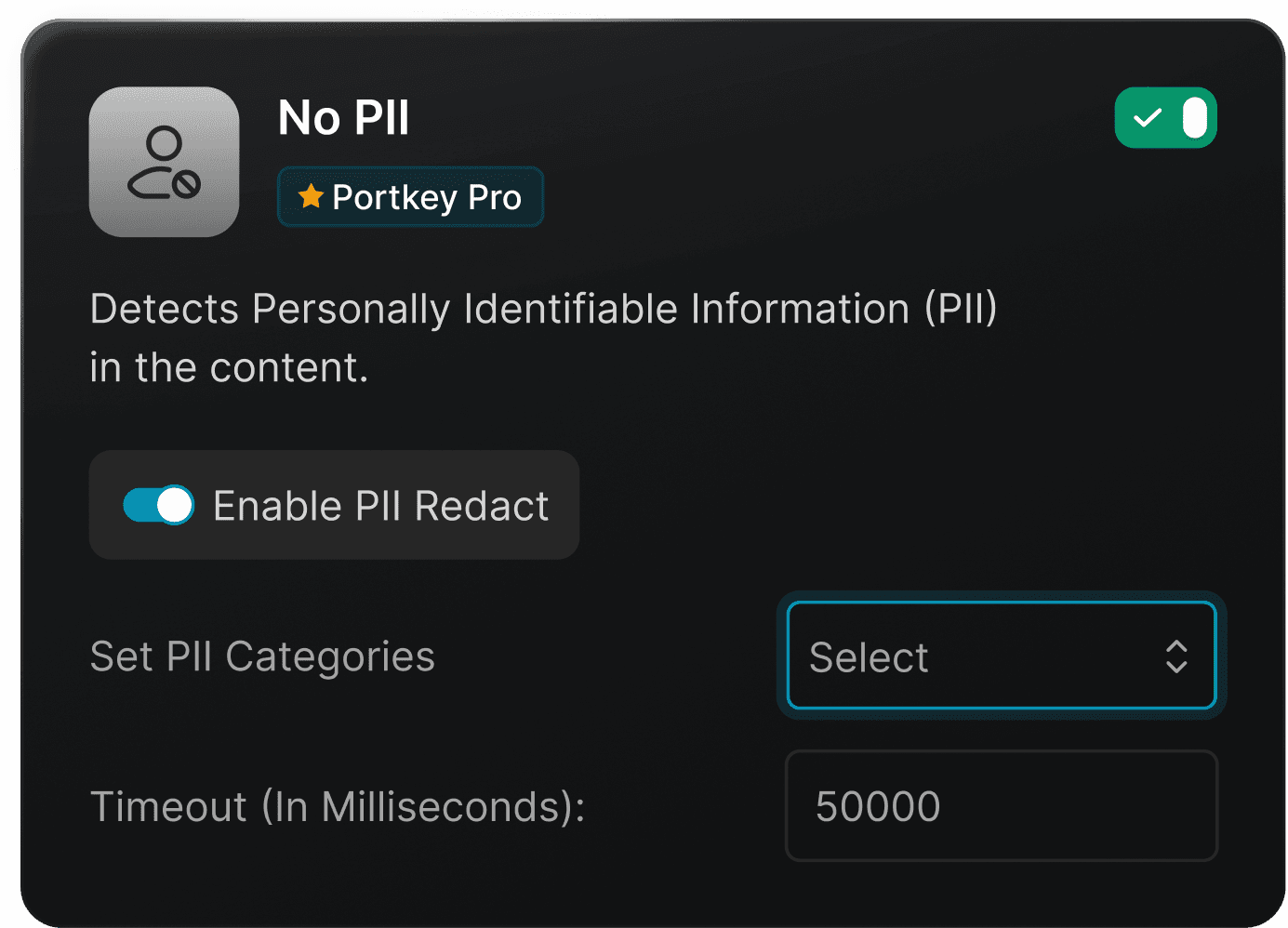

Runtime policy enforcement

Enforce PII redaction, content filtering, and compliance policies in one place

Stop unauthorized tool invocations before they execute

Apply consistent policies across LLM and MCP traffic

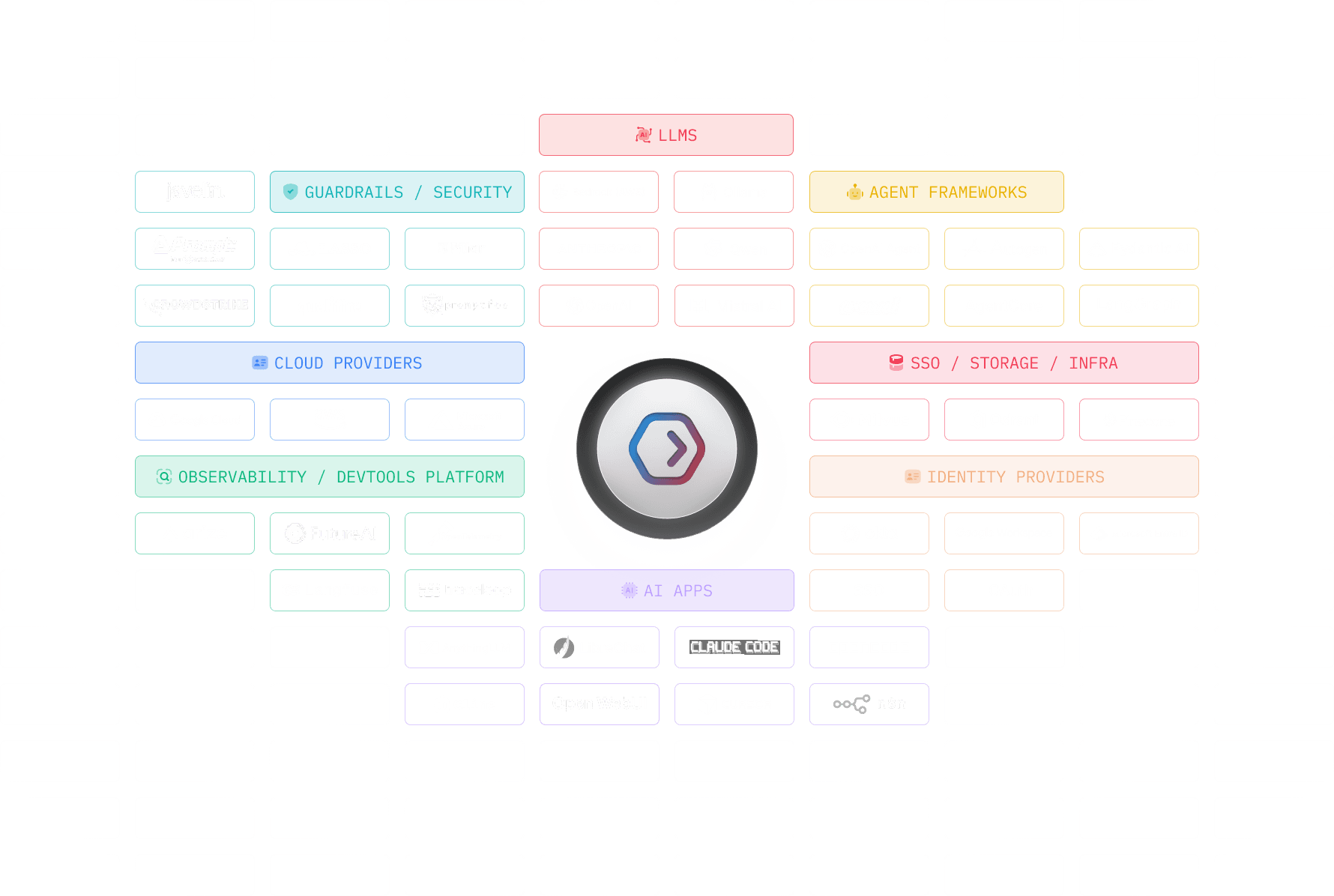

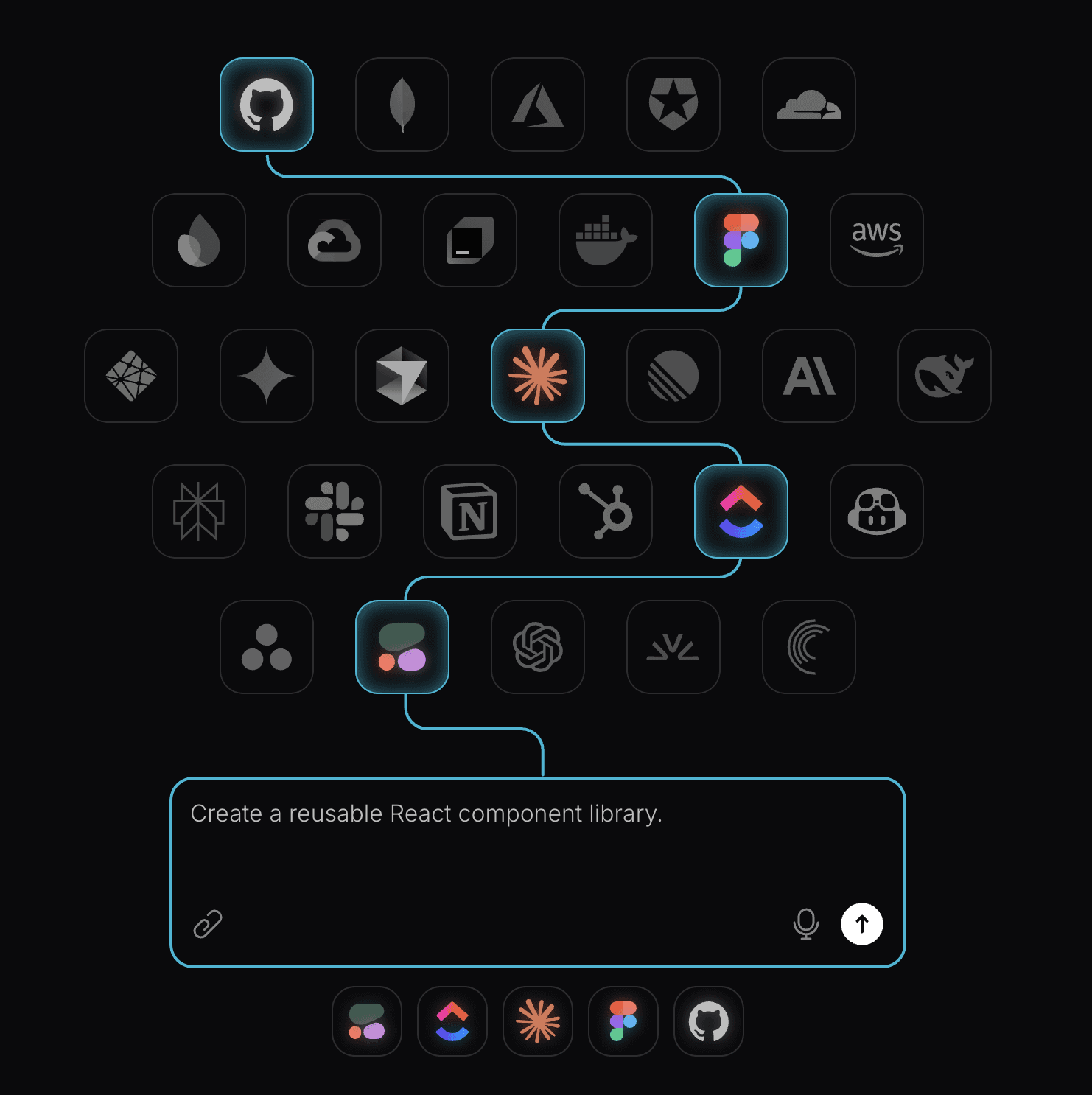

Portkey's MCP Gateway integrates with the agent frameworks, identity providers, guardrails, and tools you already use.

Expose internal MCP tools without exposing credentials

Share secure, controlled access to your internal MCP servers. Authenticate users through Portkey and manage permissions at the team level, so credentials never leave the gateway.

Scale MCP access across your org

Give your entire org access to your MCP servers through the MCP Gateway. Users authenticate with your existing IdP, so there is no additional onboarding. All traffic routes through the gateway.

Bring vendor tools under your governance

Integrate vendor and partner MCP servers using OAuth, API keys, or custom auth. Bring them under the same governance and observability model as internal tools.

Connect, govern, and share MCP tools from multiple servers across teams in your organization.

Secure by design

End-to-end encryption and SOC 2 compliance. Run it as SaaS, in your private cloud or VPC, or fully self-hosted.

One control plane for LLMs and MCP

Integrate with Portkey's AI Gateway to manage your models and tools together, with unified observability and policy.

Open-source and community-driven

Built in the open with hundreds of contributors. Inspect the code, shape the roadmap, and deploy with confidence.