This page compares the best Cloudflare AI Gateway alternatives for teams building and operating AI applications at scale. It covers tools that offer different approaches to routing, platform control, and operational ownership.

What is Cloudflare AI Gateway?

Cloudflare AI Gateway is a managed generative AI gateway built on Cloudflare’s global edge network. It sits between applications and LLM providers, providing a unified interface for routing requests, caching responses, controlling traffic, and collecting usage analytics.

Strengths

Unified access to popular providers:

Supports routing to popular AI providers such as OpenAI, Azure OpenAI, Workers AI, Hugging Face, Replicate, and other provider endpoints.

Built-in caching:

Cloudflare caches responses to repeated requests and serves them directly from the cache, which reduces provider costs and improves latency.
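To make the caching idea concrete, here is a minimal sketch of an exact-match response cache with a time-to-live. It is not Cloudflare's implementation. The `ResponseCache` class, the 300-second default TTL, and the SHA-256-over-canonical-JSON keying scheme are illustrative assumptions, and `fake_provider` stands in for a real LLM call.

```python
import hashlib
import json
import time


class ResponseCache:
    """Exact-match response cache with a time-to-live (illustrative sketch only)."""

    def __init__(self, ttl_seconds: float = 300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable clock so expiry can be tested
        self._store = {}  # cache key -> (expires_at, response)

    @staticmethod
    def key_for(request: dict) -> str:
        # sort_keys=True makes requests with the same fields in a different order hash identically
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request: dict, call_provider):
        """Return (response, cache_hit); call the provider only on a miss or after expiry."""
        key = self.key_for(request)
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1], True
        response = call_provider(request)
        self._store[key] = (now + self.ttl, response)
        return response, False


# Usage with a stand-in provider function (hypothetical; no real API is called)
def fake_provider(request: dict) -> dict:
    return {"text": f"echo: {request['messages'][-1]['content']}"}


cache = ResponseCache(ttl_seconds=60)
req = {"model": "example-model", "messages": [{"role": "user", "content": "Hi"}]}
print(cache.get_or_call(req, fake_provider))  # miss: provider is called
print(cache.get_or_call(req, fake_provider))  # hit: served from the cache
```

Exact-match keys only help when requests repeat byte-for-byte after canonicalization; any change to the prompt or parameters produces a new key.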

Traffic control:

Includes rate limiting and retry/fallback configurations to protect applications from spikes, quota exhaustion, and abuse.
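Rate limiting at a gateway usually means counting requests per client and rejecting the excess. The sketch below uses a token bucket, one common technique; it is not necessarily the algorithm Cloudflare uses, and the per-client keying, capacity of 10, and refill rate of 1 request/second are assumptions for illustration.

```python
import time


class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, sustained rate of `rate` requests/second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # a gateway would typically respond with HTTP 429 here


# One bucket per API key or client (hypothetical keying scheme)
buckets: dict[str, TokenBucket] = {}


def admit(client_id: str) -> bool:
    if client_id not in buckets:
        buckets[client_id] = TokenBucket(capacity=10, rate=1.0)
    return buckets[client_id].allow()
```

A token bucket tolerates short bursts while still bounding the long-run rate, which suits spiky LLM traffic better than a hard per-second cap.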

Security integration:

Inherits Cloudflare's existing edge security controls for DDoS protection, API key proxying, and global request filtering.

Why teams look for Cloudflare AI Gateway alternatives

The production challenges

Limited governance capabilities

Does not include built-in enterprise governance features like RBAC, policy-as-code, audit trails, or workspace separation.

Limited visibility

Provides application-level metrics and logs, but lacks deep AI observability such as token-level tracing, prompt analysis, or cost attribution across teams and workloads.

Basic safety filters

Supports simple content filtering and request controls, but complex compliance, safety, or moderation workflows require additional systems.

Limited provider breadth

Primarily supports popular managed model providers, with limited support for lesser-known providers and open-source or self-hosted LLMs.

Top Cloudflare AI Gateway Alternatives

| Platform | Focus Area | Key Features | Ideal For |
|---|---|---|---|
| Portkey | End-to-end production control plane; open source | Unified API for 1,600+ LLMs, observability, guardrails, governance, prompt management, MCP integration | GenAI builders and enterprises scaling to production |
| OpenRouter | Developer-first multi-model routing | Unified API for multiple LLMs, simple routing, community model access | Individual developers and small teams experimenting with multiple models |
| LiteLLM | Open-source LLM proxy | OpenAI-compatible proxy supporting 100+ LLMs, OSS extensibility | Platform teams running multi-provider LLM routing on self-managed infrastructure |
| TrueFoundry AI Gateway | MLOps workflow management | Model deployment, serving, and monitoring; integrates with internal ML pipelines | ML and platform engineering teams |
| Kong AI Gateway | API gateway with AI routing extensions | API lifecycle management, rate limiting, policy enforcement via plugins | Enterprises already using Kong for APIs |
| Custom Gateway Solutions | In-house or OSS-built layers | Fully customizable; requires ongoing maintenance and scaling effort | Advanced engineering teams needing full control |

In-Depth Analysis of the Top Alternatives

The sections below cover each solution's core strengths, limitations, pricing, typical users, and market reputation to help teams choose the right gateway for their GenAI production stack.

Portkey

Portkey is an AI Gateway and production control plane built specifically for GenAI workloads. It provides a single interface to connect, observe, and govern requests across 1,600+ LLMs. Portkey extends the gateway with observability, guardrails, governance, and prompt management, enabling teams to deploy AI safely and at scale.

Strengths

Unified API layer

A single interface for 1,600+ LLMs and embeddings across OpenAI, Anthropic, Mistral, Gemini, Cohere, Bedrock, Azure, and local deployments.

Deep observability

Detailed logs, latency metrics, token and cost analytics by app, team, or model.
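Cost attribution boils down to pricing each logged request by its token counts and grouping the totals by a dimension such as team, app, or model. The sketch below assumes a simple log record shape and uses made-up per-million-token prices for two hypothetical models; real provider prices differ and change often.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1M tokens (placeholders, not real provider pricing)
PRICES_PER_MTOK = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 3.00, "output": 15.00},
}


@dataclass
class LogEntry:
    team: str
    app: str
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(entry: LogEntry) -> float:
    """USD cost of one request, computed from its token counts."""
    p = PRICES_PER_MTOK[entry.model]
    return (entry.input_tokens * p["input"] + entry.output_tokens * p["output"]) / 1_000_000


def cost_by(entries, dimension: str) -> dict:
    """Sum request costs grouped by a LogEntry field such as 'team', 'app', or 'model'."""
    totals = defaultdict(float)
    for e in entries:
        totals[getattr(e, dimension)] += request_cost(e)
    return dict(totals)


logs = [
    LogEntry("search", "web", "model-a", 1_000_000, 0),
    LogEntry("search", "web", "model-b", 0, 100_000),
    LogEntry("support", "bot", "model-a", 200_000, 200_000),
]
print(cost_by(logs, "team"))
print(cost_by(logs, "model"))
```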

Guardrails and moderation

Request and response filters, jailbreak detection, PII redaction, and policy-based enforcement.
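As a rough illustration of where a redaction step sits, the sketch below masks email addresses and phone numbers in text before it would be forwarded to a provider (or returned to a user). The two regular expressions are deliberately simple assumptions; production PII detection typically relies on far more robust detectors, and this is not Portkey's implementation.

```python
import re

# Deliberately simple patterns (assumption); real PII detectors cover many more formats
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"(?:\+\d{1,3}[ -]?)?\(?\d{3}\)?[ -]?\d{3}[ -]?\d{4}"),
}


def redact(text: str) -> tuple[str, dict]:
    """Replace each match with a [LABEL] placeholder; return the new text and per-label counts."""
    counts = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


prompt = "Contact jane.doe@example.com or 555-123-4567 about the refund."
print(redact(prompt))
```

Because the same function can run on both the outgoing prompt and the model's response, one policy covers both directions of traffic.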

Governance and access control

Workspaces, roles, data residency, audit trails, and SSO/SCIM integration.

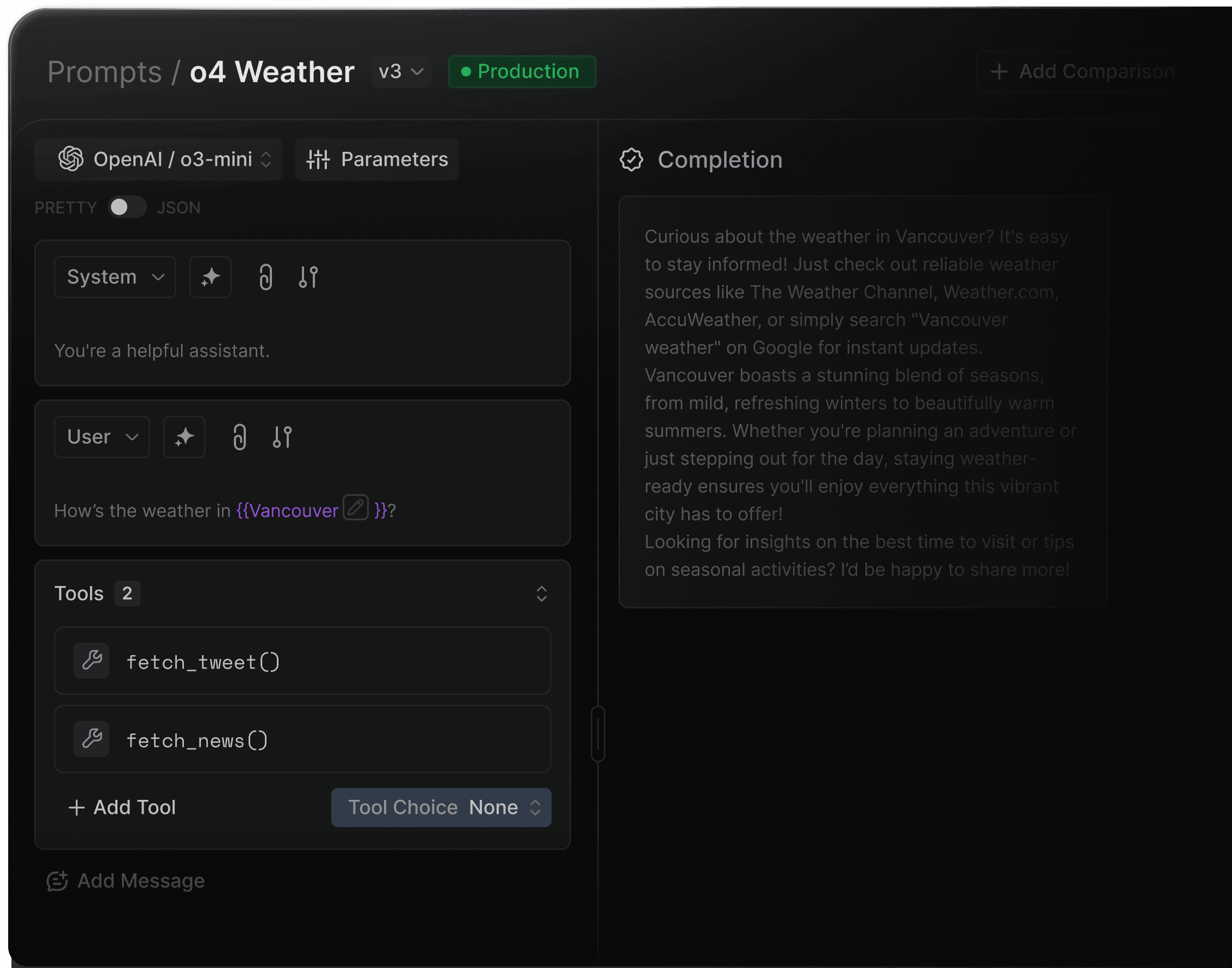

Prompt management and versioning

Reusable templates, variable substitution, and environment promotion.

Multi-model routing and reliability controls

Latency- or cost-based routing, fallbacks, canary deployments, and circuit breakers.
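Fallbacks and circuit breakers work together: the gateway tries providers in priority order, and a breaker temporarily skips a provider after repeated failures instead of waiting on it every time. This is a minimal sketch; the threshold of 3 failures, the 30-second cooldown, and the `call_with_fallback` helper are assumptions, not any vendor's defaults or API.

```python
import time
from typing import Callable, Sequence


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; permits a trial call after `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0, clock=time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def available(self) -> bool:
        if self.opened_at is None:
            return True
        # Half-open: once the cooldown elapses, let a request through to probe recovery
        return self.clock() - self.opened_at >= self.cooldown

    def record(self, ok: bool) -> None:
        if ok:
            self.failures, self.opened_at = 0, None
        else:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open and restart the cooldown


def call_with_fallback(request, providers: Sequence[tuple[str, Callable]], breakers: dict):
    """Try providers in priority order, skipping open circuits; return (provider_name, result)."""
    errors = []
    for name, call in providers:
        breaker = breakers[name]
        if not breaker.available():
            errors.append(f"{name}: circuit open")
            continue
        try:
            result = call(request)
        except Exception as exc:  # any provider error triggers fallback in this sketch
            breaker.record(False)
            errors.append(f"{name}: {exc}")
            continue
        breaker.record(True)
        return name, result
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```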

MCP integration

Register tools and servers with built-in observability and governance for agent use cases.

Developer experience

Modern SDKs (Python, JS, TS), streaming support, evaluation hooks, and test harnesses.

Limitations

Primarily designed for production teams, so lightweight prototypes may find it more advanced than needed.

Enterprise governance features (e.g., policy-as-code, regional data residency) are part of higher-tier plans.

Pricing Structure

Portkey offers a usage-based pricing model with a free tier for initial development and testing. Enterprise plans include advanced governance, audit logging, and dedicated regional deployments.

Ideal For / Typical Users

GenAI builders, enterprise AI teams, and platform engineers running multi-provider LLM workloads who need observability, compliance, and reliability in production.

4.8/5 on G2 — praised for its developer-friendly SDKs, observability depth, and governance flexibility.

OpenRouter

OpenRouter is a developer-focused AI gateway that provides a single API for accessing and routing requests across multiple LLM providers. It abstracts provider-specific APIs and billing behind a unified endpoint, making it easy to experiment with different models without managing separate accounts or credentials.

Strengths

Unified access and billing to LLM providers:

A single API and pricing layer that makes it easy to manage multiple providers and enables fast model switching and easy comparison during experimentation.

Minimal setup:

No infrastructure to manage; developers can start routing requests immediately.

High throughput at scale:

Designed to handle very large request volumes.

Automatic failover:

Requests can be transparently routed around provider outages or errors to maintain availability.

Limitations

Limited observability, with no deep tracing, token-level debugging, or cost attribution by team, app, or environment.

Minimal governance and access controls, which makes it difficult to operate as an internal platform across large teams or in regulated environments.

No built-in guardrails such as safety filters, PII redaction, moderation pipelines, or policy enforcement.

No native support for prompt versioning, templating, environment promotion, or approval workflows.

Routing logic is constrained, with limited control beyond provider or model selection.

Pricing Structure

Usage-based pricing with plan-dependent access to models, providers, and routing.

Ideal For / Typical Users

Individual developers and small teams looking for easy multi-model access and routing without managing provider accounts or infrastructure.

Public review data is limited. OpenRouter is generally well-regarded in developer communities for ease of use and broad model access.

LiteLLM

LiteLLM is an open-source, self-hosted AI gateway that provides an OpenAI-compatible interface for routing requests across multiple LLM providers. It is commonly deployed as an internal gateway layer that teams run and operate themselves.

Strengths

Unified access to LLM providers:

A single OpenAI-compatible API that enables fast model switching and easy provider comparison during experimentation.

Self-hosted and open source:

Full control over deployment, networking, and data flow.

Extensible architecture:

Can be integrated with custom logging, auth, or policy layers.

Strong community adoption:

Widely used as a lightweight internal gateway.

Limitations

Requires teams to manage infrastructure, scaling, and availability themselves.

Observability is basic by default; advanced token analytics, tracing, and cost attribution require additional tooling.

No native enterprise governance such as RBAC, workspaces, budgets, or audit logs.

No native support for prompt versioning, templating, environment promotion, or approval workflows.

Operational complexity increases significantly as usage scales across teams.

Pricing Structure

Free, open-source self-hosting with paid enterprise plans for hosted and advanced capabilities.

Ideal For / Typical Users

Teams routing across multiple LLM providers while managing the rest of the infrastructure in-house, especially platform teams comfortable operating self-hosted gateways.

Public marketplace reviews are limited. LiteLLM is widely discussed and adopted in open-source and developer communities.

TrueFoundry AI Gateway

TrueFoundry is an MLOps platform that helps teams deploy, monitor, and manage machine learning models and LLM-based applications.

Its AI Gateway component is part of a broader MLOps suite, focused on infrastructure automation, model deployment, and workflow management rather than pure multi-provider orchestration.

The gateway is positioned for teams that already have ML pipelines and need a controlled way to serve LLMs within those workflows.

Strengths

End-to-end MLOps integration:

Tightly connected with model deployment, experiment tracking, and model registry features.

Strong internal infrastructure controls:

Autoscaling, rollout strategies, and Kubernetes-native deployments for teams that prefer infrastructure ownership.

Custom model hosting:

Supports deploying fine-tuned or proprietary LLMs alongside hosted providers.

Monitoring and alerts:

Metrics for model performance, resource usage, and API health within the same interface.

Developer workflows:

CI/CD pipelines, environment promotions, and reproducible deployment templates.

Limitations

Primarily built for ML/MLOps teams, not general application developers.

Less focus on cross-provider LLM routing compared to modern AI gateways.

Lacks granular guardrails, policy governance, and cost controls expected in enterprise AI gateways.

Requires infrastructure management (Kubernetes, cloud resources), which may add overhead for teams wanting a hosted solution.

Observability focuses on ML metrics rather than request-level LLM observability.

Pricing Structure

No broad public free tier; pricing is generally provided on request.

Ideal For / Typical Users

ML platform teams, data science groups, and enterprises building custom model pipelines who want model hosting and serving integrated with their DevOps/MLOps workflows.

Appreciated for its deployment automation and developer workflows; some users note a steeper learning curve around infrastructure setup.

Kong AI Gateway

Kong is a widely adopted API gateway and service connectivity platform used by engineering teams to manage API traffic at scale. Its AI Gateway capabilities are delivered through plugins and extensions built on top of Kong Gateway and Kong Mesh.

Strengths

Robust API management foundation:

Industry-standard rate limiting, authentication, transformations, and traffic policies.

Plugin ecosystem:

AI-related plugins support routing to LLM providers, applying policies, and transforming requests.

Security controls:

Integrates with WAFs, OAuth providers, RBAC, and audit frameworks.

Environment flexibility:

Deployable as OSS, enterprise self-hosted, or cloud-managed via Kong Konnect.

Limitations

AI capabilities are extensions rather than the core design, so Kong lacks deep observability and cost intelligence for LLM workloads.

Limited multi-provider LLM orchestration, routing logic, or model-aware optimizations (latency-based routing, canaries, cost-aware fallback).

No built-in LLM guardrails, jailbreak detection, evaluations, or policy enforcement beyond generic API rules.

Requires configuration and DevOps involvement; not optimized for rapid GenAI iteration.

Governance and monitoring are API-centric, not request/token-centric like modern AI gateways.

Pricing Structure

AI Gateway capabilities are part of Kong's API gateway offering.

Ideal For / Typical Users

Enterprises already using Kong as their API gateway or service mesh who want to extend existing infrastructure to support basic LLM routing, without adding a new platform.

Praised for reliability and API governance; not typically evaluated for AI-specific workloads.

Custom Gateway Solutions

Some engineering teams choose to build their own AI gateway or proxy layer using open-source components, internal microservices, or cloud primitives.

These “custom gateways” often start as simple proxies for OpenAI or Anthropic calls and gradually evolve into internal platforms handling routing, logging, and key management.

While they offer full control, they require significant ongoing engineering, security, and maintenance investment to keep up with the rapidly expanding LLM ecosystem.
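The "simple proxy" stage of a custom gateway is often little more than a routing table plus server-side key lookup, so applications never hold provider credentials directly. The sketch below assumes a `provider/model` naming convention; the provider names, placeholder URLs, and environment variable names are all hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Upstream:
    base_url: str
    key_env: str  # name of the environment variable holding the provider key


# Hypothetical routing table: URLs and variable names are placeholders
UPSTREAMS = {
    "provider-a": Upstream("https://provider-a.example/v1", "PROVIDER_A_KEY"),
    "provider-b": Upstream("https://provider-b.example/v1", "PROVIDER_B_KEY"),
}


def resolve(model_id: str, env: Mapping[str, str] = os.environ) -> dict:
    """Map 'provider/model' to the upstream URL and the server-side credential for it."""
    provider, sep, model = model_id.partition("/")
    if not sep or provider not in UPSTREAMS:
        raise ValueError(f"unroutable model id: {model_id!r}")
    upstream = UPSTREAMS[provider]
    api_key = env.get(upstream.key_env)
    if not api_key:
        raise KeyError(f"missing credential {upstream.key_env}")
    return {"provider": provider, "model": model,
            "base_url": upstream.base_url, "api_key": api_key}
```

Everything listed in the limitations below (retries, logging, dashboards, guardrails, key rotation) has to be layered on top of a core like this one.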

Strengths

Full customization:

Every component can be tailored to internal needs.

Complete control over data flow:

Easy to enforce organization-specific data policies or network isolation.

Deep integration with internal stacks:

Fits seamlessly into proprietary systems, legacy infrastructure, or internal developer platforms.

Potentially lower cost at a very small scale:

When supporting only a single provider or simple routing logic.

Limitations

High engineering burden: implementing and maintaining routing, retries, fallbacks, logging, key rotation, credentials, dashboards, and guardrails is complex.

Difficult to keep up with fast-moving LLM features such as function calling, new provider APIs, model updates, safety settings, embeddings, and multimodal inputs.

No built-in observability: requires building token tracking, latency metrics, per-user analytics, cost dashboards, and log pipelines.

Security and governance debt: RBAC, workspace separation, policy enforcement, audit logs, and compliance controls require significant effort.

Reliability challenges: implementing circuit breakers, provider failover, shadow testing, canary routing, and load balancing is nontrivial.

Higher opportunity cost: engineers spend time maintaining infrastructure rather than building AI products.

Pricing Structure

No fixed pricing; the cost is measured in engineering hours, cloud resources, and operational overhead. Over time, maintaining a custom gateway often costs more than adopting a purpose-built platform, especially once governance, observability, and multi-provider support become requirements.

Ideal For / Typical Users

Highly specialized engineering teams with unique compliance or architectural constraints that cannot be met by commercial platforms, and who have the bandwidth to maintain internal infrastructure.

Why Portkey is different

Governance at scale

Built for enterprise control from day one

Workspaces and role-based access

Budgets, rate limits, and quotas

Data residency controls

SSO, SCIM, audit logs

Policy-as-code for consistent enforcement

HIPAA and GDPR compliance
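Budget enforcement differs from cost reporting in that it runs before a request is forwarded. The sketch below charges an up-front cost estimate against a per-workspace limit and rejects requests that would exceed it; the `Budget` class, the workspace names, and the limits are hypothetical, and a real gateway would also reconcile the estimate against actual token usage once the response arrives.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when a request would push a workspace past its spending limit."""


@dataclass
class Budget:
    limit_usd: float
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> None:
        # Check before charging so a rejected request does not consume budget
        if self.spent_usd + cost_usd > self.limit_usd:
            raise BudgetExceeded(
                f"limit {self.limit_usd:.2f} USD, already spent {self.spent_usd:.2f} USD"
            )
        self.spent_usd += cost_usd


# Hypothetical per-workspace budgets
budgets = {"search-team": Budget(limit_usd=1.0), "support-team": Budget(limit_usd=5.0)}


def authorize(workspace: str, estimated_cost_usd: float) -> bool:
    """Return True if the request fits within the workspace budget (and record the spend)."""
    try:
        budgets[workspace].charge(estimated_cost_usd)
    except BudgetExceeded:
        return False
    return True
```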

MCP-native capabilities

Portkey is the first AI gateway designed for MCP at scale. It provides:

MCP server registry

Tool and capability discovery

Governance over tool execution

Observability for tool calls and context loads

Unified routing for both model calls and tool invocations
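Governance over tool execution can be pictured as an allowlist checked on every call, with discovery filtered by the same grants and every decision written to an audit log. This sketch does not implement the MCP protocol itself; the `ToolRegistry` class, the `server.tool` naming, and the workspace grants are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolRegistry:
    """Hypothetical registry: tools are registered per server, access is granted per workspace."""
    tools: dict = field(default_factory=dict)  # "server.tool" -> callable
    grants: dict = field(default_factory=dict)  # workspace -> set of "server.tool" ids
    audit_log: list = field(default_factory=list)  # (workspace, tool_id, decision)

    def register(self, server: str, name: str, fn: Callable) -> None:
        self.tools[f"{server}.{name}"] = fn

    def grant(self, workspace: str, tool_id: str) -> None:
        self.grants.setdefault(workspace, set()).add(tool_id)

    def discover(self, workspace: str) -> list:
        # Discovery only returns tools this workspace is allowed to call
        allowed = self.grants.get(workspace, set())
        return sorted(t for t in self.tools if t in allowed)

    def invoke(self, workspace: str, tool_id: str, **kwargs):
        allowed = tool_id in self.grants.get(workspace, set())
        self.audit_log.append((workspace, tool_id, "allowed" if allowed else "denied"))
        if not allowed:
            raise PermissionError(f"{workspace} may not call {tool_id}")
        return self.tools[tool_id](**kwargs)


reg = ToolRegistry()
reg.register("files", "read", lambda path: f"contents of {path}")
reg.register("files", "delete", lambda path: f"deleted {path}")
reg.grant("support", "files.read")
print(reg.discover("support"))
```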

Comprehensive visibility into every request

Token and cost analytics

Latency traces

Transformed logs for debugging

Workspace, team, and model-level insights

Error clustering and performance trends

Unified SDKs and APIs

A single interface for 1,600+ LLMs and embeddings across OpenAI, Anthropic, Mistral, Gemini, Cohere, Bedrock, Azure, and local deployments.

Guardrails and safety

PII redaction

Jailbreak detection

Toxicity and safety filters

Request and response policy checks

Moderation pipelines for agentic workflows

Prompt and context management

Template versioning, variable substitution, environment promotion, and approval flows to maintain clean, reproducible prompt pipelines.

Portkey unifies everything teams need to build, scale, and govern GenAI systems, with the reliability and control that production demands.

Reliability automation

Sophisticated failover and routing built into the gateway:

Fallbacks and retries

Canary and A/B routing

Latency and cost-based selection

Provider health checks

Circuit breakers and dynamic throttling
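Canary and A/B routing need a split that is both weighted and stable, so the same user keeps hitting the same variant across requests. A common approach, sketched below under that assumption, hashes a request or user ID into a point in the total weight range; the `pick_variant` helper and the 90/10 split are illustrative, not a specific gateway's configuration.

```python
import hashlib


def pick_variant(request_id: str, variants: list[tuple[str, float]]) -> str:
    """Deterministically map an id onto weighted variants; the same id always gets the same variant."""
    total = sum(weight for _, weight in variants)
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a point in [0, total]
    point = int.from_bytes(digest[:8], "big") / 2**64 * total
    cumulative = 0.0
    for name, weight in variants:
        cumulative += weight
        if point < cumulative:
            return name
    return variants[-1][0]  # guard against floating-point rounding at the upper edge


split = [("stable-model", 0.9), ("canary-model", 0.1)]
print(pick_variant("user-42", split))
```

Raising the canary weight gradually (for example 0.1, then 0.25, then 0.5) shifts traffic while existing users in the canary cohort stay there.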

Integrations

Portkey connects to the full GenAI ecosystem through a unified control plane. Every integration works through the same consistent gateway. This gives teams one place to manage routing, governance, cost controls, and observability across their entire AI stack.

Portkey supports integrations with all major LLM providers, including OpenAI, Anthropic, Mistral, Google Gemini, Cohere, Hugging Face, AWS Bedrock, Azure OpenAI, and many more. These connections cover text, vision, embeddings, streaming, and function calling, and extend to open-source and locally hosted models.

Beyond models, Portkey integrates directly with the major cloud AI platforms. Teams running on AWS, Azure, or Google Cloud can route requests to managed model endpoints, regional deployments, private VPC environments, or enterprise-hosted LLMs—all behind the same Portkey endpoint.

Integrations with systems like Palo Alto Networks Prisma AIRS, Patronus, and other content-safety and compliance engines allow organizations to enforce redaction, filtering, jailbreak detection, and safety policies directly at the gateway level. These controls apply consistently across every model, provider, app, and tool.

Frameworks such as LangChain, LangGraph, CrewAI, and the OpenAI Agents SDK can route all of their model calls and tool interactions through Portkey, so agents inherit the same routing, guardrails, governance, retries, and cost controls as core applications.

Portkey integrates with vector stores and retrieval infrastructure, including Pinecone, Weaviate, Chroma, and LanceDB. This allows teams to unify their retrieval pipelines with the same policy and governance layer used for LLM calls, simplifying both RAG and hybrid search flows.

Tools such as Claude Code, Cursor, LibreChat, and OpenWebUI can send inference requests through Portkey, giving organizations full visibility into token usage, latency, cost, and user activity, even when these apps run on local machines.

For teams needing deep visibility, Portkey integrates with monitoring and tracing systems like Arize Phoenix, FutureAGI, Pydantic Logfire, and more. These systems ingest Portkey's standardized telemetry, allowing organizations to correlate model performance with application behavior.

Finally, Portkey connects with all major MCP clients, including Claude Desktop, Claude Code, Cursor, VS Code extensions, and any MCP-capable IDE or agent runtime.

Across all of these categories, Portkey acts as the unifying operational layer. It replaces a fragmented integration landscape with a single, governed, observable, and reliable control plane for the entire GenAI ecosystem.

Get started

Portkey gives teams a single control plane to build, scale, and govern GenAI applications in production with multi-provider support, built-in safety and governance, and end-to-end visibility from day one.