Precise RBAC for AI innovation

Secure your AI services with our RBAC system, which offers permissions from organization-wide policies to workspace-specific controls.

Powering 3000+ GenAI teams

Maintain the highest level of abstraction

Get a robust framework for managing large-scale AI development projects, with resource allocation, access control, and team management across your entire organization.

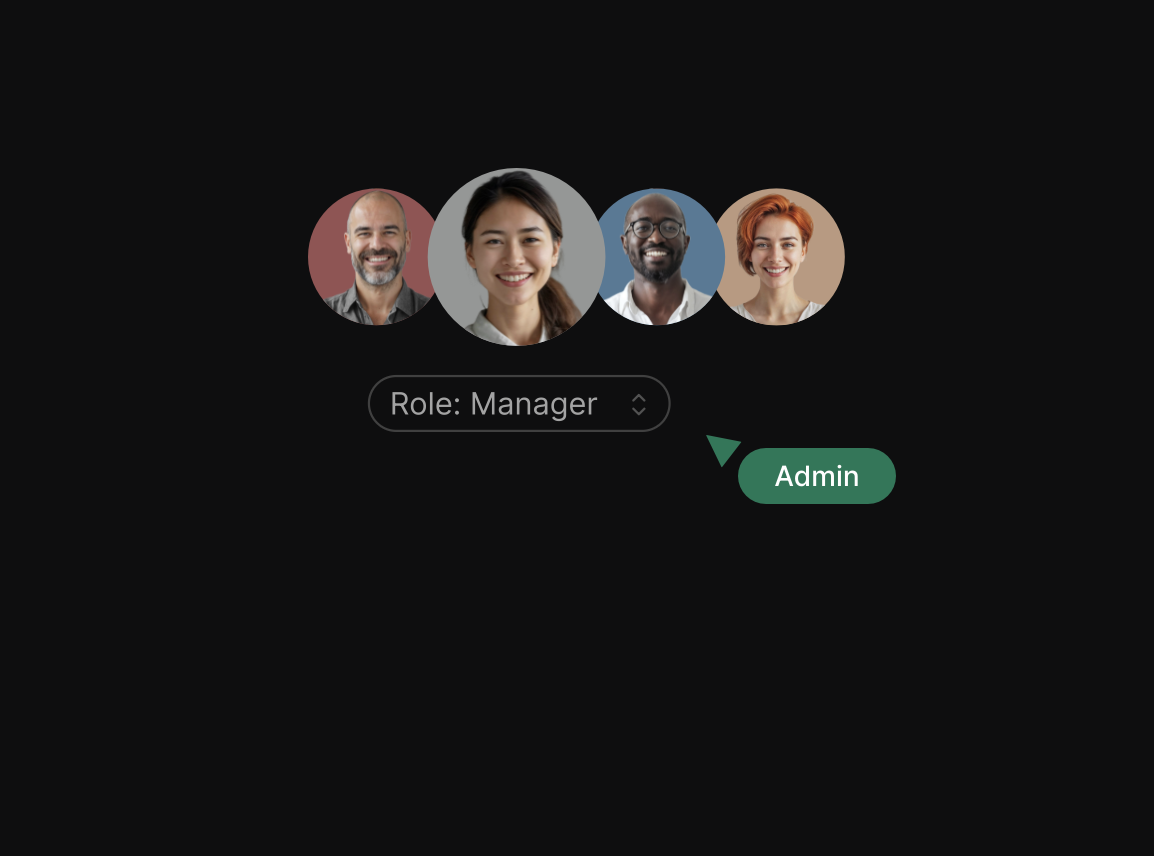

Hierarchical organization management

Assign owners and admins to oversee your AI development environment, ensuring clear roles and responsibilities for effectively managing teams, resources, and operations.

Granular team management with workspaces

Set up dedicated spaces with manager and member roles for precise control. Each workspace contains its own set of features – from logs to virtual keys – ensuring complete project separation.
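The two-level role model described above can be sketched roughly as follows. This is an illustrative sketch only, not Portkey's implementation or API: the action names (`view_logs`, `manage_virtual_keys`, `manage_members`), the `User` class, and the `can` helper are hypothetical, and the rule that organization owners and admins can act in every workspace is an assumption.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional


class OrgRole(Enum):
    OWNER = "owner"
    ADMIN = "admin"


class WorkspaceRole(Enum):
    MANAGER = "manager"
    MEMBER = "member"


# Hypothetical action names, chosen only for illustration.
WORKSPACE_PERMISSIONS = {
    WorkspaceRole.MANAGER: {"view_logs", "manage_virtual_keys", "manage_members"},
    WorkspaceRole.MEMBER: {"view_logs"},
}


@dataclass
class User:
    name: str
    org_role: Optional[OrgRole] = None
    # Maps workspace name -> role held in that workspace.
    workspace_roles: Dict[str, WorkspaceRole] = field(default_factory=dict)


def can(user: User, action: str, workspace: str) -> bool:
    """Return True if the user may perform the action in the workspace."""
    # Assumption: organization owners and admins can act in every workspace.
    if user.org_role is not None:
        return True
    role = user.workspace_roles.get(workspace)
    if role is None:
        # No role in this workspace means no access, which keeps projects separate.
        return False
    return action in WORKSPACE_PERMISSIONS[role]
```

In this sketch, a member of one workspace gets `False` for every action in any other workspace, which is the project separation the paragraph describes.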

Share controlled access

Define who accesses sensitive operations and data across your organization. Balance security with efficiency while maintaining smooth team operations.

Secure your internal operations

Authenticate and authorize operations using Admin API Keys for organization-wide control and Workspace API Keys for project-specific access.
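The split between the two key types can be pictured as a scope check. The sketch below is a simplified model, not Portkey's actual key format or API: the `ApiKey` class and `authorize` function are hypothetical, and it assumes an admin key is valid for any operation in the organization while a workspace key is valid only inside its own workspace.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ApiKey:
    kind: str                        # "admin" or "workspace"
    workspace: Optional[str] = None  # set only for workspace keys


def authorize(key: ApiKey, target_workspace: Optional[str]) -> bool:
    """Decide whether a key may act on a target.

    target_workspace=None stands for an organization-level operation.
    """
    if key.kind == "admin":
        # Assumption: admin keys cover organization-level and workspace operations.
        return True
    if key.kind == "workspace":
        # Workspace keys never reach organization-level operations or other workspaces.
        return target_workspace is not None and key.workspace == target_workspace
    # Unknown key kinds are rejected.
    return False
```

Denying by default for unknown key kinds keeps the check safe if new key types are introduced later.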

Enterprise-ready LLMOps platform

Highest standards for security & compliance

Portkey complies with stringent data privacy and security standards so that you can focus on innovation without worrying about data security.

Audited every quarter

100% secure on-prem deployment with full control

Deploy Portkey in your private cloud for enhanced security, control, and 100% data ownership.

The most popular open source AI Gateway

Portkey’s AI Gateway is actively maintained by 50+ contributors worldwide, bringing the cutting edge of AI work into the Gateway.

Portkey provides end-to-end support for Azure

Portkey plugs into your entire Azure ecosystem, so you can build and scale without leaving your existing infrastructure.

New Integration: Inference.net (wholesaler of LLM...

Kierra Westervelt • Oct 03, 2024

New Integration: DeepSeek AI on Gateway

Alfonso Workman • Oct 01, 2024

Enhance RAG Retrieval Success by 67% using...

Lincoln Geidt • Sep 30, 2024

OpenAI o1-preview and o1-mini on Portkey

Zain Aminoff • Sep 27, 2024

New Integration: Inference.net (wholesaler...

Omar Calzoni • Sep 23, 2024

Changelog

We’re open-source!

Join 50 other contributors in collaboratively developing Portkey’s open-source AI Gateway and push the frontier of production-ready AI.

Trusted by Fortune 500s & Startups

Portkey is easy to set up, and the ability for developers to integrate with LLMs is great. Overall, it has significantly sped up our development process.

Patrick L,

Founder and CPO, QA.tech

With 30 million policies a month, managing over 25 GenAI use cases became a pain. Portkey helped with prompt management, tracking costs per use case, and ensuring our keys were used correctly. It gave us the visibility we needed into our AI operations.

Prateek Jogani,

CTO, Qoala

Portkey stood out among AI Gateways we evaluated for several reasons: excellent, dedicated support even during the proof of concept phase, easy-to-use APIs that reduce time spent adapting code for different models, and detailed observability features that give deep insights into traces, errors, and caching.

AI Leader,

Fortune 500 Pharma Company

Portkey is a no-brainer for anyone using AI in their GitHub workflows. It has saved us thousands of dollars by caching tests that don't require reruns, all while maintaining a robust testing and merge platform. This prevents merging PRs that could degrade production performance. Portkey is the best caching solution for our needs.

Kiran Prasad,

Senior ML Engineer, Ario

Well done on creating such an easy-to-use and navigate product. It’s much better than other tools we’ve tried, and we saw immediate value after signing up. Having all LLMs in one place and detailed logs has made a huge difference. The logs give us clear insights into latency and help us identify issues much faster. Whether it's model downtime or unexpected outputs, we can now pinpoint the problem and address it immediately. This level of visibility and efficiency has been a game-changer for our operations.

Oras Al-Kubaisi,

CTO, Figg

Used by GenAI teams across the world!

Latest guides and resources

Beyond the Hype: The Enterprise AI Blueprint You Need Now (And Why Your AI Gateway...

Discover how top universities like Harvard and Princeton are scaling GenAI...

Portkey+MongoDB: The Bridge to Production-Ready...

Use Portkey's AI Gateway with MongoDB to integrate AI and manage data efficiently.

Bring Your Agents to Production with Portkey

MCP servers are multiplying at an overwhelming pace, and at scale they create an invisible sprawl.

Share controlled access across your org

Products

© 2025 Portkey, Inc. All rights reserved

HIPAA COMPLIANT

GDPR
