🎉 Introducing Model Catalog. Control every LLM your team uses. Learn More

Production Stack for Gen AI Builders

Portkey equips AI teams with everything they need to go to production - Gateway, Observability, Guardrails, Governance, and Prompt Management, all in one platform.

Observability

Logs

Guardrails

Prompt

Monitor LLM behavior, catch anomalies early, and manage usage proactively with our real-time observability dashboard.

Enabling 3000+ leading teams to build the future of GenAI

tokens processed daily

GitHub stars and counting

LLMs supported

"Portkey is a no-brainer for anyone using AI in their GitHub workflows. The reason is simple: the risk-reward balance is clear. Portkey has saved us thousands of dollars by caching tests that would otherwise run repeatedly without any changes"

Kiran P

Sr. Machine Learning Eng. Ario

Why Portkey ♥

Caching

Clear ROI

Easy Set-Up

Figg

Haptik

Qoala

Unified Access

Introducing the AI Gateway Pattern

End-to-end LLM Orchestration

Stop wasting time integrating models

Portkey lets you access 1,600+ LLMs via a unified API, so you can focus on building, not managing.

Eliminate the guesswork

Keep AI outputs in check

No need to hard-code prompts

Production-ready agent workflows

See what Portkey can do for your AI stack

Plug & Play

Integrate in a minute

Integrate Portkey in just 3 lines of code - no changes to your existing stack.

Node.js

Python

OpenAI JS

OpenAI Py

cURL

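    import Portkey from "portkey-ai";

    // No options are passed here, so credentials are assumed to come from the environment.
    const portkey = new Portkey();

    async function main() {
      // Request a chat completion for a single user message.
      const completion = await portkey.chat.completions.create({
        messages: [{ role: "user", content: "What's a Portkey?" }],
        model: "@openai/gpt-4.1"
      });
      console.log(completion.choices[0]);
    }

    main();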
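
The `model` value in the snippet above, `"@openai/gpt-4.1"`, appears to carry a provider prefix. As a rough illustration of how a single entry point could route on such a prefix, here is a minimal sketch. It is not Portkey's implementation: `adapters`, `parseModel`, and `chat` are hypothetical names, and the adapters return placeholder strings instead of calling any provider.

```javascript
// Hypothetical provider adapters; a real adapter would call the provider's API.
const adapters = {
  openai: async (model, messages) =>
    `openai:${model} got ${messages.length} message(s)`,
  anthropic: async (model, messages) =>
    `anthropic:${model} got ${messages.length} message(s)`,
};

// Split an "@provider/model" id into its two parts (assumed format).
function parseModel(id) {
  const match = /^@([^/]+)\/(.+)$/.exec(id);
  if (!match) throw new Error(`Unrecognized model id: ${id}`);
  return { provider: match[1], model: match[2] };
}

// One entry point for every provider: pick the adapter from the model id.
async function chat({ model: id, messages }) {
  const { provider, model } = parseModel(id);
  const adapter = adapters[provider];
  if (!adapter) throw new Error(`No adapter for provider: ${provider}`);
  return adapter(model, messages);
}

chat({
  model: "@openai/gpt-4.1",
  messages: [{ role: "user", content: "What's a Portkey?" }],
}).then(console.log); // prints "openai:gpt-4.1 got 1 message(s)"
```

Under this assumption, switching providers means changing only the model string; the calling code stays the same.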

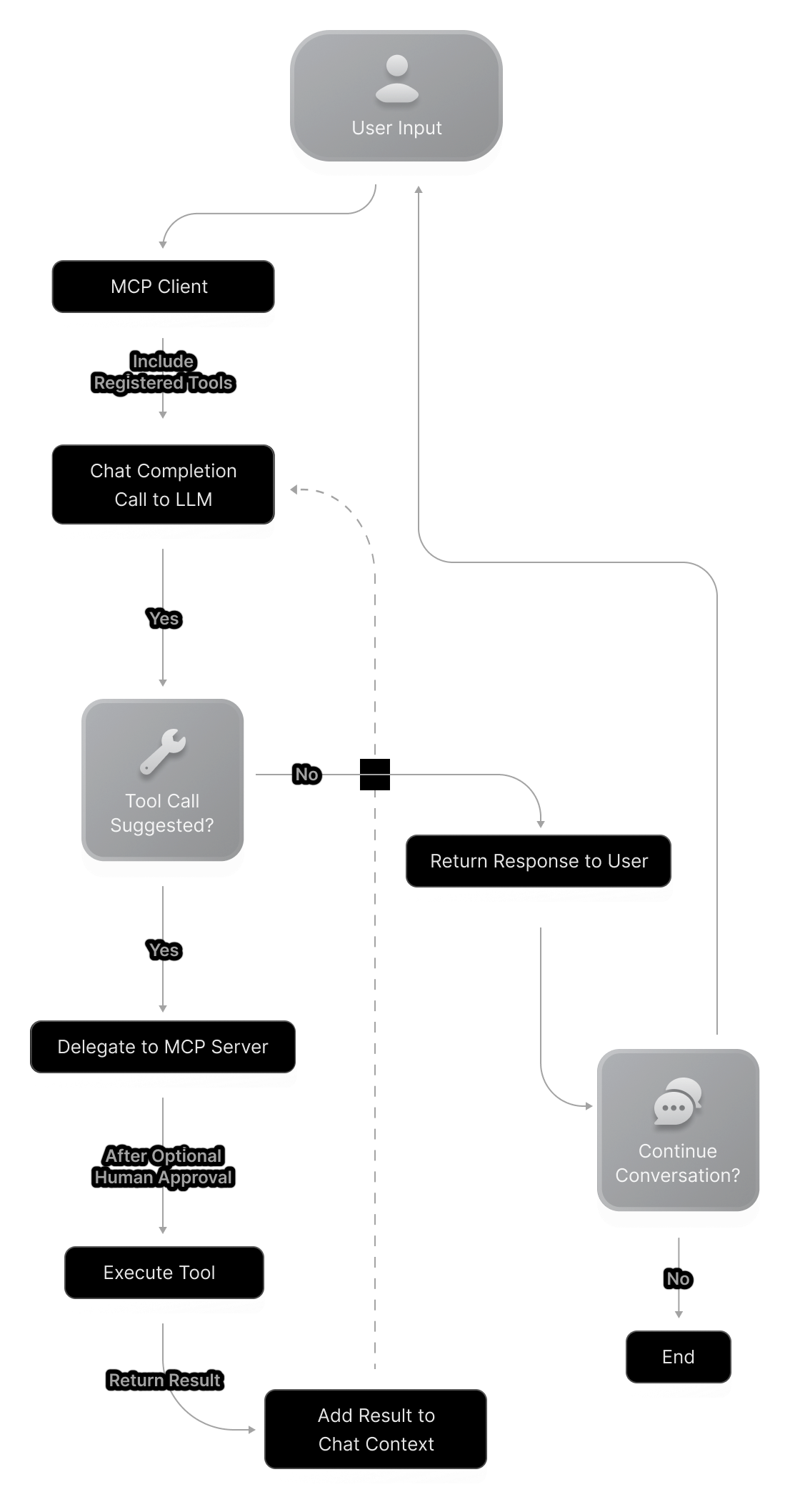

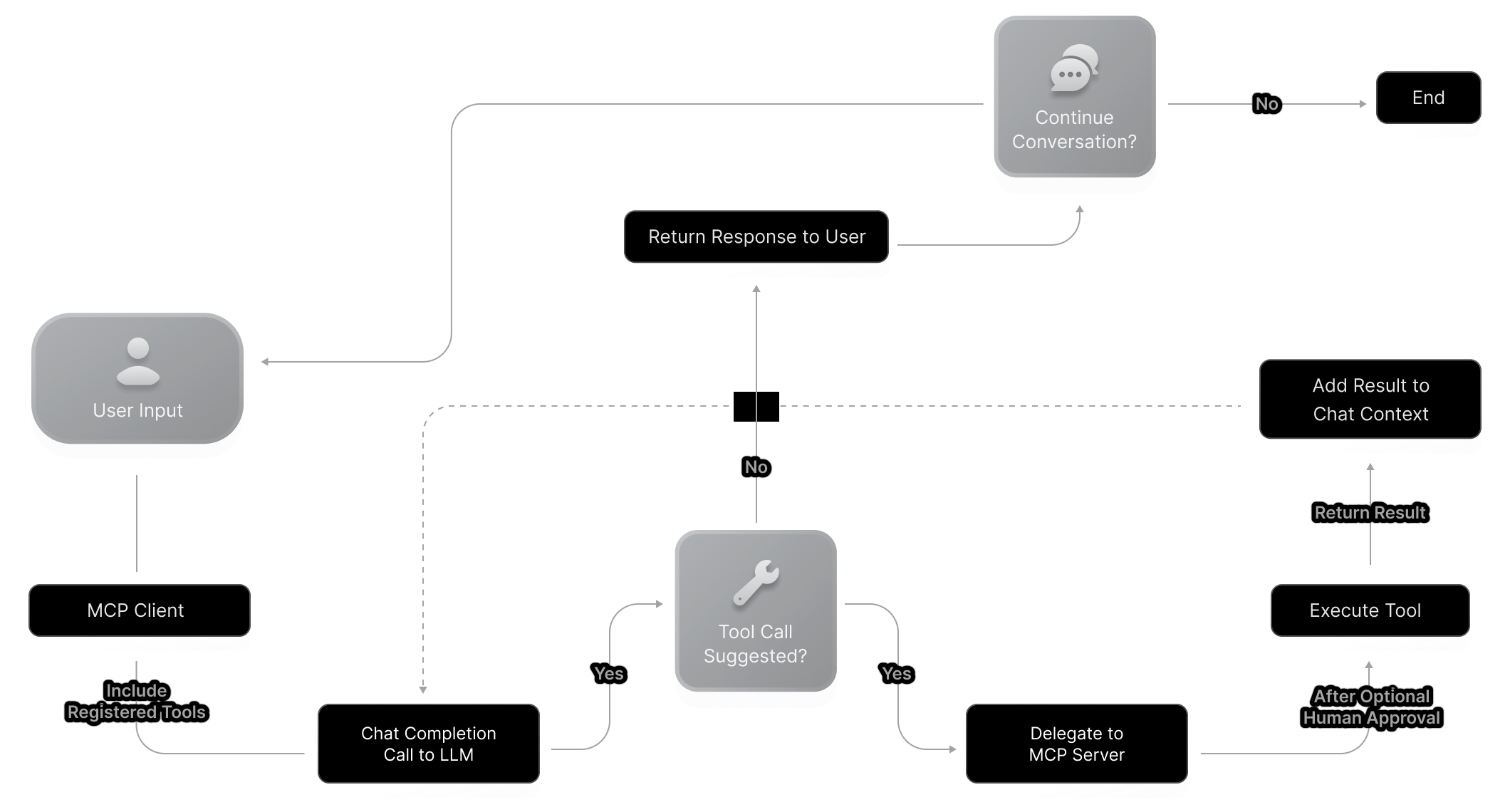

Model Context Protocol

Build AI Agents with Portkey's MCP Client

Tool calling shouldn’t slow you down. Portkey’s MCP client simplifies configurations, strengthens integrations, and eliminates ongoing maintenance, so you can focus on building, not fixing.

Community Driven

Open source with active community development

Enterprise Ready

Built for production use with security in mind

Future Proof

Evolving standard that grows with the AI ecosystem

Democratized Access

Take the driver's seat with AI Governance

Using Portkey, you can build a production-ready platform to manage, observe, and govern GenAI for multiple teams

Collaborate while staying secure

Organize your resources in a clear hierarchy and use Role-Based Access Control (RBAC) to keep collaboration seamless and secure.

Cut costs, not corners

Monitor performance and costs in real time. Set budget limits and optimize resource allocation to reduce AI expenses.
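
One way to picture a budget limit is a running total that is checked before each request. The sketch below is a hypothetical in-memory tracker, not Portkey's implementation: `BudgetTracker`, its methods, and the dollar figures are assumptions made purely for illustration.

```javascript
// Hypothetical in-memory budget tracker; names and amounts are illustrative.
class BudgetTracker {
  constructor(limitUsd) {
    this.limitUsd = limitUsd;
    this.spentUsd = 0;
  }

  // Record the cost of a completed request.
  record(costUsd) {
    this.spentUsd += costUsd;
  }

  // True if a request with the estimated cost still fits within the limit.
  canSpend(estimatedCostUsd) {
    return this.spentUsd + estimatedCostUsd <= this.limitUsd;
  }
}

const teamBudget = new BudgetTracker(10); // assumed limit of 10 USD
teamBudget.record(9.5);
console.log(teamBudget.canSpend(0.25)); // true
console.log(teamBudget.canSpend(1)); // false
```

A production setup would persist the totals and scope them per team or per key; the check itself stays this simple.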

"We evaluated multiple tools, but ultimately chose Portkey, and we’re glad we did. The platform has become an essential part of our workflow. Honestly, we can’t imagine working without it anymore!"

Deepanshu Setia

Machine Learning Lead

Keep it secure with PII redaction

Portkey automatically redacts sensitive data from your requests before they are sent to the LLM
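
To make the idea concrete, here is a minimal sketch of redacting request text before it is sent to a model. It is an assumption-laden illustration, not how Portkey detects PII: the two regular expressions, the `[EMAIL]` and `[SSN]` placeholders, and the `redact` and `redactMessages` helpers are all hypothetical, and real PII detection needs far broader coverage.

```javascript
// Illustrative patterns only; they cover just emails and US-style SSNs.
const PII_PATTERNS = [
  { label: "EMAIL", regex: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g },
  { label: "SSN", regex: /\b\d{3}-\d{2}-\d{4}\b/g },
];

// Replace every match with a bracketed placeholder such as [EMAIL].
function redact(text) {
  return PII_PATTERNS.reduce(
    (out, { label, regex }) => out.replace(regex, `[${label}]`),
    text
  );
}

// Redact the content of each chat message before it leaves the application.
function redactMessages(messages) {
  return messages.map((m) => ({ ...m, content: redact(m.content) }));
}

const safe = redactMessages([
  { role: "user", content: "Email jane@example.com about SSN 123-45-6789." },
]);
console.log(safe[0].content); // Email [EMAIL] about SSN [SSN].
```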

Stay in control with full visibility

Track every action with detailed activity logs across any resource, making it easy to monitor and investigate incidents.

One of the things I really appreciate about Portkey is how quickly it helps you scale reliability. It also centralizes prompt management and includes built-in observability and metrics, which makes monitoring and optimization much easier.

JD Fiscus

Director of R&D - AI, RVO Health

Single Sign-On

Onboard your teams instantly and have Portkey follow your governance rules from day 0.

We are heavy users of Portkey. They have thought about the needs of a company like ours and fit so well. Kudos on a great job done!

Abhishek Gutgutia - CTO, Adamx.ai

Backed by experts

Recognised by industry leaders

Setting the standard for LLMOps, we're building what the industry counts on.

Faster Time-to-Market

With Portkey's full-stack LLMOps platform.

Latency

With our extremely lightweight and performant AI Gateway.

Uptime

We maintain strict SLAs to keep your apps running without interruption.

Featured in analyst discussions on emerging AI infrastructure and tooling.

Top-rated on G2 by developers and AI teams building secure, production-grade GenAI applications.

Acknowledged, endorsed, and trusted by leading industry experts and innovators

Build with the best - start with Portkey today

Trusted by Fortune 500s & Startups

Portkey is easy to set up, and the ability for developers to share credentials with LLMs is great. Overall, it has significantly sped up our development process.

Patrick L,

Founder and CPO, QA.tech

With 30 million policies a month, managing over 25 GenAI use cases became a pain. Portkey helped with prompt management, tracking costs per use case, and ensuring our keys were used correctly. It gave us the visibility we needed into our AI operations.

Prateek Jogani,

CTO, Qoala

Portkey stood out among AI Gateways we evaluated for several reasons: excellent, dedicated support even during the proof of concept phase, easy-to-use APIs that reduce time spent adapting code for different models, and detailed observability features that give deep insights into traces, errors, and caching

AI Leader,

Fortune 500 Pharma Company

Portkey is a no-brainer for anyone using AI in their GitHub workflows. It has saved us thousands of dollars by caching tests that don't require reruns, all while maintaining a robust testing and merge platform. This prevents merging PRs that could degrade production performance. Portkey is the best caching solution for our needs.

Kiran Prasad,

Senior ML Engineer, Ario

Well done on creating such an easy-to-use and navigate product. It’s much better than other tools we’ve tried, and we saw immediate value after signing up. Having all LLMs in one place and detailed logs has made a huge difference. The logs give us clear insights into latency and help us identify issues much faster. Whether it's model downtime or unexpected outputs, we can now pinpoint the problem and address it immediately. This level of visibility and efficiency has been a game-changer for our operations.

Oras Al-Kubaisi,

CTO, Figg

Used by ⭐️ 16,000+ developers across the world

Latest guides and resources

The enterprise AI blueprint you need now

The Gen AI wave isn't just approaching—it's already crashed over every industry...

Portkey+MongoDB: The Bridge to Production-Ready...

Use Portkey's AI Gateway with MongoDB to integrate AI and manage data efficiently.

Bring Your Agents to Production with Portkey

Make your agent workflows production-ready by integrating with

The last platform you’ll need in your AI stack

Products

© 2025 Portkey, Inc. All rights reserved

HIPAA

COMPLIANT

GDPR
