Summary

| Area | Key Highlights |
|---|---|
| Platform | • Terraform Provider for Portkey • Configurable request timeouts • Enhanced rate limits for tokens and requests |
| Agents | • OpenAI AgentKit with multi-provider support |
| Guardrails | • Javelin AI Security integration • Add Prefix guardrail • Allowed Request Types guardrail |
| Gateway & Providers | • Claude Haiku 4.5 • Expanded provider updates (OpenAI, Azure, Bedrock, Vertex AI) |
| Observability | • Structured tool-call visibility • Enhanced web search view for Anthropic |

Portkey recognized as a 2025 Gartner® Cool Vendor™ in LLM Observability

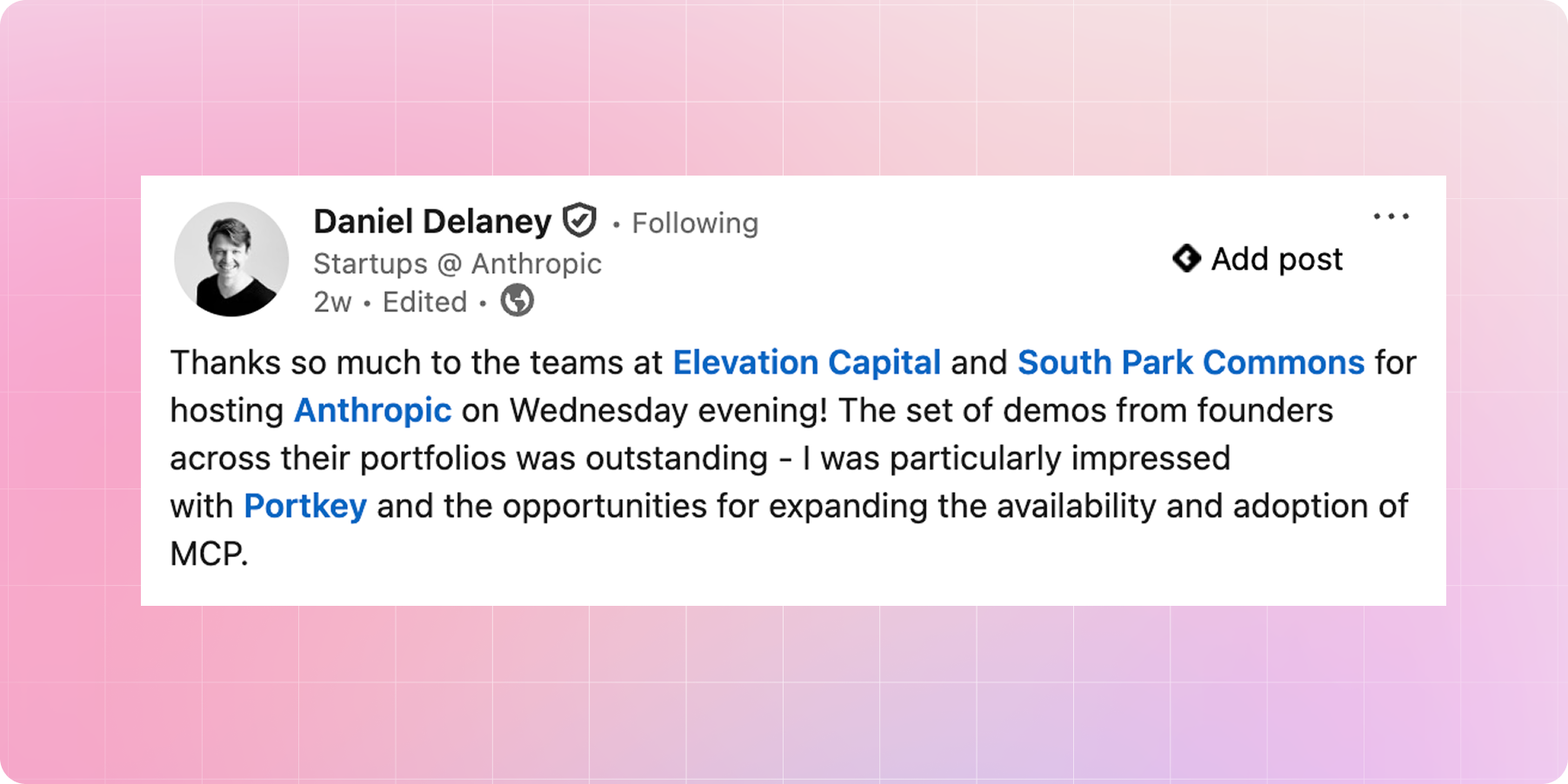

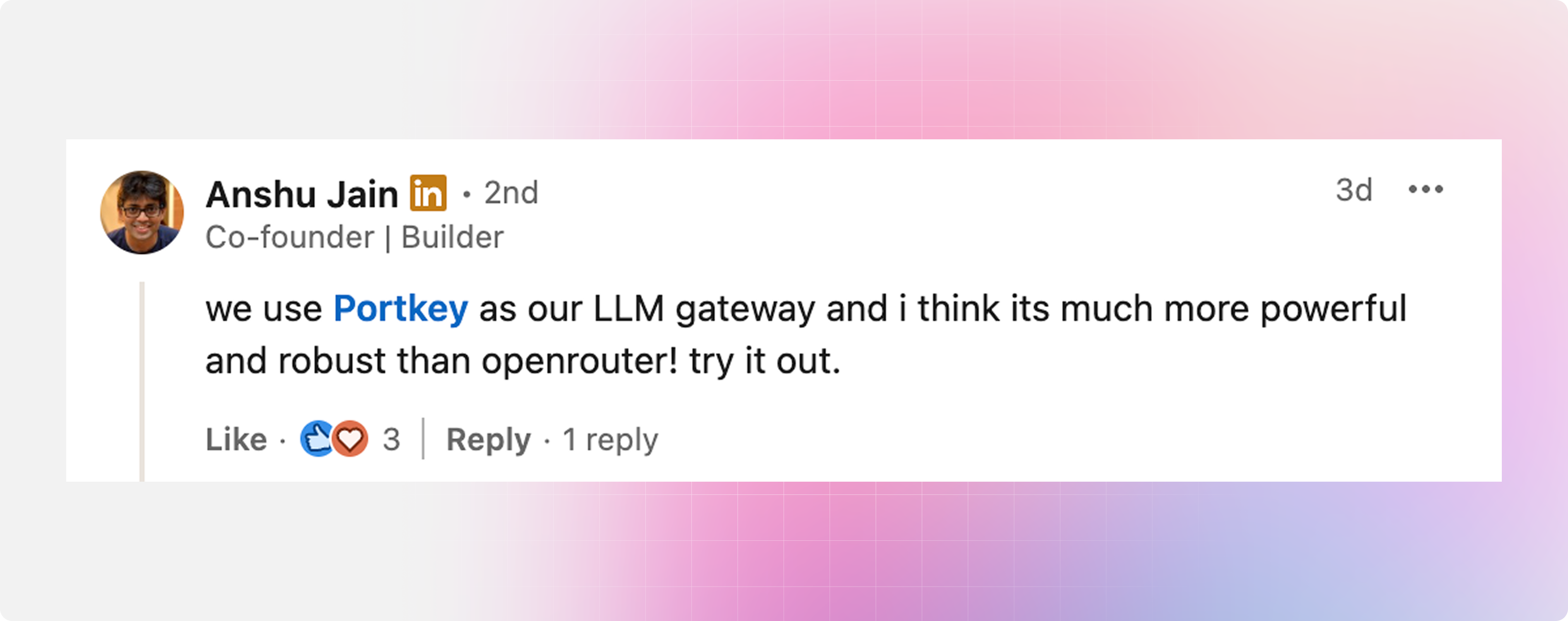

What industry leaders are saying about us!

Platform

Terraform Provider for Portkey

Portkey now has a Terraform provider for managing workspaces, users, and organization resources through the Portkey Admin API. This enables you to manage:

- Workspaces: Create and update workspaces for teams and projects

- Members: Assign users to workspaces with defined roles

- Access: Send user invites with organization and workspace access

- Users: Query and manage existing users in your organization

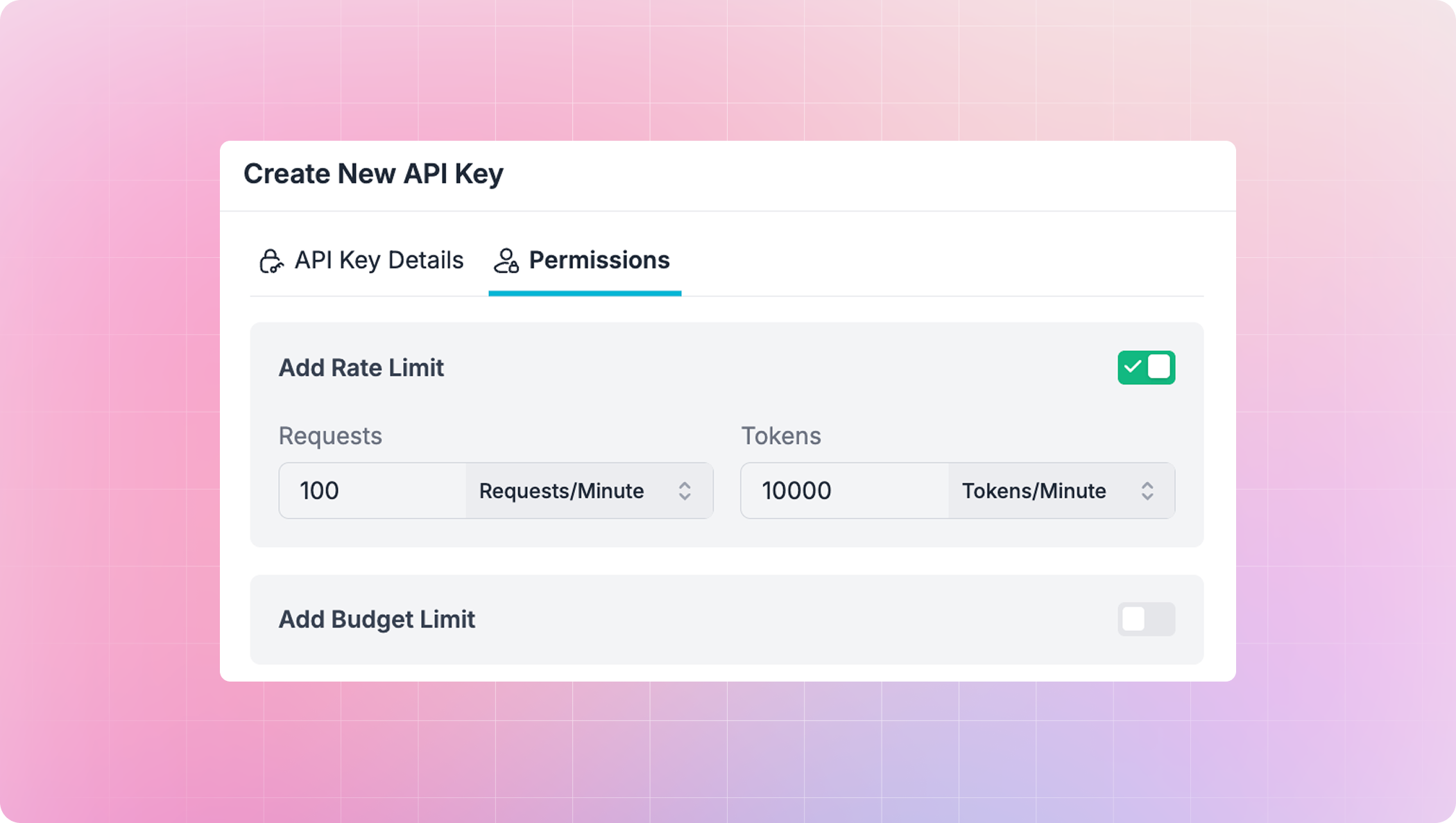

Enhanced rate limits

You can now configure rate limits for both requests and tokens under each API key, giving teams precise control over workload, costs, and performance across large deployments.

- Request-based limits: Cap the number of requests per minute, hour, or day.

- Token-based limits: Cap the number of tokens consumed per minute, hour, or day.
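To make the two limit types concrete, here is a minimal sketch of how request-based and token-based limits could be checked together for a single API key. It assumes a simple fixed-window counter; the text above does not say which algorithm Portkey uses, and the names `RateLimit`, `KeyUsage`, and `allow` are hypothetical, not part of any Portkey API.

```python
from dataclasses import dataclass, field

# Window lengths in seconds for the three periods mentioned above.
WINDOW_SECONDS = {"minute": 60, "hour": 3600, "day": 86400}


@dataclass
class RateLimit:
    kind: str    # "requests" or "tokens"
    limit: int   # maximum count allowed per window
    window: str  # "minute", "hour", or "day"


@dataclass
class KeyUsage:
    """Hypothetical per-API-key usage tracker (fixed-window counters)."""
    limits: list
    counters: dict = field(default_factory=dict)

    def allow(self, now: float, tokens: int) -> bool:
        # First pass: check every limit without recording anything.
        pending = []
        for rl in self.limits:
            bucket = int(now // WINDOW_SECONDS[rl.window])
            key = (rl.kind, rl.window, bucket)
            cost = 1 if rl.kind == "requests" else tokens
            if self.counters.get(key, 0) + cost > rl.limit:
                return False
            pending.append((key, cost))
        # Second pass: the request passed all limits, so record its cost.
        for key, cost in pending:
            self.counters[key] = self.counters.get(key, 0) + cost
        return True


usage = KeyUsage(limits=[
    RateLimit("requests", 2, "minute"),
    RateLimit("tokens", 100, "hour"),
])
print(usage.allow(now=0.0, tokens=40))  # within both limits
print(usage.allow(now=1.0, tokens=40))  # still within both limits
print(usage.allow(now=2.0, tokens=10))  # third request in the same minute
```

A request is rejected as soon as any one limit would be exceeded, which is why the check and the bookkeeping are done in separate passes.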

Configurable request timeouts

A new REQUEST_TIMEOUT environment variable lets you control how long the Gateway waits before timing out outbound LLM requests, helping fine-tune latency, retries, and reliability for large-scale workloads.
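As an illustration, a deployment script might validate the variable before starting the Gateway. The sketch below is hypothetical: it assumes the value is an integer number of milliseconds, which the text above does not state, and `read_request_timeout` is an illustrative helper rather than part of the Gateway.

```python
import os
from typing import Mapping, Optional


def read_request_timeout(env: Mapping[str, str] = os.environ) -> Optional[float]:
    """Return the configured timeout in seconds, or None if unset or invalid.

    Assumption: REQUEST_TIMEOUT holds a positive integer in milliseconds.
    """
    raw = env.get("REQUEST_TIMEOUT", "").strip()
    if not raw:
        return None
    try:
        millis = int(raw)
    except ValueError:
        return None
    return millis / 1000 if millis > 0 else None


# Passing an explicit mapping keeps the example independent of the real environment.
print(read_request_timeout({"REQUEST_TIMEOUT": "30000"}))
print(read_request_timeout({}))
```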

Customer love!

Guardrails

Javelin AI Security integration

You can now configure Javelin AI Security guardrails directly in the Portkey Gateway to evaluate every model interaction for:

- Trust & Safety: Detect and filter harmful or unsafe content

- Prompt Injection Detection: Identify attempts to manipulate model behavior

- Language Detection: Verify and filter language in user or model responses

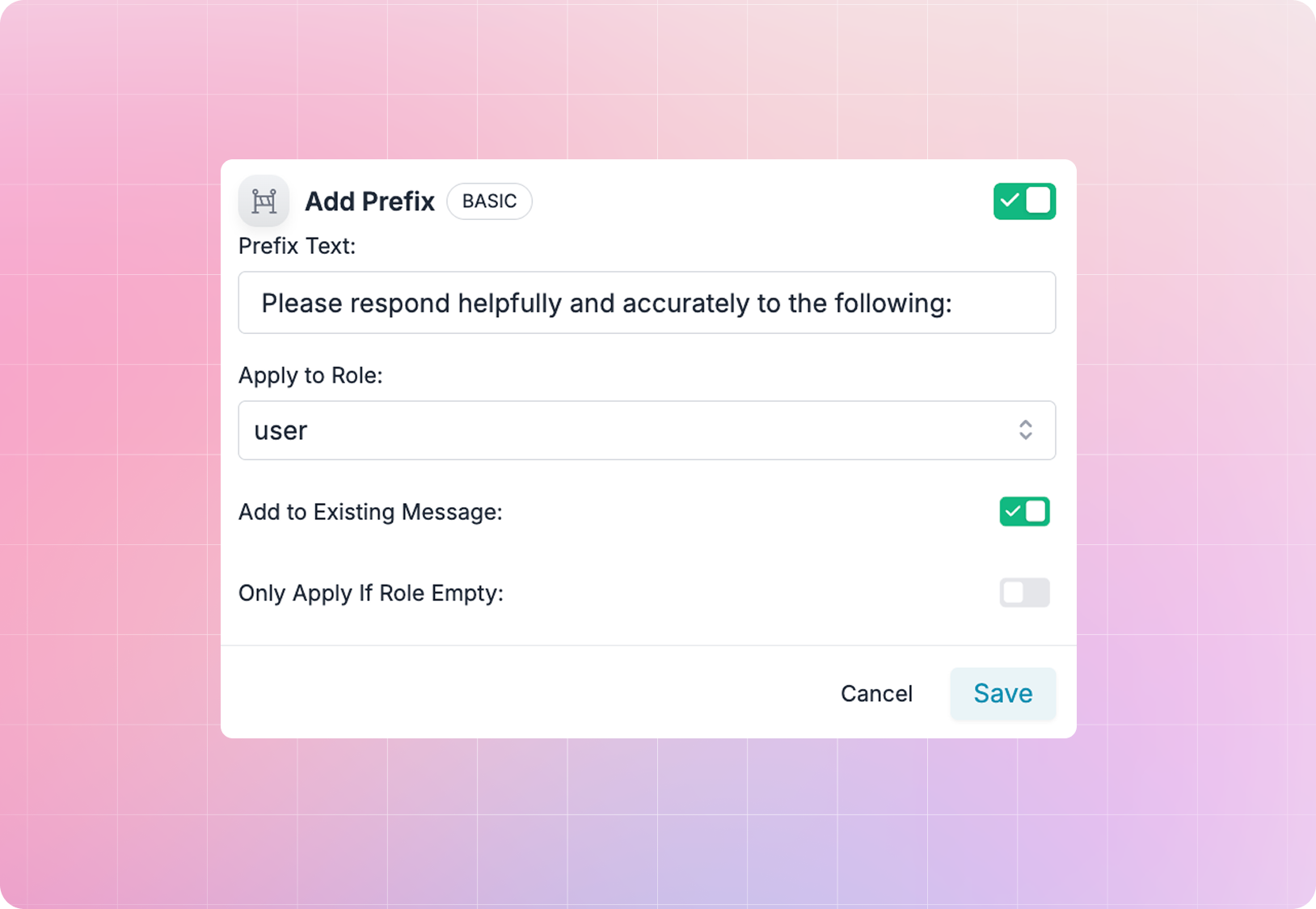

Add Prefix guardrail

The new Add Prefix guardrail lets you automatically prepend a configurable prefix to user inputs before sending them to the model, useful for enforcing consistent tone, context, or compliance instructions across all model interactions.

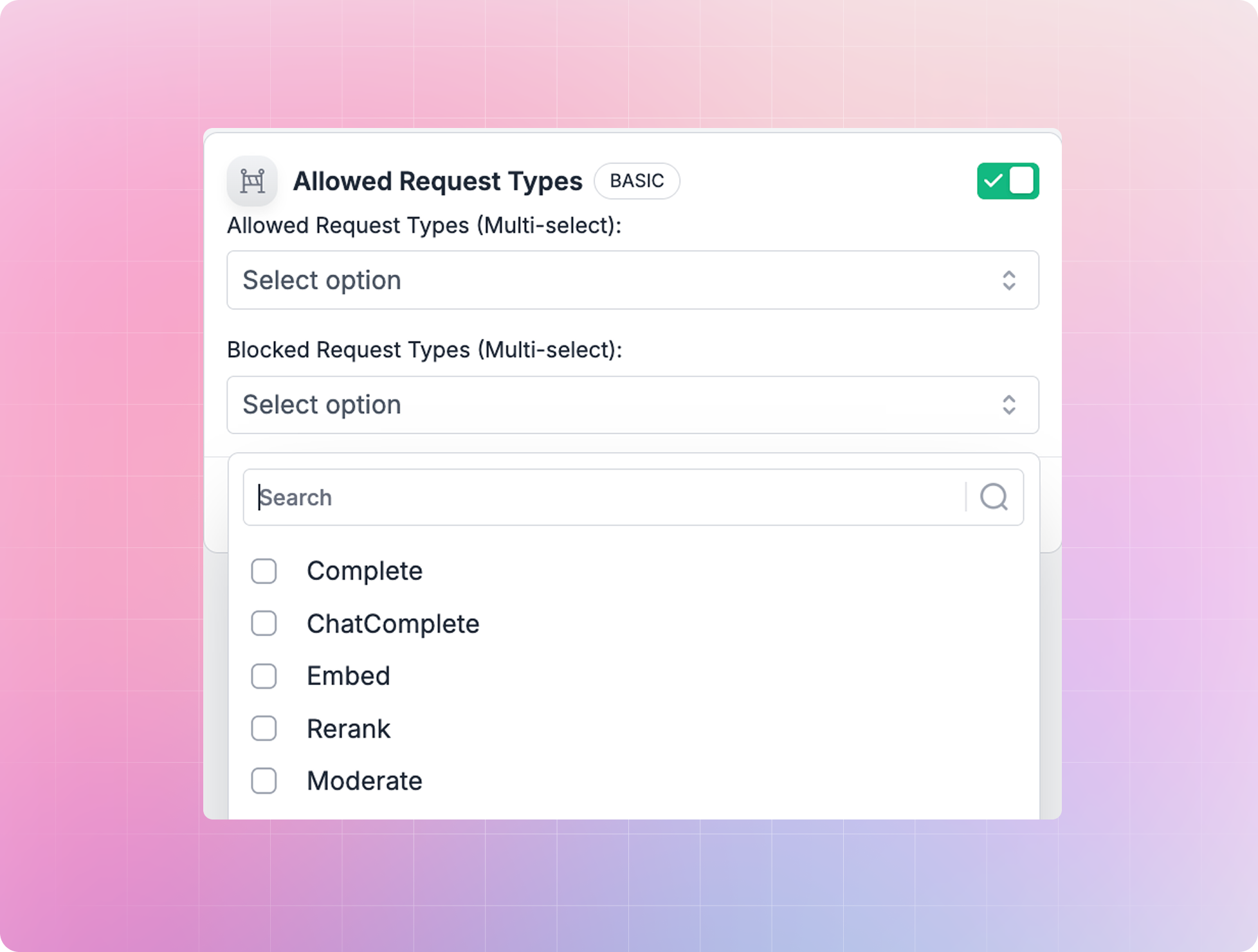
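Conceptually, the Add Prefix behavior can be pictured as a small transformation over the chat messages before they reach the model. The sketch below is not Portkey's implementation: `add_prefix` is a hypothetical helper, the OpenAI-style message list is an assumed input shape, and prefixing every user message (rather than only the latest one) is also an assumption.

```python
from copy import deepcopy


def add_prefix(messages, prefix, role="user"):
    """Return a copy of `messages` with `prefix` prepended to each
    plain-text message of the given role. The input list is not modified."""
    out = deepcopy(messages)
    for msg in out:
        if msg.get("role") == role and isinstance(msg.get("content"), str):
            msg["content"] = prefix + msg["content"]
    return out


messages = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "Summarize our refund policy."},
]
prefixed = add_prefix(messages, "[Answer in formal English] ")
print(prefixed[1]["content"])
```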

Allowed Request Types guardrail

With the Allowed Request Types guardrail, you can now define which request types (endpoints) are permitted through the Gateway. Use an allowlist or blocklist approach to control access at a granular level and restrict unwanted request types.
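The allowlist and blocklist approaches amount to a simple membership check per request. The following is a minimal sketch of that logic, not the guardrail's actual code; `is_request_allowed` and the request-type names used here (`"chat"`, `"embeddings"`, `"images"`) are hypothetical placeholders.

```python
def is_request_allowed(request_type, allowed=None, blocked=None):
    """Decide whether a request type may pass.

    - If the type is in `blocked`, it is always rejected.
    - If `allowed` is given, only types in it are accepted.
    - With neither list configured, everything is accepted.
    """
    if blocked and request_type in blocked:
        return False
    if allowed is not None:
        return request_type in allowed
    return True


# Allowlist: only chat and embeddings requests are permitted.
print(is_request_allowed("chat", allowed={"chat", "embeddings"}))
print(is_request_allowed("images", allowed={"chat", "embeddings"}))

# Blocklist: everything except image requests is permitted.
print(is_request_allowed("images", blocked={"images"}))
```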

Gateway

New models and providers

- Claude Haiku 4.5: Anthropic’s lightweight model for real-time use cases.

- OpenAI & Azure OpenAI: Added support for streaming audio transcription.

- Vertex AI: Added custom-model support for batching and the input-audio parameter.

- AWS Bedrock: Added global profiles, token counting, and batch pass-throughs.

- Azure: Improved Entra caching and image-editing pricing support.

- Anthropic Beta (Vertex AI): Added beta header support for compatibility.

Agents

OpenAI AgentKit with multi-provider support

Building agents is now easier with OpenAI’s AgentKit, and Portkey takes it further. You can now connect your AgentKit agents to 1,600+ LLMs across providers through Portkey, while gaining end-to-end observability, guardrails, and cost tracking. See the integration guide here.

Observability

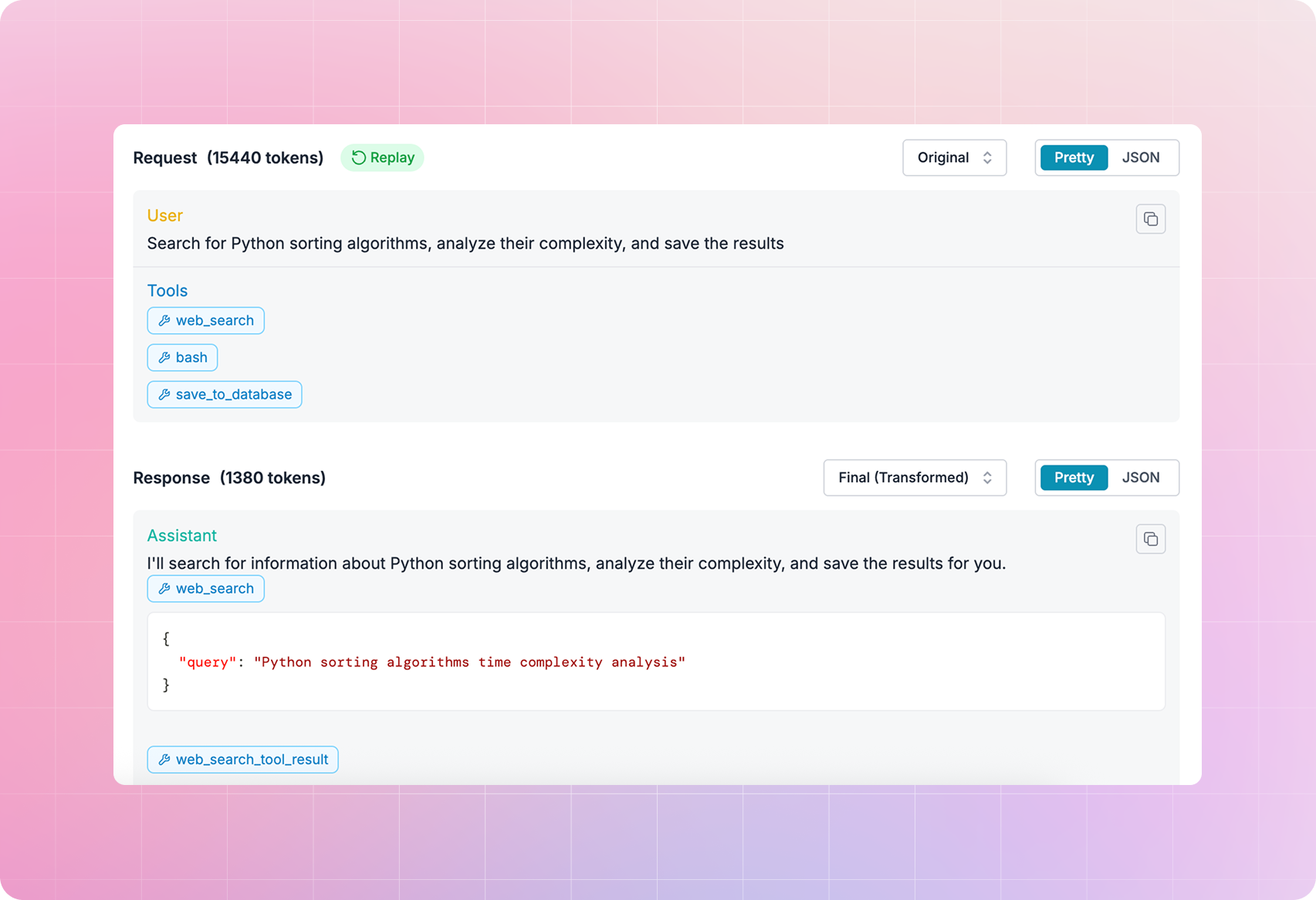

Structured tool call visibility

Tool calls made during model interactions are now automatically detected and displayed as separate, structured entries in the Portkey logs. This makes it easier to trace the tools invoked, inspect parameters, and understand agent behavior across multi-step workflows.

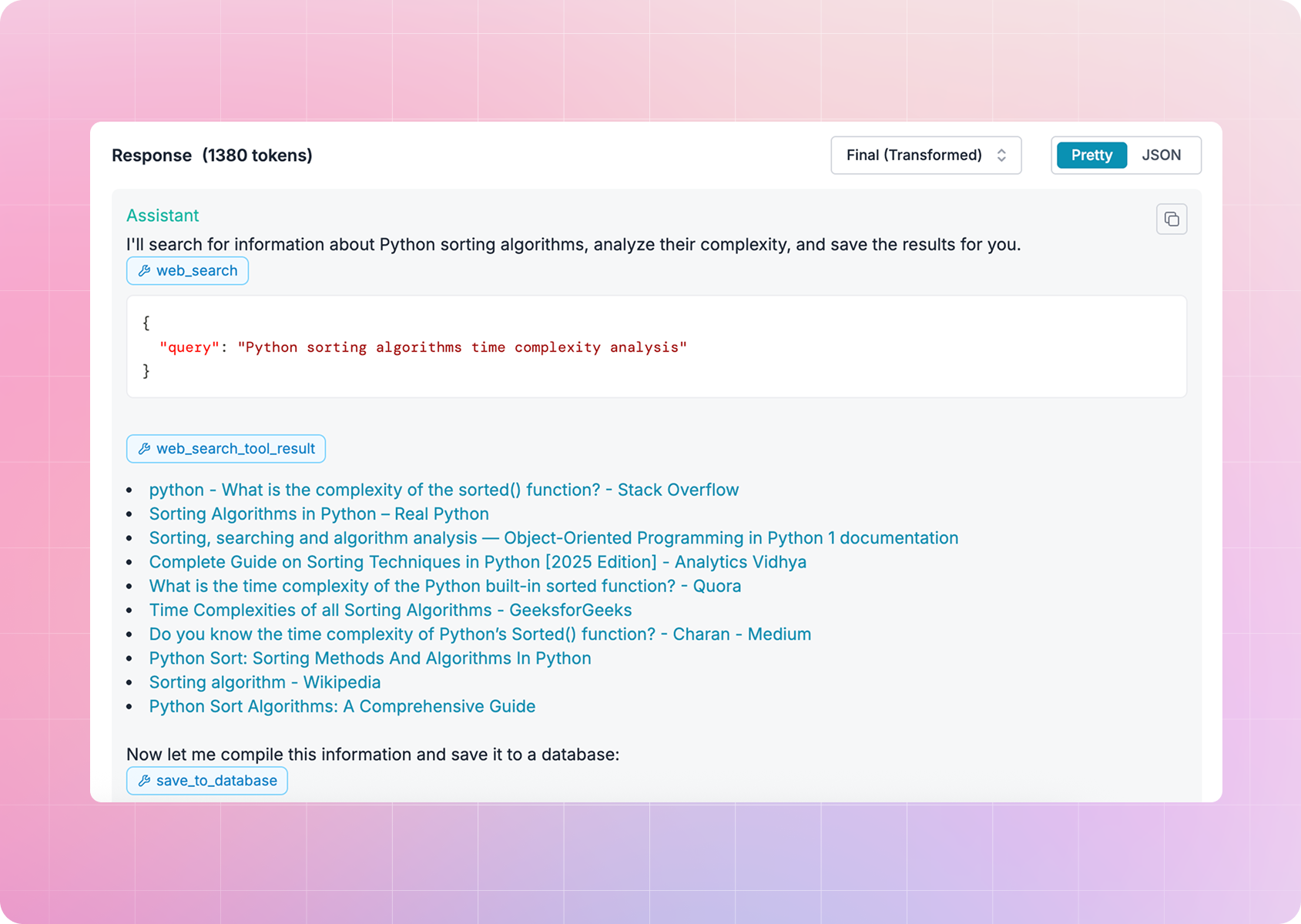
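To show what "separate, structured entries" can look like, the sketch below pulls tool calls out of an OpenAI-style chat completion response and flattens them into one record per call. It only illustrates the idea: `extract_tool_calls` is a hypothetical helper, the sample response is made up, and Portkey's own log schema may differ.

```python
import json


def extract_tool_calls(response):
    """Flatten tool calls from an OpenAI-style chat completion dict
    into a list of {id, tool, arguments} records."""
    entries = []
    for choice in response.get("choices", []):
        message = choice.get("message") or {}
        for call in message.get("tool_calls") or []:
            fn = call.get("function", {})
            try:
                # Arguments arrive as a JSON-encoded string.
                args = json.loads(fn.get("arguments") or "{}")
            except json.JSONDecodeError:
                # Keep unparseable arguments so nothing is lost from the record.
                args = {"_raw": fn.get("arguments")}
            entries.append({
                "id": call.get("id"),
                "tool": fn.get("name"),
                "arguments": args,
            })
    return entries


sample = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {"name": "get_weather", "arguments": "{\"city\": \"Pune\"}"},
            }],
        }
    }]
}
for entry in extract_tool_calls(sample):
    print(entry["tool"], entry["arguments"])
```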

Enhanced view for web search

Web search results from Anthropic models now appear in a clearer, formatted view within the dashboard. This update improves readability and makes it easy to see what information the model used to generate its response, enabling faster analysis and better transparency.

Customer Stories: From arm pain to AI gateway

After an unexpected setback forced Rahul Bansal to rethink how he worked, he built Dictation Daddy, an AI-powered dictation platform now used by professionals, doctors, and lawyers to write faster and with greater accuracy, powered by Portkey. Read the full story here.

Community & Events

MCP Gateway for Higher Education

We’re teaming up with Internet2 to host a session on how higher education institutions can implement the Model Context Protocol (MCP) securely and at scale.

Join us to learn how MCP fits within existing campus IT frameworks, what governance models are emerging, and the practical steps universities can take to enable secure, compliant AI access across departments.

LibreChat in Production

Resources

- Blog: Observability is now a business function for AI

- Blog: Using OpenAI AgentKit with Anthropic, Gemini and other providers

- Blog: What we think of the OpenTelemetry semantic conventions for GenAI traces

- Blog: Comparing lean LLMs: GPT-5 Nano and Claude Haiku 4.5